Paper:

Wearable Hand Pose Estimation for Remote Control of a Robot on the Moon

Sota Sugimura and Kiyoshi Hoshino

University of Tsukuba

1-1-1 Tennodai, Tsukuba 305-8573, Japan

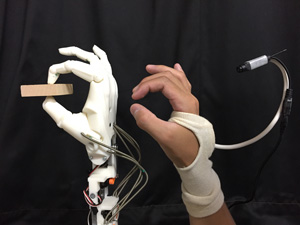

In recent years, a plan has been developed to investigate an unknown environment by remotely controlling an exploration robot system sent to the Moon. To perform sophisticated tasks successfully, such a robot must have multiple degrees of freedom not only in its arms and legs but also in its hands and fingers. Moreover, the robot should be humanoid so that it can use the tools used by astronauts without modification. Meanwhile, to let operators manipulate a robot with many degrees of freedom without first learning special manipulation skills, and to minimize their psychological burden, the ideal approach is to use the operators’ everyday movements as input to the robot rather than a special controller. In this paper, the authors propose a compact wearable device that estimates the subject’s hand pose (hand motion capture). The device has a miniature wireless RGB camera placed on the back of the user’s hand rather than on the palm. Attaching the small camera to the back of the hand may minimize the restraint on the subject’s motions during motion capture. In conventional techniques, the camera is attached to the palm because it must always capture the fingertips before it can capture any other part of the hand. In contrast, the image processing algorithm proposed herein can estimate the hand pose without first capturing the fingertips.

Robot control by wearable hand pose estimation

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.