Paper:

3D Measurement of Large Structure by Multiple Cameras and a Ring Laser

Hiroshi Higuchi*, Hiromitsu Fujii**, Atsushi Taniguchi***, Masahiro Watanabe***, Atsushi Yamashita*, and Hajime Asama*

*The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

**Chiba Institute of Technology

2-17-1 Tsudanuma, Narashino-shi, Chiba 275-0016, Japan

***Hitachi, Ltd.

292 Yoshida-cho, Totsuka-ku, Yokohama-shi, Kanagawa 244-0817, Japan

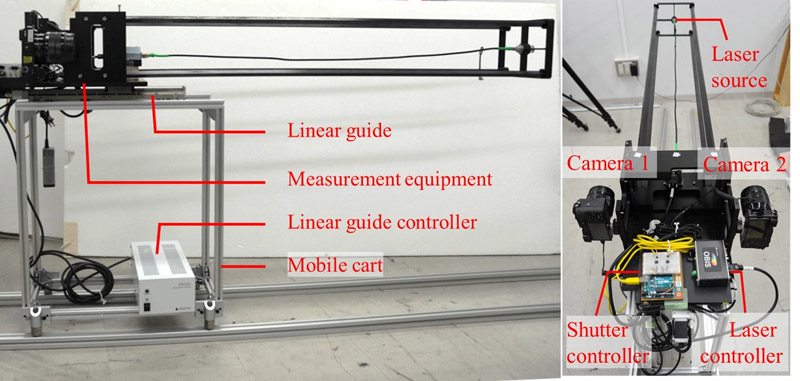

This paper presents a mobile three-dimensional (3D) measurement system that can measure large structures such as railway vehicles, elevators, and escalators from the inside. In the proposed method, images are acquired while moving measurement equipment that consists of a ring laser and two cameras. From the acquired images, each camera yields accurate cross-sectional shapes via a light-section method, and these shapes are integrated into a unified coordinate system using pose estimation based on bundle adjustment. We focus on how to separately extract, from the same acquired images, the information required by the two processes (the light-section method and pose estimation). The laser areas used for the light-section method are detected by a bandpass color filter. Further, a new block matching technique is introduced to eliminate the influence of the laser light, which otherwise causes incorrect detection of corresponding points. Through an experiment, we confirm the validity of the proposed 3D measurement method.
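As an illustration of the light-section step mentioned in the abstract, the following minimal sketch triangulates one detected laser pixel by intersecting its viewing ray with the laser plane. It assumes an ideal pinhole camera and a laser plane already calibrated in camera coordinates as `normal · X = offset`; the camera model, the calibration procedure, and all function and parameter names here are hypothetical and are not taken from the paper.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3-vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pixel_to_ray(u: float, v: float,
                 fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Back-project a pixel to a viewing ray (assumed pinhole model, z = 1)."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def intersect_laser_plane(ray: Vec3, normal: Vec3, offset: float) -> Vec3:
    """Return the 3D point where the ray t * ray meets the plane normal . X = offset."""
    denom = dot(normal, ray)
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the laser plane")
    t = offset / denom
    if t <= 0.0:
        raise ValueError("intersection lies behind the camera")
    return (t * ray[0], t * ray[1], t * ray[2])


if __name__ == "__main__":
    # Hypothetical intrinsics and laser plane, chosen only for illustration.
    ray = pixel_to_ray(420.0, 240.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    point = intersect_laser_plane(ray, normal=(0.0, 0.0, 1.0), offset=2.0)
    print(point)
```

Applying this to every laser pixel in one image gives one cross-sectional profile in that camera's coordinate frame; placing the profiles in a common frame would additionally need the per-frame camera poses, which the abstract says are estimated with bundle adjustment.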

3D measurement system for a large target

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
