Paper:

Verification of Acoustic-Wave-Oriented Simple State Estimation and Application to Swarm Navigation

Tomoha Kida, Yuichiro Sueoka, Hiro Shigeyoshi, Yusuke Tsunoda, Yasuhiro Sugimoto, and Koichi Osuka

Osaka University

2-1 Yamadaoka, Suita, Osaka 565-0871, Japan

Cooperative swarming behavior of multiple robots is advantageous for various disaster response activities, such as search and rescue. This study proposes a method of exchanging information between swarm robots, in particular for estimating the relative direction and orientation of each robot, to realize decentralized group behavior. Unlike conventional camera-based systems, we developed robots equipped with a speaker array and a microphone system that exploit the time difference of arrival (TDoA). The sound waves output by each robot were used to estimate its relative direction and orientation. In addition, our experiments exploit two characteristics of sound waves, namely diffraction and superposition. This paper also investigates the accuracy of state estimation when the robots output sounds simultaneously and when they are not visible to each other. Finally, we applied our method to the behavioral control of a swarm of five robots and demonstrated that the leader robot and follower robots exhibit good alignment behavior. Our method is useful in scenarios with steps or obstacles, where camera-based systems become unusable because they require the robots to be visible to one another in order to collect or share information. Furthermore, camera-based systems require expensive devices and high-speed image processing. Moreover, our method is applicable to the behavioral control of swarm robots in water.
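To make the TDoA idea concrete, the sketch below estimates the delay between two microphone channels and converts it into a bearing. It is a minimal illustration under stated assumptions, not the authors' estimator: it assumes a two-microphone pair with known spacing, a far-field source (so the path difference is d·sin θ), a speed of sound of 343 m/s in air, and uses plain brute-force cross-correlation as a stand-in for whatever correlation method the robots actually use. The function names `estimate_tdoa` and `bearing_from_tdoa` are hypothetical.

```python
import math

# Assumed speed of sound in air at about 20 °C; in water it is roughly 1500 m/s.
SPEED_OF_SOUND_AIR = 343.0  # m/s


def estimate_tdoa(sig_a, sig_b, fs, max_lag):
    """Hypothetical helper: delay of sig_b relative to sig_a, in seconds.

    Brute-force cross-correlation over lags in [-max_lag, max_lag].
    A positive result means the sound reached microphone B later than A.
    """
    best_lag, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, a in enumerate(sig_a):
            j = i + lag
            if 0 <= j < len(sig_b):
                score += a * sig_b[j]
        if score > best_score:
            best_score, best_lag = score, lag
    return best_lag / fs


def bearing_from_tdoa(tdoa, mic_spacing, c=SPEED_OF_SOUND_AIR):
    """Hypothetical helper: far-field bearing in radians from the pair's broadside.

    Uses d * sin(theta) = c * tdoa; positive angles point toward microphone A,
    which hears the sound first when tdoa > 0.
    """
    s = c * tdoa / mic_spacing
    s = max(-1.0, min(1.0, s))  # clamp measurement noise into asin's domain
    return math.asin(s)
```

For example, with microphones 0.1 m apart sampled at 48 kHz, the physically possible delay is at most 0.1 / 343 s, or about 14 samples, so `max_lag=14` bounds the search. A 5-sample delay then corresponds to a bearing of roughly 21 degrees. Restricting the lag range to this physical bound both speeds up the search and rejects spurious correlation peaks.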

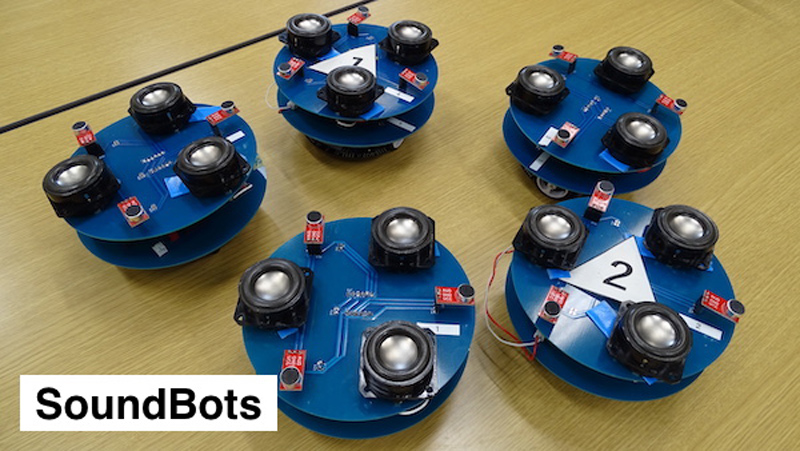

SoundBots: acoustic-aided swarm robots


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
