Paper:

Vision-Based Finger Tapping Detection Without Fingertip Observation

Shotaro Narita, Shingo Kagami, and Koichi Hashimoto

Graduate School of Information Sciences, Tohoku University

6-6-01 Aramaki Aza Aoba, Aoba-ku, Sendai 980-8579, Japan

This study investigates a machine learning approach for detecting a finger tap on a handheld surface when the movement of the surface is observed visually but the tapping finger itself is not directly visible. A feature vector is extracted from consecutive frames of a surface patch captured by a high-speed camera and is input to a convolutional neural network, which outputs a prediction label indicating whether the surface is tapped within the frame sequence (“tap”), is still (“still”), or is moved by hand (“move”). Receiver operating characteristic analysis of the binary discrimination of “tap” from the other two labels shows that true positive rates exceeding 97% are achieved when the false positive rate is fixed at 3%; however, generalization to different tapped objects or different ways of tapping is not satisfactory. An informal test with a heuristic post-processing filter suggests that temporal history information should be considered for further improvement.
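The evaluation criterion in the abstract, a true positive rate measured at a fixed false positive rate for “tap” against the other two labels, can be illustrated with a minimal sketch. This is not the authors' evaluation code. The function name `tpr_at_fixed_fpr`, the use of a single per-sequence “tap” score, and the toy data are all assumptions made for illustration.

```python
import numpy as np

def tpr_at_fixed_fpr(tap_scores, labels, target_fpr=0.03):
    """Hypothetical helper: TPR of "tap" vs. {"still", "move"} at a fixed FPR.

    tap_scores: per-sequence score for the "tap" class (assumed to come
                from the classifier; higher means more tap-like).
    labels:     ground-truth labels, each "tap", "still", or "move".
    """
    scores = np.asarray(tap_scores, dtype=float)
    is_tap = np.asarray(labels) == "tap"   # collapse three labels to binary

    # Negative-class ("still" or "move") scores in descending order.
    neg = np.sort(scores[~is_tap])[::-1]

    # Largest number of false positives allowed by the target FPR.
    k = int(np.floor(target_fpr * neg.size + 1e-9))

    # Predict "tap" only when the score is strictly above the (k+1)-th
    # largest negative score, so at most k negatives are accepted.
    threshold = neg[k] if k < neg.size else -np.inf
    predicted_tap = scores > threshold

    tpr = predicted_tap[is_tap].mean()
    fpr = predicted_tap[~is_tap].mean()
    return tpr, fpr, threshold

# Toy data (made up, not from the paper): 100 negatives and 4 taps.
labels = ["still"] * 50 + ["move"] * 50 + ["tap"] * 4
scores = list(np.arange(100) / 100) + [0.5, 0.965, 0.98, 1.0]
tpr, fpr, threshold = tpr_at_fixed_fpr(scores, labels, target_fpr=0.03)
print(f"threshold={threshold:.3f}  FPR={fpr:.3f}  TPR={tpr:.3f}")
```

Choosing the threshold from the negative scores alone keeps the false positive rate at or below the target even when scores are tied, which matches the idea of fixing the false positive rate first and then reading off the true positive rate.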

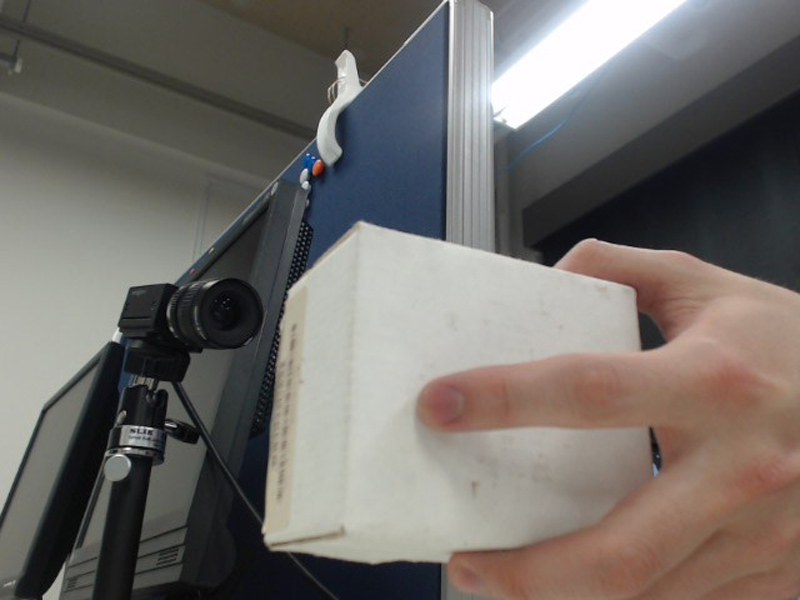

Back face of a box, invisible to the camera, tapped by a finger


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
