Abstract

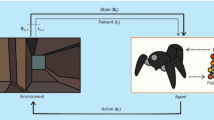

When Q-learning is used for path planning of a mobile agent, convergence is slow. To address this problem, this paper proposes two hybrid algorithms based on Q-learning. The first combines Manhattan distance with Q-learning (CMD-QL); the second combines the flower pollination algorithm with Q-learning (CFPA-QL). In CMD-QL, the Q table is first initialized using Manhattan distance to improve learning efficiency in the initial stage of Q-learning; the ε-greedy action selection strategy is then improved to balance exploration and exploitation in the mobile agent's actions. In CFPA-QL, the flower pollination algorithm is first used to initialize the Q table, giving Q-learning the prior information it needs to improve overall learning efficiency; the ε-greedy strategy is then applied with the exploration factor held at its minimum value, so that high-value actions are exploited effectively. Both algorithms were tested in known, partially known, and unknown environments. The results show that CMD-QL and CFPA-QL both converge to the optimal path faster than Q-learning alone, and that CFPA-QL is the more efficient of the two.
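The abstract does not give the initialization formula or the modified ε-greedy rule, so the following is only a minimal sketch of the general idea behind CMD-QL, not the authors' method. It assumes a 4-connected grid, sets each initial Q value to the negative Manhattan distance from the successor cell to the goal, and uses an exponentially decaying exploration factor with a floor. The names `init_q_table`, `epsilon_greedy`, and `decayed_epsilon`, and all parameter values, are hypothetical.

```python
import random

# Assumed action set for a 4-connected grid: up, down, left, right.
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def manhattan(a, b):
    """Manhattan distance between two grid cells given as (row, col)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def init_q_table(rows, cols, goal, scale=1.0):
    """Build a Q table keyed by ((row, col), action_index).

    Assumption: Q(s, a) starts at -scale * (Manhattan distance from the
    cell reached by a to the goal), so moves toward the goal start higher.
    Moves that would leave the grid are clamped to the border.
    """
    q = {}
    for r in range(rows):
        for c in range(cols):
            for i, (dr, dc) in enumerate(ACTIONS):
                nr = min(max(r + dr, 0), rows - 1)
                nc = min(max(c + dc, 0), cols - 1)
                q[(r, c), i] = -scale * manhattan((nr, nc), goal)
    return q


def epsilon_greedy(q, state, epsilon, rng=random):
    """Explore with probability epsilon, otherwise take the best-valued action."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    values = [q[state, i] for i in range(len(ACTIONS))]
    return values.index(max(values))


def decayed_epsilon(episode, eps_start=0.9, eps_min=0.05, decay=0.99):
    """Assumed schedule: exponential decay of epsilon, floored at eps_min."""
    return max(eps_min, eps_start * decay ** episode)
```

Under these assumptions the agent already prefers goal-directed moves in the first episode, which is the kind of early-stage speed-up the abstract attributes to the Manhattan-distance initialization. Holding `epsilon` at `eps_min` from the start would correspond loosely to the fixed minimum exploration factor described for CFPA-QL.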

Funding

This work was supported by the National Natural Science Foundation (NNSF) of China (grant nos. 61473179, 61973184, and 61573213); the Natural Science Foundation of Shandong Province, China (grant no. ZR2019MF024); and the SDUT and Zibo City Integration Development Project, China (grant no. 2018ZBXC295).

About this article

Cite this article

Tengteng Gao, Li, C., Liu, G. et al. Hybrid Path Planning Algorithm of the Mobile Agent Based on Q-Learning. Aut. Control Comp. Sci. 56, 130–142 (2022). https://doi.org/10.3103/S0146411622020043

DOI: https://doi.org/10.3103/S0146411622020043