Abstract

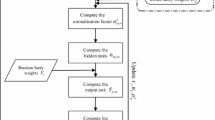

Lowe [1] proposed that the kernel parameters of a radial basis function (RBF) neural network can be fixed first, after which the output-layer weights are determined by pseudo-inverse. Jang, Sun, and Mizutani ([2], p. 342) pointed out that this type of two-step training method can also be used in fuzzy neural networks (FNNs). Through extensive computer simulations, we demonstrated in [3] that an FNN with randomly fixed membership function parameters (FNN-RM) trains faster and generalizes better than the classical FNN. To provide a theoretical basis for the FNN-RM, this paper presents an intuitive proof of its universal approximation ability, based on the orthogonal set theory proposed by Kaminski and Strumillo for RBF neural networks [4].
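The two-step training idea described above can be illustrated with a minimal sketch. It is not the FNN-RM of [3]; it assumes a one-dimensional input, Gaussian basis functions, and a sine target, and all function names, parameter ranges, and sizes are hypothetical choices made only for illustration. Step 1 draws the centers and widths at random and keeps them fixed; step 2 solves for the output weights with the pseudo-inverse.

```python
import numpy as np


def gaussian_features(x, centers, widths):
    """Return Gaussian activations with shape (n_samples, n_units)."""
    diff = x[:, None] - centers[None, :]
    return np.exp(-(diff ** 2) / (2.0 * widths[None, :] ** 2))


def fit_output_weights(x, y, centers, widths):
    """Step 2: with kernel parameters fixed, solve the linear
    least-squares problem for the output weights by pseudo-inverse."""
    phi = gaussian_features(x, centers, widths)
    return np.linalg.pinv(phi) @ y


rng = np.random.default_rng(0)

# Illustrative training data (an assumption, not taken from the paper).
x_train = np.linspace(0.0, 1.0, 200)
y_train = np.sin(2.0 * np.pi * x_train)

# Step 1: draw the kernel parameters at random and keep them fixed.
n_units = 20
centers = rng.uniform(0.0, 1.0, size=n_units)
widths = rng.uniform(0.05, 0.3, size=n_units)

# Step 2: only the output weights are fitted.
weights = fit_output_weights(x_train, y_train, centers, widths)

y_hat = gaussian_features(x_train, centers, widths) @ weights
train_rmse = float(np.sqrt(np.mean((y_hat - y_train) ** 2)))
baseline_rmse = float(np.sqrt(np.mean(y_train ** 2)))  # all-zero weights
print(f"train RMSE: {train_rmse:.3e} (zero-weight baseline: {baseline_rmse:.3f})")
```

Because the second step is a linear least-squares problem, no iterative optimization of the kernel parameters is needed, which is the source of the training-speed advantage the abstract refers to.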

References

Lowe, D.: Adaptive Radial Basis Function Nonlinearities, and the Problem of Generalisation. In: Proc. First IEE International Conference on Artificial Neural Networks, pp. 29–33 (1989)

Jang, J.S.R., Sun, C.T., Mizutani, E.: Neuro-fuzzy and Soft Computing. Prentice Hall International Inc., Englewood Cliffs (1997)

Wang, L., Liu, B., Wan, C.R.: A Novel Fuzzy Neural Network with Fast Training and Accurate Generalization. In: International Symposium Neural Networks, pp. 270–275 (2004)

Kaminski, W., Strumillo, P.: Kernel Orthogonalization in Radial Basis Function Neural Networks. IEEE Trans. Neural Networks 8, 1177–1183 (1997)

Frayman, Y., Wang, L.: Torsional Vibration Control of Tandem Cold Rolling Mill Spindles: a Fuzzy Neural Approach. In: Proc. the Australia-Pacific Forum on Intelligent Processing and Manufacturing of Materials, vol. 1, pp. 89–94 (1997)

Frayman, Y., Wang, L.: A Fuzzy Neural Approach to Speed Control of an Elastic Two-mass System. In: Proc. 1997 International Conference on Computational Intelligence and Multimedia Applications, pp. 341–345 (1997)

Frayman, Y., Wang, L.: Data Mining Using Dynamically Constructed Recurrent Fuzzy Neural Networks. In: Wu, X., Kotagiri, R., Korb, K.B. (eds.) PAKDD 1998. LNCS, vol. 1394, pp. 122–131. Springer, Heidelberg (1998)

Frayman, Y., Ting, K.M., Wang, L.: A Fuzzy Neural Network for Data Mining: Dealing with the Problem of Small Disjuncts. In: Proc. 1999 International Joint Conference on Neural Networks, vol. 4, pp. 2490–2493 (1999)

Wang, L., Frayman, Y.: A Dynamically-generated Fuzzy Neural Network and Its Application to Torsional Vibration Control of Tandem Cold Rolling Mill Spindles. Engineering Applications of Artificial Intelligence 15, 541–550 (2003)

Frayman, Y., Wang, L., Wan, C.R.: Cold Rolling Mill Thickness Control Using the Cascade-correlation Neural Network. Control and Cybernetics 31, 327–342 (2002)

Frayman, Y., Wang, L.: A Dynamically-constructed Fuzzy Neural Controller for Direct Model Reference Adaptive Control of Multi-input-multi-output Nonlinear Processes. Soft Computing 6, 244–253 (2002)

Jang, J.S.R.: ANFIS: Adaptive-network-based Fuzzy Inference System. IEEE Trans. Syst., Man, Cybern. 23, 665–685 (1993)

Shah, S., Palmieri, F., Datum, M.: Optimal Filtering Algorithms for Fast Learning in Feedforward Neural Networks. Neural Networks 5, 779–787 (1992)

Korner, T.W.: Fourier Analysis. Cambridge University Press, Cambridge (1988)

Wang, L., Fu, X.J.: Data Mining with Computational Intelligence. Springer, Berlin (2005)

Fu, X.J., Wang, L.: Data Dimensionality Reduction with Application to Simplifying RBF Network Structure and Improving Classification Performance. IEEE Trans. Syst., Man, Cybern. B 33, 399–409 (2003)

Fu, X.J., Wang, L.: Linguistic Rule Extraction from a Simplified RBF Neural Network. Computational Statistics 16, 361–372 (2001)

Fu, X.J., Wang, L., Chua, K.S., Chu, F.: Training RBF Neural Networks on Unbalanced Data. In: Wang, L., et al. (eds.) Proc. 9th International Conference on Neural Information Processing (ICONIP 2002), vol. 2, pp. 1016–1020 (2002)

Kwok, T.Y., Yeung, D.Y.: Objective Functions for Training New Hidden Units in Constructive Neural Networks. IEEE Trans. Neural Networks 8, 1131–1148 (1997)

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Wang, L., Liu, B., Wan, C. (2005). On the Universal Approximation Theorem of Fuzzy Neural Networks with Random Membership Function Parameters. In: Wang, J., Liao, X., Yi, Z. (eds) Advances in Neural Networks – ISNN 2005. ISNN 2005. Lecture Notes in Computer Science, vol 3496. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11427391_6

DOI: https://doi.org/10.1007/11427391_6

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-25912-1

Online ISBN: 978-3-540-32065-4

eBook Packages: Computer Science, Computer Science (R0)