Abstract

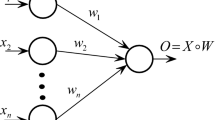

In this paper, we present a new training algorithm for a fuzzy perceptron. When the input vectors are two-dimensional and the training examples are separable, we prove finite convergence, i.e., the training procedure for the network weights stops after a finite number of steps. When the dimension is greater than two, stronger conditions are needed to guarantee finite convergence.
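For orientation only, the following sketch shows what finite convergence means for the classical (crisp) perceptron rule on linearly separable two-dimensional data: training stops as soon as one full pass over the examples produces no misclassification. It is not the fuzzy training algorithm of this paper, whose update rule the abstract does not state; the function name `train_perceptron`, the `max_epochs` safeguard, and the toy data are illustrative assumptions.

```python
import numpy as np


def train_perceptron(X, y, max_epochs=1000):
    """Classical perceptron rule (NOT the paper's fuzzy algorithm).

    X: (n, d) array of inputs; y: labels in {-1, +1}.
    Returns (w, b, updates, converged).
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    updates = 0
    for _ in range(max_epochs):  # cap is a safeguard for non-separable data
        errors = 0
        for xi, yi in zip(X, y):
            if yi * (w @ xi + b) <= 0:  # misclassified or on the boundary
                w += yi * xi
                b += yi
                updates += 1
                errors += 1
        if errors == 0:  # a full error-free pass: the procedure stops
            return w, b, updates, True
    return w, b, updates, False


# Hypothetical linearly separable 2-D training examples.
X = np.array([[2.0, 1.0], [1.5, 2.0], [3.0, 2.5],
              [-1.0, -1.5], [-2.0, -0.5], [-1.5, -2.5]])
y = np.array([1, 1, 1, -1, -1, -1])

w, b, updates, converged = train_perceptron(X, y)
print(converged, updates, w, b)
```

For separable data, the classical perceptron convergence theorem bounds the number of weight updates, so the `max_epochs` cap only comes into play when the examples are not separable.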

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Yang, J., Wu, W., Shao, Z. (2005). A New Training Algorithm for a Fuzzy Perceptron and Its Convergence. In: Wang, J., Liao, X., Yi, Z. (eds) Advances in Neural Networks – ISNN 2005. ISNN 2005. Lecture Notes in Computer Science, vol 3496. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11427391_97

DOI: https://doi.org/10.1007/11427391_97

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-25912-1

Online ISBN: 978-3-540-32065-4

eBook Packages: Computer Science, Computer Science (R0)