Abstract

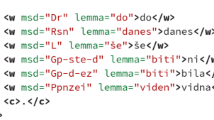

In this paper, a hybrid language model that combines a word-based n-gram model and a category-based Stochastic Context-Free Grammar (SCFG) is evaluated on training data sets of increasing size. Different estimation algorithms for learning SCFGs in General Format and in Chomsky Normal Form are considered. Experiments on the UPenn Treebank corpus are reported. The models are compared in terms of test set perplexity and of word error rate in a speech recognition experiment.
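As an illustration of the two evaluation measures named above, the following is a minimal sketch and not the authors' implementation. The abstract does not state how the n-gram and SCFG probabilities are combined, so linear interpolation with a weight `alpha` is assumed here. The function names `interpolate`, `perplexity`, and `word_error_rate` are hypothetical, and the per-word probabilities are taken as given inputs rather than computed from a trained model.

```python
import math


def interpolate(p_ngram, p_scfg, alpha):
    """Combine two conditional word probabilities.

    ASSUMPTION: linear interpolation; the abstract does not specify
    the combination scheme used by the hybrid model.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * p_ngram + (1.0 - alpha) * p_scfg


def perplexity(word_probs):
    """Test set perplexity: exp of the negative mean log-probability per word."""
    if not word_probs:
        raise ValueError("need at least one word probability")
    log_sum = sum(math.log(p) for p in word_probs)
    return math.exp(-log_sum / len(word_probs))


def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)
```

Under these assumptions, each test word's interpolated probability would be passed to `perplexity`, while `word_error_rate` compares a recognizer's output transcript against the reference transcript.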

This work has been partially supported by the Spanish MCyT under contract TIC2002/04103-C03-03 and by Agencia Valenciana de Ciencia y Tecnología under contract GRUPOS03/031.

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Sánchez, J.A., Benedí, J.M., Linares, D. (2005). Performance of a SCFG-Based Language Model with Training Data Sets of Increasing Size. In: Marques, J.S., Pérez de la Blanca, N., Pina, P. (eds) Pattern Recognition and Image Analysis. IbPRIA 2005. Lecture Notes in Computer Science, vol 3523. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11492542_72

DOI: https://doi.org/10.1007/11492542_72

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-26154-4

Online ISBN: 978-3-540-32238-2

eBook Packages: Computer Science, Computer Science (R0)