Abstract

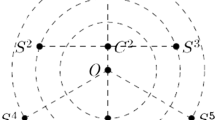

In machine learning, ensembles are combinations of classifiers whose objective is to improve accuracy. In previous work, we presented a rotation-based method for generating ensembles. It transforms the training data set by randomly grouping the attributes into subgroups and applying an axis rotation to each group. If the method used to induce the classifiers is not invariant to rotations of the data set, the generated classifiers can be very different. In this way, different classifiers can be obtained (and combined) using the same induction method.
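The transformation described above can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not say how the rotation of each group is chosen, so this example assumes random plane (Givens) rotations applied to consecutive attribute pairs within each group. The names `split_attributes`, `rotate_group`, and `rotate_dataset` are hypothetical.

```python
import math
import random


def split_attributes(n_attributes, n_groups, rng):
    """Randomly partition attribute indices into n_groups disjoint groups."""
    indices = list(range(n_attributes))
    rng.shuffle(indices)
    return [indices[g::n_groups] for g in range(n_groups)]


def rotate_group(row, group, angles):
    """Rotate one row in place, one plane rotation per consecutive attribute pair.

    Assumption: a product of plane rotations stands in for the per-group
    axis rotation, whose exact construction the abstract does not give.
    """
    for (i, j), theta in zip(zip(group, group[1:]), angles):
        c, s = math.cos(theta), math.sin(theta)
        row[i], row[j] = c * row[i] - s * row[j], s * row[i] + c * row[j]


def rotate_dataset(data, n_groups, seed):
    """Return a rotated copy of the data and the attribute groups used."""
    rng = random.Random(seed)
    groups = split_attributes(len(data[0]), n_groups, rng)
    # One angle per consecutive pair, shared by every row, so that the
    # whole data set undergoes the same rotation.
    angles = [[rng.uniform(0.0, 2.0 * math.pi) for _ in group[1:]]
              for group in groups]
    rotated = []
    for row in data:
        new_row = list(row)
        for group, group_angles in zip(groups, angles):
            rotate_group(new_row, group, group_angles)
        rotated.append(new_row)
    return rotated, groups


data = [[1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 2.5, 0.0]]
rotated, groups = rotate_dataset(data, n_groups=2, seed=1)
```

Each seed yields a different grouping and different angles, so training the same induction method on `rotate_dataset(data, n_groups, seed)` for several seeds would give one ensemble member per seed. A rotation preserves distances within each group, which is why only learners that are not rotation-invariant (axis-parallel decision trees, for instance) produce different classifiers.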

The bias-variance decomposition of the error gives some insight into the behaviour of a classifier, and it has been used to explain the success of ensemble learning techniques. In this work, the bias and variance of the presented method and of other ensemble methods are calculated and compared.
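As a rough illustration of how bias and variance can be estimated for zero-one loss, the sketch below assumes a main-prediction style decomposition with noise-free labels. The abstract does not state which decomposition the paper uses, so this is an assumption, and the function name `bias_variance_01` is hypothetical. The input `predictions[k][i]` is the label predicted for test example `i` by the classifier trained on the `k`-th training sample.

```python
from collections import Counter


def bias_variance_01(predictions, true_labels):
    """Estimate average bias and variance under zero-one loss.

    Assumption: for each test example, the main prediction is the most
    frequent label over all trained classifiers; bias is 1 when the main
    prediction is wrong, and variance is the fraction of classifiers
    that disagree with the main prediction.
    """
    n_models = len(predictions)
    n_examples = len(true_labels)
    bias_sum = 0.0
    variance_sum = 0.0
    for i, y in enumerate(true_labels):
        votes = [predictions[k][i] for k in range(n_models)]
        main = Counter(votes).most_common(1)[0][0]
        bias_sum += float(main != y)
        variance_sum += sum(v != main for v in votes) / n_models
    return bias_sum / n_examples, variance_sum / n_examples


# Three classifiers (rows) evaluated on three test examples (columns).
predictions = [
    ["a", "b", "a"],
    ["a", "b", "b"],
    ["a", "a", "b"],
]
true_labels = ["a", "a", "b"]
bias, variance = bias_variance_01(predictions, true_labels)
```

In this toy input, only the second example has a wrong main prediction, so it alone contributes to bias, while the second and third examples each have one dissenting classifier and contribute to variance.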

This work has been supported by the Spanish MCyT project DPI2001-01809.

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Rodríguez, J.J., Alonso, C.J., Prieto, O.J. (2005). Bias and Variance of Rotation-Based Ensembles. In: Cabestany, J., Prieto, A., Sandoval, F. (eds) Computational Intelligence and Bioinspired Systems. IWANN 2005. Lecture Notes in Computer Science, vol 3512. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11494669_95

DOI: https://doi.org/10.1007/11494669_95

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-26208-4

Online ISBN: 978-3-540-32106-4

eBook Packages: Computer Science, Computer Science (R0)