Abstract

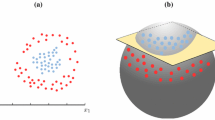

In recent years, support vector machines (SVMs) have become a popular tool for pattern recognition and machine learning. Training an SVM involves solving a constrained quadratic programming problem, which requires large amounts of memory and training time for large-scale problems. However, the SVM decision function is fully determined by a small subset of the training data, called support vectors. It is therefore desirable to remove from the training set the data that is irrelevant to the final decision function. In this paper we propose two new methods that select a subset of data for SVM training. Using real-world datasets, we compare the effectiveness of the proposed data selection strategies in terms of their ability to reduce the training set size while maintaining the generalization performance of the resulting SVM classifiers. Our experimental results show that a significant amount of training data can be removed by our proposed methods without degrading the performance of the resulting SVM classifiers.
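The claim that the decision function depends only on the support vectors can be illustrated with a small sketch. This is not one of the two selection methods proposed in the paper, which the abstract does not describe. It assumes a toy, linearly separable 2-D dataset whose dual coefficients (`alphas`) and bias were derived by hand for a hard-margin linear SVM rather than produced by a solver; all names (`decision_value`, `linear_kernel`, the data points) are hypothetical.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def linear_kernel(u: Vector, v: Vector) -> float:
    """Plain dot product, i.e. a linear kernel."""
    return sum(a * b for a, b in zip(u, v))


def decision_value(
    x: Vector,
    points: Sequence[Vector],
    labels: Sequence[int],
    alphas: Sequence[float],
    bias: float,
    kernel: Callable[[Vector, Vector], float] = linear_kernel,
) -> float:
    """Evaluate f(x) = sum_i alpha_i * y_i * K(x_i, x) + b."""
    total = sum(a * y * kernel(p, x) for p, y, a in zip(points, labels, alphas))
    return total + bias


# Toy training set (assumed). The first two points lie on the margin and are
# the support vectors; the last two lie far from the boundary.
points = [(1.0, 0.0), (-1.0, 0.0), (3.0, 2.0), (-4.0, 1.0)]
labels = [1, -1, 1, -1]
# Hand-derived hard-margin solution: w = (1, 0), b = 0, so alpha = 0.5 for
# each support vector and alpha = 0 for every other point.
alphas = [0.5, 0.5, 0.0, 0.0]
bias = 0.0

# Keep only the points with non-zero alpha (the support vectors).
keep = [i for i, a in enumerate(alphas) if a > 0]
sv_points = [points[i] for i in keep]
sv_labels = [labels[i] for i in keep]
sv_alphas = [alphas[i] for i in keep]

query = (2.0, 5.0)
full_value = decision_value(query, points, labels, alphas, bias)
reduced_value = decision_value(query, sv_points, sv_labels, sv_alphas, bias)
print(full_value, reduced_value)
```

Both calls return the same value because points with a zero coefficient contribute nothing to the sum. In practice the coefficients are unknown before training, which is why a selection method has to estimate in advance which points are likely to become support vectors.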

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Wang, J., Neskovic, P., Cooper, L.N. (2005). Training Data Selection for Support Vector Machines. In: Wang, L., Chen, K., Ong, Y.S. (eds) Advances in Natural Computation. ICNC 2005. Lecture Notes in Computer Science, vol 3610. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11539087_71

DOI: https://doi.org/10.1007/11539087_71

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-28323-2

Online ISBN: 978-3-540-31853-8

eBook Packages: Computer Science, Computer Science (R0)