Abstract

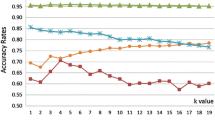

The k-nearest neighbor rule is one of the most attractive pattern classification algorithms. In practice, the value of k is usually determined by the cross-validation method. In this work, we propose a new method that locally determines the number of nearest neighbors based on the concept of statistical confidence. We define the confidence associated with decisions that are made by the majority rule from a finite number of observations and use it as a criterion to determine the number of nearest neighbors needed. The new algorithm is tested on several real-world datasets and yields results comparable to those obtained by the k-nearest neighbor rule. In contrast to the k-nearest neighbor rule that uses a fixed number of nearest neighbors throughout the feature space, our method locally adjusts the number of neighbors until a satisfactory level of confidence is reached. In addition, the statistical confidence provides a natural way to balance the trade-off between the reject rate and the error rate by excluding patterns that have low confidence levels.
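
The abstract does not give the confidence formula, so the sketch below illustrates the idea under stated assumptions. It is not the authors' algorithm. It assumes two classes and Euclidean distance. It also assumes that the confidence of a majority vote is the posterior probability, under a uniform prior, that the majority class's true local frequency exceeds 1/2. Neighbors are added one at a time until this confidence reaches `threshold` or `k_max` neighbors have been used. A decision whose confidence stays below `reject_below` is rejected. The function names and the parameters `threshold`, `k_max`, and `reject_below` are hypothetical.

```python
import math
from collections import Counter


def majority_confidence(n_major: int, n_minor: int) -> float:
    """Posterior probability that the majority class's local frequency p exceeds 1/2.

    Assumption (not from the paper): uniform Beta(1, 1) prior on p, so the posterior
    after n_major majority and n_minor minority votes is Beta(n_major + 1, n_minor + 1).
    For integer parameters, P(p > 1/2) equals the binomial CDF
    P(Binomial(n_major + n_minor + 1, 1/2) <= n_major), computed exactly here.
    """
    n = n_major + n_minor + 1
    return sum(math.comb(n, j) for j in range(n_major + 1)) / 2 ** n


def adaptive_knn_classify(train_x, train_y, query, threshold=0.9, k_max=25):
    """Add neighbors one at a time until the majority vote is confident enough.

    Returns (label, confidence, k). Labels other than the current majority are
    pooled as the "minority", which is exact only for two-class problems.
    """
    # Visit training points from nearest to farthest (Euclidean distance).
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    counts = Counter()
    label, conf, k = None, 0.0, 0
    for k, i in enumerate(order[:k_max], start=1):
        counts[train_y[i]] += 1
        label, n_major = counts.most_common(1)[0]
        conf = majority_confidence(n_major, k - n_major)
        if conf >= threshold:  # confident enough: stop growing the neighborhood
            break
    return label, conf, k


def classify_with_reject(train_x, train_y, query, reject_below=0.75, **kwargs):
    """Return the adaptive k-NN label, or None when its confidence is too low."""
    label, conf, _ = adaptive_knn_classify(train_x, train_y, query, **kwargs)
    return label if conf >= reject_below else None


train_x = [(0.0,), (0.1,), (0.2,), (0.3,), (1.0,), (1.1,), (1.2,), (1.3,)]
train_y = ["a"] * 4 + ["b"] * 4
print(adaptive_knn_classify(train_x, train_y, (0.05,)))  # ('a', 0.9375, 3)
print(classify_with_reject(train_x, train_y, (0.65,)))   # None: neighbors split evenly
```

Near the first query, three unanimous neighbors already push the confidence past 0.9, so `k` stays small. At the midpoint, the vote never becomes lopsided and the pattern is rejected. Raising `reject_below` rejects more patterns and will typically lower the error rate on those that remain. This is the reject/error trade-off described above.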

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Wang, J., Neskovic, P., Cooper, L.N. (2005). Locally Determining the Number of Neighbors in the k-Nearest Neighbor Rule Based on Statistical Confidence. In: Wang, L., Chen, K., Ong, Y.S. (eds) Advances in Natural Computation. ICNC 2005. Lecture Notes in Computer Science, vol 3610. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11539087_9

DOI: https://doi.org/10.1007/11539087_9

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-28323-2

Online ISBN: 978-3-540-31853-8

eBook Packages: Computer Science, Computer Science (R0)