Abstract

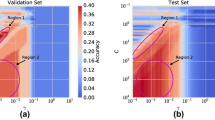

We consider a practical advantage of the Bayesian approach over maximum a posteriori (MAP) methods: its ability to smooth the landscape of generalization performance measures in hyperparameter space, which is vitally important for determining the optimal hyperparameters. The variational method is used to approximate the intractable distribution. Using the leave-one-out error of support vector regression as an example, we demonstrate a further advantage of this method: the leave-one-out error can be estimated analytically, without performing cross-validation. Our theory agrees well with simulations on both an artificial data set (the “sinc” function) and a benchmark data set (Boston Housing).
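The idea of estimating a leave-one-out (LOO) error analytically from a single fit, rather than refitting once per held-out point, has a well-known exact analogue for kernel ridge regression (equivalently, the posterior mean of a Gaussian process). With A = K + λI, the held-out residual for point i equals (A⁻¹y)ᵢ / (A⁻¹)ᵢᵢ. The sketch below is not the authors' variational estimator for support vector regression. It swaps in kernel ridge regression, and the RBF kernel, the ridge value 0.1, the width grid, the noise level and the sample size are all illustrative assumptions. It checks the closed form against brute-force LOO on noisy "sinc" data.

```python
import math
import random


def sinc(x):
    # The "sinc" target of the artificial data set: sin(x)/x, with sinc(0) = 1.
    return 1.0 if x == 0.0 else math.sin(x) / x


def rbf_kernel(x, y, width):
    # Assumption: a Gaussian (RBF) kernel, chosen only for illustration.
    return math.exp(-((x - y) ** 2) / (2.0 * width ** 2))


def invert(matrix):
    # Gauss-Jordan inversion with partial pivoting; adequate for small,
    # well-conditioned matrices such as K + ridge * I.
    n = len(matrix)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0.0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def regularized_inverse(xs, width, ridge):
    # (K + ridge * I)^{-1} for kernel ridge regression on inputs xs.
    return invert([[rbf_kernel(a, b, width) + (ridge if i == j else 0.0)
                    for j, b in enumerate(xs)] for i, a in enumerate(xs)])


def loo_brute_force(xs, ys, width, ridge):
    # Explicit leave-one-out: refit n times, each time predicting the held-out point.
    n = len(xs)
    total = 0.0
    for i in range(n):
        tx, ty = xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:]
        a_inv = regularized_inverse(tx, width, ridge)
        alpha = [sum(a_inv[r][c] * ty[c] for c in range(n - 1)) for r in range(n - 1)]
        pred = sum(alpha[r] * rbf_kernel(tx[r], xs[i], width) for r in range(n - 1))
        total += (ys[i] - pred) ** 2
    return total / n


def loo_analytic(xs, ys, width, ridge):
    # Closed form from one fit (kernel ridge stand-in, NOT the paper's SVR method):
    # with A = K + ridge * I, the LOO residual of point i is (A^{-1} y)_i / (A^{-1})_ii.
    n = len(xs)
    a_inv = regularized_inverse(xs, width, ridge)
    total = 0.0
    for i in range(n):
        alpha_i = sum(a_inv[i][j] * ys[j] for j in range(n))
        total += (alpha_i / a_inv[i][i]) ** 2
    return total / n


# Hypothetical toy setup: 30 evenly spaced inputs on [-10, 10], Gaussian noise
# with standard deviation 0.1, ridge 0.1; none of these values come from the paper.
rng = random.Random(0)
xs = [-10.0 + 20.0 * i / 29 for i in range(30)]
ys = [sinc(x) + rng.gauss(0.0, 0.1) for x in xs]

for width in (0.5, 1.0, 2.0):
    exact = loo_brute_force(xs, ys, width, ridge=0.1)
    fast = loo_analytic(xs, ys, width, ridge=0.1)
    print(f"width={width}: brute-force LOO={exact:.6f}  closed-form LOO={fast:.6f}")
```

Because the closed form needs one matrix inverse per hyperparameter setting instead of n refits, scanning a grid of kernel widths to map out the LOO landscape becomes cheap. The paper's contribution is an analogous analytic estimate for support vector regression under the variational approximation, which this sketch does not reproduce.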

An erratum to this chapter can be found at http://dx.doi.org/10.1007/11550907_163 .

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Gao, Z., Wong, K.Y.M. (2005). Smooth Performance Landscapes of the Variational Bayesian Approach. In: Duch, W., Kacprzyk, J., Oja, E., Zadrożny, S. (eds) Artificial Neural Networks: Formal Models and Their Applications – ICANN 2005. ICANN 2005. Lecture Notes in Computer Science, vol 3697. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11550907_38

DOI: https://doi.org/10.1007/11550907_38

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-28755-1

Online ISBN: 978-3-540-28756-8

eBook Packages: Computer Science, Computer Science (R0)