Abstract

Variational Bayesian learning, proposed as an approximation of Bayesian learning, has provided computational tractability and good generalization performance in many applications. However, little has been done to investigate its theoretical properties.

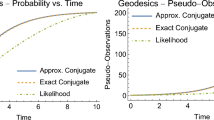

In this paper, we discuss Variational Bayesian learning of mixtures of exponential families and derive the asymptotic form of the stochastic complexities. We show that the stochastic complexities become smaller than those of regular statistical models, which implies that the advantage of Bayesian learning is retained in Variational Bayesian learning. The stochastic complexity, also called the marginal likelihood or the free energy, is not only important for addressing the model selection problem but also enables us to discuss the accuracy of the Variational Bayesian approach as an approximation of true Bayesian learning.
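For orientation, the quantities named above can be written out in their standard textbook form. This is a sketch only: the notation ($X^n$ for the data, $Y^n$ for the hidden component labels, $\theta$ for the parameters, $\varphi$ for the prior, $q$ for the trial distribution, $d$ for the parameter dimension) is assumed here and is not taken from the paper itself.

```latex
% Bayesian stochastic complexity (negative log marginal likelihood)
F(X^n) = -\log \int \prod_{i=1}^{n} p(x_i \mid \theta)\, \varphi(\theta)\, d\theta

% Variational free energy for a trial distribution q over (Y^n, \theta);
% Variational Bayes restricts q to the factorized form q(Y^n, \theta) = Q(Y^n)\, r(\theta)
\overline{F}[q] = \sum_{Y^n} \int q(Y^n, \theta)\,
    \log \frac{q(Y^n, \theta)}{p(X^n, Y^n \mid \theta)\, \varphi(\theta)}\, d\theta
  = F(X^n) + \mathrm{KL}\!\left( q(Y^n, \theta) \,\middle\|\, p(Y^n, \theta \mid X^n) \right)
  \;\ge\; F(X^n)

% Reference point: for a regular model with d parameters and
% maximum likelihood estimate \hat{\theta}, the asymptotic form is
F(X^n) \simeq -\sum_{i=1}^{n} \log p(x_i \mid \hat{\theta}) + \frac{d}{2} \log n
```

Under these assumptions, the gap between the minimized $\overline{F}$ and $F(X^n)$ is the Kullback-Leibler divergence from the variational posterior to the true posterior, which is why the asymptotic form of the variational stochastic complexity speaks to the accuracy of the approximation. A smaller coefficient of $\log n$ than $d/2$ is the sense in which a stochastic complexity is smaller than that of a regular model.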

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Watanabe, K., Watanabe, S. (2005). Stochastic Complexity for Mixture of Exponential Families in Variational Bayes. In: Jain, S., Simon, H.U., Tomita, E. (eds) Algorithmic Learning Theory. ALT 2005. Lecture Notes in Computer Science, vol 3734. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11564089_10

DOI: https://doi.org/10.1007/11564089_10

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-29242-5

Online ISBN: 978-3-540-31696-1

eBook Packages: Computer Science, Computer Science (R0)