Abstract

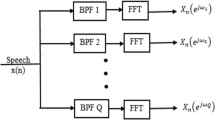

This paper presents an approach to emotion recognition from speech signals. First, the intonation groups (IGs) of the input speech signal are extracted, and speech features are then extracted from each selected intonation group. Assuming a linear mapping between the feature spaces of different emotional states, a feature compensation approach is proposed to obtain a feature space with better discriminability among emotional states. The compensation vector for each emotional state is estimated using the Minimum Classification Error (MCE) algorithm. The compensated IG-based feature vectors are used to train a Gaussian Mixture Model (GMM) for each emotional state. The emotional state whose GMM yields the maximal likelihood ratio is chosen as the final output. Experimental results show that IG-based feature extraction and compensation achieve encouraging performance for emotion recognition.
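The decision step summarized in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation, and several details are assumptions made only for illustration: the compensation is modeled as a simple additive shift per emotional state, the compensation vectors and GMM parameters are taken as given rather than estimated with MCE or trained, the GMMs use diagonal covariances, and the score is a summed log-likelihood rather than the likelihood ratio mentioned in the abstract. All names (`classify`, `models`, `compensation`) and all numeric values are hypothetical.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of a diagonal-covariance Gaussian evaluated at x."""
    return sum(
        -0.5 * (math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )


def gmm_log_likelihood(x, gmm):
    """Log-likelihood of x under a GMM given as (weight, mean, var) triples."""
    terms = [math.log(w) + log_gaussian_diag(x, m, v) for w, m, v in gmm]
    peak = max(terms)  # log-sum-exp for numerical stability
    return peak + math.log(sum(math.exp(t - peak) for t in terms))


def classify(ig_vectors, models, compensation):
    """Score each emotion on its compensated IG vectors and pick the best.

    Assumption: compensation is an additive shift per emotional state.
    """
    scores = {}
    for emotion, gmm in models.items():
        shift = compensation[emotion]
        total = 0.0
        for x in ig_vectors:
            shifted = [xi + si for xi, si in zip(x, shift)]
            total += gmm_log_likelihood(shifted, gmm)
        scores[emotion] = total
    return max(scores, key=scores.get), scores


# Hypothetical toy parameters: two emotions, two-dimensional IG features.
models = {
    "neutral": [(1.0, [0.0, 0.0], [1.0, 1.0])],
    "angry": [(0.5, [3.0, 3.0], [1.0, 1.0]), (0.5, [4.0, 4.0], [1.0, 1.0])],
}
compensation = {"neutral": [0.0, 0.0], "angry": [0.5, 0.5]}
label, scores = classify([[3.0, 2.5], [3.5, 3.2]], models, compensation)
print(label, scores)
```

Summing log-likelihoods over the IG vectors treats the intonation groups of an utterance as independent, which is a simplification chosen here to keep the sketch short.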

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Chuang, ZJ., Wu, CH. (2005). IG-Based Feature Extraction and Compensation for Emotion Recognition from Speech. In: Tao, J., Tan, T., Picard, R.W. (eds) Affective Computing and Intelligent Interaction. ACII 2005. Lecture Notes in Computer Science, vol 3784. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11573548_46

DOI: https://doi.org/10.1007/11573548_46

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-29621-8

Online ISBN: 978-3-540-32273-3

eBook Packages: Computer Science, Computer Science (R0)