Abstract

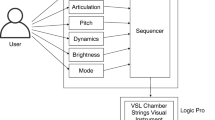

This work proposes a new way of providing feedback on expressivity in music performance. Building on studies of expressivity in music performance, we developed a system that gives the user visual feedback through a graphical representation of a human face. The first part of the system, previously developed by researchers at KTH Stockholm and at the University of Uppsala, extracts and analyzes acoustic cues from the music performance in real time. The extracted cues are sound level, tempo, articulation, attack time, and spectrum energy. From these cues the system derives a high-level interpretation of the performer's emotional intention, which is classified into one basic emotion, such as happiness, sadness, or anger. We have implemented an interface between that system and the embodied conversational agent Greta, developed at the University of Rome “La Sapienza” and the University of Paris 8. We model the expressivity of the agent's facial animation with a set of six dimensions that characterize the manner of behavior execution. In this paper we first describe a mapping between the acoustic cues and the expressivity dimensions of the face. We then show how to determine the facial expression corresponding to the emotional intention resulting from the acoustic analysis, using the sound level and tempo of the music to control the intensity and the temporal variation of muscular activation.
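The two steps described above (mapping acoustic cues onto expressivity dimensions, then letting sound level and tempo shape the intensity and timing of a facial expression) can be illustrated with a minimal Python sketch. The six dimension names follow the expressivity model of Hartmann, Mancini and Pelachaud (overall activation, spatial extent, temporal extent, fluidity, power, repetition). Everything else is a hypothetical choice made for illustration and is not the mapping used in the paper: the cue ranges in `CUE_RANGES`, which cue drives which dimension, the assumed [-1, 1] output range, and the onset/apex/offset fractions in `facial_envelope`.

```python
from dataclasses import dataclass

# Hypothetical normalization ranges for the five cues named in the abstract.
# These numbers are illustrative assumptions, not values from the paper.
CUE_RANGES = {
    "sound_level_db": (-40.0, 0.0),
    "tempo_bpm": (40.0, 200.0),
    "articulation": (0.0, 1.0),      # assumed scale: 0 = legato, 1 = staccato
    "attack_ms": (5.0, 200.0),
    "spectral_energy": (0.0, 1.0),
}


def normalize(name: str, value: float) -> float:
    """Linearly map a raw cue onto [0, 1], clamping values outside the range."""
    lo, hi = CUE_RANGES[name]
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


def to_signed(x: float) -> float:
    """Map [0, 1] onto [-1, 1], the range assumed here for expressivity values."""
    return 2.0 * x - 1.0


@dataclass
class Expressivity:
    overall_activation: float
    spatial_extent: float
    temporal_extent: float
    fluidity: float
    power: float
    repetition: float


def cues_to_expressivity(cues: dict) -> Expressivity:
    """Hypothetical cue-to-dimension mapping; each dimension uses one or two cues."""
    n = {name: normalize(name, value) for name, value in cues.items()}
    return Expressivity(
        overall_activation=to_signed((n["sound_level_db"] + n["tempo_bpm"]) / 2),
        spatial_extent=to_signed(n["sound_level_db"]),   # louder -> wider movements
        temporal_extent=to_signed(n["tempo_bpm"]),       # faster -> quicker movements
        fluidity=to_signed(1.0 - n["articulation"]),     # legato -> smoother motion
        power=to_signed(1.0 - n["attack_ms"]),           # sharper attack -> more power
        repetition=to_signed(n["spectral_energy"]),
    )


def facial_envelope(peak: float, sound_level_db: float, tempo_bpm: float):
    """Sound level scales intensity; tempo sets onset/apex/offset durations (seconds).

    Assumption: onset and offset each last a quarter beat, the apex half a beat.
    """
    beat_s = 60.0 / tempo_bpm
    amplitude = peak * normalize("sound_level_db", sound_level_db)
    return amplitude, 0.25 * beat_s, 0.5 * beat_s, 0.25 * beat_s


def activation_at(t: float, amplitude: float, onset: float, apex: float, offset: float) -> float:
    """Trapezoidal muscle activation: ramp up, hold at the apex, ramp down."""
    end = onset + apex + offset
    if t <= 0.0 or t >= end:
        return 0.0
    if t < onset:
        return amplitude * t / onset
    if t <= onset + apex:
        return amplitude
    return amplitude * (end - t) / offset
```

A trapezoidal onset/apex/offset profile is used here only because it is a simple way to make intensity (the plateau height) and temporal variation (the ramp and hold durations) independently controllable; a louder performance raises the plateau, and a faster tempo shortens every phase.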

Copyright information

© 2006 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Mancini, M., Bresin, R., Pelachaud, C. (2006). From Acoustic Cues to an Expressive Agent. In: Gibet, S., Courty, N., Kamp, JF. (eds) Gesture in Human-Computer Interaction and Simulation. GW 2005. Lecture Notes in Computer Science, vol 3881. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11678816_31

DOI: https://doi.org/10.1007/11678816_31

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-32624-3

Online ISBN: 978-3-540-32625-0

eBook Packages: Computer Science, Computer Science (R0)