Abstract

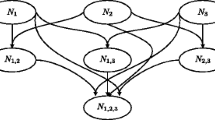

This paper addresses the problem of improving classification performance by means other than strengthening the ability of particular machine learning algorithms to construct a precise classification model. Instead, we approach the problem through an extended encoding of the training data. More specifically, our method generates additional features in order to reveal hidden aspects of the domain modeled by the available training examples. We propose a novel feature construction algorithm based on the ability of Bayesian networks to represent the conditional independence assumptions among a set of features, thereby exposing relational attributes that are not always obvious to a classifier when the data are presented in their original format. The augmented feature set yields a significant increase in classification performance, as demonstrated on a wide range of machine learning domains (data sets from the UCI ML repository and the Artificial Intelligence group) using a variety of classifiers based on different theoretical backgrounds.
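The abstract does not spell out the construction procedure, so the following is only a rough illustration of the general idea of augmenting a feature set with attributes that make dependencies between features explicit. It is not the authors' algorithm: pairwise mutual information is used here as an assumed stand-in for the dependencies a learned Bayesian network would encode, and the names `mutual_information`, `augment`, and `top_k` are hypothetical.

```python
from collections import Counter
from itertools import combinations
from math import log2


def mutual_information(xs, ys):
    """Empirical mutual information (in bits) between two discrete columns."""
    n = len(xs)
    px, py, pxy = Counter(xs), Counter(ys), Counter(zip(xs, ys))
    return sum(
        (c / n) * log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in pxy.items()
    )


def augment(rows, top_k=1):
    """Append one joint feature per strongly dependent feature pair.

    rows: list of equal-length tuples of discrete feature values.
    Assumption: pairwise mutual information approximates the arcs that a
    Bayesian network structure learner would place between features.
    Returns the augmented rows and the list of chosen index pairs.
    """
    columns = list(zip(*rows))
    # Rank all feature pairs by how strongly they depend on each other.
    ranked = sorted(
        combinations(range(len(columns)), 2),
        key=lambda pair: mutual_information(columns[pair[0]], columns[pair[1]]),
        reverse=True,
    )
    chosen = ranked[:top_k]
    # Each new feature is the joint value of one chosen pair.
    augmented = [
        tuple(row) + tuple((row[i], row[j]) for i, j in chosen)
        for row in rows
    ]
    return augmented, chosen
```

A classifier that treats features as independent, such as naive Bayes, can then use the joint attribute to capture an interaction it would otherwise miss. How the paper actually selects and combines features is not stated in the abstract.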

Copyright information

© 2006 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Maragoudakis, M., Fakotakis, N. (2006). Bayesian Feature Construction. In: Antoniou, G., Potamias, G., Spyropoulos, C., Plexousakis, D. (eds) Advances in Artificial Intelligence. SETN 2006. Lecture Notes in Computer Science, vol 3955. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11752912_25

DOI: https://doi.org/10.1007/11752912_25

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-34117-8

Online ISBN: 978-3-540-34118-5

eBook Packages: Computer Science (R0)