Abstract

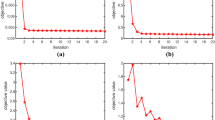

It is widely recognized that the performance of kernel-based methods depends on how well the selected kernel matches the data. Ideally, the data are linearly separable in the kernel-induced feature space, in which case the Fisher linear discriminant criterion can be used as a kernel optimization rule. In many applications, however, the data are not linearly separable even after the kernel transformation. A nonlinear classifier is then preferred, and the Fisher criterion is no longer the best choice of kernel optimization rule. Motivated by this issue, we present a novel kernel optimization method that maximizes the local class linear separability in kernel space, increasing the local margins between embedded classes via a localized kernel Fisher criterion. This improves the classification performance of a nonlinear classifier in the kernel-induced feature space. Extensive experiments are carried out to evaluate the efficiency of the proposed method.
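The abstract does not state the formula of the localized criterion, so the following is only a minimal sketch of one plausible reading, not the authors' method. It assumes an RBF kernel, a trace-ratio form of the Fisher criterion computed from the kernel matrix, and a "local" version obtained by averaging that ratio over each sample's `k` nearest neighbors in kernel space. The function names (`rbf_kernel`, `fisher_traces`, `localized_fisher`) and the parameters `gamma`, `k`, and `eps` are hypothetical and chosen for illustration.

```python
import numpy as np


def rbf_kernel(X, gamma):
    """RBF kernel matrix K[i, j] = exp(-gamma * ||x_i - x_j||^2)."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    return np.exp(-gamma * np.maximum(d2, 0.0))


def fisher_traces(K, y):
    """Traces of the between-class and within-class scatter in feature space.

    Both are computed from the kernel matrix alone:
      tr(S_b) = sum_c (1/n_c) sum_{i,j in c} K_ij - (1/n) sum_{i,j} K_ij
      tr(S_w) = sum_i K_ii - sum_c (1/n_c) sum_{i,j in c} K_ij
    """
    n = K.shape[0]
    class_term = 0.0
    for c in np.unique(y):
        idx = np.where(y == c)[0]
        class_term += K[np.ix_(idx, idx)].sum() / len(idx)
    tr_sb = class_term - K.sum() / n
    tr_sw = np.trace(K) - class_term
    return tr_sb, tr_sw


def localized_fisher(K, y, k=10, eps=1e-12):
    """Assumed local criterion: mean trace ratio over k-NN neighborhoods.

    Neighbors are found with the kernel-space distance
    d^2(i, j) = K_ii + K_jj - 2 K_ij; each neighborhood includes the
    sample itself. A neighborhood with a single class contributes 0.
    """
    diag = np.diag(K)
    d2 = diag[:, None] + diag[None, :] - 2.0 * K
    scores = []
    for i in range(K.shape[0]):
        nbrs = np.argsort(d2[i])[:k]
        tr_sb, tr_sw = fisher_traces(K[np.ix_(nbrs, nbrs)], y[nbrs])
        scores.append(tr_sb / (tr_sw + eps))
    return float(np.mean(scores))


if __name__ == "__main__":
    # Toy data: two Gaussian blobs; pick the RBF width by a simple grid search.
    rng = np.random.default_rng(0)
    X = np.vstack([rng.normal(0.0, 1.0, (30, 2)), rng.normal(2.0, 1.0, (30, 2))])
    y = np.array([0] * 30 + [1] * 30)
    gammas = [0.01, 0.1, 1.0, 10.0]
    best = max(gammas, key=lambda g: localized_fisher(rbf_kernel(X, g), y))
    print("selected gamma:", best)
```

The grid search stands in for whatever optimization procedure the paper uses; a gradient-based update of the kernel parameters would be a natural alternative under the same criterion.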

© 2006 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Chen, B., Liu, H., Bao, Z. (2006). A Kernel Optimization Method Based on the Localized Kernel Fisher Criterion. In: Wang, J., Yi, Z., Zurada, J.M., Lu, BL., Yin, H. (eds) Advances in Neural Networks - ISNN 2006. ISNN 2006. Lecture Notes in Computer Science, vol 3971. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11759966_134

DOI: https://doi.org/10.1007/11759966_134

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-34439-1

Online ISBN: 978-3-540-34440-7

eBook Packages: Computer Science, Computer Science (R0)