Abstract

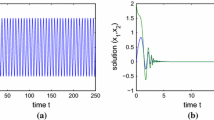

The energy function of a continuous-time neural network is analyzed to test for the existence of stationary points and the global convergence of the network. When the total conductance of each neuron's input is zero (G_i = 0), the energy function has exactly one stationary point, and it is a saddle point in the unconstrained space. When the total conductance is nonzero (G_i ≠ 0), stationary points exist only inside the hypercube [0,1]^n. The Hessian matrix of the energy function is used to test the global convergence of the network.
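
As a rough illustration of the Hessian test described above, the sketch below assumes the standard Hopfield-Tank energy form with a logistic activation; the abstract itself does not state the formula. The energy form, the gain `lam`, the example weight matrix `W`, the bias `b`, and the helper names `energy_hessian` and `classify` are all assumptions made for this example, not the authors' method.

```python
import numpy as np

def energy_hessian(W, G, v, lam=1.0):
    """Hessian of an ASSUMED Hopfield-Tank energy (not taken from the paper):
    E(v) = -1/2 v^T W v - b^T v + sum_i G_i * integral_0^{v_i} f^{-1}(z) dz,
    with f(u) = 1 / (1 + exp(-lam * u)), so d f^{-1}(v)/dv = 1 / (lam * v * (1 - v)).
    The bias b is linear in v and therefore does not appear in the Hessian."""
    return -W + np.diag(G / (lam * v * (1.0 - v)))

def classify(H, tol=1e-10):
    """Classify a stationary point from the eigenvalues of a symmetric Hessian."""
    eig = np.linalg.eigvalsh(H)
    if np.all(eig > tol):
        return "minimum"
    if np.all(eig < -tol):
        return "maximum"
    if eig.min() < -tol and eig.max() > tol:
        return "saddle"
    return "degenerate"

# Hypothetical two-neuron network: symmetric weights, zero diagonal.
W = np.array([[0.0, -2.0],
              [-2.0, 0.0]])
b = np.array([1.0, 1.0])

# With G_i = 0 the energy is quadratic, so the gradient -W v - b vanishes
# at the single point solving W v = -b (W is nonsingular here).
v_star = np.linalg.solve(W, -b)

case_zero = classify(energy_hessian(W, np.zeros(2), v_star))    # G_i = 0
case_nonzero = classify(energy_hessian(W, np.ones(2), v_star))  # G_i = 1

print(v_star)        # [0.5 0.5]
print(case_zero)     # saddle
print(case_nonzero)  # minimum
```

In this example the point v = (0.5, 0.5) is stationary in both cases, because the inverse logistic function is zero at 0.5. With G_i = 0 the Hessian is -W, whose trace is zero when W has a zero diagonal, so its eigenvalues have mixed signs and the point is a saddle. With G_i = 1 the added diagonal term makes the Hessian positive definite at that point.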

Copyright information

© 2006 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Kang, MJ., Kim, HC., Khan, F.A., Song, WC., Zurada, J.M. (2006). Convergence Analysis of Continuous-Time Neural Networks. In: Wang, J., Yi, Z., Zurada, J.M., Lu, BL., Yin, H. (eds) Advances in Neural Networks - ISNN 2006. ISNN 2006. Lecture Notes in Computer Science, vol 3971. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11759966_15

DOI: https://doi.org/10.1007/11759966_15

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-34439-1

Online ISBN: 978-3-540-34440-7

eBook Packages: Computer Science, Computer Science (R0)