Abstract

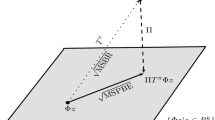

We study online learning in which the decision maker seeks to maximize her long-term average reward subject to the requirement that certain average constraints be satisfied along the sample path. We define the reward-in-hindsight as the highest reward the decision maker could have achieved while satisfying the constraints, had she known Nature’s choices in advance. We show that, in general, the reward-in-hindsight is not attainable. The convex hull of the reward-in-hindsight function, however, is attainable. For the important case of a single constraint, the convex hull turns out to be the highest attainable function. We further provide an explicit strategy, based on a calibrated forecasting rule, that attains this convex hull.
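To make the two central objects concrete, here is a minimal sketch of computing the reward-in-hindsight and its convex hull for a small game. Everything in it is an assumption for illustration, not taken from the paper: a hypothetical 2x2 game with reward matrix `R`, cost matrix `C` and cost threshold `GAMMA`; mixed actions `p` (decision maker) and `q` (Nature); a single constraint; and "convex hull of a function" read as its lower convex envelope, i.e. the largest convex function lying below it. The envelope is computed on a grid of `q` values with Andrew's monotone-chain algorithm, a standard geometric routine swapped in here for convenience. The sketch does not implement the calibrated-forecast strategy.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]
Point = Tuple[float, float]


def expected(m: Matrix, p: float, q: float) -> float:
    """Expected entry of m when the decision maker plays row 1 w.p. p
    and Nature plays column 1 w.p. q (hypothetical 2x2 setting)."""
    pa = (1.0 - p, p)
    pb = (1.0 - q, q)
    return sum(pa[a] * pb[b] * m[a][b] for a in range(2) for b in range(2))


def reward_in_hindsight(r: Matrix, c: Matrix, gamma: float, q: float) -> float:
    """max over p in [0, 1] of r(p, q) subject to c(p, q) <= gamma.

    For fixed q both reward and cost are linear in p, so the feasible set
    is an interval and the maximum sits at one of its endpoints: 0, 1, or
    the point where the constraint binds.
    """
    c0, c1 = expected(c, 0.0, q), expected(c, 1.0, q)
    candidates = [0.0, 1.0]
    if c1 != c0:
        p_bind = (gamma - c0) / (c1 - c0)
        if 0.0 <= p_bind <= 1.0:
            candidates.append(p_bind)
    # Small tolerance so the binding point survives floating-point rounding.
    feasible = [p for p in candidates if expected(c, p, q) <= gamma + 1e-12]
    if not feasible:
        raise ValueError(f"constraint infeasible at q={q}")
    return max(expected(r, p, q) for p in feasible)


def lower_convex_hull(points: List[Point]) -> List[Point]:
    """Lower half of Andrew's monotone chain on x-sorted points: the
    vertices of the largest convex piecewise-linear function below them."""
    hull: List[Point] = []
    for pt in points:
        while len(hull) >= 2:
            (x0, y0), (x1, y1) = hull[-2], hull[-1]
            cross = (x1 - x0) * (pt[1] - y0) - (y1 - y0) * (pt[0] - x0)
            if cross > 0:  # strict left turn: hull[-1] stays on the lower hull
                break
            hull.pop()
        hull.append(pt)
    return hull


def evaluate(hull: List[Point], q: float) -> float:
    """Evaluate the envelope at q by linear interpolation between vertices."""
    for (x0, y0), (x1, y1) in zip(hull, hull[1:]):
        if x0 <= q <= x1:
            return y0 + (q - x0) * (y1 - y0) / (x1 - x0)
    raise ValueError(f"q={q} outside the grid")


# Hypothetical game: action 1 always pays 1 but costs 1 when Nature plays
# column 1; action 0 pays and costs nothing. Constraint: average cost <= 0.5.
R = [[0.0, 0.0], [1.0, 1.0]]
C = [[0.0, 0.0], [0.0, 1.0]]
GAMMA = 0.5

grid = [i / 100 for i in range(101)]
r_star = [reward_in_hindsight(R, C, GAMMA, q) for q in grid]
hull = lower_convex_hull(list(zip(grid, r_star)))

print(reward_in_hindsight(R, C, GAMMA, 0.5))  # 1.0
print(evaluate(hull, 0.5))                     # 0.75
```

In this made-up game the reward-in-hindsight equals 1 for q ≤ 0.5 and 0.5/q beyond, with a concave kink at q = 0.5, so it is not convex; its convex hull is the chord 1 - q/2, which at q = 0.5 gives 0.75 against a reward-in-hindsight of 1.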

Copyright information

© 2006 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Mannor, S., Tsitsiklis, J.N. (2006). Online Learning with Constraints. In: Lugosi, G., Simon, H.U. (eds) Learning Theory. COLT 2006. Lecture Notes in Computer Science, vol. 4005. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11776420_39

DOI: https://doi.org/10.1007/11776420_39

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-35294-5

Online ISBN: 978-3-540-35296-9

eBook Packages: Computer Science (R0)