Abstract

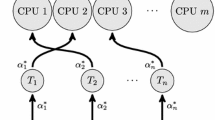

This paper presents a parallel reinforcement learning method considered communication cost. In our method, each agent communicates only action sequences with a constant episode interval. As the communication interval is longer, communication cost is smaller, but parallelism is lower. Implementing our method on PC cluster, we investigate such trade-off characteristics. We show that computation time to learning can be reduced by properly adjusting the communication interval.

Preview

Unable to display preview. Download preview PDF.

Similar content being viewed by others

References

Kretchmar, R.M.: Parallel Reinforcement Learning. In: Proc. of the 6th World Conference on Systemics, Cybernetics and Informatics, vol. 6, pp. 114–118 (2002)

Antonova, D.: Parallel Reinforcement Learning - Extending the Concept to Continuous Multi-State Tasks., thesis, Denison University (2003)

Watkins, C.J.H., Dayan, P.: Technical note: Q-learning. Machine Learning 8, 55–68 (1992)

Grefenstette, J.J.: Credit Assignment in Rule Discovery Systems Based on Genetic Algorithms. Machine Learning 3, 225–245 (1988)

Author information

Authors and Affiliations

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2006 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Fujishiro, T., Nakano, H., Miyauchi, A. (2006). Parallel Distributed Profit Sharing for PC Cluster. In: Kollias, S.D., Stafylopatis, A., Duch, W., Oja, E. (eds) Artificial Neural Networks – ICANN 2006. ICANN 2006. Lecture Notes in Computer Science, vol 4131. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11840817_84

Download citation

DOI: https://doi.org/10.1007/11840817_84

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-38625-4

Online ISBN: 978-3-540-38627-8

eBook Packages: Computer ScienceComputer Science (R0)