Abstract

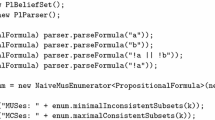

AI systems must be able to learn, reason logically, and handle uncertainty. While much research has focused on each of these goals individually, only recently have we begun to attempt to achieve all three at once. In this talk I will describe Markov logic, a representation that combines first-order logic and probabilistic graphical models, and algorithms for learning and inference in it. A knowledge base in Markov logic is a set of weighted first-order formulas, viewed as templates for features of Markov networks. The weights and probabilistic semantics make it easy to combine knowledge from a multitude of noisy, inconsistent sources, reason across imperfectly matched ontologies, etc. Inference in Markov logic is performed by weighted satisfiability testing, Markov chain Monte Carlo, and (where appropriate) specialized engines. Formulas can be refined using inductive logic programming techniques, and weights can be learned either generatively (using pseudo-likelihood) or discriminatively (using a voted perceptron). Markov logic has been successfully applied to problems in entity resolution, social network modeling, information extraction and others, and is the basis of the open-source Alchemy system.

(Joint work with Stanley Kok, Hoifung Poon, Matt Richardson and Parag Singla.)
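
To make the "weighted formulas as templates for Markov network features" idea concrete, here is a minimal sketch that is not taken from the talk itself. It assumes the log-linear form commonly given for Markov logic, P(X = x) = (1/Z) exp(Σ_i w_i n_i(x)), where n_i(x) is the number of true groundings of formula i in world x. The predicates `Smokes` and `Cancer`, the single constant `A`, and the weight 1.5 are all hypothetical choices made for illustration.

```python
import itertools
import math

# Hypothetical toy knowledge base: one weighted formula over one constant "A".
# Formula: Smokes(A) => Cancer(A), weight 1.5 (illustrative value only).
WEIGHT = 1.5


def n_true_groundings(smokes: bool, cancer: bool) -> int:
    """Count true groundings of Smokes(A) => Cancer(A) in this world (0 or 1)."""
    return int((not smokes) or cancer)


def world_probabilities(weight: float) -> dict:
    """Return P(world) = exp(weight * n(world)) / Z for every truth assignment."""
    worlds = list(itertools.product([False, True], repeat=2))
    scores = {w: math.exp(weight * n_true_groundings(*w)) for w in worlds}
    z = sum(scores.values())  # partition function over all four worlds
    return {w: s / z for w, s in scores.items()}


probs = world_probabilities(WEIGHT)
for (smokes, cancer), p in probs.items():
    print(f"Smokes={smokes!s:5} Cancer={cancer!s:5} P={p:.3f}")
```

In this sketch the world that violates the formula (Smokes true, Cancer false) is less probable than the others but not impossible, which is the sense in which weights soften logical constraints. Enumerating every world is only feasible for toy domains; the abstract names weighted satisfiability testing and Markov chain Monte Carlo as the inference methods used in practice.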

Copyright information

© 2006 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Domingos, P. (2006). Learning, Logic, and Probability: A Unified View. In: Staab, S., Svátek, V. (eds) Managing Knowledge in a World of Networks. EKAW 2006. Lecture Notes in Computer Science, vol 4248. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11891451_2

DOI: https://doi.org/10.1007/11891451_2

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-46363-4

Online ISBN: 978-3-540-46365-8

eBook Packages: Computer Science, Computer Science (R0)