Abstract

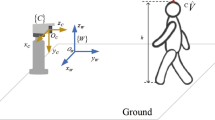

This paper presents a real-time human motion analysis method for human-machine interfaces. In general, a ‘smart’ human-machine interface requires a real-time human motion capture system that works without special devices or markers. Although vision-based motion capture systems need no such devices or markers, they are inherently unstable and can acquire only partial information because of self-occlusion. The problem becomes more severe when full-body motion is analyzed. A robust pose estimation strategy is therefore needed to cope with the relatively poor results of image analysis. To this end, we have developed a method for estimating full-body human postures in which an initial estimate is obtained by real-time inverse kinematics and a more accurate estimate is then searched for, starting from the initial one, by referring to the processed image. The key points are twofold. First, our system combines silhouette contour analysis and color blob analysis to make feature extraction robust. Second, it can estimate full-body postures from limited perceptual cues, such as the positions of the head, hands, and feet, which the feature extraction process can acquire stably. In this paper, we outline this real-time, on-line human motion analysis system.
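The two-stage strategy described above (an initial estimate from inverse kinematics, followed by a search for a better estimate against image evidence) might be sketched roughly as follows. This is not the authors' implementation. The planar two-link limb, the function names `two_link_ik`, `forward`, and `refine`, the step sizes, and the generic `cost` callback that stands in for image evidence are all assumptions made for illustration.

```python
import math

def two_link_ik(tx, ty, l1, l2):
    """Analytic IK for a planar two-link limb rooted at the origin (assumed model).

    Returns (root_angle, middle_angle) placing the end effector at (tx, ty),
    or as close as the limb can reach if the target is out of range.
    """
    d = math.hypot(tx, ty)
    d = max(abs(l1 - l2), min(l1 + l2, d))  # clamp to the reachable range
    cos_mid = (d * d - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    mid = math.acos(max(-1.0, min(1.0, cos_mid)))
    root = math.atan2(ty, tx) - math.atan2(l2 * math.sin(mid),
                                           l1 + l2 * math.cos(mid))
    return root, mid

def forward(root, mid, l1, l2):
    """Forward kinematics: positions of the middle joint and the end effector."""
    jx, jy = l1 * math.cos(root), l1 * math.sin(root)
    ex = jx + l2 * math.cos(root + mid)
    ey = jy + l2 * math.sin(root + mid)
    return (jx, jy), (ex, ey)

def refine(angles, cost, step=0.2, iters=30):
    """Coordinate-wise local search around the IK estimate.

    `cost` is a hypothetical callback scoring how poorly a pose matches
    the processed image; lower is better.
    """
    best = list(angles)
    best_cost = cost(best)
    for _ in range(iters):
        improved = False
        for i in range(len(best)):
            for delta in (step, -step):
                cand = list(best)
                cand[i] += delta
                c = cost(cand)
                if c < best_cost:
                    best, best_cost, improved = cand, c, True
        if not improved:
            step *= 0.5  # shrink the neighbourhood when no move helps
    return best, best_cost
```

In this sketch the end-effector target would come from a tracked cue such as a hand or foot position, and `cost` could, for example, penalise the distance between the predicted middle joint and a point suggested by the silhouette. Both choices are illustrative rather than taken from the paper.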

Copyright information

© 2002 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Hoshino, R., Arita, D., Yonemoto, S., Taniguchi, Ri. (2002). Real-Time Human Motion Analysis Based on Analysis of Silhouette Contour and Color Blob. In: Perales, F.J., Hancock, E.R. (eds) Articulated Motion and Deformable Objects. AMDO 2002. Lecture Notes in Computer Science, vol 2492. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-36138-3_8

DOI: https://doi.org/10.1007/3-540-36138-3_8

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-00149-2

Online ISBN: 978-3-540-36138-1

eBook Packages: Springer Book Archive