Abstract

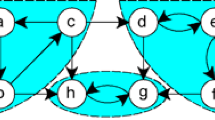

We present the Q-Cut algorithm, a graph-theoretic approach for automatic detection of sub-goals in a dynamic environment, which is used to accelerate the Q-Learning algorithm. The learning agent creates an on-line map of the process history and uses an efficient Max-Flow/Min-Cut algorithm to identify bottlenecks. The policies for reaching bottlenecks are learned separately and added to the model in the form of options (macro-actions). We then extend the basic Q-Cut algorithm to the Segmented Q-Cut algorithm, which uses previously identified bottlenecks to partition the state space, a step that is necessary for finding additional bottlenecks in complex environments. Experiments show significant performance improvements, particularly in the initial learning phase.
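The bottleneck-detection step can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that arc capacities are raw counts of observed transitions, uses a plain Edmonds-Karp max-flow in place of whichever efficient Max-Flow/Min-Cut routine the paper employs, and omits any cut-quality test. The names `build_capacities`, `min_cut`, and `history`, as well as the toy two-room state labels, are hypothetical.

```python
from collections import defaultdict, deque


def build_capacities(transitions):
    """Turn observed (state, next_state) pairs into arc capacities (visit counts)."""
    cap = defaultdict(lambda: defaultdict(int))
    for s, t in transitions:
        cap[s][t] += 1
        cap[t][s] += 0  # ensure the reverse residual arc exists
    return cap


def min_cut(cap, source, sink):
    """Edmonds-Karp max-flow. Returns (flow value, arcs crossing the minimum cut)."""
    res = {u: dict(nbrs) for u, nbrs in cap.items()}  # residual capacities
    flow = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in res[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            break  # no augmenting path left: the flow is maximal
        # Walk back from the sink to recover the path, then augment along it.
        path = []
        v = sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        push = min(res[u][v] for u, v in path)
        for u, v in path:
            res[u][v] -= push
            res[v][u] += push
        flow += push
    # States still reachable from the source form the source side of the cut.
    reachable = set(parent)
    cut = sorted(
        (u, v)
        for u in reachable
        for v, c in cap[u].items()
        if c > 0 and v not in reachable
    )
    return flow, cut


# Toy history: two densely visited "rooms" joined by a rarely crossed doorway d -> b1.
history = (
    [("a1", "a2")] * 3 + [("a1", "a3")] * 3
    + [("a2", "d")] * 3 + [("a3", "d")] * 3
    + [("d", "b1")] * 2
    + [("b1", "b2")] * 3 + [("b1", "b3")] * 3 + [("b3", "b2")] * 3
)
flow, cut = min_cut(build_capacities(history), source="a1", sink="b2")
print(flow, cut)  # 2 [('d', 'b1')]
```

In this toy run the only arc crossing the minimum cut is the doorway transition, so its endpoint states are the natural sub-goal candidates for which an option would then be learned.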

Copyright information

© 2002 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Menache, I., Mannor, S., Shimkin, N. (2002). Q-Cut—Dynamic Discovery of Sub-goals in Reinforcement Learning. In: Elomaa, T., Mannila, H., Toivonen, H. (eds) Machine Learning: ECML 2002. Lecture Notes in Computer Science, vol 2430. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-36755-1_25

DOI: https://doi.org/10.1007/3-540-36755-1_25

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-44036-9

Online ISBN: 978-3-540-36755-0

eBook Packages: Springer Book Archive