Abstract

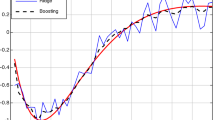

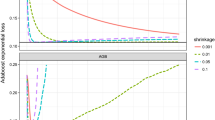

One basic property of the boosting algorithm is its ability to reduce the training error, subject to the critical assumption that the base learners generate ‘weak’ (or, more appropriately, ‘weakly accurate’) hypotheses that are better than random guessing. We exploit analogies between regression and classification to characterize which base learners generate weak hypotheses, by introducing a geometric concept called the angular span of the base hypothesis space. The exponential convergence rates of boosting algorithms are shown to be bounded below, essentially, by the angular spans. Sufficient conditions for a nonzero angular span are also given and validated for a wide class of regression and classification systems.
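The link between a nonzero angular span and exponential decay of the training error can be illustrated numerically. The sketch below rests on an assumption the abstract does not spell out: on a finite sample, the angular span is taken to be the smallest, over nonzero residual directions r, of the largest |cosine| between r and any base hypothesis. Under that assumption, greedy least-squares boosting (matching pursuit) with an exact line search satisfies ||r_{t+1}||^2 = (1 - c_t^2) ||r_t||^2, where c_t is the best cosine at round t and is at least the span, so the squared training error decays at least like (1 - span^2)^t. The stump dictionary, the function names (`stump_dictionary`, `l2_boost`, `sampled_angular_span`) and the toy data are hypothetical illustrations, not the paper's construction.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    return math.sqrt(dot(u, u))


def stump_dictionary(xs):
    """Hypothetical base hypothesis space on 1-D inputs: the constant 1 plus
    threshold stumps h(x) = +1 if x > t else -1. Each hypothesis is stored
    as its vector of values on the training sample."""
    thresholds = sorted(set(xs))
    dictionary = [[1.0] * len(xs)]
    for t in thresholds[:-1]:
        dictionary.append([1.0 if x > t else -1.0 for x in xs])
    return dictionary


def best_cosine(residual, dictionary):
    """Return the base hypothesis with the largest |cos angle| to the residual,
    together with that cosine."""
    rn = norm(residual)
    best_h, best_c = None, -1.0
    for h in dictionary:
        c = abs(dot(residual, h)) / (rn * norm(h))
        if c > best_c:
            best_h, best_c = h, c
    return best_h, best_c


def l2_boost(ys, dictionary, rounds):
    """Greedy least-squares boosting (matching pursuit): each round picks the
    hypothesis most aligned with the residual and takes the exact line-search
    step along it. Returns residual norms (rounds 0..T) and per-round cosines."""
    residual = list(ys)
    norms, cosines = [norm(residual)], []
    for _ in range(rounds):
        if norms[-1] == 0.0:
            break  # training error is already zero
        h, c = best_cosine(residual, dictionary)
        step = dot(residual, h) / dot(h, h)
        residual = [r - step * hv for r, hv in zip(residual, h)]
        norms.append(norm(residual))
        cosines.append(c)
    return norms, cosines


def sampled_angular_span(dictionary, n_samples=2000, seed=0):
    """Monte Carlo estimate of the minimum over directions r of best_cosine(r)
    (assumed definition). Checking finitely many r can only overestimate
    the true infimum."""
    rng = random.Random(seed)
    n = len(dictionary[0])
    worst = 1.0
    for _ in range(n_samples):
        r = [rng.gauss(0.0, 1.0) for _ in range(n)]
        worst = min(worst, best_cosine(r, dictionary)[1])
    return worst


# Toy regression problem (hypothetical data, for illustration only).
rng = random.Random(1)
xs = [rng.uniform(0.0, 1.0) for _ in range(8)]
ys = [math.sin(6.0 * x) + rng.gauss(0.0, 0.1) for x in xs]
D = stump_dictionary(xs)
norms, cosines = l2_boost(ys, D, rounds=20)
# Each round shrinks ||r||^2 by exactly (1 - c_t^2), and every c_t is at least
# the true span (which span_est can only overestimate).
span_est = sampled_angular_span(D)
print("sampled span estimate:", round(span_est, 3))
print("smallest per-round cosine:", round(min(cosines), 3))
print("||r_20||^2 / ||r_0||^2 =", round(norms[-1] ** 2 / norms[0] ** 2, 4))
```

On n distinct inputs the constant plus the n - 1 stumps span all of R^n, so no residual is orthogonal to every hypothesis and the assumed span is positive. Dropping the constant hypothesis, or using repeated x values, leaves some residual direction orthogonal to the whole dictionary, which drives the assumed span to zero and removes the guaranteed contraction.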

Copyright information

© 2000 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Jiang, W. (2000). Some Results on Weakly Accurate Base Learners for Boosting Regression and Classification. In: Multiple Classifier Systems. MCS 2000. Lecture Notes in Computer Science, vol 1857. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-45014-9_8

DOI: https://doi.org/10.1007/3-540-45014-9_8

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-67704-8

Online ISBN: 978-3-540-45014-6

eBook Packages: Springer Book Archive