Abstract

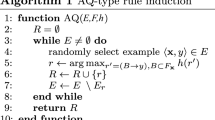

Decision tree learning has become a popular and practical method in data mining because of its high predictive accuracy and ease of use. However, a set of if-then rules derived from a large tree may be preferable in many cases, for at least three reasons: (i) large decision trees are difficult to understand, because their hierarchical structure is hard to see and users can get lost navigating them; (ii) the tree structure may fragment individual subconcepts (this is sometimes known as the “replicated subtree” problem); and (iii) newly discovered rules are easier to combine with existing knowledge in a given domain. To meet that need, the popular decision tree learning system C4.5 applies a rule post-pruning algorithm, C4.5rules, to transform a decision tree into a rule set. However, because it uses a global optimization strategy, C4.5rules runs extremely slowly on large datasets. On the other hand, algorithms that learn a set of rules with the separate-and-conquer strategy, such as CN2, IREP, or RIPPER, can scale to large datasets, but they suffer from the crucial problem of overpruning and often do not achieve accuracy as high as that of C4.5. This paper proposes a scalable algorithm for rule post-pruning of large decision trees that employs an improved form of incremental pruning to overcome the overpruning problem. Experiments show that the new algorithm can produce rule sets that are as accurate as those generated by C4.5 and that it scales to large datasets.
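
The abstract contrasts several ways of turning a tree into a rule set. As a rough illustration of the basic idea only, the Python sketch below converts each root-to-leaf path of a toy decision tree into an if-then rule, greedily deletes conditions from each rule as long as an accuracy estimate on held-out pruning data does not fall, and orders the pruned rules into a decision list, in the spirit of the textbook rule post-pruning described by Mitchell (1997). It is not the authors' algorithm (whose improved incremental pruning is not specified in this abstract), and it reproduces neither C4.5rules, which relies on a pessimistic error estimate and a global optimization step over rule subsets, nor IREP/RIPPER, which prune each rule right after growing it and then remove the examples it covers. All names (`Leaf`, `Node`, `Rule`, `tree_to_rules`, `rule_value`, `prune_rule`, `post_prune`, `classify`), the equality-only tests, and the two-class Laplace estimate are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Dict, List, Tuple, Union

# Illustrative sketch only: not C4.5rules, IREP/RIPPER, or the paper's algorithm.
Condition = Tuple[str, str]     # hypothetical: (attribute, value) equality test
Example = Dict[str, str]        # attribute -> value
Labelled = Tuple[Example, str]  # (example, class label)


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    attribute: str
    children: Dict[str, Union["Node", Leaf]]  # one subtree per attribute value


@dataclass
class Rule:
    conditions: List[Condition]
    label: str

    def covers(self, x: Example) -> bool:
        return all(x.get(a) == v for a, v in self.conditions)


def tree_to_rules(tree: Union[Node, Leaf], path: Tuple[Condition, ...] = ()) -> List[Rule]:
    """Turn every root-to-leaf path into one if-then rule."""
    if isinstance(tree, Leaf):
        return [Rule(list(path), tree.label)]
    rules: List[Rule] = []
    for value, child in tree.children.items():
        rules.extend(tree_to_rules(child, path + ((tree.attribute, value),)))
    return rules


def rule_value(rule: Rule, data: List[Labelled]) -> Fraction:
    """Two-class Laplace estimate (p + 1) / (covered + 2) on the pruning data."""
    covered = [y for x, y in data if rule.covers(x)]
    p = sum(1 for y in covered if y == rule.label)
    return Fraction(p + 1, len(covered) + 2)


def prune_rule(rule: Rule, data: List[Labelled]) -> Rule:
    """Greedily delete single conditions while the estimate does not decrease."""
    best, best_value = rule, rule_value(rule, data)
    changed = True
    while changed:
        changed = False
        for i in range(len(best.conditions)):
            candidate = Rule(best.conditions[:i] + best.conditions[i + 1:], best.label)
            value = rule_value(candidate, data)
            if value >= best_value:
                # Accept the first non-worsening deletion and rescan; every accepted
                # step removes a condition, so the loop always terminates.
                best, best_value, changed = candidate, value, True
                break
    return best


def post_prune(tree: Union[Node, Leaf], data: List[Labelled]) -> List[Rule]:
    """Prune each path rule independently, then sort by estimate (highest first)."""
    pruned = [prune_rule(r, data) for r in tree_to_rules(tree)]
    # sorted() is stable, so rules with equal estimates keep their path order.
    return sorted(pruned, key=lambda r: rule_value(r, data), reverse=True)


def classify(rules: List[Rule], x: Example, default: str) -> str:
    """Decision-list prediction: the first rule that covers x decides."""
    for rule in rules:
        if rule.covers(x):
            return rule.label
    return default


if __name__ == "__main__":
    tree = Node("outlook", {
        "sunny": Node("humidity", {"high": Leaf("no"), "normal": Leaf("yes")}),
        "overcast": Leaf("yes"),
        "rain": Node("wind", {"strong": Leaf("no"), "weak": Leaf("yes")}),
    })
    prune_data = [
        ({"outlook": "sunny", "humidity": "high", "wind": "weak"}, "no"),
        ({"outlook": "rain", "humidity": "high", "wind": "strong"}, "no"),
        ({"outlook": "sunny", "humidity": "normal", "wind": "weak"}, "yes"),
        ({"outlook": "overcast", "humidity": "high", "wind": "weak"}, "yes"),
        ({"outlook": "rain", "humidity": "normal", "wind": "weak"}, "yes"),
    ]
    for rule in post_prune(tree, prune_data):
        print(rule.conditions, "->", rule.label)
```

On this toy pruning set, the sunny/normal-humidity rule shrinks to `humidity = normal -> yes` and moves to the front of the list, the two rain rules lose their `outlook` test, and the sunny/high-humidity rule keeps both conditions because dropping either one lowers its estimate. Accepting deletions on ties (`>=`) biases the sketch toward shorter rules; a strict `>` would prune more conservatively. `Fraction` is used so that ties between estimates are compared exactly rather than through floating-point rounding.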

References

Brunk, C.A., Pazzani, M.J.: An Investigation of Noise-Tolerant Relational Concept Learning Algorithms. Proceedings of the 8th International Workshop on Machine Learning (1991), 389–393.

Clark, P., Niblett, T.: The CN2 Induction Algorithm. Machine Learning, 3 (1989), 261–283.

Cohen, W.W.: Fast Effective Rule Induction. Proceedings of the 12th International Conference on Machine Learning, Morgan Kaufmann (1995), 115–123.

Cohen, W.W.: Efficient Pruning Methods for Separate-and-Conquer Rule Learning Systems. Proceedings of the 13th International Joint Conference on Artificial Intelligence, Chambéry, France (1993), 988–995.

Frank, E., Witten, I.H.: Generating Accurate Rule Sets Without Global Optimization. Proceedings of the 15th International Conference on Machine Learning, Morgan Kaufmann (1998), 144–151.

Fürnkranz, J.: Pruning Algorithms for Rule Learning. Machine Learning, 27 (1997), 139–171.

Fürnkranz, J., Widmer, G.: Incremental Reduced Error Pruning. Proceedings of the 11th International Conference on Machine Learning, Morgan Kaufmann (1994), 70–77.

Mitchell, T.M.: Machine Learning, McGraw-Hill (1997).

Nguyen, T.D., Ho, T.B.: An Interactive-Graphic System for Decision Tree Induction. Journal of Japanese Society for Artificial Intelligence, 14(1) (1999), 131–138.

Pagallo, G. and Haussler, D.: Boolean Feature Discovery in Empirical Learning. Machine Learning, 5 (1990), 71–99.

Quinlan, J.R.: C4.5: Programs for Machine Learning, Morgan Kaufmann (1993).

Rivest, R.L.: Learning Decision Lists. Machine Learning, 2 (1987), 229–246.

Copyright information

© 2001 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Nguyen, T.D., Ho, T.B., Shimodaira, H. (2001). A Scalable Algorithm for Rule Post-pruning of Large Decision Trees. In: Cheung, D., Williams, G.J., Li, Q. (eds) Advances in Knowledge Discovery and Data Mining. PAKDD 2001. Lecture Notes in Computer Science, vol 2035. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-45357-1_49

DOI: https://doi.org/10.1007/3-540-45357-1_49

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-41910-5

Online ISBN: 978-3-540-45357-4

eBook Packages: Springer Book Archive