Abstract

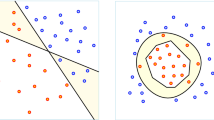

We study the proper learnability of axis parallel concept classes in the PAC learning model and in the exact learning model with membership and equivalence queries. These classes include unions of boxes, DNF, decision trees and multivariate polynomials. For constant dimensional axis parallel concept classes C we show that the following problems have the same time complexity:

1. C is α-properly exactly learnable (with hypotheses of size at most α times the target size) from membership and equivalence queries.

2. C is α-properly PAC learnable (without membership queries) under any product distribution.

3. There is an α-approximation algorithm for the MinEqui C problem (given a g ∈ C, find a minimal size f ∈ C that is equivalent to g).
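To make the objects in these three statements concrete, here is a minimal sketch for one of the listed classes, unions of axis parallel boxes. It is illustrative only and is not the paper's algorithm. It assumes a discrete domain {0, ..., n-1}^d, takes the size of a concept to be its number of boxes, and expects the caller to supply the optimal size. The function names are hypothetical.

```python
from itertools import product

# Assumption: a box is a tuple of closed integer intervals (lo, hi), one per
# dimension, and a concept is a list of boxes (their union).

def in_box(point, box):
    """Return True if the point lies inside the axis parallel box."""
    return all(lo <= x <= hi for x, (lo, hi) in zip(point, box))

def evaluate(boxes, point):
    """Evaluate a union of boxes on a point."""
    return any(in_box(point, b) for b in boxes)

def equivalent(f, g, n, d):
    """Brute-force equivalence check over the domain {0..n-1}^d.

    This enumerates n**d points, so it is only feasible for small n and d.
    """
    return all(
        evaluate(f, p) == evaluate(g, p)
        for p in product(range(n), repeat=d)
    )

def is_alpha_approximation(f, g, optimal_size, alpha, n, d):
    """Check that f is equivalent to g and uses at most alpha * optimal_size boxes.

    optimal_size is assumed to be known; computing it is the hard part of
    the MinEqui problem and is not attempted here.
    """
    return equivalent(f, g, n, d) and len(f) <= alpha * optimal_size

# Two adjacent boxes that together form a single 4 x 4 square.
g = [((0, 1), (0, 3)), ((2, 3), (0, 3))]
# An equivalent representation using one box.
f = [((0, 3), (0, 3))]

print(equivalent(f, g, n=4, d=2))
print(is_alpha_approximation(f, g, optimal_size=1, alpha=1, n=4, d=2))
```

In this toy instance g uses two boxes while the equivalent f uses one, so f is a minimal size representation of g under the assumed size measure.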

In particular, C is α-properly learnable in polynomial time from membership and equivalence queries if and only if C is α-properly PAC learnable in polynomial time under any product distribution, if and only if MinEqui C has a polynomial time α-approximation algorithm. Using this result we give the first proper learning algorithm for decision trees over a constant dimensional domain and the first negative results for proper learning from membership and equivalence queries for many classes.

For the non-constant dimensional axis parallel concepts we show that with the equivalence oracle (1) ⇒ (3). We use this to show that (binary) decision trees are not properly learnable in polynomial time (assuming P ≠ NP) and DNF is not s^ε-properly learnable (ε < 1) in polynomial time even with an NP-oracle (assuming Σ_2^p ≠ P^NP).

This research was supported by the fund for promotion of research at the Technion. Research no. 120-025. Part of this research was done at the University of Calgary, Calgary, Alberta, Canada and supported by NSERC of Canada.

This research was supported by an NSERC PGS-B Scholarship, an Izaak Walton Killam Memorial Scholarship, and an Alberta Informatics Circle of Research Excellence (iCORE) Fellowship.

© 2002 Springer-Verlag Berlin Heidelberg

Bshouty, N.H., Burroughs, L. (2002). On the Proper Learning of Axis Parallel Concepts. In: Kivinen, J., Sloan, R.H. (eds) Computational Learning Theory. COLT 2002. Lecture Notes in Computer Science, vol 2375. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-45435-7_20

DOI: https://doi.org/10.1007/3-540-45435-7_20

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-43836-6

Online ISBN: 978-3-540-45435-9

eBook Packages: Springer Book Archive