Abstract

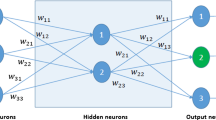

When neural networks are trained on a large amount of data for classification, learning complexity generally becomes a problem. In this paper, a new geometrical interpretation of the McCulloch-Pitts (M–P) neuron model is presented, and a new constructive learning approach based on this interpretation is discussed. Experimental results show that the new algorithm can greatly reduce learning complexity and can be applied to real classification problems involving a vast amount of data.
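The abstract does not spell out the geometrical interpretation, so the Python sketch below is only a hedged illustration under an assumption of our own: every input x is lifted onto a sphere of radius R via x' = (x, sqrt(R^2 - |x|^2)). For a weight vector w on that sphere, |x' - w|^2 = 2R^2 - 2 w·x'. A threshold (M–P) unit that fires when w·x' - θ > 0 therefore fires exactly when x' lies within distance sqrt(2R^2 - 2θ) of w, that is, inside a ball. The helper names (`lift`, `ball_neuron`, `train_cover`, `predict`) and the greedy covering rule are hypothetical. This is not the authors' algorithm, just one way such a geometric view can yield a constructive learner.

```python
import math

# Hypothetical sketch, not the authors' algorithm: assumes inputs are lifted
# onto a sphere so that each threshold (M-P) unit fires inside a ball.


def lift(x, R):
    """Map x in R^n onto the sphere of radius R in R^(n+1); requires |x| <= R."""
    sq = sum(v * v for v in x)
    if sq > R * R:
        raise ValueError("R must be at least |x| for every input")
    return tuple(x) + (math.sqrt(R * R - sq),)


def dist2(a, b):
    """Squared Euclidean distance."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def activation(w, theta, x):
    """Net input of an M-P unit: w.x - theta."""
    return sum(p * q for p, q in zip(w, x)) - theta


def mp_neuron(w, theta, x):
    """McCulloch-Pitts unit: outputs 1 if w.x - theta > 0, else 0."""
    return 1 if activation(w, theta, x) > 0 else 0


def ball_neuron(center, r, R):
    """Weights and threshold of a unit that fires iff |x' - center| < r.

    For points on the sphere, |x' - w|^2 = 2R^2 - 2 w.x', so
    w.x' > R^2 - r^2/2 is equivalent to |x' - w| < r.
    """
    return center, R * R - r * r / 2.0


def train_cover(X, y, R):
    """Greedy covering: centre a unit on each still-uncovered sample, with
    radius equal to the distance to the nearest sample of another class."""
    pts = [lift(x, R) for x in X]
    covered = [False] * len(pts)
    units = []  # (weights, threshold, label) triples
    for i, p in enumerate(pts):
        if covered[i]:
            continue
        # 2R is the sphere's diameter, used when no other class exists.
        r = min((math.sqrt(dist2(p, q)) for q, lab in zip(pts, y) if lab != y[i]),
                default=2.0 * R)
        w, theta = ball_neuron(p, r, R)
        units.append((w, theta, y[i]))
        # Mark every same-class sample this unit fires on as covered.
        for j, q in enumerate(pts):
            if y[j] == y[i] and mp_neuron(w, theta, q):
                covered[j] = True
    return units


def predict(units, x, R):
    """Label of the unit with the largest net input; if any unit fires,
    the chosen one does too."""
    p = lift(x, R)
    return max(units, key=lambda u: activation(u[0], u[1], p))[2]
```

On the XOR-style set X = [(0, 0), (1, 1), (0, 1), (1, 0)] with labels [0, 0, 1, 1] and R = 2.0, the sketch builds four units because no same-class point falls inside another's radius, and `predict` recovers the training labels. Each unit is an ordinary M–P neuron whose weights and threshold are set directly from the data, so the hidden layer is constructed without iterative weight updates.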

Supported by the National Natural Science Foundation of China and the National Basic Research Program of China.




Copyright information

© 1999 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Zhang, L., Zhang, B. (1999). Neural Network Based Classifiers for a Vast Amount of Data. In: Zhong, N., Zhou, L. (eds) Methodologies for Knowledge Discovery and Data Mining. PAKDD 1999. Lecture Notes in Computer Science, vol 1574. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-48912-6_32


DOI: https://doi.org/10.1007/3-540-48912-6_32


Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-65866-5

Online ISBN: 978-3-540-48912-2

eBook Packages: Springer Book Archive