Abstract

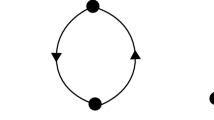

We prove that NARX neural networks, a class of architectures popular in control applications and other problems, are at least as powerful as fully connected recurrent neural networks. Recent results have shown that fully connected recurrent networks are Turing equivalent. Building on those results, we prove that NARX networks are also universal computation devices. NARX networks have limited feedback, which comes only from the output neuron rather than from hidden states. There is much interest in the amount and type of recurrence to be used in recurrent neural networks. Our results raise the question of how much feedback or recurrence a network needs in order to be Turing equivalent, and which restrictions on feedback limit computational power.
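To make the architectural restriction concrete, the following is a minimal sketch of a NARX-style recurrence, in which the only state carried between time steps is a tapped delay line of past inputs and past outputs. It is an illustration under stated assumptions, not the construction used in the paper: the single tanh hidden layer, the zero initial conditions, and all names (`narx_step`, `run_narx`, `W_in`, `W_fb`, `n_u`, `n_y`) are hypothetical choices made for this example.

```python
import math
from collections import deque


def narx_step(u_taps, y_taps, W_in, W_fb, b_hidden, w_out, b_out):
    """Compute one output from delayed inputs and delayed outputs.

    Assumption: a single tanh hidden layer with a linear output neuron.
    No hidden activation is fed back; the hidden layer is recomputed
    from the delay lines at every step.
    """
    hidden = []
    for w_u, w_y, b in zip(W_in, W_fb, b_hidden):
        pre = b
        pre += sum(w * u for w, u in zip(w_u, u_taps))  # input taps
        pre += sum(w * y for w, y in zip(w_y, y_taps))  # output feedback taps
        hidden.append(math.tanh(pre))
    return sum(w * h for w, h in zip(w_out, hidden)) + b_out


def run_narx(inputs, n_u, n_y, params):
    """Run the network over a sequence, feeding back only the output."""
    # Delay lines start at zero (an assumed initial condition).
    u_taps = deque([0.0] * n_u, maxlen=n_u)
    y_taps = deque([0.0] * n_y, maxlen=n_y)
    outputs = []
    for u in inputs:
        u_taps.appendleft(u)            # newest input first, oldest dropped
        y = narx_step(u_taps, y_taps, *params)
        y_taps.appendleft(y)            # only the output re-enters the network
        outputs.append(y)
    return outputs


# Hypothetical parameters: one hidden unit, one input tap, one output tap.
params = ([[0.0]], [[1.0]], [0.0], [1.0], 1.0)
trace = run_narx([0.3, 0.3, 0.3], n_u=1, n_y=1, params=params)
```

In this sketch, a fully connected recurrent network would differ by also carrying the `hidden` vector from one step to the next; here it is discarded after each step, which is the limited feedback the abstract refers to.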

Copyright information

© 1995 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Horne, B.G., Siegelmann, H.T., Giles, C.L. (1995). What NARX networks can compute. In: Bartosek, M., Staudek, J., Wiedermann, J. (eds) SOFSEM '95: Theory and Practice of Informatics. SOFSEM 1995. Lecture Notes in Computer Science, vol 1012. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-60609-2_5

DOI: https://doi.org/10.1007/3-540-60609-2_5

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-60609-3

Online ISBN: 978-3-540-48463-9

eBook Packages: Springer Book Archive