Abstract

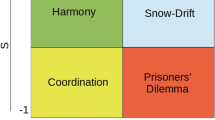

Approaches to coordination in multi-agent systems have mostly taken communication for granted. In societies of individually-motivated agents where communication costs are prohibitive, other mechanisms are needed to allow agents to coordinate when interacting. In this paper, game theory is used as a mathematical tool for modelling the interactions among agents and as a mechanism for coordination with less communication. However, we want to relax the assumption that agents are always rational. In the approach discussed here, agents learn how to coordinate by playing repeatedly with their neighbors. The dynamics of the interaction are modelled by means of genetic operators. In this way, the behavior of the agents, as well as the equilibrium of the system, can adapt to major external perturbations. If the interaction lasts long enough, agents can asymptotically learn new coordination points.
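
The abstract does not give the game, the neighborhood structure, or the exact genetic operators, so the following is only a minimal sketch of the general idea under stated assumptions: agents sit on a ring, play a hypothetical 2x2 coordination game with their two neighbors, copy the action of the fittest agent in their neighborhood (selection), and occasionally flip their action at random (mutation, standing in for non-rational behavior). The payoff matrix `PAYOFF`, the ring topology, and all function names are illustrative and not taken from the paper.

```python
import random

# Hypothetical 2x2 coordination game (an assumption, not the paper's game):
# matching actions pay off, mismatched actions pay nothing.
PAYOFF = [[1.0, 0.0],
          [0.0, 2.0]]

def fitness(actions, payoff=PAYOFF):
    """Total payoff each agent earns against its two ring neighbors."""
    n = len(actions)
    return [payoff[actions[i]][actions[(i - 1) % n]]
            + payoff[actions[i]][actions[(i + 1) % n]]
            for i in range(n)]

def step(actions, rng, mutation_rate=0.01, payoff=PAYOFF):
    """One generation: local selection followed by mutation."""
    n = len(actions)
    fit = fitness(actions, payoff)
    new_actions = []
    for i in range(n):
        # Selection: copy the action of the fittest agent in the local
        # neighborhood. Ties favor the agent itself, then its left neighbor.
        candidates = [i, (i - 1) % n, (i + 1) % n]
        best = max(candidates, key=lambda j: fit[j])
        action = actions[best]
        # Mutation: with small probability, flip the action regardless of payoff.
        if rng.random() < mutation_rate:
            action = 1 - action
        new_actions.append(action)
    return new_actions

def simulate(n_agents=20, generations=200, mutation_rate=0.01, seed=0):
    """Run the repeated local game from a random initial population."""
    rng = random.Random(seed)
    actions = [rng.randrange(2) for _ in range(n_agents)]
    for _ in range(generations):
        actions = step(actions, rng, mutation_rate)
    return actions

if __name__ == "__main__":
    final = simulate()
    print("final actions:", final)
    print("mean fitness:", sum(fitness(final)) / len(final))
```

Changing `PAYOFF` partway through a run is one way to imitate an external perturbation in this toy setting; mutation keeps some variation in the population, so selection still has alternatives to pick up if a different coordination point becomes more rewarding.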

The author is supported by Conselho Nacional de Pesquisa Científica e Tecnológica (CNPq), Brazil.

Copyright information

© 1996 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Bazzan, A.L.C. (1996). Coordination among individually-motivated agents: An evolutionary approach. In: Borges, D.L., Kaestner, C.A.A. (eds) Advances in Artificial Intelligence. SBIA 1996. Lecture Notes in Computer Science, vol 1159. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-61859-7_8

DOI: https://doi.org/10.1007/3-540-61859-7_8

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-61859-1

Online ISBN: 978-3-540-70742-4

eBook Packages: Springer Book Archive