Abstract

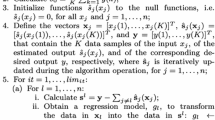

Feature selection is the problem of choosing a small subset of features that is, ideally, necessary and sufficient to describe the target concept. Feature selection is of paramount importance for any learning algorithm. We propose a new feature selection methodology based on the ‘Blurring’ measure and empirically compare it with features selected through information-theoretic measures and stepwise multiple regression analyses. We use neurofuzzy systems to compare the performance of these feature selection methods. Preliminary results on two data sets with the proposed feature selection method are promising.
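The abstract does not spell out which information-theoretic measure is used. As a hedged illustration only, the sketch below ranks discrete features by information gain (the mutual information between a feature and the class), one common information-theoretic selection criterion. It is not the paper's ‘Blurring’ measure, and the function names `entropy`, `information_gain`, and `rank_features` are hypothetical, chosen for this example.

```python
import math
from collections import Counter

# Illustrative filter-style feature ranking by information gain.
# NOTE: a generic information-theoretic criterion, NOT the paper's 'Blurring' measure.
# All function names here are hypothetical.


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of discrete class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(feature, labels):
    """H(class) - H(class | feature) for one discrete feature column."""
    n = len(labels)
    conditional = 0.0
    for value, count in Counter(feature).items():
        # Class labels of the examples that take this feature value.
        subset = [y for x, y in zip(feature, labels) if x == value]
        conditional += (count / n) * entropy(subset)
    return entropy(labels) - conditional


def rank_features(rows, labels, k):
    """Return the indices of the k features with the highest information gain."""
    scores = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        scores.append((information_gain(column, labels), j))
    # Highest gain first; ties broken by lower feature index.
    scores.sort(key=lambda s: (-s[0], s[1]))
    return [j for _, j in scores[:k]]


# Toy example: feature 0 copies the class, feature 1 is unrelated noise.
rows = [(0, 1), (0, 0), (1, 1), (1, 0)]
labels = [0, 0, 1, 1]
selected = rank_features(rows, labels, k=1)
print(selected)
```

Because each feature is scored independently, a filter like this can miss features that are only informative in combination; the best two individual features are not necessarily the best pair.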




Copyright information

© 1996 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Piramuthu, S. (1996). Effects of Feature Selection with ‘Blurring’ on neurofuzzy systems. In: Arikawa, S., Sharma, A.K. (eds) Algorithmic Learning Theory. ALT 1996. Lecture Notes in Computer Science, vol 1160. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-61863-5_41


DOI: https://doi.org/10.1007/3-540-61863-5_41


Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-61863-8

Online ISBN: 978-3-540-70719-6

eBook Packages: Springer Book Archive