Abstract

In the previous chapters, we discussed the fundamentals of BSN hardware and processing techniques, including multi-sensor fusion, context-aware sensing and autonomic sensing. In this chapter, we use bio-motion analysis as an exemplar to demonstrate how some of these methods are applied in practical applications involving multiple wearable sensors.

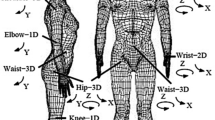

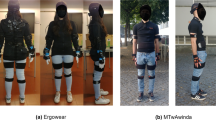

Motion capture (Mocap) and reconstruction is the process of recording the body movement of a human subject or other living being and mapping that movement onto a 3D model so that the model performs the same actions as the subject (De Aguiar et al. 2007). Mocap technology has been used for a variety of applications, from delivering realistic animation in film and entertainment to assessing the performance of professional athletes. Clinically, motion reconstruction systems are increasingly used to analyse the biomechanics of patients. Such analysis provides an objective measure of physical function to aid interventional planning, evaluate the outcomes of surgical procedures, and assess the efficacy of treatment and rehabilitation (King and Paulson 2007; Wong et al. 2012). The motion-tracking technologies developed to date can be broadly classified into optical, mechanical and inertial-sensor-based tracking systems (Yun and Bachmann 2006).

References

De Aguiar E, Theobalt C, Stoll C, and Seidel HP, Marker-less deformable mesh tracking for human shape and motion capture, In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2007; 1–9.

King B and Paulson L, Motion capture moves into new realms, Computer, 2007; 40(9):13–16.

Wong C, Zhang Z, Kwasnicki R, Liu J, and Yang GZ, Motion reconstruction from sparse accelerometer data using PLSR, In: Proceedings of the Ninth International Conference on Wearable and Implantable Body Sensor Networks (BSN), 2012; 178–183.

Yun X and Bachmann E, Design, implementation, and experimental results of a quaternion-based Kalman filter for human body motion tracking, IEEE Transactions on Robotics, 2006; 22(6):1216–1227.

Vicon Motion Capture System, http://www.vicon.com/

BTS SMART-D Motion Capture System, http://www.btsbioengineering.com/

Qualisys Motion Capture Systems, http://www.qualisys.com

OptiTrack Motion Capture Systems, http://www.naturalpoint.com/optitrack/

Moeslund TB, Hilton A, Krüger V, A survey of advances in vision-based human motion capture and analysis, Computer Vision and Image Understanding, 2006; 104(2):90–126.

Vlasic D, Adelsberger R, Vannucci G, Barnwell J, Gross M, Matusik W, Popović J, Practical motion capture in everyday surroundings, ACM Transactions on Graphics (TOG), 2007; 26(3):35.

Hasler N, Rosenhahn B, Thormahlen T, Wand M, Gall J, Seidel HP, Markerless motion capture with unsynchronized moving cameras, In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2009; 224–231.

Liu Y, Stoll C, Gall J, Seidel HP, Theobalt C, Markerless motion capture of interacting characters using multi-view image segmentation, In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2011; 1249–1256.

Organic Motion, http://www.organicmotion.com/

Yoon J, Novandy B, Yoon CH, Park KJ, A 6-DOF gait rehabilitation robot with upper and lower limb connections that allows walking velocity updates on various terrains, IEEE/ASME Transactions on Mechatronics, 2010; 15(2): 201–215.

Schabowsky CN, Godfrey SB, Holley RJ, Lum PS, Development and pilot testing of HEXORR: hand EXOskeleton rehabilitation robot, Journal of Neuroengineering and Rehabilitation, 2010; 7(1):36.

Gypsy 7 Motion Capture System, http://www.metamotion.com/gypsy/gypsy-motion-capture-system.htm

Ekso Bionic Suit, http://www.eksobionics.com/ekso

ArmeoPower Arm Exoskeleton, http://www.hocoma.com/products/armeo/

Lokomat Gait Orthosis, http://www.hocoma.com/products/lokomat/

Tao Y, Hu H, A novel sensing and data fusion system for 3D arm motion tracking in tele-rehabilitation, IEEE Transactions on Instrumentation and Measurement, 2008; 57(5):1029–1040.

Huang S, Sun S, Huang Z, Wu J, Ambulatory real-time micro-sensor motion capture, In: Proceedings of the 11th ACM International Conference on Information Processing in Sensor Networks, 2012; 107–108.

Animazoo Mocap Suits, http://www.animazoo.com/

Roetenberg D, Luinge H and Slycke P, Xsens MVN: full 6DOF human motion tracking using miniature inertial sensors, Xsens Motion Technologies BV Tech. Rep., 2009.

3DSuit 3DTracker, http://www.3dsuit.com/

Micro-Sense Mocap, http://www.microsenstech.com and http://snarc.ia.ac.cn

Zhang Z, Wu J, A novel hierarchical information fusion method for three-dimensional upper limb motion estimation, IEEE Transactions on Instrumentation and Measurement, 2011; 60(11):3709–3719.

McAllister LB, A quick introduction to quaternions, Pi Mu Epsilon Journal, 1989; 9(1):23–25.

Inertial Labs Inc, http://www.inertiallabs.com

Chou JCK, Quaternion kinematic and dynamic differential equations, IEEE Transactions on Robotics and Automation, 1992; 8(1):53–64.

Trawny N and Roumeliotis SI, Indirect Kalman filter for 3D attitude estimation, University of Minnesota, Dept. of Comp. Sci. & Eng., Tech. Rep., 2005.

Kuipers JB, Quaternions and rotation sequences, Princeton University Press, 1999; 127–143.

Sabatini AM, Quaternion-based strap-down integration method for applications of inertial sensing to gait analysis, Medical and Biological Engineering and Computing, 2005; 43(1):94–101.

Chen Z, Bayesian filtering: From Kalman filters to particle filters and beyond, Statistics, 2003; 182(1):1–69.

Arulampalam MS, Maskell S, Gordon N, Clapp T, A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking, IEEE Transactions on Signal Processing, 2002; 50(2):174–188.

Koch W, On Bayesian tracking and data fusion: a tutorial introduction with examples, IEEE Aerospace and Electronic Systems Magazine, 2010; 25(7):29–52.

Hol JD, Schön TB, Luinge H, Slycke PJ and Gustafsson F, Robust real-time tracking by fusing measurements from inertial and vision sensors, Journal of Real-Time Image Processing, 2007; 2(2):149–160.

Jurman D, Jankovec M, Kamnik R, Topič M, Calibration and data fusion solution for the miniature attitude and heading reference system, Sensors and Actuators A: Physical, 2007; 138(2):411–420.

Shen SC, Chen CJ and Huang HJ, A new calibration method for MEMS inertial sensor module, In: Proceedings of the 11th IEEE International Workshop on Advanced Motion Control, 2010.

Zhang Z, Yang GZ, Calibration of miniature inertial and magnetic sensor units for robust attitude estimation, IEEE Transactions on Instrumentation and Measurement, 2014; 63(3):711–718.

Kraft E, A quaternion-based unscented Kalman filter for orientation tracking, In: Proceedings of the sixth International Conference of Information Fusion, 2003.

Zhang ZQ, Ji LY, Huang ZP and Wu JK, Adaptive Information Fusion for Human Upper Limb Movement Estimation, IEEE Transactions on Systems, Man and Cybernetics, Part A: Systems and Humans, 2012; 42(5):1100–1108.

Luinge H and Veltink P, Inclination measurement of human movement using a 3-D accelerometer with autocalibration, IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2004; 12(1):112–121.

Young A, Use of body model constraints to improve accuracy of inertial motion capture, In: Proceedings of International Conference on Wearable and Implantable Body Sensor Networks (BSN), 2010; 180–186.

Sabatini A, Quaternion-based extended Kalman filter for determining orientation by inertial and magnetic sensing, IEEE Transactions on Biomedical Engineering, 2006; 53(7):1346–1356.

Kang C and Park C, Attitude estimation with accelerometers and gyros using fuzzy tuned Kalman filter, In: Proceedings of European Control Conference, 2009; 3713–3718.

Sun S, Meng X, Ji L, Wu J, Wong WC, Adaptive sensor data fusion in motion capture, In: Proceedings of 13th Conference on Information Fusion, 2010; 1–8.

Zhang Z, Meng X, Wu J, Quaternion-Based Kalman Filter With Vector Selection for Accurate Orientation Tracking, IEEE Transactions on Instrumentation and Measurement, 2012; 61(10):2817–2824.

Meng XL, Zhang ZQ, Sun SY, Wu JK, Wong WC, Biomechanical model-based displacement estimation in micro-sensor motion capture, Measurement Science and Technology, 2012; 23(5):055101.

Maróti M, Kusy B, Simon G and Lédeczi Á, The flooding time synchronization protocol, In: Proceedings of the 2nd International Conference on Embedded Networked Sensor Systems, 2004; 39–49.

TinyOS operating system, http://www.tinyos.net/

Copyright information

© 2014 Springer-Verlag London

About this chapter

Cite this chapter

Zhang, Z., Panousopoulou, A., Yang, GZ. (2014). Wearable Sensor Integration and Bio-motion Capture: A Practical Perspective. In: Yang, GZ. (eds) Body Sensor Networks. Springer, London. https://doi.org/10.1007/978-1-4471-6374-9_12

DOI: https://doi.org/10.1007/978-1-4471-6374-9_12

Publisher Name: Springer, London

Print ISBN: 978-1-4471-6373-2

Online ISBN: 978-1-4471-6374-9

eBook Packages: Computer Science, Computer Science (R0)