Abstract

Semantic Web Technologies and Human Computation systems hold great promise when used together. Human Computation has been used to help curate Semantic Web data in the Linked Open Data cloud. In turn, the Semantic Web could be used to provide better user continuity and platform consistency across Human Computation systems, allowing more complex, connected and global Human Computation systems to be explored. In this chapter we survey previous work combining the Semantic Web and Human Computation, and raise some challenges and research goals for future work.

Introduction

Human computation has come a long way in the pursuit of joining the abilities of humans and machines to solve interesting problems that neither could solve alone. While human computation has seen great success in everything from annotating images (Von Ahn 2006) to building global knowledge repositories (Yuen et al. 2009), these systems are still confined to the platforms and data models they were built on. They are silos of services and data, disconnected from each other at both the data and the application level. They are usually single-purpose and not general enough to be used with new or different data, or in situations other than those they were built for. The datasets and data models they produce and use can also be difficult to repurpose or reuse. Users cannot easily move from one system to the next; instead, they must create completely new accounts, with a new identity and a separate reputation. Their past work and reputation do not transfer to other human computation systems.

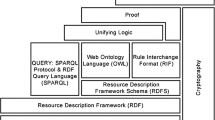

Semantic Web and Linked Data

In the past decade, Semantic Web technologies and Linked Open Data have addressed many of the challenges of linking heterogeneous data and services together (Shadbolt et al. 2006). The World Wide Web Consortium (W3C) defines the Semantic Web as “a common framework that allows data to be shared and reused across application, enterprise, and community boundaries”. Whereas the World Wide Web is a collection of linked documents, the Semantic Web is a web of data, concepts and ideas, and of the relationships that connect them. This technology allows data to be integrated in a seamless, schema-less fashion. It moves us beyond the unstructured “throw-away” data often produced by human computation systems toward a more sophisticated system that attempts to bridge the worlds of knowledge representation (the study of how best to represent and encode knowledge) and information presentation (how that information should be displayed) (van Ossenbruggen and Hardman 2002). Structuring and connecting raw data in this way makes its implicit semantics explicit, allowing both machines and humans to gain new information that may previously have been hidden.

The Linked Open Data Cloud is a realization of this web of data. It is a network of over 295 different heterogeneous datasets (covering domains in Life Science and Health Care, Government, Finance, Academic Publications, Geographic, Media and User-generated Content) linked together. A visualization of this data cloud along with statistics can be seen at http://lod-cloud.net/.

While the LOD cloud has grown greatly in recent years thanks to the ‘publish first, refine later’ philosophy of the Linking Open Data movement, groups like the Pedantic Web have revealed issues with data quality and consistency in Linked Open Data (Simperl et al. 2011).

Take, for example, DBpedia, a semantic version of the information found in Wikipedia articles and one of the central nodes in the Linked Open Data Cloud (Auer et al. 2007). DBpedia has an entry for every county in the US, but it is impossible to automatically query or list all of these entries, because their labels are inconsistent or, in many cases, missing. This makes the data difficult, if not impossible, to use. Such errors are hard to find using automated methods and are often left for the community at large to resolve (Simperl et al. 2011). However, the incentive structures for this community to help improve the quality and consistency of Linked Data (assuming procedures and interfaces that allow this even exist) are usually flawed, as there is a disconnect between the effort involved and the benefit received. Human computation may be able to fix the broken incentive scheme of Linked Open Data (Siorpaes and Hepp 2008a).
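Some of this curation work can at least be triaged automatically. The sketch below is a minimal illustration using plain Python tuples rather than an RDF library: it flags members of a class that have no `rdfs:label` at all. The class and entity URIs under `http://example.org/` are hypothetical placeholders, not actual DBpedia identifiers, and labels that exist but are inconsistent would still need human judgment.

```python
# Triples are (subject, predicate, object) tuples of strings.
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
EX = "http://example.org/"  # hypothetical namespace for illustration

def entities_missing_label(triples, rdf_class):
    """Return members of rdf_class that have no rdfs:label, sorted for stable output."""
    members = {s for s, p, o in triples if p == RDF_TYPE and o == rdf_class}
    labelled = {s for s, p, o in triples if p == RDFS_LABEL}
    return sorted(members - labelled)

triples = [
    (EX + "CountyA", RDF_TYPE, EX + "USCounty"),
    (EX + "CountyA", RDFS_LABEL, "County A"),
    (EX + "CountyB", RDF_TYPE, EX + "USCounty"),  # typed, but never labelled
]
print(entities_missing_label(triples, EX + "USCounty"))
# ['http://example.org/CountyB']
```

Entities flagged this way are natural candidates for the human curation tasks discussed in the rest of the chapter.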

Human computation is useful not only for data curation but for all parts of the Linked Data life cycle. Linked Data creation, alignment and annotation all reflect the interests and expertise of many people and, as such, are community efforts that human computation can facilitate (Siorpaes and Hepp 2008a). Linked Data also needs better ways to create and manage meta-data and provenance, which are currently missing from many important Linked Datasets. In all of these areas, human abilities are indispensable for tasks that are widely acknowledged to be hard to approach in a systematic, automated fashion. Human computation can provide these human abilities at scale to improve and expand Linked Data (Simperl et al. 2011).

By combining the Semantic Web and Human Computation, we get the best of both worlds. We can build next-generation human computation systems that reach beyond the platforms they were built upon. Their data can be reused and repurposed in ways never intended by their creators (thereby answering the call of the Long Tail: a large percentage of requests made to a web system are new or unintended (Anderson 2007)). Likewise, Linked Open Data can be improved by using human computation strategies to help curate data and improve data quality, data linking, vocabulary creation, meta-data (data that defines and explains data) and provenance (the history of how data has been created, modified and used). In other words, Human Computation can help curate Semantic Web data in the Linked Open Data cloud, while the Semantic Web can provide better user continuity and platform consistency across Human Computation systems.

In the rest of this chapter, we consider how Human Computation systems have been used to curate Linked Open Data. We then discuss some of the major questions and challenges facing the promise of using Semantic Web technologies to connect and expand Human Computation systems.

Examples of Semantic Web Curation Using Human Computation Systems

Many researchers have already explored combining human computation strategies with Semantic Web technologies to improve and curate Linked Data. The OntoGame series of games aims to cover what its authors describe as the complete Semantic Web life cycle: ontology creation, entity linking, and semantic annotation of data (Siorpaes and Hepp 2008b). In this section we describe games that fit into these categories (both within and outside the OntoGame series), along with some semantic human computation systems that fall outside these three.

Ontology Creation

This first section covers applications that use human computation to build ontologies collaboratively. OntoPronto (part of the OntoGame series) is a “Game with a Purpose” that helps build the Proton ontology, a general-interest ontology. In the game, two players try to map a randomly chosen Wikipedia article to the most specific class of the Proton ontology. If they agree on a Proton class for their article, they earn points and proceed to the next, more specific level (Siorpaes and Hepp 2008b).
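The agreement mechanic can be sketched as a walk down a class hierarchy that continues only while both players choose the same subclass. This is a hypothetical simplification: the class names are illustrative placeholders rather than actual Proton identifiers, and the flat 10 points per level is an assumed scoring rule, not OntoPronto's.

```python
# Hypothetical class hierarchy fragment: each class maps to its direct subclasses.
HIERARCHY = {
    "Entity": ["Abstract", "Object"],
    "Object": ["Location", "Person"],
    "Location": ["City", "Country"],
}

def play_round(choices_a, choices_b, root="Entity", points_per_level=10):
    """Descend the hierarchy while both players pick the same valid subclass.

    choices_a / choices_b map a class to the subclass each player picked there.
    Returns the most specific agreed class and the points earned.
    """
    current, score = root, 0
    while current in HIERARCHY:
        pick_a, pick_b = choices_a.get(current), choices_b.get(current)
        if pick_a is None or pick_a != pick_b or pick_a not in HIERARCHY[current]:
            break  # disagreement (or an invalid pick) ends the round
        current, score = pick_a, score + points_per_level
    return current, score

# Two players classifying the same article; they diverge at the last level.
player_a = {"Entity": "Object", "Object": "Location", "Location": "City"}
player_b = {"Entity": "Object", "Object": "Location", "Location": "Country"}
print(play_round(player_a, player_b))  # ('Location', 20)
```

Requiring agreement at each level is what lets two independent, anonymous players act as a check on each other's classifications.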

ConceptNet is another ontology-building system: a semantic network of common-sense knowledge gathered from its users. The system works by having users ask other users questions about interesting topics. The answers from these other users are then evaluated by a majority of the users and taken as the basis for constructing the ontology (Havasi et al. 2007).
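Majority acceptance of this kind can be expressed as a small helper. The minimum of three evaluations and the strict-majority rule are assumptions chosen for illustration, not parameters reported for ConceptNet.

```python
from collections import Counter

def accept_by_majority(answers, min_votes=3):
    """Return the answer given by a strict majority of evaluators, else None."""
    if len(answers) < min_votes:
        return None  # too few evaluations to decide yet
    answer, count = Counter(answers).most_common(1)[0]
    return answer if 2 * count > len(answers) else None

print(accept_by_majority(["yes", "yes", "no"]))  # yes
print(accept_by_majority(["yes", "no"]))         # None (too few votes)
```

A strict majority (rather than a simple plurality) means ties and fragmented votes leave the candidate undecided until more users weigh in.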

Entity Linking

One of the most important and difficult parts of Linked Data is linking similar entities to create connections between datasets. Several games and human computation systems have been built to address this issue, allowing for better linking in the Linked Open Data cloud. One of the first was SpotTheLink (part of the OntoGame series), a collaborative game for linking DBpedia to the Proton ontology (as built in the OntoPronto game). Players must agree on which Proton class best fits a randomly selected DBpedia entity. Each agreement creates a mapping between the two concepts, which is encoded in SKOS (Simple Knowledge Organization System). Although the sample size was small, players went on to a second or third round more than 40 % of the time, suggesting potential for replay value and thus more work done (Thaler et al. 2011).
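Recording an agreed mapping in SKOS could look like the sketch below, which serializes it as a single N-Triples line. Which SKOS mapping property SpotTheLink actually emitted is not stated here, so `skos:exactMatch` is only an assumed default; the Proton class URI is a hypothetical placeholder. Note that SKOS mapping properties formally relate `skos:Concept`s, so both sides are treated as concepts.

```python
SKOS = "http://www.w3.org/2004/02/skos/core#"
MAPPING_PROPERTIES = {"exactMatch", "closeMatch", "broadMatch", "narrowMatch", "relatedMatch"}

def mapping_triple(source_uri, target_uri, relation="exactMatch"):
    """Serialize one mapping as an N-Triples line using a SKOS mapping property."""
    if relation not in MAPPING_PROPERTIES:
        raise ValueError(f"not a SKOS mapping property: {relation}")
    return f"<{source_uri}> <{SKOS}{relation}> <{target_uri}> ."

def record_agreement(entity_uri, pick_a, pick_b, relation="exactMatch"):
    """Emit a mapping only when both players chose the same class; otherwise None."""
    if pick_a != pick_b:
        return None
    return mapping_triple(entity_uri, pick_a, relation)

line = record_agreement("http://dbpedia.org/resource/Berlin",
                        "http://example.org/proton#City",   # hypothetical class URI
                        "http://example.org/proton#City")
print(line)
```

Because the output is plain RDF, any Linked Data consumer can load the agreed mappings without knowing anything about the game that produced them.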

ZenCrowd is another system; it combines automated techniques with crowdsourcing to link entities in unstructured text to the Linked Open Data cloud. It uses a probabilistic framework to build a list of candidate links for the entities it mines from the text. It then dynamically generates micro-tasks from these entities and candidate links for use on Amazon Mechanical Turk, where workers complete the tasks to create or rate links. This semi-automated approach allowed a larger number of links to be created and verified than approaches that were not semi-automated. However, issues arose with payment incentives on the Mechanical Turk platform (Demartini et al. 2012).
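The hybrid workflow can be sketched as a triage step followed by crowd resolution. This is a much simpler stand-in for ZenCrowd's probabilistic framework: the confidence scores, the 0.9 and 0.2 thresholds, the worker reliabilities and the weighted vote are all hypothetical illustrations.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    mention: str   # entity mention mined from the text
    uri: str       # candidate Linked Data URI
    score: float   # automatic matcher's confidence, assumed to lie in [0, 1]

def triage(candidates, accept_above=0.9, reject_below=0.2):
    """Split candidates into auto-accepted links and crowd micro-tasks.

    Candidates scoring below reject_below are dropped without human review.
    """
    accepted, tasks = [], []
    for c in candidates:
        if c.score >= accept_above:
            accepted.append(c)
        elif c.score >= reject_below:
            tasks.append({"question": f"Does '{c.mention}' refer to <{c.uri}>?",
                          "candidate": c})
    return accepted, tasks

def resolve(votes, reliability, default_reliability=0.5):
    """Reliability-weighted vote; votes maps worker id -> True (link) / False (no link)."""
    yes = sum(reliability.get(w, default_reliability) for w, v in votes.items() if v)
    no = sum(reliability.get(w, default_reliability) for w, v in votes.items() if not v)
    return yes > no

candidates = [
    Candidate("Paris", "http://dbpedia.org/resource/Paris", 0.95),
    Candidate("Paris", "http://dbpedia.org/resource/Paris_Hilton", 0.40),
    Candidate("Paris", "http://dbpedia.org/resource/Paris,_Texas", 0.10),
]
accepted, tasks = triage(candidates)
verdict = resolve({"w1": False, "w2": False, "w3": True},
                  {"w1": 0.8, "w2": 0.7, "w3": 0.9})
```

Sending only the uncertain middle band to the crowd is what keeps the paid human effort small relative to the number of links produced.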

UrbanMatch is a game that helps link photos on the web to the geographic points of interest they depict. It is a mobile game, played on a smartphone, in which players visit these points of interest in person and verify that photos found online actually depict the landmark. The more links a player creates, the more points they earn. While the accuracy and completeness of the linked data improved with this game, it remains to be seen whether its mechanics can motivate players to keep playing or to invite others to play with them (Celino et al. 2012).

Data Annotation

Much of the data and information on the web is unstructured (free text, images, videos, audio files, etc.). Considerable work has gone into systems that bring semantic annotation and structure to this data to make it more easily available and usable. OntoTube and OntoBay are two such systems, which let users semantically annotate data in YouTube and eBay. In OntoTube, users are shown YouTube videos and asked questions about them. The answers correspond to semantic annotations that are attached to the video, provided that a majority of users agree in their answers. OntoBay works the same way, with a very similar interface, but uses eBay listings instead of YouTube videos. These simple games create and annotate new data for the Linked Open Data Cloud and make the data in YouTube and eBay available to the greater Semantic Web community (Siorpaes and Hepp 2008a).

Data Quality

As stated before, data quality and consistency are a huge issue for Linked Data, and finding and correcting errors is hard, if not impossible, to do in a completely automated way. Some researchers have looked into building games that incentivize people to help curate Linked Data. WhoKnows? is a quiz game styled after “Who Wants to Be a Millionaire?”. While playing, users can report errors in the answers the quiz presents. If enough players agree on an error, a correction, or patch, to the dataset is created using a patch ontology designed by the developers. This allows the correction to be adopted not only by the original publisher of the data, but also by other data consumers who may hold their own local copy of the published Linked Data (Waitelonis et al. 2011).
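The report-until-threshold behaviour can be sketched as follows. The threshold, the `dbr:`/`dbo:` triple and the dictionary used as a patch record are hypothetical; WhoKnows? expressed its patches with its own patch ontology, which is not reproduced here.

```python
from collections import defaultdict

class PatchCollector:
    """Collect player error reports and emit a patch once a triple has been
    reported by `threshold` distinct players (the threshold is an assumption)."""

    def __init__(self, threshold=3):
        self.threshold = threshold
        self.reports = defaultdict(set)  # triple -> ids of players who reported it
        self.patches = []                # emitted patches, in order

    def report(self, player, triple):
        self.reports[triple].add(player)  # repeat reports by one player count once
        if len(self.reports[triple]) == self.threshold:  # fires exactly once per triple
            # Hypothetical patch record, not the WhoKnows? patch ontology itself.
            self.patches.append({"action": "delete",
                                 "triple": triple,
                                 "reporters": sorted(self.reports[triple])})

collector = PatchCollector(threshold=2)
wrong = ("dbr:Berlin", "dbo:country", "dbr:France")  # hypothetical erroneous triple
collector.report("p1", wrong)
collector.report("p1", wrong)  # duplicate report, ignored
collector.report("p2", wrong)  # second distinct player triggers the patch
```

Publishing the patch as data, rather than silently editing the source, is what lets downstream consumers with local copies apply the same correction.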

Challenges

Although we have seen some of the current work on using Semantic Web technology with human computation strategies, much work remains to realize the full potential of this combination. As discussed before, one of the hopes of merging Semantic Web technology with human computation is the ability to create new cross-platform, linked and general-purpose human computation systems, in which the applications, and not just the data, can be linked together. The systems we reviewed used and contributed back to Linked Data, allowing their data to be linked, reused and repurposed by others. However, the applications themselves were standalone and usually single-purpose. New work on the semantic integration of services, such as the RDFAgents protocol (Shinavier 2011) and SADI (Semantic Automated Discovery and Integration) services (Wilkinson et al. 2009), can help move this forward. Experiments with these technologies in human computation systems are greatly needed.

The services we explored presented a wide array of interfaces to users. In fact, many of the papers reported that developing an interface for their system was one of the biggest challenges they faced (Siorpaes and Hepp 2008a; Simperl et al. 2011; Kochhar et al. 2010). Semantic Web applications are still new and experimental, and user interfaces that best represent and produce semantic data need to be explored and tested further. How exactly a layperson will read from and write to the Semantic Web is still poorly understood; new visualizations and usable designs will be needed.

Building interfaces to semantic systems is not the only challenge to their development. Many issues exist for building systems on top of services and datasets that are constantly in flux, have up-time and reliability constraints, issues in latency, and problems with data quality and consistency (Knuth et al. 2012). Some of these issues will dissipate as these technologies grow and mature, but some are inherent to the very nature of the Semantic Web. New methods in application development to address these challenges must be better researched and explored.

In line with this challenge, but deserving of its own mention, is scalability. Human computation on the Semantic Web will require scalability in both reading and writing semantic data. None of the services mentioned above came close to web scale (most had user counts in the hundreds). It remains to be seen what a truly web-scale semantic human computation system will look like, and which parts of its architecture will become the bottleneck.

New ontologies and vocabularies will need to be developed to help manage and link these human computation systems together. Some projects have experimented with ontologies for patching data corrections into Linked Data (Knuth et al. 2012), but more needs to be explored.

Semantic user management was largely ignored in these examples. One of the hopes of using Semantic Web technologies in these systems is that users can easily sign into new systems and have their points and reputation follow them. Protocols that handle these use cases already exist, such as WebID. These could open new avenues for linking these systems together, and they must be integrated and explored further.
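As a rough illustration of reputation following a user, the sketch below keys points by the user's WebID, an HTTP(S) URI that identifies a person, so every participating system can see the same total. The shared ledger, the system names `game-a`/`game-b` and the WebID are hypothetical; authenticating the WebID (for example via WebID-TLS) and trusting the ledger itself are outside the scope of this sketch.

```python
from collections import defaultdict

class ReputationLedger:
    """Hypothetical shared ledger keyed by WebID, so points earned in one
    human computation system are visible to every other system using it."""

    def __init__(self):
        self.points = defaultdict(dict)  # WebID -> {system name: points}

    def award(self, webid, system, amount):
        # A WebID is an HTTP(S) URI that identifies an agent.
        if not webid.startswith(("http://", "https://")):
            raise ValueError(f"not an HTTP(S) URI: {webid}")
        self.points[webid][system] = self.points[webid].get(system, 0) + amount

    def total(self, webid):
        """Reputation aggregated across all systems for one WebID."""
        return sum(self.points.get(webid, {}).values())

ledger = ReputationLedger()
alice = "https://alice.example/profile#me"  # hypothetical WebID
ledger.award(alice, "game-a", 30)
ledger.award(alice, "game-b", 20)
```

Keeping a per-system breakdown, rather than a single number, lets each system decide how much weight to give reputation earned elsewhere.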

Although the Linked Open Data Cloud is a collection of datasets linked together into one big graph, querying it or building applications on top of it as if it were one connected graph is incredibly difficult, if not impossible. Technologies like SPARQL Federated Query and LinkedDataSail (Shinavier 2007) have emerged in response to this challenge. Semantic human computation systems need to explore this space further so that they can draw on the whole Linked Open Data Cloud, not just a single node within it.
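To make the idea of treating several datasets as one graph concrete, the sketch below evaluates basic triple patterns over two sources and joins the results on shared variables. It is only an in-memory approximation: SPARQL 1.1 Federated Query instead sends subqueries to remote endpoints with the `SERVICE` keyword. The source contents and `ex:` terms are hypothetical.

```python
def match(triples, pattern):
    """Yield variable bindings for one triple pattern; terms starting with '?' are variables."""
    for triple in triples:
        binding = {}
        for term, value in zip(pattern, triple):
            if term.startswith("?"):
                if binding.get(term, value) != value:
                    break  # same variable bound to two different values
                binding[term] = value
            elif term != value:
                break  # constant term does not match
        else:
            yield binding

def federated_join(sources, patterns):
    """Evaluate patterns over the union of all sources, joining on shared variables."""
    union = [t for source in sources for t in source]
    results = [{}]
    for pattern in patterns:
        results = [
            {**r, **b}
            for r in results
            for b in match(union, pattern)
            if all(r.get(k, v) == v for k, v in b.items())
        ]
    return results

# Hypothetical data: task records in one system, annotations in another.
source_a = [("ex:alice", "ex:completedTask", "ex:task1")]
source_b = [("ex:task1", "ex:annotates", "ex:video42")]
rows = federated_join([source_a, source_b],
                      [("?who", "ex:completedTask", "?task"),
                       ("?task", "ex:annotates", "?item")])
```

The join only succeeds because both sources use the same URI for the task; shared identifiers are what make cross-dataset queries possible at all.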

As stated before, the Linked Open Data Cloud suffers from a lack of meta-data and provenance information. According to http://lod-cloud.net/, over 63 % of the datasets in the LOD Cloud do not provide provenance meta-data. While many of the systems explored in this chapter worked on increasing accuracy and consistency in Linked Data, none of them had a focus on meta-data and provenance. This is a big gap in the LOD Cloud and human computation methods should be leveraged to help address it.
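A human computation system could record provenance for each contribution as it is made. The sketch below describes a contributed triple with standard RDF reification plus two PROV-O properties, `prov:wasAttributedTo` and `prov:generatedAtTime`, and returns N-Triples lines. Reification is one assumed choice here (named graphs are a common alternative), objects are assumed to be URIs rather than literals, and all `example.org` URIs and the WebID are hypothetical.

```python
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
PROV = "http://www.w3.org/ns/prov#"
XSD = "http://www.w3.org/2001/XMLSchema#"

def with_provenance(statement_id, triple, contributor, timestamp):
    """Describe a human-contributed triple via RDF reification plus PROV-O terms.

    statement_id, contributor and the triple's terms are URIs; timestamp is an
    ISO 8601 dateTime string. Returns a list of N-Triples lines.
    """
    s, p, o = triple
    st = f"<{statement_id}>"
    return [
        f"{st} <{RDF}type> <{RDF}Statement> .",
        f"{st} <{RDF}subject> <{s}> .",
        f"{st} <{RDF}predicate> <{p}> .",
        f"{st} <{RDF}object> <{o}> .",
        f"{st} <{PROV}wasAttributedTo> <{contributor}> .",
        f'{st} <{PROV}generatedAtTime> "{timestamp}"^^<{XSD}dateTime> .',
    ]

lines = with_provenance(
    "http://example.org/stmt/1",
    ("http://example.org/video42", "http://example.org/depicts",
     "http://example.org/Eiffel_Tower"),
    "https://alice.example/profile#me",  # hypothetical contributor WebID
    "2013-01-01T12:00:00Z",
)
print("\n".join(lines))
```

Attributing each statement to a contributor also connects provenance to the reputation and identity questions raised above.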

Many different types of human computation systems have been explored in the past (Yuen et al. 2009). It has yet to be seen what type of incentives, platform, games, rules, systems and architectures will work best for human computation on the Semantic Web (Siorpaes and Hepp 2008a). More experiments and tests must be done to explore this further.

Finally, the Semantic Web could also improve Human Computation systems by providing a more global, consistent “State Space”. The State Space of a Human Computation system is the collection of knowledge, artifacts and skills of both the human users and the computer system that defines the state, or current stage, of the system at a given time. This State Space is often messy, disjointed, incomplete or inconsistent. The Semantic Web could provide a common platform and medium for representing knowledge that persists despite the asynchronous behavior of the human participants. More research is needed to explore how this could work, how best to represent this knowledge, and what advantages it could bring to future Human Computation systems.

Conclusion

The future of human computation and the Semantic Web holds great promise. Although there have been some notable experiments in building human computation systems for the Semantic Web, many challenges and questions remain. More work on combining the best of Semantic Web technologies and human computation is greatly needed to bring forth this next generation.

References

Anderson C (2007) The long tail: how endless choice is creating unlimited demand. Random House Business Books, New York

Auer S, Bizer C, Kobilarov G, Lehmann J, Cyganiak R, Ives Z (2007) DBpedia: a nucleus for a web of open data. In: The semantic web. Springer Berlin Heidelberg, pp 722–735

Celino I, Contessa S, Corubolo M, Dell’Aglio D, Della Valle E, Fumeo S, Krüger T (2012) UrbanMatch: linking and improving smart cities data. In: Linked data on the web workshop, LDOW

Demartini G, Difallah DE, Cudré-Mauroux P (2012) ZenCrowd: leveraging probabilistic reasoning and crowdsourcing techniques for large-scale entity linking. In: Proceedings of the 21st international conference on world wide web, ACM, pp 469–478

Havasi C, Speer R, Alonso J (2007) ConceptNet 3: a flexible, multilingual semantic network for common sense knowledge. In: Recent advances in natural language processing, pp 27–29

Knuth M, Hercher J, Sack H (2012) Collaboratively patching linked data. arXiv preprint arXiv:1204.2715

Kochhar S, Mazzocchi S, Paritosh P (2010) The anatomy of a large-scale human computation engine. In: Proceedings of the ACM SIGKDD workshop on human computation, ACM, Washington, DC, USA, pp 10–17

Shadbolt N, Hall W, Berners-Lee T (2006) The semantic web revisited. Intell Syst IEEE 21(3):96–101

Shinavier J (2007) Functional programs as linked data. In: 3rd workshop on scripting for the semantic web, Innsbruck

Shinavier J (2011) RDFAgents specification. Technical report 20110603, Rensselaer Polytechnic Institute

Simperl E, Norton B, Vrandecic D (2011) Crowdsourcing tasks in linked data management. In: Proceedings of the 2nd workshop on consuming linked data COLD2011 co-located with the 10th international semantic web conference ISWC 2011, Bonn, Germany

Siorpaes K, Hepp M (2008a) Games with a purpose for the semantic web. Intell Syst IEEE 23(3):50–60

Siorpaes K, Hepp M (2008b) OntoGame: weaving the semantic web by online games. In: The semantic web: research and applications, Springer Berlin Heidelberg, pp 751–766

Thaler S, Simperl E, Siorpaes K (2011) SpotTheLink: a game for ontology alignment. In: Proceedings of the 6th conference for professional knowledge management, Innsbruck, Austria

van Ossenbruggen JR, Hardman HL (2002) Smart style on the semantic web. Centrum voor Wiskunde en Informatica

Von Ahn L (2006) Games with a purpose. Computer 39(6):92–94

Waitelonis J, Ludwig N, Knuth M, Sack H (2011) WhoKnows? Evaluating linked data heuristics with a quiz that cleans up DBpedia. Interact Smart Edu 8(4):236–248

Wilkinson MD, Vandervalk B, McCarthy L (2009) SADI semantic web services: ’cause you can’t always GET what you want! In: Services computing conference. APSCC 2009. IEEE Asia-Pacific. IEEE, pp 13–18

Yuen MC, Chen LJ, King I (2009) A survey of human computation systems. In: Computational science and engineering. CSE’09. International conference on, vol 4, Vancouver, Canada. IEEE, pp 723–728

Copyright information

© 2013 Springer Science+Business Media New York

About this chapter

Cite this chapter

DiFranzo, D., Hendler, J. (2013). The Semantic Web and the Next Generation of Human Computation. In: Michelucci, P. (eds) Handbook of Human Computation. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-8806-4_39

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-8805-7

Online ISBN: 978-1-4614-8806-4
