Abstract

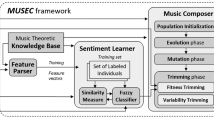

This research investigates the use of emotion data, derived by analyzing changes in autonomic nervous system (ANS) activity as revealed by brainwave production, to support the creative music compositional intelligence of an adaptive interface. A relational model of how musical events influence the listener's affect is first induced using inductive logic programming paradigms, with the emotion data and musical score features as inputs to the induction task. The components of composition, such as interval and scale, instrumentation, chord progression and melody, are then combined automatically by a genetic algorithm and by melodic transformation heuristics that depend on the predictive knowledge and character of the induced model. Of the four targeted basic emotional states (stress, joy, sadness and relaxation), the empirical results reported here show that the system can successfully compose tunes that convey one of these affective states.
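To make the composition step more concrete, the following is a minimal sketch of a genetic algorithm whose fitness comes from a rule-based model of affect. It is not the authors' system. The hand-written `RULES` list is a hypothetical stand-in for the induced relational model (real ILP output would be first-order clauses learned from the EEG-derived emotion data and score features), and `Piece`, `fitness`, `crossover`, `mutate`, `evolve` and the feature values are all illustrative assumptions. The paper's melodic transformation heuristics are not modelled here.

```python
import random
from dataclasses import dataclass

# Hypothetical search space; the actual score features used in the paper are not listed here.
MODES = ["major", "minor"]
INSTRUMENTS = ["piano", "strings", "brass", "flute"]
CHORDS = ["I", "ii", "iii", "IV", "V", "vi"]
TEMPI = [60, 80, 100, 120, 140]


@dataclass(frozen=True)
class Piece:
    mode: str
    instrument: str
    tempo: int
    progression: tuple  # one chord symbol per bar


# Hypothetical stand-in for an induced relational model:
# (target emotion, predicate over a Piece, weight).
RULES = [
    ("sadness", lambda p: p.mode == "minor", 1.0),
    ("sadness", lambda p: p.tempo <= 80, 1.0),
    ("sadness", lambda p: "vi" in p.progression, 0.5),
    ("joy", lambda p: p.mode == "major", 1.0),
    ("joy", lambda p: p.tempo >= 120, 1.0),
    ("joy", lambda p: p.progression[-2:] == ("V", "I"), 0.5),
]


def fitness(piece, target):
    """Sum the weights of the rules for `target` that the piece satisfies."""
    return sum(w for emo, cond, w in RULES if emo == target and cond(piece))


def random_piece(rng, bars=4):
    return Piece(rng.choice(MODES), rng.choice(INSTRUMENTS), rng.choice(TEMPI),
                 tuple(rng.choice(CHORDS) for _ in range(bars)))


def crossover(a, b, rng):
    # Uniform crossover on scalar fields, one-point crossover on the progression.
    cut = rng.randrange(1, len(a.progression))
    return Piece(rng.choice([a.mode, b.mode]),
                 rng.choice([a.instrument, b.instrument]),
                 rng.choice([a.tempo, b.tempo]),
                 a.progression[:cut] + b.progression[cut:])


def mutate(p, rng, rate=0.2):
    # Each field (and each chord) is independently resampled with probability `rate`.
    prog = tuple(rng.choice(CHORDS) if rng.random() < rate else c for c in p.progression)
    return Piece(rng.choice(MODES) if rng.random() < rate else p.mode,
                 rng.choice(INSTRUMENTS) if rng.random() < rate else p.instrument,
                 rng.choice(TEMPI) if rng.random() < rate else p.tempo,
                 prog)


def evolve(target, pop_size=30, generations=40, seed=0):
    rng = random.Random(seed)
    pop = [random_piece(rng) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda p: fitness(p, target), reverse=True)
        elite = pop[: pop_size // 2]  # truncation selection keeps the better half
        children = [mutate(crossover(rng.choice(elite), rng.choice(elite), rng), rng)
                    for _ in range(pop_size - len(elite))]
        pop = elite + children
    return max(pop, key=lambda p: fitness(p, target))


if __name__ == "__main__":
    best = evolve("sadness")
    print(best, fitness(best, "sadness"))
```

Because the better half of each generation is carried over unchanged, the best fitness found never decreases across generations. In a system like the one described, the fixed rule weights would instead come from the learned model's predictive knowledge.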




Copyright information

© 2008 Springer-Verlag London Limited

About this paper

Cite this paper

Sugimoto, T., Legaspi, R., Ota, A., Moriyama, K., Kurihara, S., Numao, M. (2008). Modelling Affective-based Music Compositional Intelligence with the Aid of ANS Analyses. In: Bramer, M., Coenen, F., Petridis, M. (eds) Research and Development in Intelligent Systems XXIV. SGAI 2007. Springer, London. https://doi.org/10.1007/978-1-84800-094-0_8


DOI: https://doi.org/10.1007/978-1-84800-094-0_8

Publisher Name: Springer, London

Print ISBN: 978-1-84800-093-3

Online ISBN: 978-1-84800-094-0

eBook Packages: Computer Science, Computer Science (R0)