Abstract

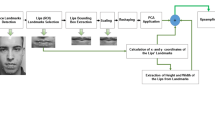

Audiovisual speech recognition (AVSR), one of the most common applications of multimodal learning, uses both video and audio information to perform robust automatic speech recognition. Traditionally, AVSR was treated as a problem of inference and projection, which placed many restrictions on its capability. As research has deepened, deep neural networks (DNNs) have become an important part of the toolkit for classification tasks such as automatic speech recognition, image classification, and natural language processing. AVSR systems often use DNN models, including Multimodal Deep Autoencoders (MDAEs), Multimodal Deep Belief Networks (MDBNs), and Multimodal Deep Boltzmann Machines (MDBMs), which consistently outperform traditional methods. However, these DNN models have several shortcomings. First, they cannot balance modal fusion and temporal fusion, and some lack temporal fusion altogether. Second, their architectures are not end-to-end. Third, their training and testing are cumbersome. To overcome these weaknesses, we designed a DNN model, the Aggregate**d** Mult**i**moda**l** Bidirection**a**l Recurren**t** Mod**e**l (DILATE). DILATE can not only be trained and tested simultaneously, but is also easy to train and prevents overfitting automatically. Experiments show that DILATE outperforms traditional methods and other DNN models on several benchmark datasets.
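The abstract names two ingredients: modal fusion (combining the audio and video streams) and temporal fusion with a bidirectional recurrent network. The exact DILATE architecture is not described on this page, so the following is only a minimal sketch under stated assumptions: early fusion by per-frame feature concatenation, a plain tanh RNN in place of whatever recurrent cell the authors use, mean pooling over time as the aggregation step, and a softmax classifier. All function names, dimensions, and the random inputs are hypothetical, and the weights are untrained.

```python
import math
import random

# Hypothetical sketch of multimodal + bidirectional temporal fusion;
# NOT the DILATE architecture from the paper. Weights are random, not trained.

def matvec(v, M):
    """Multiply row vector v (length n) by matrix M (n x m); returns length m."""
    return [sum(v[i] * M[i][j] for i in range(len(v))) for j in range(len(M[0]))]

def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

def rnn_pass(xs, W_in, W_rec, b):
    """Simple tanh RNN: h_t = tanh(x_t W_in + h_{t-1} W_rec + b)."""
    h = [0.0] * len(b)
    states = []
    for x in xs:
        a = matvec(x, W_in)
        r = matvec(h, W_rec)
        h = [math.tanh(a[k] + r[k] + b[k]) for k in range(len(b))]
        states.append(h)
    return states

def init_params(d_in, hidden, n_classes, seed=0):
    rng = random.Random(seed)
    def rnn():
        return rand_matrix(d_in, hidden, rng), rand_matrix(hidden, hidden, rng), [0.0] * hidden
    return {"fwd": rnn(), "bwd": rnn(),
            "W_out": rand_matrix(2 * hidden, n_classes, rng),
            "b_out": [0.0] * n_classes}

def fuse_and_classify(audio, video, params):
    # Modal fusion (assumed): concatenate audio and video features frame by frame.
    fused = [a + v for a, v in zip(audio, video)]
    # Temporal fusion: forward pass over time, backward pass over reversed time.
    fwd = rnn_pass(fused, *params["fwd"])
    bwd = rnn_pass(fused[::-1], *params["bwd"])[::-1]  # re-align to original time order
    states = [f + g for f, g in zip(fwd, bwd)]  # each frame: 2 * hidden values
    # Aggregate over time by mean pooling, then apply a softmax classifier.
    T = len(states)
    pooled = [sum(s[k] for s in states) / T for k in range(len(states[0]))]
    logits = [z + c for z, c in zip(matvec(pooled, params["W_out"]), params["b_out"])]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Usage with hypothetical sizes: 12 frames, 13 audio dims, 20 lip-feature dims.
rng = random.Random(1)
T, d_audio, d_video = 12, 13, 20
audio = [[rng.gauss(0, 1) for _ in range(d_audio)] for _ in range(T)]
video = [[rng.gauss(0, 1) for _ in range(d_video)] for _ in range(T)]
params = init_params(d_audio + d_video, hidden=8, n_classes=10)
probs = fuse_and_classify(audio, video, params)
print(len(probs), round(sum(probs), 6))
```

Running the backward RNN on the reversed sequence and then reversing its outputs again lines the two directions up frame by frame, so every time step combines past and future context. A practical system would train these weights end to end and would typically use a gated cell such as an LSTM.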

Yu Wen (female, born 1980) holds a master's degree and is a lecturer; her research interests are information resource management and computer networks.




Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Wen, Y. et al. (2018). Aggregated Multimodal Bidirectional Recurrent Model for Audiovisual Speech Recognition. In: Sun, X., Pan, Z., Bertino, E. (eds) Cloud Computing and Security. ICCCS 2018. Lecture Notes in Computer Science, vol 11068. Springer, Cham. https://doi.org/10.1007/978-3-030-00021-9_35


DOI: https://doi.org/10.1007/978-3-030-00021-9_35


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00020-2

Online ISBN: 978-3-030-00021-9

eBook Packages: Computer Science, Computer Science (R0)