Abstract

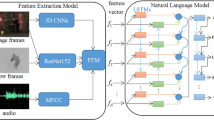

Describing videos in natural language is important for many applications, such as managing large online video collections and providing descriptive video service (DVS) for blind people. To improve on existing video description frameworks, this paper presents an end-to-end deep learning model that combines Convolutional Neural Networks (CNNs) and Bidirectional Recurrent Neural Networks (BiRNNs) with a multimodal attention mechanism. First, the model produces richer video representations than comparable work, covering image, motion, and audio features. Second, a BiRNN encodes these features in both the forward and backward directions. Finally, an attention-based decoder translates the encoder's output sequence into a sequence of words. The model is evaluated on the Microsoft Research Video Description Corpus (MSVD) dataset. The results demonstrate the necessity of combining BiRNNs with a multimodal attention mechanism and show that the model outperforms other state-of-the-art methods evaluated on this dataset.
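The encoder and attention steps named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the recurrent cell, the fusion scheme, or the attention scoring function, so the sketch assumes a plain tanh RNN, per-step concatenation of image, motion, and audio features, and a bilinear attention score. All names, dimensions, and random weights are hypothetical.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng, scale=0.1):
    """Small random weights; stands in for trained parameters."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def rnn_pass(inputs, W_in, W_rec):
    """Run a plain tanh RNN (assumed cell type) and return every hidden state."""
    h = [0.0] * len(W_rec)
    states = []
    for x in inputs:
        a, b = matvec(W_in, x), matvec(W_rec, h)
        h = [math.tanh(ai + bi) for ai, bi in zip(a, b)]
        states.append(h)
    return states


def birnn_encode(inputs, fwd, bwd):
    """Encode in both directions and concatenate the two states per step."""
    f_states = rnn_pass(inputs, *fwd)
    b_states = rnn_pass(inputs[::-1], *bwd)[::-1]  # re-align to original order
    return [f + b for f, b in zip(f_states, b_states)]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(enc_states, dec_state, W_att):
    """Soft attention with a bilinear score (an assumed scoring function)."""
    query = matvec(W_att, dec_state)  # project decoder state into encoder space
    scores = [sum(q * e for q, e in zip(query, s)) for s in enc_states]
    weights = softmax(scores)
    dim = len(enc_states[0])
    context = [sum(w * s[i] for w, s in zip(weights, enc_states)) for i in range(dim)]
    return weights, context


# Hypothetical sizes: 4 time steps, tiny feature vectors per modality.
rng = random.Random(0)
T, d_img, d_mot, d_aud, d_hid, d_dec = 4, 3, 2, 2, 5, 4
d_in = d_img + d_mot + d_aud

# Assumed fusion: concatenate image, motion, and audio features at each step.
frames = [[rng.random() for _ in range(d_in)] for _ in range(T)]

fwd = (rand_matrix(d_hid, d_in, rng), rand_matrix(d_hid, d_hid, rng))
bwd = (rand_matrix(d_hid, d_in, rng), rand_matrix(d_hid, d_hid, rng))
enc = birnn_encode(frames, fwd, bwd)

# One decoder step: weight the encoder states and build a context vector.
W_att = rand_matrix(2 * d_hid, d_dec, rng)
dec_state = [0.1] * d_dec
weights, context = attend(enc, dec_state, W_att)
```

Here `enc` holds one vector of size `2 * d_hid` per time step, `weights` is a distribution over time steps, and `context` is the weighted sum a decoder would consume when predicting the next word. A real system would replace the random matrices with trained parameters and the tanh cell with a gated unit.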

Acknowledgments

This work was supported by Research and Industrialization for Intelligent Video Processing Technology based on GPUs Parallel Computing of the Science and Technology Supported Program of Jiangsu Province (BY2016003-11) and the Application platform and Industrialization for efficient cloud computing for Big data of the Science and Technology Supported Program of Jiangsu Province (BA2015052).

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Du, X., Yuan, J., Liu, H. (2018). Attention-Based Bidirectional Recurrent Neural Networks for Description Generation of Videos. In: Sun, X., Pan, Z., Bertino, E. (eds) Cloud Computing and Security. ICCCS 2018. Lecture Notes in Computer Science, vol 11068. Springer, Cham. https://doi.org/10.1007/978-3-030-00021-9_40

DOI: https://doi.org/10.1007/978-3-030-00021-9_40

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00020-2

Online ISBN: 978-3-030-00021-9

eBook Packages: Computer Science, Computer Science (R0)