Abstract

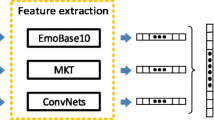

Video affective computing aims to recognize, interpret, process, and simulate the human affect conveyed by videos through their visual, textual, and auditory content. An intrinsic challenge is how to extract effective representations for analyzing affect. To address this problem, we propose a new video affective content analysis framework. We observe that only a few actors play an important role in a video and drive its emotional development. We propose a novel method to identify such an actor, whom we call the very important person (VIP), and we design a novel keyframe selection strategy that selects the keyframes containing VIPs. Scale-invariant feature transform (SIFT) features corresponding to a set of patches are first extracted from each VIP keyframe to form a SIFT feature matrix. The feature matrix is then fed to a convolutional neural network (CNN) to learn discriminative representations, so that the CNN and SIFT complement each other. Experimental results on two public audio-visual emotional datasets, the classical LIRIS-ACCEDE and the PMSZU dataset that we built, show that the proposed method performs better than the other compared methods.
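The VIP-based keyframe selection described above can be illustrated with a minimal sketch. The abstract does not state how the VIP is determined, so the criterion used here (the identity that appears in the most frames) is purely an assumption, as are the function name `select_vip_keyframes` and the input format (one list of already-recognized identity labels per frame). It is not the authors' method, only one plausible reading of "keyframes including the VIPs".

```python
from collections import Counter
from typing import Hashable, List, Sequence, Tuple


def select_vip_keyframes(
    frame_identities: Sequence[Sequence[Hashable]],
) -> Tuple[Hashable, List[int]]:
    """Pick a VIP and the indices of the frames that contain it.

    ASSUMPTION (not from the paper): the VIP is the identity that
    appears in the largest number of frames. Ties are broken by the
    string form of the label so the result is deterministic.
    """
    counts: Counter = Counter()
    for identities in frame_identities:
        # Count each identity at most once per frame.
        counts.update(set(identities))
    if not counts:
        raise ValueError("no identities detected in any frame")

    vip = min(counts, key=lambda label: (-counts[label], str(label)))
    keyframes = [i for i, ids in enumerate(frame_identities) if vip in ids]
    return vip, keyframes


# Hypothetical per-frame face labels, e.g. from a face recognizer.
frames = [
    ["alice", "bob"],
    ["alice"],
    [],
    ["bob", "alice", "carol"],
    ["carol"],
]
vip, keyframes = select_vip_keyframes(frames)
```

In a full pipeline, the frames at the returned indices would then be the ones from which the SIFT feature matrix is extracted and passed to the CNN.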

Acknowledgments

This work was funded by: (i) National Natural Science Foundation of China (Grant No. 61602314); (ii) Natural Science Foundation of Guangdong Province of China (Grant No. 2016A030313043); (iii) Fundamental Research Project in the Science and Technology Plan of Shenzhen (Grant No. JCYJ20160331114551175).

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhu, Y., Tong, M., Huang, T., Wen, Z., Tian, Q. (2018). Learning Affective Features Based on VIP for Video Affective Content Analysis. In: Hong, R., Cheng, WH., Yamasaki, T., Wang, M., Ngo, CW. (eds) Advances in Multimedia Information Processing – PCM 2018. PCM 2018. Lecture Notes in Computer Science, vol 11166. Springer, Cham. https://doi.org/10.1007/978-3-030-00764-5_64

DOI: https://doi.org/10.1007/978-3-030-00764-5_64

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00763-8

Online ISBN: 978-3-030-00764-5

eBook Packages: Computer Science, Computer Science (R0)