Abstract

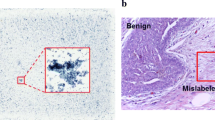

Previous work mainly addresses medical datasets with either image-wise or pixel-wise labels. However, it is difficult to train a model with only image-wise labels, and pixel-wise labels are expensive to annotate. A feasible solution is to compromise between annotation cost and performance. In this paper, we propose a cascaded convolutional neural network framework to classify partially annotated pathological images. A segmentation model is trained on the partially annotated samples to detect cancer regions, which are then re-identified by a patch-wise classification network. Finally, the segmentation and classification results are combined to produce the image-wise classification. Several experiments are conducted on a landmark medical image dataset with partial annotations. We obtain a classification accuracy of 99.51%, which significantly outperforms existing methods.
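The cascade described above can be illustrated with a minimal sketch. The abstract does not specify the networks, patch size, thresholds, or how the two results are combined, so everything here is an assumption: `segment` and `classify_patch` are hypothetical stand-ins for the trained segmentation and patch-wise classification models, and the combination rule (an image is positive if any patch proposed by the segmentation is confirmed by the classifier) is only one plausible choice, not necessarily the one used in the paper.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel values
Mask = List[List[float]]   # per-pixel cancer probability in [0, 1]


def candidate_patches(mask: Mask, patch: int, seg_thresh: float) -> List[Tuple[int, int]]:
    """Top-left corners of non-overlapping patches whose mean mask value exceeds seg_thresh."""
    corners = []
    for r in range(0, len(mask) - patch + 1, patch):
        for c in range(0, len(mask[0]) - patch + 1, patch):
            vals = [mask[i][j] for i in range(r, r + patch) for j in range(c, c + patch)]
            if sum(vals) / len(vals) > seg_thresh:
                corners.append((r, c))
    return corners


def classify_image(
    image: Image,
    segment: Callable[[Image], Mask],
    classify_patch: Callable[[Image], float],
    patch: int = 2,
    seg_thresh: float = 0.5,
    cls_thresh: float = 0.5,
) -> bool:
    """Assumed rule: positive if any segmentation-proposed patch is confirmed by the classifier."""
    mask = segment(image)                       # stage 1: propose suspicious regions
    for r, c in candidate_patches(mask, patch, seg_thresh):
        crop = [row[c:c + patch] for row in image[r:r + patch]]
        if classify_patch(crop) > cls_thresh:   # stage 2: re-identify each proposed patch
            return True
    return False


# Toy stand-ins for the two trained networks (hypothetical, for illustration only).
def toy_segment(img: Image) -> Mask:
    return [[1.0 if p > 0.5 else 0.0 for p in row] for row in img]


def toy_classifier(crop: Image) -> float:
    flat = [p for row in crop for p in row]
    return sum(flat) / len(flat)


image_pos = [[0.9, 0.9, 0.1, 0.1],
             [0.9, 0.9, 0.1, 0.1],
             [0.1, 0.1, 0.1, 0.1],
             [0.1, 0.1, 0.1, 0.1]]
image_neg = [[0.1] * 4 for _ in range(4)]

result_pos = classify_image(image_pos, toy_segment, toy_classifier)
result_neg = classify_image(image_neg, toy_segment, toy_classifier)
# With a stricter classifier threshold, the proposed patch is rejected.
result_rejected = classify_image(image_pos, toy_segment, toy_classifier, cls_thresh=0.95)
```

The point of the second stage in this sketch is that a region flagged by the segmentation alone does not decide the image label; the patch classifier can still reject it, as the `result_rejected` case shows.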

Notes

1. The challenge is held by the Shanghai Big Data Alliance and the Center for Applied Information Communication Technology (CAICT); its home page is http://www.datadreams.org/racerace3.html.

Acknowledgments

This work was supported by the National Key Research and Development Program of China (2017YFB1002203), NSFC (No. 61572451 and No. 61390514), the Fok Ying Tung Education Foundation (WF2100060004), and the Youth Innovation Promotion Association CAS (CX2100060016).

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Cui, Y., Wang, Z., Yu, G., Tian, X. (2018). Partially Annotated Gastric Pathological Image Classification. In: Hong, R., Cheng, WH., Yamasaki, T., Wang, M., Ngo, CW. (eds) Advances in Multimedia Information Processing – PCM 2018. PCM 2018. Lecture Notes in Computer Science, vol 11165. Springer, Cham. https://doi.org/10.1007/978-3-030-00767-6_44

DOI: https://doi.org/10.1007/978-3-030-00767-6_44

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00766-9

Online ISBN: 978-3-030-00767-6

eBook Packages: Computer Science, Computer Science (R0)