Abstract

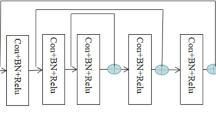

This paper addresses cross-modal image retrieval between optical images and synthetic aperture radar (SAR) images. The task is challenging because the two modalities rely on different imaging mechanisms, which leaves a large heterogeneity gap between them. We design a two-stream fully convolutional network that maps optical and SAR images into a common feature space where they can be compared. Each modality has its own branch, and an image is fed into the branch for its modality to obtain a comparable feature. Each branch fuses two types of features in a weighted manner. Both types originate from VGG16 pooling features at different depths and are refined by a channels-aggregated convolution (CAC) operation and a semi-average pooling (SAP) operation. To obtain a better model, we propose an extensible training approach in which training proceeds from local parts to the whole. We also collect an optical/SAR image retrieval (OSR) dataset, and comprehensive experiments on this dataset demonstrate the effectiveness of the proposed method.
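
The weighted fusion and common-space comparison described above can be illustrated with a minimal NumPy sketch. Everything in it is an assumption made for illustration: the function names `branch_descriptor` and `rank_gallery`, the equal fusion weights, fusion by concatenation, the Euclidean distance, and the use of plain global average pooling as a stand-in for the CAC and SAP operations, whose definitions are not given in the abstract. It is not the authors' implementation.

```python
import numpy as np


def l2_normalize(v, eps=1e-12):
    """Scale a vector to unit Euclidean length."""
    return v / (np.linalg.norm(v) + eps)


def branch_descriptor(shallow_map, deep_map, w_shallow=0.5, w_deep=0.5):
    """Fuse two feature maps of shape (C, H, W) from one branch into one descriptor.

    Assumption: global average pooling replaces the paper's CAC/SAP refinement,
    and the fusion weights are arbitrary placeholders.
    """
    f_shallow = l2_normalize(shallow_map.mean(axis=(1, 2)))
    f_deep = l2_normalize(deep_map.mean(axis=(1, 2)))
    # Weighted fusion, here by concatenating the two scaled feature vectors.
    fused = np.concatenate([w_shallow * f_shallow, w_deep * f_deep])
    return l2_normalize(fused)


def rank_gallery(query_desc, gallery_descs):
    """Return gallery indices sorted by Euclidean distance to the query."""
    dists = np.linalg.norm(gallery_descs - query_desc, axis=1)
    return np.argsort(dists)
```

In a two-stream setting, the optical and SAR branches would each produce their own pair of feature maps with separately learned parameters; only the resulting descriptors need to share a space so that `rank_gallery` can compare a query from one modality against a gallery from the other.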

Acknowledgement

This work was supported in part by the 973 Program under Contract 2015CB351803, by the Natural Science Foundation of China (NSFC) under Contracts 61390514 and 61331017, and by the Fundamental Research Funds for the Central Universities.

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, Y., Zhou, W., Li, H. (2018). Retrieval Across Optical and SAR Images with Deep Neural Network. In: Hong, R., Cheng, WH., Yamasaki, T., Wang, M., Ngo, CW. (eds) Advances in Multimedia Information Processing – PCM 2018. PCM 2018. Lecture Notes in Computer Science, vol 11164. Springer, Cham. https://doi.org/10.1007/978-3-030-00776-8_36

DOI: https://doi.org/10.1007/978-3-030-00776-8_36

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00775-1

Online ISBN: 978-3-030-00776-8

eBook Packages: Computer Science, Computer Science (R0)