Abstract

The aim of this study was to evaluate the performance of a machine learning algorithm applied to depth images for the automated computation of X-ray beam collimation parameters in radiographic chest examinations, including posterior-anterior (PA) and left-lateral (LAT) views. As an intermediate step, our approach used a trained classifier to detect internal lung landmarks, which were defined on X-ray images acquired simultaneously with the depth images. The landmark detection algorithm was evaluated retrospectively in a 5-fold cross-validation experiment on 89 patient data sets acquired in clinical settings. Two auto-collimation algorithms were devised, and their results were compared both to reference lung bounding boxes defined on the X-ray images and to the manual collimation parameters set by the radiologic technologists.

Keywords

- Boosted tree classifiers

- Gentle AdaBoost

- Anatomical landmarks

- Detection

- Constellation model

- Multivariate regression

- X-ray beam collimation

1 Introduction

Conventional X-ray radiography is still the most frequently performed X-ray examination. According to the ALARA¹ principle [1], the radiographic technologist is required to collimate the X-ray beam to the area of interest to avoid overexposure and to reduce scattered radiation. However, as a consequence of time pressure and varying levels of training among technologists, a significant number of X-ray acquisitions must be retaken, with reported retake rates ranging from 3%–10% [2,3,4] to 24.5% [5]. In the study published in [5], errors in patient positioning and/or collimation were found to be the main reason for retakes in 67.5% of the cases, while chest X-ray exams accounted for 37.7% of all retakes. Optical methods have been proposed in a number of medical imaging applications as a way to automatically assess patient position and morphology and to derive optimal acquisition parameters [6, 7]. More recently, Singh et al. [8] demonstrated significant advances in the detection of anatomical landmarks in depth images acquired by a 3D camera by means of deep convolutional networks. While they used an extensive patient dataset consisting of volumetric CT scans for validation, their results were restricted to the evaluation of landmark detection and body mesh fitting and did not cover automation of the CT acquisition itself. In the context of X-ray radiography, MacDougall et al. [9] used a 3D camera to automatically derive patient thickness measurements and to detect motion of the examined body part in order to provide guidance to the technologist. Their work did not address the automated computation of system acquisition parameters.

In this paper, we present a method to automatically compute the X-ray beam collimation parameters for posterior-anterior (PA) and lateral (LAT) chest X-ray acquisitions on the basis of 3D camera data. The proposed algorithm detects lung landmarks in the depth image and applies a statistical model to derive patient-specific collimation parameters. Landmarks and regression models were learned from reference data generated on the basis of X-ray and depth image pairs acquired simultaneously during routine clinical exams. The algorithm results are evaluated in a 5-fold cross-validation experiment and compared to the parameters manually set by the radiographic technologists.

2 Methods

A 3D camera (Asus Xtion Pro) was mounted on the side of a clinical X-ray system and connected to a PC for data acquisition. For each patient undergoing an upright chest X-ray exam who had given written consent to participate in the study, a video sequence of depth images covering the duration of the examination was acquired. A total of 89 chest X-ray examinations (PA and LAT views) were acquired over a period of 15 days. The camera was used solely as a bystander, i.e. the depth images were not used in the routine workflow. X-ray retakes occurred in 6 out of 89 PA cases and in 1 out of 80 LAT cases, leading to 96 PA and 81 LAT acquisitions in total.

2.1 Data Pre-processing

For the retrospective analysis, the following data were used: raw X-ray images, depth image series, and log files from the X-ray system comprising the system geometry parameters and the collimation parameters selected by the radiographic technologists during the examinations. Based on the X-ray release timestamps, pairs of X-ray and depth images acquired almost simultaneously were created for each X-ray acquisition and used as the basis for the subsequent data analysis. An example is shown in Fig. 1.

Fig. 1. Overview of the pre-processing steps. Left: the raw X-ray image with manually annotated lung landmarks (colored dots), lung bounding box (blue rectangle) and collimation window selected by the technologist (purple rectangle), together with the corresponding original depth image. Middle, top: the calibrated extrinsic camera parameters, computed with a 3D point matching approach. Right: the reformatted depth image corresponding to a virtual camera located at the X-ray source, computed from these parameters, with the annotated lung landmarks mapped onto it.

Calibration of Camera Extrinsic Parameters.

For each examination, the extrinsic camera parameters describing the camera position and orientation with respect to the reference coordinate system of the X-ray system were computed. This calibration used a template matching approach based on the known 3D template of the detector front cover and an iterative closest point algorithm [10]. An example of a calibration result is given in Fig. 1.
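The iterative closest point idea behind this calibration can be illustrated with a deliberately simplified sketch: point-to-point ICP on 2D point sets, with brute-force nearest-neighbour matching and the closed-form 2D rigid fit. The paper works in 3D with a template of the detector front cover, and the cited method [10] minimizes point-to-plane rather than point-to-point distances, so the function names and all simplifications below are illustrative assumptions.

```python
import math


def transform(points, theta, t):
    """Rotate 2D points by theta (radians) about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]


def rigid_fit_2d(src, dst):
    """Closed-form least-squares rotation + translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy  # centre both point sets
        num += px * qy - py * qx
        den += px * qx + py * qy
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # the translation maps the rotated source centroid onto the target centroid
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))


def icp_2d(source, target, max_iter=50, tol=1e-12):
    """Estimate (theta, t) aligning `source` to `target` by iterative closest point."""
    theta_tot, t_tot = 0.0, (0.0, 0.0)
    current, prev_err = list(source), math.inf
    for _ in range(max_iter):
        # 1) correspondences: closest target point for each current source point
        matches = [min(target, key=lambda q: (q[0] - x) ** 2 + (q[1] - y) ** 2)
                   for x, y in current]
        # 2) best rigid transform for these correspondences, applied to the source
        theta, t = rigid_fit_2d(current, matches)
        current = transform(current, theta, t)
        # 3) compose with the accumulated transform: R_k (R_tot p + t_tot) + t_k
        c, s = math.cos(theta), math.sin(theta)
        t_tot = (c * t_tot[0] - s * t_tot[1] + t[0], s * t_tot[0] + c * t_tot[1] + t[1])
        theta_tot += theta
        err = sum((x - q[0]) ** 2 + (y - q[1]) ** 2
                  for (x, y), q in zip(current, matches)) / len(current)
        if prev_err - err < tol:  # stop once the mean squared error stops improving
            break
        prev_err = err
    return theta_tot, t_tot
```

For example, if `source` is a 5 x 3 grid of `target` points rotated by 0.05 rad and shifted slightly, `icp_2d(source, target)` returns an angle close to -0.05 rad. Real depth data would additionally need a reasonable initial pose, outlier rejection and a spatial index for the nearest-neighbour search.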

Reformatting of Depth Images.

Based on the extrinsic camera parameters, reformatted depth images were computed from the original depth images by applying a geometric transformation that takes the coordinate system of the X-ray tube as reference. For this purpose, a virtual camera was placed at the position of the X-ray focal point, with its main axes aligned with the axes of the tube coordinate system, and each pixel of the original depth image was re-projected into this virtual camera. An example of an original and the corresponding reformatted depth image is shown in Fig. 1.
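A minimal sketch of this re-projection, assuming an ideal pinhole model for both the physical and the virtual camera (intrinsics `(fx, fy, cx, cy)`, no lens distortion), depth values measured along the optical axis, and extrinsics given as a rotation matrix `R` and translation `t` from camera to tube coordinates. The function name and the forward-splatting strategy with a z-buffer are illustrative choices, not the paper's implementation; a production version would also fill the small holes that forward splatting leaves.

```python
def reformat_depth(depth, intr_src, R, t, intr_virt, width, height):
    """Re-project a depth image into a virtual camera at the X-ray focal point.

    depth: 2D list of depth values (0 marks invalid pixels)
    intr_src / intr_virt: pinhole intrinsics (fx, fy, cx, cy)
    R (3x3 nested lists), t (3-vector): rigid transform camera -> tube coordinates
    """
    fx, fy, cx, cy = intr_src
    vfx, vfy, vcx, vcy = intr_virt
    out = [[0.0] * width for _ in range(height)]
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0:
                continue
            # back-project pixel (u, v) with depth d into the source camera frame
            p = ((u - cx) * d / fx, (v - cy) * d / fy, d)
            # express the point in the tube frame (origin at focal point, z along central ray)
            x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
            if z <= 0:
                continue
            # project into the virtual camera located at the focal point
            ui = round(vfx * x / z + vcx)
            vi = round(vfy * y / z + vcy)
            if 0 <= ui < width and 0 <= vi < height:
                # z-buffer: keep the surface closest to the virtual camera
                if out[vi][ui] == 0.0 or z < out[vi][ui]:
                    out[vi][ui] = z
    return out
```

With identity extrinsics and identical intrinsics the function returns the input unchanged, which is a convenient sanity check for a calibration pipeline.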

This approach had two advantages: it eliminated the variability due to the position of the 3D camera, and it facilitated the projection of landmarks defined in the X-ray image onto the depth image (see below for further details).

2.2 Annotation of Reference Lung Landmarks and Lung Bounding Box

The anatomical extents of the lung were manually annotated in all PA and LAT X-ray images to provide a reference against which to compare both the collimation parameters selected by the technologist and those computed by the auto-collimation algorithm. For this purpose, 6 anatomical landmarks were defined on PA X-ray views and 5 landmarks on LAT X-ray views (Table 1). Landmark annotation was performed by a technical expert, not by a clinical expert, and the variability due to inaccurate landmark localization was not quantified within this study.

In each case, parameters of the lung bounding box (\( LungBB_{Left} \), \( LungBB_{Right} \), \( LungBB_{Top} \), and \( LungBB_{Bottom} \)) were derived from the annotated landmarks using min and max operations on the landmark coordinates, without additional margins.
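The min/max construction can be stated in a few lines. The sketch assumes landmark coordinates as `(x, y)` pairs in an image convention where x grows to the right and y grows downwards, so that the image-left and top edges are minima; the function name and dictionary keys are hypothetical.

```python
def lung_bounding_box(landmarks):
    """Tight (margin-free) bounding box of (x, y) landmark coordinates.

    Assumes x grows to the right and y grows downwards (image convention).
    """
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    return {"Left": min(xs), "Right": max(xs), "Top": min(ys), "Bottom": max(ys)}
```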

In order to train a classifier for the detection of lung landmarks on the depth images, the lung landmarks annotated in the X-ray images were mapped onto the corresponding reformatted depth images using the calibrated extrinsic camera parameters.

2.3 Trained Classifier for the Detection of Anatomical Landmarks

In this study, the identification of the anatomical landmarks in the depth images employed a classification-based detection approach similar to the technique proposed by Shotton et al. [11], in combination with a constellation model for the estimation of the most plausible landmark configuration and the prediction of missing landmarks [12]. The detection framework was implemented in C++ using OpenCV 3.3.

Using a fixed sampling pattern generated from a uniform sampling distribution, depth values at single positions as well as pair-wise depth differences within a sliding window were used as classification features to characterize the landmarks. To increase robustness against changes in patient position, the size of the pattern was adjusted dynamically based on the depth value of the current pixel.
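A minimal sketch of such depth-normalized probes, in the spirit of Shotton et al. [11]: the offset pairs are drawn once from a uniform distribution and reused for every pixel, and each offset is divided by the depth at the centre pixel so that the pattern covers a roughly constant physical extent regardless of the patient's distance to the camera. The pattern radius, the background value returned for probes that fall outside the image or on invalid pixels, and all function names are assumptions; the paper's 2500-element descriptor layout is not reproduced.

```python
import random


def make_pattern(n_pairs, radius, seed=0):
    """Fixed pattern of offset pairs, drawn once from a uniform distribution.

    Offsets are in 'pixels x depth units' and are divided by the local depth at use time.
    """
    rng = random.Random(seed)

    def draw():
        return (rng.uniform(-radius, radius), rng.uniform(-radius, radius))

    return [(draw(), draw()) for _ in range(n_pairs)]


def probe(depth, x, y, offset, d0, background):
    """Depth at (x, y) + offset / d0; out-of-image or invalid pixels give `background`."""
    px = round(x + offset[0] / d0)
    py = round(y + offset[1] / d0)
    if 0 <= py < len(depth) and 0 <= px < len(depth[0]) and depth[py][px] > 0:
        return depth[py][px]
    return background


def depth_features(depth, x, y, pattern, background=10.0):
    """Per offset pair: one single-position depth value and one pair-wise difference."""
    d0 = depth[y][x]  # assumed > 0 (valid depth at the centre pixel)
    feats = []
    for off_a, off_b in pattern:
        a = probe(depth, x, y, off_a, d0, background)
        b = probe(depth, x, y, off_b, d0, background)
        feats.extend((a, a - b))
    return feats
```

Because offsets shrink in pixel units as `d0` grows, the same pattern samples the same body region whether the patient stands closer to or farther from the camera.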

During the training phase, features were extracted in the vicinity of the annotated landmarks (positive samples), as well as randomly from the remainder of the depth image (negative samples). These data were then used to train landmark-specific classifiers (i.e. boosted tree classifiers based on Gentle AdaBoost [13]). Here, for all landmarks, a single feature descriptor with 2500 elements was employed, while the classifiers were trained with 25 decision trees with a maximum depth of 5. During the detection phase, a mean-shift clustering algorithm [14] was applied as post-processing to reduce the number of candidates classified as possible landmarks.
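The core of Gentle AdaBoost [13] is a weighted least-squares fit of each weak learner to labels in {-1, +1}, followed by the multiplicative reweighting w ← w·exp(−y·f(x)). The pure-Python sketch below uses regression stumps (depth-1 trees) instead of the depth-5 trees reported in the paper, with a brute-force threshold search; all names are hypothetical. In OpenCV 3.x a comparable configuration would presumably be `cv::ml::Boost` with `setBoostType(cv::ml::Boost::GENTLE)`, `setWeakCount(25)` and `setMaxDepth(5)`, although the paper does not state which API was used.

```python
import math


def fit_stump(X, y, w):
    """Weighted least-squares regression stump: f(x) = a if x[j] <= thr else b."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            sw_l = swy_l = sw_r = swy_r = 0.0
            for row, yi, wi in zip(X, y, w):
                if row[j] <= thr:
                    sw_l, swy_l = sw_l + wi, swy_l + wi * yi
                else:
                    sw_r, swy_r = sw_r + wi, swy_r + wi * yi
            # optimal leaf values are the weighted means of the labels on each side
            a = swy_l / sw_l if sw_l > 0 else 0.0
            b = swy_r / sw_r if sw_r > 0 else 0.0
            err = sum(wi * (yi - (a if row[j] <= thr else b)) ** 2
                      for row, yi, wi in zip(X, y, w))
            if best is None or err < best[0]:
                best = (err, j, thr, a, b)
    return best[1:]


def gentle_adaboost(X, y, n_rounds=25):
    """Train an additive model F(x) = sum_m f_m(x); labels y must be -1 or +1."""
    w = [1.0 / len(X)] * len(X)
    stumps = []
    for _ in range(n_rounds):
        j, thr, a, b = fit_stump(X, y, w)
        stumps.append((j, thr, a, b))
        # misclassified samples (y * f < 0) gain weight, well-classified ones lose weight
        w = [wi * math.exp(-yi * (a if row[j] <= thr else b)) for row, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return stumps


def score(stumps, x):
    """Real-valued classifier output; its sign is the predicted label."""
    return sum(a if x[j] <= thr else b for j, thr, a, b in stumps)
```

In a landmark detector, `score` would be evaluated on the feature vector of every candidate pixel, and pixels with high scores would then be grouped by the mean-shift step.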

Finally, the most plausible landmark combination was identified by evaluating all landmark configurations on the basis of a multivariate Gaussian distribution model and retaining only the configuration with the highest probability. If a particular landmark was not detected during the classification step, its most likely position was predicted based on the conditional distribution of the constellation model, as described in [12]. The parameters of the constellation model, which consisted of the Gaussian distribution of the relative landmark coordinates, were estimated from the training data.
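A minimal sketch of both uses of the constellation model, assuming the landmark coordinates are stacked into one vector with mean `mean` and covariance `cov` estimated from training data. For a fixed Gaussian the normalization constant is the same for every configuration, so the most probable combination of candidates is the one with the smallest Mahalanobis distance; a missing landmark is filled in with the standard conditional-Gaussian mean. For brevity the sketch models the stacked coordinates directly rather than relative coordinates, uses a small hand-written Gaussian-elimination solver, and all names are hypothetical; indices in `predict_missing` refer to positions in the stacked vector.

```python
import itertools


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (A small, non-singular)."""
    n = len(A)
    M = [[float(v) for v in A[i]] + [float(b[i])] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def mahalanobis_sq(v, mean, cov):
    """Squared Mahalanobis distance (v - mean)^T cov^{-1} (v - mean)."""
    d = [vi - mi for vi, mi in zip(v, mean)]
    return sum(di * si for di, si in zip(d, solve(cov, d)))


def best_configuration(candidates, mean, cov):
    """candidates[k] lists candidate positions (tuples) of landmark k; returns the
    combination with the highest Gaussian density (smallest Mahalanobis distance)."""
    best, best_d2 = None, float("inf")
    for combo in itertools.product(*candidates):
        v = [c for point in combo for c in point]  # stack coordinates in landmark order
        d2 = mahalanobis_sq(v, mean, cov)
        if d2 < best_d2:
            best, best_d2 = combo, d2
    return best


def predict_missing(obs_idx, obs_vals, miss_idx, mean, cov):
    """Conditional Gaussian mean E[x_m | x_o] = mu_m + S_mo S_oo^{-1} (x_o - mu_o)."""
    s_oo = [[cov[i][j] for j in obs_idx] for i in obs_idx]
    alpha = solve(s_oo, [val - mean[i] for val, i in zip(obs_vals, obs_idx)])
    return [mean[m] + sum(cov[m][o] * a for o, a in zip(obs_idx, alpha)) for m in miss_idx]
```

The exhaustive search grows multiplicatively with the number of candidates per landmark, which is one reason to thin out candidates beforehand (e.g. by the mean-shift clustering mentioned above).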

To evaluate the classifier trained on the patient data, a 5-fold cross-validation approach was chosen to ensure that no detection result was generated with data that had also been used for training.
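A minimal sketch of a 5-fold split. It splits by examination (patient) rather than by acquisition, so that retakes of the same patient cannot end up in both the training and the test set; the paper does not state how the folds were formed, so this grouping, the shuffling seed and the function name are assumptions.

```python
import random


def k_fold_splits(case_ids, k=5, seed=0):
    """Yield (train_ids, test_ids) pairs; every case is used for testing exactly once."""
    ids = sorted(case_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[i::k] for i in range(k)]  # round-robin assignment -> near-equal fold sizes
    for i in range(k):
        train = [c for j, fold in enumerate(folds) if j != i for c in fold]
        yield train, folds[i]
```

With 89 examinations this produces four folds of 18 cases and one of 17.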

2.4 Statistical Models for the Computation of Collimation Parameters

Anatomical landmarks detected in the depth images using the classifiers described in Sect. 2.3 were projected onto the coordinate system of the X-ray detector, using the X-ray source as center of projection. In this way, lung landmarks detected by the classifiers, collimation parameters selected by the technologist, and reference lung landmarks and bounding boxes were all available in the same coordinate system.
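Because the virtual camera of the reformatted depth image shares its centre of projection with the X-ray source, mapping a detected landmark onto the detector reduces to a central projection. A minimal sketch, assuming the detector plane is perpendicular to the central ray at source-to-image distance `sid`, detector coordinates centred on the central ray, and pinhole intrinsics `(fx, fy, cx, cy)` for the virtual camera; these names are hypothetical. In this idealized geometry the depth value cancels out of the pixel-to-detector mapping.

```python
def tube_point_to_detector(point, sid):
    """Central projection of a 3D point in tube coordinates (source at the origin,
    z along the central ray) onto the detector plane z = sid."""
    x, y, z = point
    return (sid * x / z, sid * y / z)


def virtual_pixel_to_detector(u, v, intrinsics, sid):
    """Pixel (u, v) of the reformatted depth image -> detector coordinates.

    The ray through the pixel meets the detector at (sid * (u - cx) / fx, sid * (v - cy) / fy),
    whatever the depth along that ray.
    """
    fx, fy, cx, cy = intrinsics
    return (sid * (u - cx) / fx, sid * (v - cy) / fy)
```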

Multivariable linear regression analysis was then performed to compute prediction models based on the coordinates of the detected landmarks, using the function lm of the statistical software R. The left and right X-coordinates of the reference lung bounding box (\( LungBB_{Left} \) and \( LungBB_{Right} \)) were fitted using the X-coordinates of the detected landmarks, and the upper and lower Y-coordinates (\( LungBB_{Top} \) and \( LungBB_{Bottom} \)) using the Y-coordinates of the detected landmarks. The regression models were of the form:
\[ y = a + \sum_{i=1}^{n} b_{i} x_{i} \]
where \( y \) is the bounding box parameter to be predicted, \( \left( {x_{i} } \right)_{1 \le i \le n} \) are the landmark coordinates in the X-ray detector coordinate system, \( a \) is the intercept parameter of the regression analysis, and \( \left( {b_{i} } \right)_{1 \le i \le n} \) are the regression coefficients.
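For readers without R at hand, the least-squares fit that `lm` performs for a model of this form can be sketched in plain Python via the normal equations \( (X^{T}X)\,\beta = X^{T}y \) with a leading column of ones for the intercept \( a \). This is a simplification: `lm` itself uses a QR decomposition, which is numerically more robust, but for a handful of well-conditioned landmark coordinates both yield the same coefficients. Function names are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (A small, non-singular)."""
    n = len(A)
    M = [[float(v) for v in A[i]] + [float(b[i])] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def fit_linear(X, y):
    """Least-squares fit of y = a + sum_i b_i x_i; X holds one row of landmark coordinates
    per case. Returns (a, [b_1, ..., b_n])."""
    rows = [[1.0] + list(r) for r in X]  # prepend the intercept column
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    coef = solve(xtx, xty)
    return coef[0], coef[1:]


def predict(a, b, x):
    """Evaluate the fitted model for one case."""
    return a + sum(bi * xi for bi, xi in zip(b, x))
```

One such model would be fitted per bounding box edge, e.g. \( LungBB_{Left} \) from the X-coordinates of the detected landmarks.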

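To compute a collimation window that contains the lung with high certainty, safety margins were added to the predicted bounding box coordinates. Denoting by \( ROX_{Left} \), \( ROX_{Right} \), \( ROX_{Top} \), and \( ROX_{Bottom} \) the collimation parameters corresponding to the X- and Y-coordinates of the collimation window, and by \( \widehat{LungBB} \) the bounding box parameters predicted by the regression models, the following formulas were applied (with X increasing from left to right and Y from top to bottom):

\[ ROX_{Left} = \widehat{LungBB}_{Left} - Offset_{Left}, \qquad ROX_{Right} = \widehat{LungBB}_{Right} + Offset_{Right}, \]
\[ ROX_{Top} = \widehat{LungBB}_{Top} - Offset_{Top}, \qquad ROX_{Bottom} = \widehat{LungBB}_{Bottom} + Offset_{Bottom}, \]

where \( Offset_{Left} \), \( Offset_{Right} \), \( Offset_{Top} \), and \( Offset_{Bottom} \) denote the offset parameters.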
Two approaches were applied to compute the offset parameters:

1. QuantileBasedPrediction: each offset parameter was computed as the 99% quantile of the regression residuals.

2. SDBasedPrediction: each offset parameter was computed as the standard deviation of the regression residuals multiplied by a given factor (1.65 in this study).
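The two offset rules can be sketched with Python's `statistics` module. `statistics.quantiles(..., method="inclusive")` interpolates linearly between order statistics, which corresponds to R's default quantile definition (type 7), and `statistics.stdev` is the sample standard deviation, as is R's `sd`. The factor 1.65 is close to the one-sided 95% quantile of a standard normal distribution (about 1.645), so for normally distributed residuals SDBasedPrediction yields a smaller margin than the 99% quantile. The residuals are assumed to be signed so that a positive value means the reference edge lies outside the predicted edge; this sign convention, the left-to-right/top-to-bottom axis convention and the helper names are assumptions.

```python
import statistics


def quantile_based_offset(residuals):
    """99% quantile of the residuals (linear interpolation, like R's quantile type 7)."""
    return statistics.quantiles(residuals, n=100, method="inclusive")[98]


def sd_based_offset(residuals, factor=1.65):
    """Sample standard deviation of the residuals times a fixed factor (1.65 in the paper)."""
    return factor * statistics.stdev(residuals)


def collimation_window(pred_bb, offsets):
    """Expand a predicted lung box outward by per-edge offsets.

    Assumes x grows to the right and y grows downwards, so Left/Top move towards smaller
    values and Right/Bottom towards larger ones.
    """
    return {
        "Left": pred_bb["Left"] - offsets["Left"],
        "Right": pred_bb["Right"] + offsets["Right"],
        "Top": pred_bb["Top"] - offsets["Top"],
        "Bottom": pred_bb["Bottom"] + offsets["Bottom"],
    }
```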

Cases with detection outliers, identified as landmarks with coordinates outside the field-of-view of the X-ray detector, were removed from the regression analysis.

3 Results

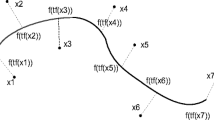

Examples of lung landmark detection results obtained with the trained classifier in four randomly selected cases with both PA and LAT views are shown in Fig. 2. Landmark detection outliers were found in one PA case (for which the distance between patient and 3D camera was significantly larger than for all other cases) and in eight LAT cases (predominantly caused by artefacts from loose-hanging clothing). These cases were excluded from the subsequent statistical analysis.

Figure 3 summarizes the statistics of the landmark detection errors, computed in the coordinate system of the X-ray images. The largest detection variability was found for the Y-coordinates of the basal lung landmarks in both PA and LAT views; these landmarks are known to have the largest inter-subject variability because of the influence of internal organs such as the liver and because of possible differences in breathing state.

The results of the multivariable regression analysis for predicting the parameters of the lung bounding box are summarized in Table 2. Here again, the largest variability was found for the prediction of the lower edge of the lung bounding box in both PA and LAT cases, as well as for the anterior edge in LAT cases. Interestingly, the predictions of the regression models based on the detected landmarks correlated noticeably more strongly with the lung bounding box parameters than the collimation parameters selected by the technologists did. This indicates that the models were able to infer the internal lung anatomy from the depth data to some extent, while the technologists used external morphological landmarks, such as the shoulders, to adjust the collimation parameters.

For each case, the difference between the area of the collimation window (either predicted by one of the two statistical models or selected by the technologist) and the area of the lung bounding box was computed as an indication of the area unnecessarily exposed to direct X-rays. The corresponding statistics are shown as box plots in Fig. 4. The distribution of cases in which the collimation settings led (technologists) or would have led (models) to the lung being cropped on at least one edge of the bounding box (i.e. a negative margin) is shown as a bar plot in Fig. 4.
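Both evaluation quantities can be sketched in a few lines, assuming collimation windows and lung bounding boxes are given as edge dictionaries in detector coordinates with x growing to the right and y growing downwards; the helper names are hypothetical. A negative excess area can only occur when at least one edge is cropped.

```python
def area(box):
    """Area of an axis-aligned box given by its Left/Right/Top/Bottom edges."""
    return (box["Right"] - box["Left"]) * (box["Bottom"] - box["Top"])


def excess_area(collimation, lung):
    """Collimated area minus lung box area: a proxy for the area unnecessarily exposed."""
    return area(collimation) - area(lung)


def cropped_edges(collimation, lung):
    """Edges with a negative margin, i.e. where the lung box would be cut off."""
    margins = {
        "Left": lung["Left"] - collimation["Left"],
        "Right": collimation["Right"] - lung["Right"],
        "Top": lung["Top"] - collimation["Top"],
        "Bottom": collimation["Bottom"] - lung["Bottom"],
    }
    return [edge for edge, m in margins.items() if m < 0]
```

A case counts towards the bar plot if `cropped_edges` returns a non-empty list.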

4 Discussion/Conclusions

The results summarized in this paper show the feasibility of training a fully automated algorithm for detecting internal lung landmarks in depth images acquired during upright chest X-ray examinations and for subsequently computing X-ray beam collimation parameters. Using the depth information provided by the 3D camera and the extrinsic camera parameters, the landmarks could be projected onto the X-ray detector and further processed to estimate the X-ray collimation parameters. As shown by the comparison between the two statistical models computed via regression analysis, the average area unnecessarily exposed to X-rays over the patient population could only be decreased at the risk of increasing the probability of cropping the lungs. Hence, in practice, a trade-off must be made between these two conflicting requirements. Interestingly, both statistical models achieved an overall collimation accuracy comparable to that of the technologists, but led to less variability in over-exposure to X-rays and to noticeably fewer cases with cropped lungs on the same patient data. In practice, the proposed approach could help reduce the number of X-ray retakes and standardize the image acquisition process.

Notes

1. ALARA: As Low As Reasonably Achievable

References

1. Recommendations of the International Commission on Radiological Protection. ICRP Publication 26, Pergamon Press, Oxford (1977)

2. Foos, D.H., Sehnert, W.J., Reiner, B., Siegel, E.L., Segal, A., Waldman, D.L.: Digital radiography reject analysis: data collection methodology, results, and recommendations from an in-depth investigation at two hospitals. J. Digit. Imaging 22(1), 89–98 (2009)

3. Hofmann, B., Rosanowsky, T.B., Jensen, C., Wah, K.H.: Image rejects in general direct digital radiography. Acta Radiol. Open 4 (2015). https://doi.org/10.1177/2058460115604339

4. Jones, A.K., Polman, R., Willis, C.E., Shepard, S.J.: One year’s results from a server-based system for performing reject analysis and exposure analysis in computed radiography. J. Digit. Imaging 24(2), 243–255 (2011)

5. Little, K.J., et al.: Unified database for rejected image analysis across multiple vendors in radiography. J. Am. Coll. Radiol. 14(2), 208–216 (2016)

6. Brahme, A., Nyman, P., Skatt, B.: 4D laser camera for accurate patient positioning, collision avoidance, image fusion and adaptive approaches during diagnostic and therapeutic procedures. Med. Phys. 35(5), 1670–1681 (2008)

7. Grimm, R., Bauer, S., Sukkau, J., Hornegger, J., Greiner, G.: Markerless estimation of patient orientation, posture and pose using range and pressure imaging for automatic patient setup and scanner initialization in tomographic imaging. Int. J. Comput. Assist. Radiol. Surg. 7(6), 921–929 (2012)

8. Singh, V., et al.: DARWIN: deformable patient avatar representation with deep image network. In: Descoteaux, M., Maier-Hein, L., Franz, A., Jannin, P., Collins, D.L., Duchesne, S. (eds.) MICCAI 2017. LNCS, vol. 10434, pp. 497–504. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-66185-8_56

9. MacDougall, R., Scherrer, B., Don, S.: Development of a tool to aid the radiologic technologist using augmented reality and computer vision. Pediatr. Radiol. 48(1), 141–145 (2018)

10. Chen, Y., Medioni, G.: Object modeling by registration of multiple range images. In: IEEE International Conference on Robotics and Automation, vol. 3, pp. 2724–2729 (1991)

11. Shotton, J., et al.: Efficient human pose estimation from single depth images. IEEE Trans. Pattern Anal. Mach. Intell. 35(12), 2821–2840 (2013)

12. Pham, T., Smeulders, A.: Object recognition with uncertain geometry and uncertain part detection. Comput. Vis. Image Underst. 99(2), 241–258 (2005)

13. Friedman, J., Hastie, T., Tibshirani, R.: The Elements of Statistical Learning. Springer Series in Statistics. Springer, New York (2001)

14. Cheng, Y.: Mean shift, mode seeking, and clustering. IEEE Trans. Pattern Anal. Mach. Intell. 17(8), 790–799 (1995)

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Sénégas, J., Saalbach, A., Bergtholdt, M., Jockel, S., Mentrup, D., Fischbach, R. (2018). Evaluation of Collimation Prediction Based on Depth Images and Automated Landmark Detection for Routine Clinical Chest X-Ray Exams. In: Frangi, A., Schnabel, J., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2018. MICCAI 2018. Lecture Notes in Computer Science(), vol 11071. Springer, Cham. https://doi.org/10.1007/978-3-030-00934-2_64

DOI: https://doi.org/10.1007/978-3-030-00934-2_64

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00933-5

Online ISBN: 978-3-030-00934-2

eBook Packages: Computer Science (R0)