Abstract

In renal transplantation pathology, the Banff grading system is used for diagnosis. We perform a case study on the detection of immune cells in tubules, with the goal of automating part of this grading. We propose a two-step approach, in which we first perform a structure segmentation and subsequently an immune cell detection. We used a dataset of renal allograft biopsies from the Radboud University Medical Centre, Nijmegen, the Netherlands. Our modified U-net reached a Dice score of 0.85 on the structure segmentation task. The F1-score of the immune cell detection was 0.33.

1 Introduction

An important step in many automated medical image analysis applications is semantic segmentation, the task of assigning a class to each pixel of an image. Since the advent of deep learning, great leaps in semantic segmentation performance have been achieved. One of the first applications of deep learning to semantic segmentation came from Long et al. They used fully convolutional neural networks (F-CNN), omitting the fully connected layers, to perform end-to-end semantic segmentation, achieving state-of-the-art results on multiple datasets [7]. Since then, other fully convolutional networks have emerged and achieved strong results in semantic segmentation tasks. For instance, U-net applies deconvolutions and skip connections to achieve precise segmentation results [11]. Chen et al. used a contour-aware network to accurately segment gland instances, winning the MICCAI 2015 gland segmentation challenge [1].

The development of high resolution whole slide image (WSI) scanners paved the way for applying semantic segmentation techniques to histopathological slides [3]. Applications in pathology are seen in, for example, cancer diagnosis [6]. However, applications in the kidney are less common. Recent literature on renal tissue segmentation has focused mostly on the automatic segmentation and detection of glomeruli [9, 12].

With thousands of people on the waiting list for kidney transplantation in Europe, correct diagnosis and treatment of rejection of transplanted kidneys is of great importance to prevent the loss of transplanted organs. The Banff classification is an important guideline in such diagnosis [8]. It is based on a set of gradings, one of which is the estimation of tubulitis: inflammation of the tubular structures in the kidney. This inflammation is graded by quantifying the number of immune cells in the tubular structures. The assessment of tubulitis is known to be labor intensive and may have limited accuracy [2]. As a case study, we build upon our structure segmentation results to quantify tubular inflammation. We attempted to identify and count immune cells within the tubular structures, taking a step towards automatic Banff grading.

Specifically, in this paper we present an approach for automatic multi-class instance segmentation of renal tissue. Instance segmentation extends semantic segmentation by requiring each object to be individually detected. We include a total of seven structures of the kidney anatomy in our segmentation task: proximal, distal and atrophic tubuli, normal and sclerotic glomeruli, arteries and the capsule. Furthermore, we explicitly define a ‘background’ class, which consists of all tissue that does not fall into one of the included structures. We propose an adapted U-net, which has shown excellent results on segmentation tasks, especially in microscopic imaging.

2 Methods

We require highly detailed segmentation of tubular structures, as we need to be able to locate and count the immune cells within. To this end, we opted for a multi-task setting in which we simultaneously perform an 8-class structure segmentation and a 2-class tubulus border segmentation. The border ground truth maps were created by extracting the contour from each tubulus annotation and dilating it with a disc filter with a radius of 3 pixels.
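The border ground truth construction can be sketched in a few lines. This is a minimal illustration on a binary mask stored as a list of lists, not the implementation used for the experiments; the function names and the 4-neighbourhood definition of the contour are assumptions made for this example.

```python
def disc_offsets(radius):
    """Offsets (dy, dx) that form a disc-shaped structuring element."""
    return [(dy, dx)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)
            if dy * dy + dx * dx <= radius * radius]


def contour(mask):
    """Foreground pixels touching background or the image edge (assumed 4-neighbourhood)."""
    h, w = len(mask), len(mask[0])
    edge = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    edge[y][x] = 1
                    break
    return edge


def dilate(mask, radius):
    """Binary dilation with a disc of the given pixel radius."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    offsets = disc_offsets(radius)
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for dy, dx in offsets:
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        out[ny][nx] = 1
    return out


def border_map(tubulus_mask, radius=3):
    """Contour of one tubulus annotation, dilated with a disc of radius 3."""
    return dilate(contour(tubulus_mask), radius)


# Toy example: an 11x11 square "tubulus" inside a 21x21 image.
toy = [[1 if 5 <= y <= 15 and 5 <= x <= 15 else 0 for x in range(21)]
       for y in range(21)]
toy_border = border_map(toy)
```

In this toy example the border band covers pixels within three pixels of the square's outline, while the centre of the square stays outside the border class.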

We modified the original U-net by adding a second decoder pathway before the fourth max-pooling layer. The outputs of the structure and border segmentations for both decoders were separately summed to obtain the output of the network before the soft-max layer. The addition of the second decoder is schematically drawn in Fig. 2. The skip-connections were added to the second decoder in similar fashion as the original implementation. Utilizing max-pooling layers can result in loss of information, which is detrimental for precise segmentation. We hypothesize that adding the second decoder alleviates this problem. After only three max-pooling operations, the first decoder has retained more information. This is especially important at instance boundaries. Simultaneously, the second decoder has a greater receptive field, which is important for correctly classifying larger structures.
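The fusion of the two decoders can be illustrated for a single pixel. The sketch below only shows the summation of the pre-soft-max outputs per task followed by a soft-max; the function names and the example logits are hypothetical, and the convolutional layers themselves are omitted.

```python
import math


def softmax(logits):
    """Numerically stable soft-max over a list of class logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_decoders(logits_first, logits_second):
    """Sum the pre-soft-max outputs of both decoders for one pixel and one task."""
    summed = [a + b for a, b in zip(logits_first, logits_second)]
    return softmax(summed)


# Hypothetical logits for one pixel: 8 structure classes and 2 border classes.
structure_first = [0.2, 1.5, 0.1, 0.0, -0.3, 0.4, 0.0, 0.1]
structure_second = [0.1, 2.0, 0.3, 0.1, -0.1, 0.2, 0.1, 0.0]
border_first = [1.0, 0.0]
border_second = [0.0, 1.0]

# Structure and border outputs are fused separately, one soft-max per task.
structure_probs = fuse_decoders(structure_first, structure_second)
border_probs = fuse_decoders(border_first, border_second)
```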

The structure segmentation results are used as a basis for the immune cell detection by isolating the tubular structures. We used the same adapted U-net for the immune cell detection task. To generate a ground truth, we converted immune cell annotations, which were dots roughly located at the center, to circular structures with a radius of ten pixels. An overview of all steps performed is shown in Fig. 1.
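Converting dot annotations into a pixel-wise ground truth amounts to drawing a filled disc around each dot. The following is a minimal sketch of that step, assuming dots are given as (row, column) pixel coordinates; the function name is hypothetical.

```python
def dots_to_discs(dots, height, width, radius=10):
    """Rasterise dot annotations as filled discs of the given pixel radius."""
    mask = [[0] * width for _ in range(height)]
    r2 = radius * radius
    for cy, cx in dots:
        # Only visit the bounding box of the disc, clipped to the image.
        for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
            for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
                if (y - cy) ** 2 + (x - cx) ** 2 <= r2:
                    mask[y][x] = 1
    return mask


# One annotated cell in the middle of a 41x41 patch.
cell_mask = dots_to_discs([(20, 20)], height=41, width=41)
cell_area = sum(sum(row) for row in cell_mask)
```

A disc of radius ten covers a little over 300 pixels, which gives a sense of the size of one ground truth cell.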

During training, batches were filled by uniformly sampling pixels across classes from the WSIs. We generated the patches from the WSI, centered around the sampled pixels. The resulting patches still showed an imbalance of class occurrences, because the classes differ in size and frequency. To account for this, we applied a loss weight to each pixel based on its class. Per batch we calculate the pixel-weight for each class as follows:

with \(P_{c}\) as the weight for class c and \(N_{c}\) as the number of pixels of class c in the batch.
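A per-batch class weighting of this kind can be sketched as follows. The exact weighting formula is not reproduced in this text, so the example assumes one common choice, \(P_{c} = 1 - N_{c} / \sum_{k} N_{k}\), purely for illustration; the function name is hypothetical.

```python
from collections import Counter


def class_weights(batch_labels, num_classes):
    """Per-batch class weights.

    ASSUMPTION: P_c = 1 - N_c / sum_k N_k, so frequent classes get a lower
    weight. This form is chosen for illustration only.
    """
    counts = Counter(batch_labels)
    total = sum(counts.values())
    if total == 0:
        return [1.0] * num_classes
    return [1.0 - counts.get(c, 0) / total for c in range(num_classes)]


# Flattened labels of a tiny batch: three pixels of class 0, one of class 1.
weights = class_weights([0, 0, 0, 1], num_classes=3)
```

Under this assumed form, a class that fills most of the batch contributes little per pixel, while a rare class is weighted close to one.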

We separately calculated the border segmentation loss and the structure segmentation loss, leading to the overall loss formula:

where \(L_{s}\) and \(L_{b}\) represent the structure and border loss terms, respectively. \(||W||^{2}_{2}\) denotes the \(\ell _{2}\) regularization term on all weights W. The \(L_{O}\) terms represent the border and structure segmentation results of the individual decoders. We add these terms to force both decoder branches to learn discriminative features. The term \(\omega _{O}\) is halved after each epoch, until it becomes so small that it is essentially dropped from the loss function, leaving only the \(L_{s}\), \(L_{b}\) and regularization terms. We use cross-entropy for all loss terms, incorporating the \(P_{c}\) class weight:

with \(p_{x}\) as the classification output after applying a soft-max, and N as the number of pixels in X.
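The interplay of the loss terms can be sketched under stated assumptions. The example assumes that the class-weighted cross-entropy is the mean over pixels of \(-P_{c} \log p_{x}\) for the true class c of pixel x, and that the overall loss is a plain sum of \(L_{s}\), \(L_{b}\), the \(\omega _{O}\)-weighted auxiliary terms and the regularization penalty. Both forms, and all function names, are assumptions for illustration.

```python
import math


def weighted_cross_entropy(probs, labels, class_weight):
    """Assumed form: mean over pixels of -P_c * log(p_x[c]) for the true class c."""
    total = 0.0
    for p, c in zip(probs, labels):
        total -= class_weight[c] * math.log(max(p[c], 1e-12))
    return total / len(labels)


def auxiliary_weight(initial_weight, epoch):
    """Weight of the per-decoder terms, halved after each completed epoch."""
    return initial_weight * 0.5 ** epoch


def total_loss(l_s, l_b, l_o_terms, epoch, initial_weight=1.0, l2_penalty=0.0):
    """Assumed additive combination of all loss terms."""
    return (l_s + l_b
            + auxiliary_weight(initial_weight, epoch) * sum(l_o_terms)
            + l2_penalty)


# Two pixels, two classes, uninformative predictions and unit class weights.
ce = weighted_cross_entropy([[0.5, 0.5], [0.5, 0.5]], [0, 1], [1.0, 1.0])
```

With halving per epoch, the auxiliary weight falls below one thousandth of its initial value after ten epochs, which matches the description of the term being effectively dropped.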

3 Dataset

3.1 Data Selection

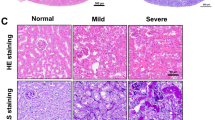

We used a dataset of biopsies originating from a clinical trial, studying the effect of the medicine Rituximab on the incidence of biopsy proven acute rejection within 6 months after transplantation [13]. All biopsies were taken at the Radboud University Medical Centre, Nijmegen, The Netherlands between December 2007 and June 2012. The slides are stained with periodic acid-Schiff (PAS) and digitized using 3D Histech’s Panoramic 250 Flash II scanner. We selected subsets of the dataset for the structure segmentation task and the immune cell detection task, which are described below.

3.2 Structure Segmentation

We selected a total of 24 WSIs from the dataset for the structure segmentation task. In each of the 24 slides, we randomly selected one or two rectangular regions, of approximately 4000 by 3000 pixels, in which all structures of interest were exhaustively annotated. After annotating all structures of interest, every unassigned pixel was placed in the ‘background’ class. Annotations were produced by a technician with expertise in renal biopsy histopathology and revised by a pathology resident, in consultation with an experienced nephropathologist. A total of 37 fully annotated rectangular areas were obtained.

3.3 Immune Cell Detection

Annotating immune cells is a difficult task, as it is hard to distinguish tubulus infiltrating lymphocytes from tubular epithelial cells [10]. We selected 5 slides to be fully annotated. There is no overlap between the slides for the structure segmentation and the immune cell detection. To obtain accurate data, we opted to make annotations in a PAS-stain and a CD3-stain of the same slide. First, lymphocytes were annotated in the PAS-stained section. This section was then re-stained with an immunohistochemical staining that shows presence of CD3, a surface marker only present on T-lymphocytes, which is the dominant immune cell population in tubular inflammation. After re-scanning of the slide, new annotations based on intratubular CD3-positivity were conducted. The annotation of both stains was performed by a technician with expertise in the field of renal biopsy histopathology. By intersecting the annotations from both stains, we made a third group of annotated immune cells, which were both visible in the PAS-stain and have been verified by the CD3-stain as truly being T-lymphocytes. Taking the intersection of the two sets resulted in a total amount of 891 immune cell annotations across the five slides. This third group was used for training of the immune cell detection.
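Intersecting two sets of dot annotations requires a rule for deciding when two dots mark the same cell. The text does not specify this rule, so the sketch below assumes a greedy nearest-neighbour match within a distance tolerance; the function name, the tolerance of ten pixels and the example coordinates are hypothetical.

```python
import math


def intersect_annotations(pas_dots, cd3_dots, max_distance=10.0):
    """Keep PAS annotations that have an unused CD3 annotation nearby.

    ASSUMPTION: greedy one-to-one matching by Euclidean distance.
    """
    unmatched = list(cd3_dots)
    confirmed = []
    for p in pas_dots:
        best, best_d = None, max_distance
        for q in unmatched:
            d = math.hypot(p[0] - q[0], p[1] - q[1])
            if d <= best_d:
                best, best_d = q, d
        if best is not None:
            confirmed.append(p)
            unmatched.remove(best)  # each CD3 dot confirms at most one PAS dot
    return confirmed


pas = [(10, 10), (50, 50), (100, 100)]
cd3 = [(12, 11), (100, 104), (200, 200)]
confirmed_cells = intersect_annotations(pas, cd3)
```

In the example, the PAS annotation at (50, 50) has no CD3-positive counterpart and is dropped, while the other two are kept.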

4 Experiments and Results

4.1 Implementation Details

A set of parameters was shared across the experiments. For the weights we used He-initialization [4]. The initial learning rate was set at 0.0005, using Adam optimization [5]. The learning rate was halved when no improvement occurred in 5 epochs. Square input patches were sampled from the WSIs with a size of 412 pixels. The structure segmentation task was trained and tested on images at 10x magnification and the immune cell detection task at 40x magnification. We trained the structure segmentation and immune cell detection networks for 60 and 50 epochs, respectively. An epoch consisted of 50 training iterations with batch size of 6. Training was performed on an NVIDIA Titan X GPU.
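The learning rate schedule can be sketched as a small helper that halves the rate after five epochs without improvement. The monitored quantity is not stated here, so the example assumes a validation loss where lower is better; the class name is hypothetical.

```python
class HalveOnPlateau:
    """Halve the learning rate when the monitored loss has not improved for `patience` epochs."""

    def __init__(self, learning_rate=0.0005, patience=5):
        self.learning_rate = learning_rate
        self.patience = patience
        self.best = float("inf")
        self.stale_epochs = 0

    def step(self, validation_loss):
        # ASSUMPTION: improvement means a strictly lower validation loss.
        if validation_loss < self.best:
            self.best = validation_loss
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
            if self.stale_epochs >= self.patience:
                self.learning_rate *= 0.5
                self.stale_epochs = 0
        return self.learning_rate


scheduler = HalveOnPlateau()
history = [scheduler.step(loss) for loss in [1.0, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]]
```

Here the loss improves once and then stalls for five epochs, so the rate drops from 0.0005 to 0.00025 at the last step.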

Visual example of the segmentation results. In the bottom image, the segmentation has been laid over the original image. The colors correspond to the following classes: light-blue/background, green/glomeruli, purple/sclerotic glomeruli, yellow/proximal tubuli, orange/distal tubuli and pink/atrophic tubuli. (Color figure online)

4.2 Structure Segmentation

We performed the segmentation task in 5-fold cross-validation, where we divided the dataset into nineteen slides for training and five slides for evaluation, for each fold. We assessed the performance of the structure segmentation by calculating the Dice score per class. Given the set of ground-truth pixels Y and the set of automatically segmented pixels X, the Dice score was calculated for each class separately, as follows:

\[ \mathrm{Dice}_{c} = \frac{2\,|X_{c} \cap Y_{c}|}{|X_{c}| + |Y_{c}|} \]

where c denotes the class. The overall Dice score is calculated by taking the average of the classes, weighted by the pixel-contribution of each class. Scores per class can be seen in Table 1. An example of the segmentation results is shown in Fig. 3. We demonstrate the efficacy of separate structure and border segmentation in Fig. 4. We can observe from these examples that our method was able to accurately separate the boundaries of the instances.
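The per-class and overall Dice computation can be sketched on flattened label maps. The example assumes that the pixel-contribution of a class is its share of ground-truth pixels; that weighting choice and the function names are assumptions for illustration.

```python
def dice_per_class(prediction, ground_truth, num_classes):
    """Dice score 2|X ∩ Y| / (|X| + |Y|) for every class present in either map."""
    scores = {}
    for c in range(num_classes):
        x = sum(1 for p in prediction if p == c)
        y = sum(1 for g in ground_truth if g == c)
        both = sum(1 for p, g in zip(prediction, ground_truth) if p == c and g == c)
        if x + y > 0:
            scores[c] = 2.0 * both / (x + y)
    return scores


def overall_dice(scores, ground_truth):
    """Average of class scores, weighted by ground-truth pixel counts (assumed)."""
    counts = {c: sum(1 for g in ground_truth if g == c) for c in scores}
    total = sum(counts.values())
    return sum(scores[c] * counts[c] for c in scores) / total


predicted = [0, 0, 1, 1, 1, 2]
reference = [0, 1, 1, 1, 2, 2]
class_scores = dice_per_class(predicted, reference, num_classes=3)
overall = overall_dice(class_scores, reference)
```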

4.3 Immune Cell Detection

We trained the immune cell detection network twice in 5-fold cross-validation on the dataset of five slides, using one slide for evaluation in each fold. After the first round of training, we identified tubular epithelial cells for which the network produced a high immune cell likelihood and annotated these as false positives. During the second training round, we sampled from these locations with higher probability. Post-processing was used to convert the probabilistic segmentations into detections. The likelihood maps were thresholded at a likelihood of 0.9. Subsequently, connected component analysis was performed to remove segmented components smaller than 300 pixels. We randomly picked a slide for tuning the post-processing and left it out of the evaluation. We report the precision, recall and \(F_{1}\)-score for each individual slide in Table 2. The annotations in the CD3-stain were used as the ground truth. The detection performance of the network is compared with the performance of the technician based on PAS-stain annotations. Despite the low scores, the \(F_{1}\)-score of our network is slightly higher than that of the technician.
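The post-processing and scoring steps can be sketched as follows: threshold the likelihood map, group the remaining pixels into connected components, discard components below the size limit and report one detection per component. The use of 4-connectivity, the centroid as detection location and the function names are assumptions for this example.

```python
from collections import deque


def detect_cells(likelihood, threshold=0.9, min_size=300):
    """Return centroids of thresholded connected components with at least min_size pixels."""
    h, w = len(likelihood), len(likelihood[0])
    seen = [[False] * w for _ in range(h)]
    detections = []
    for sy in range(h):
        for sx in range(w):
            if seen[sy][sx] or likelihood[sy][sx] < threshold:
                continue
            # Breadth-first flood fill over 4-connected neighbours (assumed).
            queue = deque([(sy, sx)])
            seen[sy][sx] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and likelihood[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_size:
                cy = sum(p[0] for p in pixels) / len(pixels)
                cx = sum(p[1] for p in pixels) / len(pixels)
                detections.append((cy, cx))
    return detections


def precision_recall_f1(true_pos, false_pos, false_neg):
    """Standard detection metrics from counts of matched and unmatched detections."""
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Toy likelihood map: one large confident blob, one small blob, one unsure blob.
toy_map = [[0.0] * 60 for _ in range(60)]
for y in range(5, 25):
    for x in range(5, 25):
        toy_map[y][x] = 0.95   # 400 pixels, kept
for y in range(40, 45):
    for x in range(40, 45):
        toy_map[y][x] = 0.95   # 25 pixels, removed as too small
for y in range(30, 50):
    for x in range(5, 25):
        toy_map[y][x] = 0.8    # below the threshold, removed
found = detect_cells(toy_map)
```

Only the large, confident component survives in the toy example. How detections are matched to ground-truth annotations is not covered by this sketch; the metric function simply takes the resulting counts.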

4.4 Discussion

In this paper, we presented a multi-task approach for accurately segmenting structures in renal tissue. We combined structure and border segmentation, which resulted in an accurate segmentation with separation of adjacent tubular instances. Our structure segmentation can be used as a basis for applications that can further help the pathologist in diagnostic decision making. As an example, detection and quantification of glomeruli can readily be implemented from our results. Some classes had few annotations, resulting in a lower Dice score for those classes. We built upon the structure segmentation by using it as the basis for the detection of intratubular immune cells. This case study was severely limited by the small dataset of five WSIs. In future work, we want to improve our immune cell detection by increasing the size and quality of our dataset. We plan to infer a tubular infiltration Banff grading from the immune cell detections, enabling us to compare our approach with the pathologists’ manual grading.

References

1. Chen, H., Qi, X., Yu, L., Heng, P.-A.: DCAN: deep contour-aware networks for accurate gland segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2487–2496 (2016)

2. Elshafie, M., Furness, P.N.: Identification of lesions indicating rejection in kidney transplant biopsies: tubulitis is severely under-detected by conventional microscopy. Nephrol. Dial. Transplant. 27(3), 1252–1255 (2011)

3. Gurcan, M.N., Boucheron, L.E., Can, A., Madabhushi, A., Rajpoot, N.M., Yener, B.: Histopathological image analysis: a review. IEEE Rev. Biomed. Eng. 2, 147–171 (2009)

4. He, K., Zhang, X., Ren, S., Sun, J.: Delving deep into rectifiers: surpassing human-level performance on ImageNet classification. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1026–1034 (2015)

5. Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

6. Litjens, G., et al.: A survey on deep learning in medical image analysis. arXiv preprint arXiv:1702.05747 (2017)

7. Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440 (2015)

8. Loupy, A., et al.: The Banff 2015 kidney meeting report: current challenges in rejection classification and prospects for adopting molecular pathology. Am. J. Transplant. 17(1), 28–41 (2017)

9. Pedraza, A., Gallego, J., Lopez, S., Gonzalez, L., Laurinavicius, A., Bueno, G.: Glomerulus classification with convolutional neural networks. In: Valdés Hernández, M., González-Castro, V. (eds.) MIUA 2017. CCIS, vol. 723, pp. 839–849. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-60964-5_73

10. Racusen, L.: Improvement of lesion quantitation for the Banff schema for renal allograft rejection. Transplant. Proc. 28, 489–490 (1996)

11. Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

12. Temerinac-Ott, M., et al.: Detection of glomeruli in renal pathology by mutual comparison of multiple staining modalities. In: 2017 10th International Symposium on Image and Signal Processing and Analysis (ISPA), pp. 19–24. IEEE (2017)

13. van den Hoogen, M.W., et al.: Rituximab as induction therapy after renal transplantation: a randomized, double-blind, placebo-controlled study of efficacy and safety. Am. J. Transplant. 15(2), 407–416 (2015)

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

de Bel, T., Hermsen, M., Litjens, G., van der Laak, J. (2018). Structure Instance Segmentation in Renal Tissue: A Case Study on Tubular Immune Cell Detection. In: Stoyanov, D., et al. Computational Pathology and Ophthalmic Medical Image Analysis. OMIA 2018, COMPAY 2018. Lecture Notes in Computer Science, vol 11039. Springer, Cham. https://doi.org/10.1007/978-3-030-00949-6_14

DOI: https://doi.org/10.1007/978-3-030-00949-6_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00948-9

Online ISBN: 978-3-030-00949-6

eBook Packages: Computer Science (R0)