Abstract

Due to the succinct nature of free-hand sketch drawings, sketch-based image retrieval (SBIR) has abundant practical use cases in consumer electronics. However, SBIR remains a long-standing unsolved problem mainly because of the significant discrepancy between the sketch domain and the image domain. In this work, we propose a Generative Domain-migration Hashing (GDH) approach, which for the first time generates hashing codes from synthetic natural images that are migrated from sketches. The generative model learns a mapping that the distributions of sketches can be indistinguishable from the distribution of natural images using an adversarial loss, and simultaneously learns an inverse mapping based on the cycle consistency loss in order to enhance the indistinguishability. With the robust mapping learned from the generative model, GDH can migrate sketches to their indistinguishable image counterparts while preserving the domain-invariant information of sketches. With an end-to-end multi-task learning framework, the generative model and binarized hashing codes can be jointly optimized. Comprehensive experiments of both category-level and fine-grained SBIR on multiple large-scale datasets demonstrate the consistently balanced superiority of GDH in terms of efficiency, memory costs and effectiveness (Models and code at https://github.com/YCJGG/GDH).

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The prevalence of touchscreen in consumer electronics (range from portable devices to large home appliance) facilitates human-machine interactions free-hand drawings. The input of sketches is succinct, convenient and efficient for visually recording ideas, and can beat hundreds of words in some scenarios. As an extended application based on sketches, sketch-based image retrieval (SBIR) [1, 9, 39, 40, 48, 55, 56] has attracted increasing attention.

The primary challenge in SBIR is that free-hand sketches are inherently abstract and iconic, which magnifies cross-domain discrepancy between sketches and real-world images. Recent works attempt to employ cross-view learning methods [4, 9, 13, 14, 29, 32, 34, 37,38,39] to address such a challenge, where the common practice is to reduce the domain discrepancy by embedding both sketches and natural images to a common space and use the projected features for retrieval. The most critical deficiency of this line of approaches is the learned mappings within each domain cannot be well-generalized to the test data, especially for categories with large variance. Similar to other image-based retrieval problems, the query time grows increasingly with the database size and exponentially with the dimension of sketch/image representations. To this end, Deep Sketch Hashing (DSH) [29] is introduced to replace the full-precision sketch/image representations with binary vectors. However, the quantization error introduced by the binarization procedure can destroy both domain-invariant information and the semantic consistency across domains.

In this work, our primary goal is to improve deficiencies in aforementioned works and provide a practical solution to the scalable SBIR problem. We propose a Generative Domain-migration Hashing (GDH) method that improves the generalization capability by migrating sketches into the natural image domain, where the distribution migrated sketches can be indistinguishable from the distribution of natural images. Additionally, we introduce an end-to-end multi-task learning framework that jointly optimizes the cycle consistent migration as well as the hash codes, where the adversarial loss and the cycle consistency loss can simultaneously preserve the semantic consistency of the hashing codes. GDH also integrates an attention layer that guides the learning process to focus on the most representative regions.

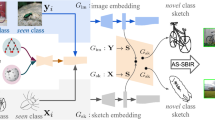

While SBIR aims to retrieve natural images that shares identical category labels with the query sketch, fine-grained SBIR aims to preserve the intra-category instance-level consistency in addition to the category-level consistency. For the consistency purpose, we refer to standard SBIR as category-level SBIR and the fine-grained version as fine-grained SBIR respectively throughout the paper. Since the bidirectional mappings learned in GDH are highly under-constrained (i.e., does not require the pixel-level alignment [15] between sketches and natural images), GDH can naturally provide an elegant solution for preserving the geometrical morphology and detailed instance-level characteristic between sketches and natural images. In addition, a triplet ranking loss is introduced to enhance the fine-grained learning based on visual similarities of intra-class instances. The pipeline of the proposed GDH method for both category-level and fine-grained SBIR tasks is illustrated in Fig. 1. Extensive experiments on various large-scale datasets for both category-level and fine-grained SBIR tasks demonstrate the consistently balanced superiority of GDH in terms of memory cost, retrieval time and accuracy. The main contributions of this work are as follows:

-

We for the first time propose a generative model GDH for the hashing-based SBIR problem. Comparing to existing methods, the generative model can essentially improve the generalization capability by migrating sketches into their indistinguishable counterparts in the natural image domain.

-

Guided by an adversarial loss and a cycle consistency loss, the optimized binary hashing codes can preserve the semantic consistency across domains. Meanwhile, training GDH does not require the pixel-level alignment across domains, and thus allows generalized and practical applications.

-

GDH can improve the category-level SBIR performance over the state-of-the-art hashing-based SBIR method DSH [29] by up to \(20.5\%\) on the TU-Berlin Extension dataset, and up to \(26.4\%\) on the Sketchy dataset respectively. Meanwhile, GDH can achieve comparable performance with real-valued fine-grained SBIR methods, while significantly reduce the retrieval time and memory cost with binary codes.

Illustration of our deep model for the domain-migration networks and compact binary codes learning. The domain-migration module consists of \(G_I\), \(G_S\), \(D_I\) and \(D_S\). The bottom right module is the hashing network H. The red arrows represent the cycle between real sketches and fake natural while the purple arrows represent the cycle between real natural images and fake sketches.

2 Related Work

In this section, we discuss the following four directions of related works.

Category-Level SBIR: The majority of existing category-level SBIR methods [4, 9, 13, 14, 22, 29, 32, 34, 37,38,39] rely on learning a common feature space for both sketches and natural images. However, learning such a common feature space based on that can end up with an overfitting solution to the training data.

Hashing-Based SBIR: If the learned common feature space is real-valued, the retrieval time depends on the database size, and the scalability of the algorithms can be consequently restrained. In order to to improve the efficiency, hashing-based methods [26, 28, 30, 31, 36, 42,43,44,45,46, 51, 61] are introduced to solve the SBIR problem. The state-of-the-art hashing-based SBIR method DSH [28] employed an end-to-end semi-heterogeneous CNNs to learn binarized hashing codes for retrieval. However, the generalization issue remains in DSH since the learned semi-heterogeneous CNNs are also non-linear mappings across the two domains.

Generative Adversarial Networks: The success of Generative Adversarial Networks (GANs) [10] in various image generation [6] and representation learning [33] tasks is inspiring in a way that sketches can be migrated into the natural image domain using the adversarial loss, where the migrated sketches cannot be distinguished from natural images. Image-to-image translation methods [16, 41] can serve this purpose and are capable of migrating sketches into natural images, however, the pixel-level alignment between each sketch and image pair required for training are impractical. In order to address such an issue, Zhu et al. [62] introduced a cycle consistency loss. In this work, we employ such a cycle consistency loss and force the bidirectional mappings to be consistent with each other. Benefiting from the highly under-constrained cycled learning, sketches can be migrated to their indistinguishable counterparts in the natural image domain.

Fine-Grained SBIR: Among a limited number of fine-grained SBIR methods [3, 20, 21, 35, 40, 48, 53,54,55], Yu et al. [55] proposed the multi-branch networks with triplet ranking loss, which preserved the visual similarities of intra-class sketch and natural image instances. In our work, we also exploit the triplet ranking loss for preserving the visual similarity of intra-class instances. With improved generalization capability to the test data and the binarized hashing codes, the proposed GDH method can achieve comparable performance with [55] on the fine-grained SBIR task, while requiring much less memory and retrieval time.

3 Generative Domain-Migration Hash

3.1 Preliminary

Given \(n_1\) training images \(I= \left\{ I_i \right\} ^{n_1}_{i=1}\) and \(n_2\) training sketches \(S= \left\{ S_i \right\} ^{n_2}_{i=1}\), the label vectors (row vectors) for all the training instances are \(Y^I = \left\{ \mathbf {y}^I_i \right\} ^{n_1}_{i=1}\in \{0, 1\}^{n_1\times c}\) and \(Y^S = \left\{ \mathbf {y}^S_i \right\} ^{n_2}_{i=1}\in \{0,1\}^{n_2\times c}\), respectively, where \(\mathbf {y}^I_i\) and \(\mathbf {y}^S_i\) are one-hot vectors and c is the number of classes. We aim to learn the migration from sketches to natural images, and simultaneously learn the hashing function \(\mathbf {H} : \{I, I_{fake} \} \rightarrow \left\{ -1,+1 \right\} ^K\), where K is the length of hash codes. Such that the semantic consistency can be preserved between the extracted hashing codes of both authentic and generated natural images.

3.2 Network Architecture

To serve the above purposes, we simultaneously optimize a pair of generative and discriminative networks and a hashing network.

Generative Networks: Let \(G_I\) and \(G_S\) be two parallel generative CNNs for migrating sketches to the natural images and vice versa: \(G_I: S \rightarrow I\) and \(G_S: I \rightarrow S\). Considering natural images contain much more information than their sketch counterparts, migrating sketches to natural images is essentially an upsampling process and potentially requires more parameters.

In order to suppress the background information and guide the learning process to concentrate on the most representative regions, we integrate an attention module [47, 60] in \(G_S\). The attention module contains a convolutional layer with \(1\times 1\) kernel size, where a softmax function with a threshold is applied to the output for obtaining a binary attention mask. Element-wise \(\odot \) multiplication can be performed between the binary attention mask and the feature map from ResBlocks.

Discriminative Networks: Along with two generators, two discriminative networks are correspondingly integrated in GDH, where \(D_I\) aims to distinguish the images with its mask (\(I \odot mask\)) and the generated images \(G_I\left( S\right) \), and \(D_S\) aims to distinguish the real sketches S and the generated sketches \(G_S\left( I\right) \).

Hashing Network: The hashing network \(\mathbf {H}\) aims to generate binary hashing codes of both real images I and generated images \(G_I\left( S\right) \), and can be trained based on both real image with its mask (\(I \odot mask\)) and generated image \(G_I\left( S\right) \) from the domain-migration network. The hashing network \(\mathbf {H}\) is modified from the 18-layer Deep Residual Network (Resnet) [12] by replacing the softmax layer with a fully-connected layer with a binary constraint on the values, where the dimension of the fully-connected layer equals to the length of the hashing codes.

We denote the parameters of the shared-weight hashing network as \(\varvec{\theta }_H\). For natural images and sketches, we formulate the deep hash function (i.e., the Hashing network) as \(\mathbf {B}^I = {\mathrm {sgn}}(\mathbf {H} (I \odot mask;\varvec{\theta }_H)) \in \{0, 1\}^{n_1 \times K}\) and \(\mathbf {B}^S ={\mathrm {sgn}}(\mathbf {H} (G_I (S);\varvec{\theta }_H)) \in \{0, 1\}^{n_2 \times K}\), respectively, where \({\mathrm {sgn}}(\cdot )\) is the sign function. Note that we use the row vector of the output for the convenience of computation. In the following section, we will introduce the deep generative hashing objective of joint learning of binary codes and hash functions.

We denote the parameters of the shared-weight hashing network as \(\varvec{\theta }_H\). For natural images and sketches, we formulate the deep hash function (i.e., the Hashing network) as \(\mathbf {B}^I = {\mathrm {sgn}}(\mathbf {H} (I \odot mask;\varvec{\theta }_H)) \in \{0, 1\}^{n_1 \times K}\) and \(\mathbf {B}^S ={\mathrm {sgn}}(\mathbf {H} (G_I (S);\varvec{\theta }_H)) \in \{0, 1\}^{n_2 \times K}\), respectively, where \({\mathrm {sgn}}(\cdot )\) is the sign function. Note that we use the row vector of the output for the convenience of computation. In the following section, we will introduce the deep generative hashing objective of joint learning of binary codes and hash functions.

3.3 Objective Formulation

There are five losses in our objective function. The adversarial loss and the cycle consistency loss guide the learning of the domain-migration network. The semantic and triplet losses preserve the semantic consistency and visual similarity of intra-class instances across domains. The quantization loss and unification constraint can preserve the feature space similarity of pair instances. Detailed discussion of each loss is provided in following paragraphs.

Adversarial and Cycle Consistency Loss: Our domain-migration networks are composed of four parts: \(G_I\), \(G_S\), \(D_I\) and \(D_S\) [62]. We denote the parameters of \(G_I\), \(G_S\), \(D_I\) and \(D_S\) as \(\varvec{\theta }_C\). Specifically, \(\varvec{\theta }_C|_{G_I}\) is the parameter of \(G_I\) and so forth. Note that the inputs of domain-migration networks should be image-sketch pairs and usually we have \(n_1 \gg n_2\). Thus we reuse the sketches from same category to match the images. Sketches from the same category are randomly repeated and S will be expanded to \(\hat{S} = \{ S_1,\cdots ,S_1, S_2 \cdots ,S_2, \cdots , S_{n_2},\cdots ,S_{n_2}\}\) to make sure \(|\hat{S}| = |I|\). Suppose the data distributions are \(I \sim p_{I}\) and \(\hat{S} \sim p_{\hat{S}}\). For the generator \(G_I : \hat{S}\rightarrow I\) and its discriminator \(D_I\), the adversarial loss can be written as

where the generator and the discriminator compete in a two-player minimax game: the generator tries to generate images \(G_I(\hat{S})\) that look similar to the images from domain I and its corresponding mask, while the discriminator tries to distinguish between real images and fake images. The adversarial loss of the other mapping function \(G_S : I\rightarrow \hat{S}\) is defined in the similar way. The Cycle Consistency Loss can prevent the learned mapping function \(G_I\) and \(G_S\) from conflicting against each other, which can be expressed as

where \(\Vert \cdot \Vert \) is the Frobenius norm. The full optimization problem for domain-migration networks is

We set the balance parameter \(\upsilon = 10\) in the experiment according to the previous work [62].

Semantic Loss: The label vectors of images and sketches are \(Y^I\) and \(Y^S\). Inspired by Fast Supervised Discrete Hashing [11], we consider the following semantic factorization problem with the projection matrix \(\mathbf {D} \in \mathbb {R}^{c\times K}\):

\(\mathcal {L}_{sem}\) aims to minimize the distance between the binary codes of the same category, and maximize the distance between the binary codes of different categories.

Quantization Loss: The quantization loss is introduced to preserve the intrinsic structure of the data, and can be formulated as follows:

Triplet Ranking Loss: For the fine-grained retrieval task, we integrate the triplet ranking loss into the objective function for preserving the similarity of paired cross-domain instances within an object category. For a given triplet \(\left( S_i,I_i^+,I_i^-\right) \), specifically, each triplet contains a query sketch \(S_i\) and a positive image sample \(I_i^+\) and a negative image sample \(I_i^-\). We define the triplet ranking loss function as follow:

where the parameter \(\Delta \) represents the margin between the similarities of the outputs of the two pairs \((S_i, I_i^+)\) and \((S_i, I_i^-)\). In other words, the hashing network ensures that the Hamming distance between the outputs of the negative pair \((S_i, I_i^-)\) is larger than the Hamming distance between the outputs of the positive pair \((S_i, I_i^+)\) by at least a margin of \(\Delta \). In this paper, we let \(\Delta \) equal to half of the code length (i.e., \(\Delta =0.5K\)).

Full Objective Function: We also desire the binary codes of a real natural image and a generated image to be close to each other. Thus, we employ a unification constraint \(\mathcal {L}_{c} = \Vert \mathbf {H} ( I ;\varvec{\theta }_H ) -\mathbf {H} (G_I (\hat{S}, \varvec{\theta }_C|_{G_I});\varvec{\theta }_H) \Vert ^2\) is added to the final objective function which is formulated as follows:

where \(\lambda \) is a control parameter, which equals 1 for fine-grained task and equals 0 for semantic-level SBIR only, The hyper-parameters \(\alpha \) and \(\beta \) control the contributions of the two corresponding terms.

3.4 Joint Optimization

Due to the non-convexity of the joint optimization and NP-hardness to output the discrete binary codes, it is infeasible to find the global optimal solution. Inspired by [11], we propose an optimization algorithm based on alternating iteration and sequentially optimize one variable while the others are fixed. In this way, variables \(\mathbf {D}\), \(\mathbf {B}^I\), \(\mathbf {B}^S\), parameter \(\varvec{\theta }_C\) of the domain-migration networks, and parameter \(\varvec{\theta }_H\) of the hash function will be iteratively updated.

\(\mathbf {D}\)-Step. By fixing all the variables except \(\mathbf {D}\), Eq. (7) can be simplified as a classic quadratic regression problem:

where \(\mathbf {I}\) is the identity matrix. Taking the derivative of the above function with respect to \(\mathbf {D}\) and setting it to zero, we have the analytical solution to Eq. (8):

\(\mathbf {B}^I\)-Step. When all the variables are fixed except \(\mathbf {B}^I\), we rewrite Eq. (7) as

Since \(tr\left( {\mathbf {B}^I}^{\top } \mathbf {B}^I\right) \) is a constant, Eq. (10) is equivalent to the following problem:

For \(\mathbf {B}^I \in \{-1,+1\}^{n_1 \times K} \), \(\mathbf {B}^I\) has a closed-form solution as follows:

\(\mathbf {B}^S\)-Step. Considering all the terms related to \(\mathbf {B}^S\), it can be learned by a similar formulation as Eq. (12):

\((\varvec{\theta }_C,\varvec{\theta }_H)\)-Step. After the optimization for \(\mathbf {D}\), \(\mathbf {B}^I\) and \(\mathbf {B}^S\), we update the network parameters \(\varvec{\theta }_C\) and \(\varvec{\theta }_H\) according to the following loss:

We train our networks on I and \(\hat{S}\), where the sketch-image pairs are randomly select to compose of the mini-batch, and then backpropagation algorithm with SGD is adopted for optimizing two networks. In practice, we use deep learning frameworks (e.g., Pytorch) to achieve all the steps. We iteratively update \(\mathbf {D}\rightarrow \mathbf {B}^I \rightarrow \mathbf {B}^S \rightarrow \{\varvec{\theta }_C ,\varvec{\theta }_H\}\) in each epoch. As such, GDH can be finally optimized within L epochs, where \(20 \le L \le 30\) in our experiment. The algorithm of GDH is illustrated in Algorithm 1.

Once GDH model is learned, for a given query sketch \(s_q\), we can infer its binary code \(\mathbf {b}^{s_q} = {\mathrm {sgn}}\left( \mathbf {H}\left( G_I\left( S_q, \varvec{\theta }_C|_{G_I} \right) ;\varvec{\theta }_H \right) \right) \) through the \(G_I\) network and the hash network \(\mathbf {H}\). For the image gallery, the hash codes \(\mathbf {b}^I = {\mathrm {sgn}}\left( \mathbf {H}\left( I \odot mask ;\varvec{\theta }_H\right) \right) \) of each image is computed through the hash network, where mask can be easily obtained by \(G_S\left( I;\varvec{\theta }_C|_{G_S}\right) \). Note that fake images generated by \(G_I\left( S_q, \varvec{\theta }_C|_{G_I} \right) \) are non-background and thus they don’t need multiply mask before feed into the hashing network.

4 Experiments and Results

In the experiment section, we aim to address the following three questions:

-

How does GDH perform as compared to other state-of-the-art binary or real-valued methods for category-level SBIR?

-

How does GDH perform as compared to other state-of-the-art real-valued methods for fine-grained SBIR?

-

How does each component or constraint contribute to the overall performance of GDH?

4.1 Datasets and Settings

Category-Level Retrieval. GDH is evaluated on two largest SBIR datasets: Sketchy [40] and TU-Berlin [8] Extension. The Sketchy database contains 125 categories with 75,471 sketches of 12,500 object images. We additionally utilize another 60,502 natural images [29] collected from ImageNet [5]. Hence, the whole image database contains 73,002 images in total. TU-Berlin is a sketch dataset with 250 object categories, where each category contains 80 sketches. An additional 204,489 natural images associated with TU-Berlin provided by [59] are used to construct the image database. Similar to previous hashing experiments [29], 50 and 10 sketches are respectively selected as the query sets for TU-Berlin and Sketchy, where the remaining are used as the gallery for training.

We compare GDH with 8 existing category-level SBIR methods, including 4 hand-crafted methods: LSK [38], SEHLO [37], GF-HOG [13] and HOG [4]; and 4 deep learning based methods: 3D shape [50], Sketch-a-Net (SaN) [56], GN Triplet [40] and Siamese CNN [35]. Furthermore, we also compare GDH with 7 state-of-the-art cross-modality hashing methods: Collective Matrix Factorization Hashing (CMFH) [7], Cross-Model Semi-Supervised Hashing (CMSSH) [2], Cross-View Hashing(CVH) [19], Semantic Correlation Maximization (SCMSeq and SCM-Orth) [57], Semantics-Preserving Hashing(SePH) [25], Deep Cross-Modality Hashing (DCMH) [17] and Deep Sketch Hash (DSH) [29]. Finally, we also compare our method to other four cross-view feature embedding methods: CCA [49], PLSR [27], XQDA [24] and CVFL [52]. The implementation details and experimental results of above methods are reported in [29].

We use the Adam solver [18] with a batch size of 32. Our balance parameters are set to \(\alpha =10^{-5}, \beta =10^{-5}\) and \(\lambda =0\) for both datasets. All networks are trained with an initial learning rate \(lr=0.0002\). After 25 epochs, we decrease the learning rate of the hashing network \(lr\rightarrow 0.1lr\) and terminate the optimization after 30 epochs for both datasets. Our method is implemented by Pytorch with dual 1080Ti GPUs and an i7-4790K CPU.

Fine-Grained Retrieval. We conduct experiments of GDH on the QMUL-Shoes and QMUL-Chairs datasets [55]. The two datasets are fine-grained instance-level SBIR datasets which contain 419 shoes sketch-photo pairs and 297 chairs sketch-photo pairs, respectively.

We compare our proposed GDH method with several fine-grained methods including 2 hand-crafted methods: HOG+BoW+RankSVM [23] and Dense HOG+RankSVM [55], and 3 deep feature baselines: Improved Sketch-a-Net (ISN) [56], 3D shape (3DS) [50] and Triplet Sketch-a-Net (TSN) [55]. All of these algorithms are real-valued methods. It is noteworthy that the networks in TSN [55] are heavily pre-trained and the data have been processed by complex augmentation. However, to emphasize the ability of our domain-migration model, data augmentation is not included in our experiment.

Note that, QMUL-Shoes and QMUL-Chairs are unique fine-grained datasets, in which only contains one category for each of them. Therefore, it is unnecessary to optimize the semantic loss in Eq. (7). To better fit the task of fine-grained retrieval, we skip the first five steps in Algorithm 1 and directly update the parameters of \(\varvec{\theta }_C\) and \(\varvec{\theta }_H\). Our balance parameters are set to \( \lambda =1\). The implementation details are the same as the settings for category-level retrieval.

4.2 Results and Discussions

Comparison with Category-Level SBIR Baselines. We compare our GDH method with the 10 baseline methods in terms of Mean Average Precision (MAP), retrieval time and memory cost on two datasets. The code lengths of outputs are 32, 64 and 128 bits. As reported in Table 1, GDH consistently achieves the best performance with much faster query time and much lower memory cost compared to other SBIR methods on both datasets. Also, GDH largely improves the state-of-the-art performance of Triplet-AlexNet by 24.2% and 23.7% on the TU-Berlin and Sketchy datasets, respectively. The performance of 128 bits is lower than the performance of 64 bits can be explained with the quantization error accumulation [44]. We also notice that the performance of compared methods on both datasets is much lower than reported in previous papers [50, 55]. The reason is that the data they previously used are all well-aligned with perfect background removal and the edge of objects can almost fit the sketches. Meanwhile, our experiments adopt realistic images with complicated background, which are greatly different from sketches.

Comparison with Cross-Modality Hashing. In Table 2, we compare our GDH method with cross-modality hashing/feature learning methods with 32, 64 and 128 bits binary codes. We use the learned deep features as the inputs for non-end-to-end learning methods for a fair comparison with GDH. GDH achieves the best performance compared to all the cross-modality baselines on both datasets. Specifically, GDH can outperform the best-performing hashing-based SBIR method DSH [29] by 20.5%/7.1%, 16.9%/10% and 8.1%/0.1% at different code lengths on both datasets, respectively.

Comparison for Fine-Grained SBIR. In Table 3, we report the top-1 and top-10 accuracies of GDH over other five methods on the Shoes and Chairs datasets for fine-grained SBIR. Compared to the state-of-the-art real-valued TSN (without data augmentation), the 128-bit GDH achieves 2.7%/2.7% and 2.6%/3.4% improvements in terms of top-1 and top-10 accuracies on both the Shoes and Chairs datasets respectively. Specifically, the top-10 accuracy on the Chairs dataset reaches 99%, which is even higher than the performance of TSN with data augmentation.

Remark. For fine-grained SBIR, despite binary hashing codes are used, comparable or even improved performance over the real-valued state-of-the-art methods can be observed in Table 3. On the other side, the binary codes in GDH allow much reduced memory costs and retrieval time than the real-valued approaches. However, GDH generally shows degraded performance on the fine-grained SBIR when comparing to its performance on category-level SBIR. Our explanation towards such a phenomenon is that the geometrical morphology and detailed instance-level characteristic within a category can be much more difficult to capture with binary hashing codes than the inter-category discrepancies. In Fig. 2, some examples based on the retrieval results of GDH are illustrated. More illustrations and experiments can be found in the Supplementary Material.

4.3 Ablation Study

We demonstrate the effectiveness of each loss component of GDH in Table 4. The detailed descriptions of the unification constraint \(\mathcal {L}_{c}\), the quantization loss \(\mathcal {L}_{q}\) and the adversarial and cycle consistent loss \(\mathcal {L}_{gan}\) are provided in Sect. 3.3. It can be observed that all these components are complementary and beneficial to the effectiveness of GDH. Especially, the adversarial and cycle consistent loss \(\mathcal {L}_{gan}\) and the quantization loss \(\mathcal {L}_{q}\) are equivalently critical for category-level SBIR, and he triplet ranking loss \(\mathcal {L}_{tri}\) is essential for fine-grained SBIR. It can also be observed that the attention layer is consistently effective for improving the overall performance with a stable margin.

Inspired by the mix-up operation [58], in order to further reduce the domain discrepancy, we propose a feature fusion method that employs a linear mix-up of two types of hashing binary codes: (1) \({\mathrm {sgn}}(\frac{1}{2} \mathbf {H} ( G_I ( G_S(I_i, \varvec{\theta }_C|_{G_I}), \varvec{\theta }_C|_{G_S} ); \varvec{\theta }_H ) + \frac{1}{2} \mathbf {H} ( I_i; \varvec{\theta }_H ))\) and (2) \({\mathrm {sgn}}(\mathbf {H} \left( G_I\left( S_i, \varvec{\theta }_C|_{G_I} \right) ; \varvec{\theta }_H \right) )\). Besides the linear embedding, we also evaluated other fusion strategies such as concatenation and the Kronecker product. However, none of these fusion methods is helpful. In Fig. 3, we illustrate that the generated sketches of GDH can well represent corresponding natural images. It is obviously observed that using sketches to generate fake natural images are more difficult than the inverse generation. Additionally, we conduct another experiment in the sketch domain rather than the natural image domain. By using a similar hashing technique in the sketch domain, all the sketches S and the corresponding generated fake sketches \(G_S(I)\) are embedded into the Hamming space as \(\mathbf {H}(S_i) = \mathbf {H} \left( S_i; \varvec{\theta }_H \right) \) and \(\mathbf {H}(I_i) = \mathbf {H} \left( G_S\left( I_i \right) ; \varvec{\theta }_H \right) \). However, it resulted in a dramatically decreased performance, especially when handling images that have complex backgrounds.

5 Conclusion

In this paper, we proposed a Generative Domain-migration Hashing method for both category-level and fine-grained SBIR tasks. Instead of mapping sketches and natural images into a common space, GDH for the first time employs a generative model that migrates sketches to their indistinguishable counterparts in the natural image domain. Guided by the adversarial loss and the cycle consistency loss, robust hashing codes for both real and synthetic images (i.e., migrated from sketches) can be obtained with an end-to-end multi-task learning framework that does not rely on the pixel-level alignment between cross-domain pairs. We additionally integrated an attention layer to effectively suppress the background information and guide the learning process of GDH to concentrate on the most critical regions. Extensive experiments on large-scale datasets demonstrated the consistently balanced superiority of GDH in terms of efficiency, memory costs and performance on both category-level and fine-grained SBIR tasks. GDH also outperformed the best-performing hashing-based SBIR method DSH [29] by up to \(20.5\%\) on the TU-Berlin Extension dataset, and up to \(26.4\%\) on the Sketchy dataset respectively.

References

Bozas, K., Izquierdo, E.: Large scale sketch based image retrieval using patch hashing. In: Bebis, G., et al. (eds.) ISVC 2012. LNCS, vol. 7431, pp. 210–219. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33179-4_21

Bronstein, M.M., Bronstein, A.M., Michel, F., Paragios, N.: Data fusion through cross-modality metric learning using similarity-sensitive hashing. In: The Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2010, San Francisco, CA, USA, 13–18 June 2010, pp. 3594–3601 (2010)

Bui, T., Ribeiro, L., Ponti, M., Collomosse, J.P.: Generalisation and sharing in triplet convnets for sketch based visual search. CoRR abs/1611.05301 (2016)

Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), San Diego, CA, USA, 20–26 June 2005, pp. 886–893 (2005)

Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: ImageNet: a large-scale hierarchical image database. In: Proceedings of CVPR, pp. 248–255 (2009)

Denton, E.L., Chintala, S., Fergus, R., et al.: Deep generative image models using a Laplacian pyramid of adversarial networks. In: Advances in Neural Information Processing Systems, pp. 1486–1494 (2015)

Ding, G., Guo, Y., Zhou, J.: Collective matrix factorization hashing for multimodal data. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2014, Columbus, OH, USA, 23–28 June 2014, pp. 2083–2090 (2014)

Eitz, M., Hays, J., Alexa, M.: How do humans sketch objects? ACM Trans. Graph. 31(4), 44:1–44:10 (2012)

Eitz, M., Hildebrand, K., Boubekeur, T., Alexa, M.: An evaluation of descriptors for large-scale image retrieval from sketched feature lines. Comput. Graph. 34(5), 482–498 (2010)

Goodfellow, I., et al.: Generative adversarial nets. In: Advances in Neural Information Processing Systems, pp. 2672–2680 (2014)

Gui, J., Liu, T., Sun, Z., Tao, D., Tan, T.: Fast supervised discrete hashing. IEEE Trans. Pattern Anal. Mach. Intell. 40(2), 490–496 (2018)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016, pp. 770–778 (2016)

Hu, R., Barnard, M., Collomosse, J.P.: Gradient field descriptor for sketch based retrieval and localization. In: Proceedings of the International Conference on Image Processing, ICIP 2010, Hong Kong, China, 26–29 September 2010, pp. 1025–1028 (2010)

Hu, R., Collomosse, J.P.: A performance evaluation of gradient field HOG descriptor for sketch based image retrieval. Comput. Vis. Image Underst. 117(7), 790–806 (2013)

Isola, P., Zhu, J., Zhou, T., Efros, A.A.: Image-to-image translation with conditional adversarial networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017, pp. 5967–5976 (2017)

Isola, P., Zhu, J.Y., Zhou, T., Efros, A.A.: Image-to-image translation with conditional adversarial networks (2017)

Jiang, Q., Li, W.: Deep cross-modal hashing. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017, pp. 3270–3278 (2017)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. CoRR abs/1412.6980 (2014)

Kumar, S., Udupa, R.: Learning hash functions for cross-view similarity search. In: Proceedings of the 22nd International Joint Conference on Artificial Intelligence, IJCAI 2011, Barcelona, Catalonia, Spain, 16–22 July 2011, pp. 1360–1365 (2011)

Li, K., Pang, K., Song, Y., Hospedales, T.M., Xiang, T., Zhang, H.: Synergistic instance-level subspace alignment for fine-grained sketch-based image retrieval. IEEE Trans. Image Process. 26(12), 5908–5921 (2017)

Li, K., Pang, K., Song, Y., Hospedales, T.M., Zhang, H., Hu, Y.: Fine-grained sketch-based image retrieval: the role of part-aware attributes. In: 2016 IEEE Winter Conference on Applications of Computer Vision, WACV 2016, Lake Placid, NY, USA, 7–10 March 2016, pp. 1–9 (2016)

Li, Y., Hospedales, T.M., Song, Y., Gong, S.: Intra-category sketch-based image retrieval by matching deformable part models. In: British Machine Vision Conference, BMVC 2014, Nottingham, UK, 1–5 September 2014 (2014)

Li, Y., Hospedales, T.M., Song, Y., Gong, S.: Free-hand sketch recognition by multi-kernel feature learning. Comput. Vis. Image Underst. 137, 1–11 (2015)

Liao, S., Hu, Y., Zhu, X., Li, S.Z.: Person re-identification by local maximal occurrence representation and metric learning. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston, MA, USA, 7–12 June 2015, pp. 2197–2206 (2015)

Lin, Z., Ding, G., Hu, M., Wang, J.: Semantics-preserving hashing for cross-view retrieval. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston, MA, USA, 7–12 June 2015, pp. 3864–3872 (2015)

Liong, V.E., Lu, J., Wang, G., Moulin, P., Zhou, J.: Deep hashing for compact binary codes learning. In: Proceedings of CVPR, pp. 2475–2483 (2015)

Liu, H., Ma, Z., Han, J., Chen, Z., Zheng, Z.: Regularized partial least squares for multi-label learning. Int. J. Mach. Learn. Cybern. 9(2), 335–346 (2018)

Liu, L., Shao, L., Shen, F., Yu, M.: Discretely coding semantic rank orders for image hashing. In: Proceedings of CVPR, pp. 1425–1434 (2017)

Liu, L., Shen, F., Shen, Y., Liu, X., Shao, L.: Deep sketch hashing: fast free-hand sketch-based image retrieval. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017, pp. 2298–2307 (2017)

Liu, W., Mu, C., Kumar, S., Chang, S.F.: Discrete graph hashing. In: Proceedings of NIPS, pp. 3419–3427 (2014)

Liu, W., Wang, J., Ji, R., Jiang, Y.G., Chang, S.F.: Supervised hashing with kernels. In: Proceedings of CVPR, pp. 2074–2081 (2012)

Lowe, D.G.: Object recognition from local scale-invariant features. In: ICCV, pp. 1150–1157 (1999)

Mathieu, M.F., Zhao, J.J., Zhao, J., Ramesh, A., Sprechmann, P., LeCun, Y.: Disentangling factors of variation in deep representation using adversarial training. In: Advances in Neural Information Processing Systems, pp. 5040–5048 (2016)

Parui, S., Mittal, A.: Similarity-invariant sketch-based image retrieval in large databases. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014, Part VI. LNCS, vol. 8694, pp. 398–414. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10599-4_26

Qi, Y., Song, Y., Zhang, H., Liu, J.: Sketch-based image retrieval via Siamese convolutional neural network. In: 2016 IEEE International Conference on Image Processing, ICIP 2016, Phoenix, AZ, USA, 25–28 September 2016, pp. 2460–2464 (2016)

Qin, J., et al.: Binary coding for partial action analysis with limited observation ratios. In: Proceedings of CVPR, pp. 146–155 (2017)

Saavedra, J.M.: Sketch based image retrieval using a soft computation of the histogram of edge local orientations (S-HELO). In: 2014 IEEE International Conference on Image Processing, ICIP 2014, Paris, France, 27–30 October 2014, pp. 2998–3002 (2014)

Saavedra, J.M., Barrios, J.M.: Sketch based image retrieval using learned keyshapes (LKS). In: Proceedings of the British Machine Vision Conference 2015, BMVC 2015, Swansea, UK, 7–10 September 2015, pp. 164.1–164.11 (2015)

Saavedra, J.M., Bustos, B.: An improved histogram of edge local orientations for sketch-based image retrieval. In: Goesele, M., Roth, S., Kuijper, A., Schiele, B., Schindler, K. (eds.) DAGM 2010. LNCS, vol. 6376, pp. 432–441. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15986-2_44

Sangkloy, P., Burnell, N., Ham, C., Hays, J.: The sketchy database: learning to retrieve badly drawn bunnies. ACM Trans. Graph. 35(4), 119:1–119:12 (2016)

Sangkloy, P., Lu, J., Fang, C., Yu, F., Hays, J.: Scribbler: controlling deep image synthesis with sketch and color. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 2 (2017)

Shen, F., Gao, X., Liu, L., Yang, Y., Shen, H.T.: Deep asymmetric pairwise hashing. In: Proceedings of the 2017 ACM on Multimedia Conference, MM 2017, Mountain View, CA, USA, 23–27 October 2017, pp. 1522–1530 (2017)

Shen, F., Liu, W., Zhang, S., Yang, Y., Shen, H.T.: Learning binary codes for maximum inner product search. In: The IEEE International Conference on Computer Vision (ICCV), pp. 4148–4156, December 2015

Shen, F., Shen, C., Liu, W., Shen, H.T.: Supervised discrete hashing. In: Proceedings of CVPR, pp. 37–45 (2015)

Shen, F., Xu, Y., Liu, L., Yang, Y., Huang, Z., Shen, H.T.: Unsupervised deep hashing with similarity-adaptive and discrete optimization. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) (2018)

Shen, F., Yang, Y., Liu, L., Liu, W., Dacheng Tao, H.T.S.: Asymmetric binary coding for image search. IEEE TMM 19(9), 2022–2032 (2017)

Song, J., Qian, Y., Song, Y.Z., Xiang, T., Hospedales, T.: Deep spatial-semantic attention for fine-grained sketch-based image retrieval. In: ICCV (2017)

Song, J., Yu, Q., Song, Y., Xiang, T., Hospedales, T.M.: Deep spatial-semantic attention for fine-grained sketch-based image retrieval. In: IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017, pp. 5552–5561 (2017)

Vía, J., Santamaría, I., Pérez, J.: Canonical correlation analysis (CCA) algorithms for multiple data sets: application to blind SIMO equalization. In: 13th European Signal Processing Conference, EUSIPCO 2005, Antalya, Turkey, 4–8 September 2005, pp. 1–4 (2005)

Wang, F., Kang, L., Li, Y.: Sketch-based 3D shape retrieval using convolutional neural networks. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston, MA, USA, 7–12 June 2015, pp. 1875–1883 (2015)

Weiss, Y., Torralba, A., Fergus, R.: Spectral hashing. In: Proceedings of NIPS, pp. 1753–1760 (2008)

Xie, W., Peng, Y., Xiao, J.: Cross-view feature learning for scalable social image analysis. In: Proceedings of the Twenty-Eighth AAAI Conference on Artificial Intelligence, Québec City, Québec, Canada, 27–31 July 2014, pp. 201–207 (2014)

Xu, P., et al.: Cross-modal subspace learning for fine-grained sketch-based image retrieval. Neurocomputing 278, 75–86 (2018)

Xu, P., et al.: Instance-level coupled subspace learning for fine-grained sketch-based image retrieval. In: Hua, G., Jégou, H. (eds.) ECCV 2016, Part I. LNCS, vol. 9913, pp. 19–34. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46604-0_2

Yu, Q., Liu, F., Song, Y., Xiang, T., Hospedales, T.M., Loy, C.C.: Sketch me that shoe. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016, pp. 799–807 (2016)

Yu, Q., Yang, Y., Liu, F., Song, Y., Xiang, T., Hospedales, T.M.: Sketch-a-Net: a deep neural network that beats humans. Int. J. Comput. Vis. 122(3), 411–425 (2017)

Zhang, D., Li, W.: Large-scale supervised multimodal hashing with semantic correlation maximization. In: Proceedings of the Twenty-Eighth AAAI Conference on Artificial Intelligence, Québec City, Québec, Canada, 27–31 July 2014, pp. 2177–2183 (2014)

Zhang, H., Cissé, M., Dauphin, Y.N., Lopez-Paz, D.: mixup: beyond empirical risk minimization. CoRR abs/1710.09412 (2017)

Zhang, H., Liu, S., Zhang, C., Ren, W., Wang, R., Cao, X.: SketchNet: sketch classification with web images. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016, pp. 1105–1113 (2016)

Zhang, X., et al.: HashGAN: attention-aware deep adversarial hashing for cross modal retrieval. CoRR abs/1711.09347 (2017)

Zhang, Z., Chen, Y., Saligrama, V.: Efficient training of very deep neural networks for supervised hashing. In: Proceedings of CVPR, pp. 1487–1495 (2016)

Zhu, J., Park, T., Isola, P., Efros, A.A.: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017, pp. 2242–2251 (2017)

Acknowledgment

This work was supported in part by the National Natural Science Foundation of China under Project 61502081 and Project 61632007, the Fundamental Research Funds for the Central Universities under Project ZYGX2014Z007.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, J. et al. (2018). Generative Domain-Migration Hashing for Sketch-to-Image Retrieval. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science(), vol 11206. Springer, Cham. https://doi.org/10.1007/978-3-030-01216-8_19

Download citation

DOI: https://doi.org/10.1007/978-3-030-01216-8_19

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01215-1

Online ISBN: 978-3-030-01216-8

eBook Packages: Computer ScienceComputer Science (R0)