Abstract

We present an unsupervised learning framework for simultaneously training single-view depth prediction and optical flow estimation models using unlabeled video sequences. Existing unsupervised methods often exploit brightness constancy and spatial smoothness priors to train depth or flow models. In this paper, we propose to leverage geometric consistency as additional supervisory signals. Our core idea is that for rigid regions we can use the predicted scene depth and camera motion to synthesize 2D optical flow by backprojecting the induced 3D scene flow. The discrepancy between the rigid flow (from depth prediction and camera motion) and the estimated flow (from optical flow model) allows us to impose a cross-task consistency loss. While all the networks are jointly optimized during training, they can be applied independently at test time. Extensive experiments demonstrate that our depth and flow models compare favorably with state-of-the-art unsupervised methods.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Single-view depth prediction and optical flow estimation are two fundamental problems in computer vision. While the two tasks aim to recover highly correlated information from the scene (i.e., the scene structure and the dense motion field between consecutive frames), existing efforts typically study each problem in isolation. In this paper, we demonstrate the benefits of exploring the geometric relationship between depth, camera motion, and flow for unsupervised learning of depth and flow estimation models.

With the rapid development of deep convolutional neural networks (CNNs), numerous approaches have been proposed to tackle dense prediction problems in an end-to-end manner. However, supervised training CNN for such tasks often involves in constructing large-scale, diverse datasets with dense pixelwise ground truth labels. Collecting such densely labeled datasets in real-world requires significant amounts of human efforts and is prone to error. Existing efforts of RGB-D dataset construction [18, 45, 53, 54] often have limited scope (e.g., in terms of locations, scenes, and objects), and hence are lack of diversity. For optical flow, dense motion annotations are even more difficult to acquire [37]. Consequently, existing CNN-based methods rely on synthetic datasets for training the models [5, 12, 16, 24]. These synthetic datasets, however, do not capture the complexity of motion blur, occlusion, and natural image statistics from real scenes. The trained models usually do not generalize well to unseen scenes without fine-tuning on sufficient ground truth data in a new visual domain.

Joint learning v.s. separate learning. Single-view depth prediction and optical flow estimation are two highly correlated tasks. Existing work, however, often addresses these two tasks in isolation. In this paper, we propose a novel cross-task consistency loss to couple the training of these two problems using unlabeled monocular videos. Through enforcing the underlying geometric constraints, we show substantially improved results for both tasks.

Several work [17, 21, 28] have been proposed to capitalize on large-scale real-world videos to train the CNNs in the unsupervised setting. The main idea lies to exploit the brightness constancy and spatial smoothness assumptions of flow fields or disparity maps as supervisory signals. These assumptions, however, often do not hold at motion boundaries and hence makes the training unstable.

Many recent efforts [59, 60, 65, 73] explore the geometric relationship between the two problems. With the estimated depth and camera pose, these methods can produce dense optical flow by backprojecting the 3D scene flow induced from camera ego-motion. However, these methods implicitly assume perfect depth and camera pose estimation when “synthesizing” the optical flow. The errors in either depth or camera pose estimation inevitably produce inaccurate flow predictions.

In this paper, we present a technique for jointly learning a single-view depth estimation model and a flow prediction model using unlabeled videos as shown in Fig. 2. Our key observation is that the predictions from depth, pose, and optical flow should be consistent with each other. By exploiting this geometry cue, we present a novel cross-task consistency loss that provides additional supervisory signals for training both networks. We validate the effectiveness of the proposed approach through extensive experiments on several benchmark datasets. Experimental results show that our joint training method significantly improves the performance of both models (Fig. 1). The proposed depth and flow models compare favorably with state-of-the-art unsupervised methods.

We make the following contributions. (1) We propose an unsupervised learning framework to simultaneously train a depth prediction network and an optical flow network. We achieve this by introducing a cross-task consistency loss that enforces geometric consistency. (2) We show that through the proposed unsupervised training our depth and flow models compare favorably with existing unsupervised algorithms and achieve competitive performance with supervised methods on several benchmark datasets. (3) We release the source code and pre-trained models to facilitate future research: http://yuliang.vision/DF-Net/.

Supervised v.s. unsupervised learning. Supervised learning of depth or flow networks requires large amount of training data with pixelwise ground truth annotations, which are difficult to acquire in real scenes. In contrast, our work leverages the readily available unlabeled video sequences to jointly train the depth and flow models.

2 Related Work

Supervised Learning of Depth and Flow. Supervised learning using CNNs has emerged to be an effective approach for depth and flow estimation to avoid hand-crafted objective functions and computationally expensive optimization at test time. The availability of RGB-D datasets and deep learning leads to a line of work on single-view depth estimation [13, 14, 35, 38, 62, 72]. While promising results have been shown, these methods rely on the absolute ground truth depth maps. These depth maps, however, are expensive and difficult to collect. Some efforts [8, 74] have been made to relax the difficulty of collecting absolute depth by exploring learning from relative/ordinal depth annotations. Recent work also explores gathering training datasets from web videos [7] or Internet photos [36] using structure-from-motion and multi-view stereo algorithms.

Compared to ground truth depth datasets, constructing optical flow datasets of diverse scenes in real-world is even more challenging. Consequently, existing approaches [12, 26, 47] typically rely on synthetic datasets [5, 12] for training. Due to the limited scalability of constructing diverse, high-quality training data, fully supervised approaches often require fine-tuning on sufficient ground truth labels in new visual domains to perform well. In contrast, our approach leverages the readily available real-world videos to jointly train the depth and flow models. The ability to learn from unlabeled data enables unsupervised pre-training for domains with limited amounts of ground truth data.

Self-supervised Learning of Depth and Flow. To alleviate the dependency on large-scale annotated datasets, several works have been proposed to exploit the classical assumptions of brightness constancy and spatial smoothness on the disparity map or the flow field [17, 21, 28, 43, 71]. The core idea is to treat the estimated depth and flow as latent layers and use them to differentiably warp the source frame to the target frame, where the source and target frames can either be the stereo pair or two consecutive frames in a video sequence. A photometric loss between the synthesized frame and the target frame can then serve as an unsupervised proxy loss to train the network. Using photometric loss alone, however, is not sufficient due to the ambiguity on textureless regions and occlusion boundaries. Hence, the network training is often unstable and requires careful hyper-parameter tuning of the loss functions. Our approach builds upon existing unsupervised losses for training our depth and flow networks. We show that the proposed cross-task consistency loss provides a sizable performance boost over individually trained models.

Methods Exploiting Geometry Cues. Recently, a number of work exploits the geometric relationship between depth, camera pose, and flow for learning depth or flow models [60, 65, 68, 73]. These methods first estimate the depth of the input images. Together with the estimated camera poses between two consecutive frames, these methods “synthesize” the flow field of rigid regions. The synthesized flow from depth and pose can either be used for flow prediction in rigid regions [48, 60, 65, 68] as is or used for view synthesis to train depth model using monocular videos [73]. Additional cues such as surface normal [67], edge [66], physical constraints [59] can be incorporated to further improve the performance.

These approaches exploit the inherent geometric relationship between structure and motion. However, the errors produced by either the depth or the camera pose estimation propagate to flow predictions. Our key insight is that for rigid regions the estimated flow (from flow prediction network) and the synthesized rigid flow (from depth and camera pose networks) should be consistent. Consequently, coupled training allows both depth and flow networks to learn from each other and enforce geometrically consistent predictions of the scene.

Structure from Motion. Joint estimation of structure and camera pose from multiple images of a given scene is a long-standing problem [15, 46, 64]. Conventional methods can recover (semi-)dense depth estimation and camera pose through keypoint tracking/matching. The outputs of these algorithms can potentially be used to help train a flow network, but not the other way around. Our work differs as we are also interested in learning a depth network to recover dense structure from a single input image.

Multi-task Learning. Simultaneously addressing multiple tasks through multi-task learning [52] has shown advantages over methods that tackle individual ones [70]. For examples, joint learning of video segmentation and optical flow through layered models [6, 56] or feature sharing [9] helps improve accuracy at motion boundaries. Single-view depth model learning can also benefit from joint training with surface normal estimation [35, 67] or semantic segmentation [13, 30].

Our approach tackles the problems of learning both depth and flow models. Unlike existing multi-task learning methods that often require direct supervision using ground truth training data for each task, our approach instead leverage meta-supervision to couple the training of depth and flow models. While our models are jointly trained, they can be applied independently at test time.

Overview of our unsupervised joint learning framework. Our framework consists of three major modules: (1) a Depth Net for single-view depth estimation; (2) a Pose Net that takes two stacked input frames and estimates the relative camera pose between the two input frames; and (3) a Flow Net that estimates dense optical flow field between the two input frames. Given a pair of input images \(\mathbf {I}_t\) and \(\mathbf {I}_{t+1}\) sampled from an unlabeled video, we first estimate the depth of each frame, the 6D camera pose, and the dense forward and backward flows. Using the predicted scene depth and the estimated camera pose, we can synthesize 2D forward and backward optical flows (referred as rigid flow) by backprojecting the induced 3D forward and backward scene flows (Sect. 3.2). As we do not have ground truth depth and flow maps for supervision, we leverage standard photometric and spatial smoothness costs to regularize the network training (Sect. 3.3, not shown in this figure for clarity). To enforce the consistency of flow and depth prediction in both directions, we exploit the forward-backward consistency (Sect. 3.4), and adopt the valid masks derived from it to filter out invalid regions (e.g., occlusion/dis-occlusion) for the photometric loss. Finally, we propose a novel cross-network consistency loss (Sect. 3.5)—encouraging the optical flow estimation (from the Flow Net) and the rigid flow (from the Depth and Pose Net) to be consistent to each other within in valid regions.

3 Unsupervised Joint Learning of Depth and Flow

3.1 Method Overview

Our goal is to develop an unsupervised learning framework for jointly training the single-view depth estimation network and the optical flow prediction network using unlabeled video sequences. Figure 3 shows the high-level sketch of our proposed approach. Given two consecutive frames \((I_t, I_{t+1})\) sampled from an unlabeled video, we first estimate depth of frame \(I_t\) and \(I_{t+1}\), and forward-backward optical flow fields between frame \(I_t\) and \(I_{t+1}\). We then estimate the 6D camera pose transformation between the two frames \((I_t, I_{t+1})\).

With the predicted depth map and the estimated 6D camera pose, we can produce the 3D scene flow induced from camera ego-motion and backproject them onto the image plane to synthesize the 2D flow (Sect. 3.2). We refer this synthesized flow as rigid flow. Suppose the scenes are mostly static, the synthesized rigid flow should be consistent with the results from the estimated optical flow (produced by the optical flow prediction model). However, the prediction results from the two branches may not be consistent with each other. Our intuition is that the discrepancy between the rigid flow and the estimated flow provides additional supervisory signals for both networks. Hence, we propose a cross-task consistency loss to enforce this constraint (Sect. 3.5). To handle non-rigid transformations that cannot be explained by the camera motion and occlusion-disocclusion regions, we exploit the forward-backward consistency check to identify valid regions (Sect. 3.4). We avoid enforcing the cross-task consistency for those forward-backward inconsistent regions.

Our overall objective function can be formulated as follows:

All of the four loss terms are applied to both depth and flow networks. Also, all of the four loss terms are symmetric for forward and backward directions, for simplicity we only derive them for the forward direction.

3.2 Flow Synthesis Using Depth and Pose Predictions

Given the two input frames \(I_t\) and \(I_{t+1}\), the predicted depth map \(\hat{D}_t\), and relative camera pose \(\hat{T}_{t\rightarrow t+1}\), here we wish to establish the dense pixel correspondence between the two frames. Let \(p_t\) denotes the 2D homogeneous coordinate of an pixel in frame \(I_t\) and K denotes the intrinsic camera matrix. We can compute the corresponding point of \(p_t\) in frame \(I_{t+1}\) using the equation [73]:

We can then obtain the synthesized forward rigid flow at pixel \(p_t\) in \(I_t\) by

3.3 Brightness Constancy and Spatial Smoothness Priors

Here we briefly review two loss functions that we used in our framework to regularize network training. Leveraging the brightness constancy and spatial smoothness priors used in classical dense correspondence algorithms [4, 23, 40], prior work has used the photometric discrepancy between the warped frame and the target frame as an unsupervised proxy loss function for training CNNs without ground truth annotations.

Photometric Loss. Suppose that we have frame \(I_t\) and \(I_{t+1}\), as well as the estimated flow \(F_{t\rightarrow t+1}\) (either from the optical flow predicted from the flow model or the synthesized rigid flow induced from the estimated depth and camera pose), we can produce the warped frame \(\bar{I}_t\) with the inverse warping from frame \(I_{t+1}\). Note that the projected image coordinates \(p_{t+1}\) might not lie exactly on the image pixel grid, we thus apply a differentiable bilinear interpolation strategy used in the spatial transformer networks [27] to perform frame synthesis.

With the warped frame \(\bar{I}_t\) from \(I_{t+1}\), we formulate the brightness constancy objective function as

where \(\rho (\cdot )\) is a function to measure the difference between pixel values. Previous work simply choose \(L_1\) norm or the appearance matching loss [21], which is not invariant to illumination changes in real-world scenarios [61]. Here we adopt the ternary census transform based loss [43, 55, 69] that can better handle complex illumination changes.

Smoothness Loss. The brightness constancy loss is not informative in low-texture or homogeneous region of the scene. To handle this issue, existing work incorporates a smoothness prior to regularize the estimated disparity map or flow field. We adopt the spatial smoothness loss as proposed in [21].

3.4 Forward-Backward Consistency

According to the brightness constancy assumption, the warped frame should be similar to the target frame. However, the assumption does not hold for occluded and dis-occluded regions. We address this problem by using the commonly used forward-backward consistency check technique to identify invalid regions and do not impose the photometric loss on those regions.

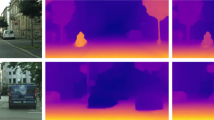

Valid Masks. We implement the occlusion detection based on forward-backward consistency assumption [58] (i.e., traversing flow vector forward and then backward should arrive at the same position). Here we use a simple criterion proposed in [43]. We mark pixels as invalid whenever this constraint is violated. Figure 4 shows two examples of the marked invalid regions by forward-backward consistency check using the synthesized rigid flow (animations can be viewed in Adobe Reader).

Denote the valid region by V (either from rigid flow or estimated flow), we can modify the photometric loss term (4) as

Forward-Backward Consistency Loss. In addition to using forward-backward consistency check for identifying invalid regions, we can further impose constraints on the valid regions so that the network can produce consistent predictions for both forward and backward directions. Similar ideas have been exploited in [25, 43] for occlusion-aware flow estimation. Here, we apply the forward-backward consistency loss to both flow and depth predictions.

For flow prediction, the forward-backward consistency loss is of the form:

Similarly, we impose a consistency penalty for depth:

where \(\bar{D}_t\) is warped from \(D_{t+1}\) using the synthesized rigid flow from t to \(t+1\).

Valid mask visualization. We estimate the invalid mask by checking the forward-backward consistency from the synthesized rigid flow, which can not only detect occluded regions, but also identify the moving objects (cars) as they cannot be explained by the estimated depth and pose.  (See supplementary material)

(See supplementary material)

While we exploit robust functions for enforcing photometric loss, forward-backward consistency for each of the tasks, the training of depth and flow networks using unlabeled data remains non-trivial and sensitive to the choice of hyper-parameters [33]. Building upon the existing loss functions, in the following we introduce a novel cross-task consistency loss to further regularize the network training.

3.5 Cross-Task Consistency

In Sect. 3.2, we show that the motion of rigid regions in the scene can be explained by the ego-motion of the camera and the corresponding scene depth. On the one hand, we can estimate the rigid flow by backprojecting the induced 3D scene flow from the estimated depth and relative camera pose. On the other hand, we have direct estimation results from an optical flow network. Our core idea is the that these two flow fields should be consistent with each other for non-occluded and static regions. Minimizing the discrepancy between the two flow fields allows us to simultaneously update the depth and flow models.

We thus propose to minimize the endpoint distance between the flow vectors in the rigid flow (computed from the estimated depth and pose) and that in the estimated flow (computed from the flow prediction model). We denote the synthesized rigid flow as \(F_\text {rigid}=(u_\text {rigid},v_\text {rigid})\) and the estimated flow as \(F_\text {flow}=(u_\text {flow},v_\text {flow})\). Using the computed valid masks (Sect. 3.4), we impose the cross-task consistency constraints over valid pixels.

4 Experimental Results

In this section, we validate the effectiveness of our proposed method for unsupervised learning of depth and flow on several standard benchmark datasets. More results can be found in the supplementary material. Our source code and pre-trained models are available on http://yuliang.vision/DF-Net/.

4.1 Datasets

Datasets for Joint Network Training. We use video clips from the train split of KITTI raw dataset [18] for joint learning of depth and flow models. Note that our training does not involve any depth/flow labels.

Datasets for Pre-training. To avoid the joint training process converging to trivial solutions, we (unsupervisedly) pre-train the flow network on the SYNTHIA dataset [51]. For pre-training both depth and pose networks, we use either KITTI raw dataset or the CityScapes dataset [11].

The SYNTHIA dataset [51] contains multi-view frames captured by driving vehicles in different scenarios and traffic conditions. We take all the four-view images of the left camera from all summer and winter driving sequences, which contains around 37K image pairs. The CityScapes dataset [11] contains real-world driving sequences, we follow Zhou et al. [73] and pre-process the dataset to generate around 75K training image pairs.

Datasets for Evaluation. For evaluating the performance of our depth network, we use the test split of the KITTI raw dataset. The depth maps for KITTI raw are sampled at irregularly spaced positions, captured using a rotating LIDAR scanner. Following the standard evaluation protocol, we evaluate the performance using only the regions with ground truth depth samples (bottom parts of the images). We also evaluate the generalization of our depth network on general scenes using the Make3D dataset [53].

For evaluating our flow network, we use the challenging KITTI flow 2012 [19] and KITTI flow 2015 [44] datasets. The ground truth optical flow is obtained from a 3D laser scanner and thus only covers about 50% of the pixels.

4.2 Implementation Details

We implement our approach in TensorFlow [1] and conduct all the experiments on a single Tesla K80 GPU with 12 GB memory. We set \(\lambda _s = 3.0\), \(\lambda _f=0.2\), and \(\lambda _c=0.2\). For network training, we use the Adam optimizer [31] with \(\beta _1=0.9\), \(\beta _2=0.99\). In the following, we provide more implementation details in network architecture, network pre-training, and the proposed unsupervised joint training.

Network Architecture. For the pose network, we adopt the architecture from Zhou et al. [73]. For the depth network, we use the ResNet-50 [22] as our feature backbone with ELU [10] activation functions. For the flow network, we adopt the UnFlow-C structure [43]—a variant of FlowNetC [12]. As our network training is model-agnostic, more advanced network architectures (e.g., pose [20], depth [36], or flow [57]) can be used for further improving the performance.

Unsupervised Depth Pre-training. We train the depth and pose networks with a mini-batch size of 6 image pairs whose size is \(576 \times 160\), from KITTI raw dataset or CityScapes dataset for 100K iterations. We use a learning rate is 2e-4. Each iteration takes around 0.8s (forward and backprop) during training.

Unsupervised Flow Pre-training. Following Meister et al. [43], we train the flow network with a mini-batch size of 4 image pairs whose size is \(1152\times 320\) from SYNTHIA dataset for 300K iterations. We keep the initial learning rate as 1e-4 for the first 100K iterations and then reduce the learning rate by half after each 100K iterations. Each iteration takes around 2.4 s (forward and backprop).

Unsupervised Joint Training. We jointly train the depth, pose, and flow networks with a mini-batch size of 4 image pairs from KITTI raw dataset for 100K iterations. Input size for the depth and pose networks is \(576\times 160\), while the input size for the flow network is \(1152\times 320\). We divide the initial learning rate by 2 for every 20K iterations. Our depth network produces depth predictions at 4 spatial scales, while the flow network produces flow fields at 5 scales. We enforce the cross-network consistency in the finest 4 scales. Each iteration takes around 3.6 s (forward and backprop) during training.

Image Resolution of Network Inputs/Outputs. As the input size of the UnFlow-C network [43] must be divisible by 64, we resize input image pairs of the two KITTI flow datasets to \(1280 \times 384\) using bilinear interpolation. We then resize the estimated optical flow and rescale the predicted flow vectors to match the original input size. For depth estimation, we resize the input image to the same size of training input to predict the disparity first. We then resize and rescale the predicted disparity to the original size and compute the inverse the obtain the final prediction.

denotes stereo input pairs,

denotes stereo input pairs,  denotes monocular video clips. The best and the second best performance in each block are highlighted as bold and underline.

denotes monocular video clips. The best and the second best performance in each block are highlighted as bold and underline.4.3 Evaluation Metrics

Following Zhou et al. [73], we evaluate our depth network using several error metrics (absolute relative difference, square related difference, RMSE, log RMSE). For optical flow estimation, we compute the average endpoint error (EPE) on pixels with the ground truth flow available for each dataset. On KITTI flow 2015 dataset [44], we also compute the F1 score, which is the percentage of pixels that have EPE greater than 3 pixels and 5% of the ground truth value.

4.4 Experimental Evaluation

Single-View Depth Estimation. We compare our depth network with state-of-the-art algorithms on the test split of the KITTI raw dataset provided by Eigen et al. [14]. As shown in Table 1, our method achieves the state-of-the-art performance when compared with models trained with monocular video sequences. However, our method performs slightly worse than the models that exploit calibrated stereo image pairs (i.e., pose supervision) or with additional ground truth depth annotation. We believe that performance gap can be attributed to the error induced by our pose network. Extending our approach to calibrated stereo videos is an interesting future direction.

We also conduct an ablation study by removing the forward-backward consistency loss or cross-task consistency loss. In both cases our results show significant performance of degradation, highlighting the importance the proposed consistency loss. Figure 5 shows qualitative comparison with [14, 73], our method can better capture thin structure and delineate clear object contour.

To evaluate the generalization ability of our depth network on general scenes, we also apply our trained model to the Make3D dataset [53]. Table 2 shows that our method achieves the state-of-the-art performance compared with existing unsupervised models and is competitive with respect to supervised learning models (even without fine-tuning on Make3D datasets).

indicates that the model is trained with ground truth annotation, while

indicates that the model is trained with ground truth annotation, while  indicates the model is trained in an unsupervised manner. The best and the second best performance in each block are highlighted as bold and underline.

indicates the model is trained in an unsupervised manner. The best and the second best performance in each block are highlighted as bold and underline.Optical Flow Estimation. We compare our flow network with conventional variational algorithms, supervised CNN methods, and several unsupervised CNN models on the KITTI flow 2012 and 2015 datasets. As shown in Table 3, our method achieves state-of-the-art performance on both datasets. A visual comparison can be found in Fig. 6. With optional fine-tuning on available ground truth labels on the KITTI flow datasets, we show that our approach achieves competitive performance sharing similar network architectures. This suggests that our method can serve as an unsupervised pre-training technique for learning optical flow in domains where the amounts of ground truth data are scarce.

Pose Estimation. For completeness, we provide the performance evaluation of the pose network. We follow the same evaluation protocol as [73] and use a 5-frame based pose network. As shown in Table 4, our pose network shows competitive performance with respect to state-of-the-art visual SLAM methods or other unsupervised learning methods. We believe that a better pose network would further improve the performance of both depth or optical flow estimation.

5 Conclusions

We presented an unsupervised learning framework for both sing-view depth prediction and optical flow estimation using unlabeled video sequences. Our key technical contribution lies in the proposed cross-task consistency that couples the network training. At test time, the trained depth and flow models can be applied independently. We validate the benefits of joint training through extensive experiments on benchmark datasets. Our single-view depth prediction model compares favorably against existing unsupervised models using unstructured videos on both KITTI and Make3D datasets. Our flow estimation model achieves competitive performance with state-of-the-art approaches. By leveraging geometric constraints, our work suggests a promising future direction of advancing the state-of-the-art in multiple dense prediction tasks using unlabeled data.

References

Abadi, M., et al.: TensorFlow: large-scale machine learning on heterogeneous distributed systems. arXiv preprint arXiv:1603.04467 (2016)

Bailer, C., Taetz, B., Stricker, D.: Flow fields: dense correspondence fields for highly accurate large displacement optical flow estimation. In: ICCV (2015)

Brox, T., Bregler, C., Malik, J.: Large displacement optical flow. In: CVPR (2009)

Bruhn, A., Weickert, J., Schnörr, C.: Lucas/Kanade meets Horn/Schunck: combining local and global optic flow methods. IJCV 61(3), 211–231 (2005)

Butler, D.J., Wulff, J., Stanley, G.B., Black, M.J.: A naturalistic open source movie for optical flow evaluation. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7577, pp. 611–625. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33783-3_44

Chang, J., Fisher, J.W.: Topology-constrained layered tracking with latent flow. In: ICCV (2013)

Chen, W., Deng, J.: Learning single-image depth from videos using quality assessment networks. In: ECCV (2018)

Chen, W., Fu, Z., Yang, D., Deng, J.: Single-image depth perception in the wild. In: NIPS (2016)

Cheng, J., Tsai, Y.H., Wang, S., Yang, M.H.: SegFlow: joint learning for video object segmentation and optical flow. In: ICCV (2017)

Clevert, D.A., Unterthiner, T., Hochreiter, S.: Fast and accurate deep network learning by exponential linear units (ELUs). In: ICLR (2016)

Cordts, M., et al.: The cityscapes dataset for semantic urban scene understanding. In: CVPR (2016)

Dosovitskiy, A., et al.: FlowNet: learning optical flow with convolutional networks. In: ICCV (2015)

Eigen, D., Fergus, R.: Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In: ICCV (2015)

Eigen, D., Puhrsch, C., Fergus, R.: Depth map prediction from a single image using a multi-scale deep network. In: NIPS (2014)

Furukawa, Y., Curless, B., Seitz, S.M., Szeliski, R.: Towards internet-scale multi-view stereo. In: CVPR (2010)

Gaidon, A., Wang, Q., Cabon, Y., Vig, E.: Virtual worlds as proxy for multi-object tracking analysis. In: CVPR (2016)

Garg, R., Carneiro, G., Reid, I.: Unsupervised CNN for single view depth estimation: geometry to the rescue. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9912, pp. 740–756. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46484-8_45

Geiger, A., Lenz, P., Stiller, C., Urtasun, R.: Vision meets robotics: the KITTI dataset. IJRR 32(11), 1231–1237 (2013)

Geiger, A., Lenz, P., Urtasun, R.: Are we ready for autonomous driving? The KITTI vision benchmark suite. In: CVPR (2012)

Godard, C., Mac Aodha, O., Brostow, G.: Digging into self-supervised monocular depth estimation. arXiv preprint arXiv:1806.01260 (2018)

Godard, C., Mac Aodha, O., Brostow, G.J.: Unsupervised monocular depth estimation with left-right consistency. In: CVPR (2017)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR (2016)

Horn, B.K., Schunck, B.G.: Determining optical flow. Artif. Intell. 17(1–3), 185–203 (1981)

Huang, P.H., Matzen, K., Kopf, J., Ahuja, N., Huang, J.B.: DeepMVS: learning multi-view stereopsis. In: CVPR (2018)

Hur, J., Roth, S.: MirrorFlow: exploiting symmetries in joint optical flow and occlusion estimation. In: ICCV (2017)

Ilg, E., Mayer, N., Saikia, T., Keuper, M., Dosovitskiy, A., Brox, T.: Flownet 2.0: evolution of optical flow estimation with deep networks. In: CVPR (2017)

Jaderberg, M., Simonyan, K., Zisserman, A., Kavukcuoglu, K.: Spatial transformer networks. In: NIPS (2015)

Yu, J.J., Harley, A.W., Derpanis, K.G.: Back to basics: unsupervised learning of optical flow via brightness constancy and motion smoothness. In: Hua, G., Jégou, H. (eds.) ECCV 2016. LNCS, vol. 9915, pp. 3–10. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-49409-8_1

Karsch, K., Liu, C., Kang, S.B.: Depth transfer: depth extraction from video using non-parametric sampling. TPAMI 36(11), 2144–2158 (2014)

Kendall, A., Gal, Y., Cipolla, R.: Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In: NIPS (2017)

Kingma, D., Ba, J.: Adam: a method for stochastic optimization. In: ICLR (2014)

Kuznietsov, Y., Stückler, J., Leibe, B.: Semi-supervised deep learning for monocular depth map prediction. In: CVPR (2017)

Lai, W.S., Huang, J.B., Yang, M.H.: Semi-supervised learning for optical flow with generative adversarial networks. In: NIPS (2017)

Laina, I., Rupprecht, C., Belagiannis, V., Tombari, F., Navab, N.: Deeper depth prediction with fully convolutional residual networks. In: 3DV (2016)

Li, B., Shen, C., Dai, Y., van den Hengel, A., He, M.: Depth and surface normal estimation from monocular images using regression on deep features and hierarchical CRFs. In: CVPR (2015)

Li, Z., Snavely, N.: MegaDepth: learning single-view depth prediction from internet photos. In: CVPR (2018)

Liu, C., Freeman, W.T., Adelson, E.H., Weiss, Y.: Human-assisted motion annotation. In: CVPR (2008)

Liu, F., Shen, C., Lin, G.: Deep convolutional neural fields for depth estimation from a single image. In: CVPR (2015)

Liu, M., Salzmann, M., He, X.: Discrete-continuous depth estimation from a single image. In: CVPR (2014)

Lucas, B.D., Kanade, T., et al.: An iterative image registration technique with an application to stereo vision. In: IJCAI (1981)

Mahjourian, R., Wicke, M., Angelova, A.: Unsupervised learning of depth and ego-motion from monocular video using 3D geometric constraints. In: CVPR (2018)

Mayer, N., et al.: A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In: CVPR (2016)

Meister, S., Hur, J., Roth, S.: UnFlow: unsupervised learning of optical flow with a bidirectional census loss. In: AAAI (2018)

Menze, M., Geiger, A.: Object scene flow for autonomous vehicles. In: CVPR (2015)

Silberman, N., Hoiem, D., Kohli, P., Fergus, R.: Indoor segmentation and support inference from RGBD images. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7576, pp. 746–760. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33715-4_54

Newcombe, R.A., Lovegrove, S.J., Davison, A.J.: DTAM: Dense tracking and mapping in real-time. In: ICCV (2011)

Ranjan, A., Black, M.J.: Optical flow estimation using a spatial pyramid network. In: CVPR (2017)

Ranjan, A., Jampani, V., Kim, K., Sun, D., Wulff, J., Black, M.J.: Adversarial Collaboration: Joint unsupervised learning of depth, camera motion, optical flow and motion segmentation. arXiv preprint arXiv:1805.09806 (2018)

Ren, Z., Yan, J., Ni, B., Liu, B., Yang, X., Zha, H.: Unsupervised deep learning for optical flow estimation. In: AAAI (2017)

Revaud, J., Weinzaepfel, P., Harchaoui, Z., Schmid, C.: EpicFlow: edge-preserving interpolation of correspondences for optical flow. In: CVPR (2015)

Ros, G., Sellart, L., Materzynska, J., Vazquez, D., Lopez, A.M.: The synthia dataset: a large collection of synthetic images for semantic segmentation of urban scenes. In: CVPR (2016)

Ruder, S.: An overview of multi-task learning in deep neural networks. arXiv preprint arXiv:1706.05098 (2017)

Saxena, A., Chung, S.H., Ng, A.Y.: Learning depth from single monocular images. In: NIPS (2006)

Saxena, A., Chung, S.H., Ng, A.Y.: 3-D depth reconstruction from a single still image. IJCV 76(1), 53–69 (2008)

Stein, F.: Efficient computation of optical flow using the census transform. In: Rasmussen, C.E., Bülthoff, H.H., Schölkopf, B., Giese, M.A. (eds.) DAGM 2004. LNCS, vol. 3175, pp. 79–86. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-28649-3_10

Sun, D., Wulff, J., Sudderth, E.B., Pfister, H., Black, M.J.: A fully-connected layered model of foreground and background flow. In: CVPR (2013)

Sun, D., Yang, X., Liu, M.Y., Kautz, J.: PWC-net: CNNs for optical flow using pyramid, warping, and cost volume. In: CVPR (2018)

Sundaram, N., Brox, T., Keutzer, K.: Dense point trajectories by GPU-accelerated large displacement optical flow. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6311, pp. 438–451. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15549-9_32

Tung, H.Y.F., Harley, A., Seto, W., Fragkiadaki, K.: Adversarial inversion: inverse graphics with adversarial priors. In: ICCV (2017)

Vijayanarasimhan, S., Ricco, S., Schmid, C., Sukthankar, R., Fragkiadaki, K.: SFM-net: learning of structure and motion from video. arXiv preprint arXiv:1704.07804 (2017)

Vogel, C., Roth, S., Schindler, K.: An evaluation of data costs for optical flow. In: Weickert, J., Hein, M., Schiele, B. (eds.) GCPR 2013. LNCS, vol. 8142, pp. 343–353. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-40602-7_37

Wang, P., Shen, X., Lin, Z., Cohen, S., Price, B., Yuille, A.L.: Towards unified depth and semantic prediction from a single image. In: CVPR (2015)

Weinzaepfel, P., Revaud, J., Harchaoui, Z., Schmid, C.: DeepFlow: large displacement optical flow with deep matching. In: ICCV (2013)

Wu, C.: VisualSFM: a visual structure from motion system (2011)

Wulff, J., Sevilla-Lara, L., Black, M.J.: Optical flow in mostly rigid scenes. In: CVPR (2017)

Yang, Z., Wang, P., Wang, Y., Xu, W., Nevatia, R.: LEGO: learning edge with geometry all at once by watching videos. In: CVPR (2018)

Yang, Z., Wang, P., Xu, W., Zhao, L., Nevatia, R.: Unsupervised learning of geometry with edge-aware depth-normal consistency. In: AAAI (2018)

Yin, Z., Shi, J.: GeoNet: unsupervised learning of dense depth, optical flow and camera pose. In: CVPR (2018)

Zabih, R., Woodfill, J.: Non-parametric local transforms for computing visual correspondence. In: Eklundh, J.-O. (ed.) ECCV 1994. LNCS, vol. 801, pp. 151–158. Springer, Heidelberg (1994). https://doi.org/10.1007/BFb0028345

Zamir, A.R., Sax, A., Shen, W., Guibas, L., Malik, J., Savarese, S.: Taskonomy: disentangling task transfer learning. In: CVPR (2018)

Zhan, H., Garg, R., Weerasekera, C.S., Li, K., Agarwal, H., Reid, I.: Unsupervised learning of monocular depth estimation and visual odometry with deep feature reconstruction. In: CVPR (2018)

Zhang, Z., Schwing, A.G., Fidler, S., Urtasun, R.: Monocular object instance segmentation and depth ordering with CNNs. In: ICCV (2015)

Zhou, T., Brown, M., Snavely, N., Lowe, D.G.: Unsupervised learning of depth and ego-motion from video. In: CVPR (2017)

Zoran, D., Isola, P., Krishnan, D., Freeman, W.T.: Learning ordinal relationships for mid-level vision. In: ICCV (2015)

Acknowledgement

This work was supported in part by NSF under Grant No. (#1755785). We thank NVIDIA Corporation for the donation of GPUs.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Zou, Y., Luo, Z., Huang, JB. (2018). DF-Net: Unsupervised Joint Learning of Depth and Flow Using Cross-Task Consistency. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science(), vol 11209. Springer, Cham. https://doi.org/10.1007/978-3-030-01228-1_3

Download citation

DOI: https://doi.org/10.1007/978-3-030-01228-1_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01227-4

Online ISBN: 978-3-030-01228-1

eBook Packages: Computer ScienceComputer Science (R0)