Abstract

Incorporating spatio-temporal human visual perception into video quality assessment (VQA) remains a formidable issue. Previous statistical or computational models of spatio-temporal perception have limitations to be applied to the general VQA algorithms. In this paper, we propose a novel full-reference (FR) VQA framework named Deep Video Quality Assessor (DeepVQA) to quantify the spatio-temporal visual perception via a convolutional neural network (CNN) and a convolutional neural aggregation network (CNAN). Our framework enables to figure out the spatio-temporal sensitivity behavior through learning in accordance with the subjective score. In addition, to manipulate the temporal variation of distortions, we propose a novel temporal pooling method using an attention model. In the experiment, we show DeepVQA remarkably achieves the state-of-the-art prediction accuracy of more than 0.9 correlation, which is \(\sim \)5% higher than those of conventional methods on the LIVE and CSIQ video databases.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Video quality assessment

- Visual sensitivity

- Convolutional neural network

- Attention mechanism

- HVS

- Temporal pooling

1 Introduction

With the explosive demand for video streaming services, it is vital to provide videos with high quality under unpredictable network conditions. Accordingly, video quality prediction plays an essential role in providing satisfactory streaming services to users. Since the ultimate receiver of video contents is a human, it is essential to develop a model or methodology to pervade human perception into the design of video quality assessment (VQA).

In this paper, we seek to measure the video quality by modeling a mechanism of the human visual system (HVS) by using convolutional neural networks (CNNs). When the HVS perceives a video, the perceived quality is determined by the combination of the spatio-temporal characteristics and the spatial error signal. For example, a local distortion can be either emphasized or masked by visual sensitivity depending on the spatio-temporal characteristics [1,2,3]. For image quality assessment (IQA), deep learning-based visual sensitivity was successfully applied to extract perceptual behavior on spatial characteristics [3]. In contrast, a video is a set of consecutive frames that contain various motion properties. The temporal variation of contents strongly affects the visual perception of the HVS, thus the problem is much more difficult than IQA. Moreover, several temporal quality pooling strategies have been attempted on VQA, but none of them could achieve high correlation as demonstrated for IQA, which still remains as a challenging issue to build a methodology to characterize the temporal human perception. In this respect, we explore a data-driven deep scheme to improve video quality remarkably from the two major motivations: Temporal motion effect and Temporal memory for quality judgment.

Example of predicted sensitivity map: (a) and (c) are a set of consecutive distorted frames; (b) and (d) are the original frames of (a) and (c); (e) is the spatial error map of (c), calculated by an error function err(c, d); (f) is the motion map of distorted frame (c), calculated as subtraction of (c) and (a); (g) is the temporal error map between the distorted frames’ motion map (f) and orginal motion map (d−b); (h) is the predicted spatio-temporal sensitivity map of the distorted frame (c).

Temporal Motion Effect. Our major motivation comes from the combined masking effects caused by spatial and temporal characteristics of a video. Figures 1(a)–(d) show a set of consecutive distorted frames and their originals and Figs. 1(e)–(g) show key examples of the spatial error map, a motion map, and a temporal error map of the distorted frame in (c). Each map will be explained in detail in Sect. 3.2. Being seen as a snapshot, several blocking artifacts induced by wireless network distortion are noticeable around pedestrians as shown in (a). However, they are hardly observable if they are shown in a playing video. This is due to a temporal masking effect which explains the phenomenon that the changes in hue, luminance, and size are less visible to humans when there exist large motions [4]. On the other hand, when a severe error in the motion map occurs as demonstrated in Fig. 1(g), spatial errors become more visible to humans, which is known as a mosquito noise in video processing studies [5, 6]. Owing to these complex interactions between the spatial errors and motions, conventional IQA methods usually result in inaccurate predictions of the perceptual quality of distorted videos. In the meantime, among VQA studies, many attempts have been made to address the above phenomena by modeling the spatio-temporal sensitivity of the HVS [7,8,9,10]. However, these studies yielded limited performances because it is formidable to design a general purpose model considering both spatial and temporal behaviors of the HVS. Therefore, we propose a top-down approach where we establish the relationship between the distortions and perceptual scores first, then it is followed by pixel-wise sensitivities considering both spatial and temporal factors. Figure 1(h) is an example of the predicted spatio-temporal sensitivity map by ours. The dark regions such as the pedestrians are predicted less sensitively by the strong motion in Fig. 1(f), while the bright regions have high weights by the temporal error component in Fig. 1(g).

Temporal Memory for Quality Judgment. In addition, as our second motivation, we explore the retrospective quality judgment patterns of humans given the quality scores of the frames in a video, which is demonstrated in Fig. 2. If there exist severely distorted frames in a video (Video B), humans generally determine that it has lower quality than a video having uniform quality distribution (Video A) even though both of them have the same average quality. Accordingly, a simple statistical temporal pooling does not work well in VQA [1, 11, 12]. Therefore, there has been a demand for an advanced temporal pooling strategy which reflects humans’ retrospective decision behavior on video quality.

Our framework, which we call as Deep Video Quality Assessor (DeepVQA), fully utilizes the advantages of a convolutional neural network. To predict the spatio-temporal sensitivity map, a fully convolutional model is employed to extract useful information regarding visual perception which is embedded in a VQA database. Moreover, we additionally develop a novel pooling algorithm by borrowing an idea from an ‘attention mechanism’, where a neural network model focuses on only specific parts of an input [13,14,15]. To weight the predicted quality score of each frame adaptively, the proposed scheme uses a convolution operation, which we named a convolutional neural aggregation network (CNAN). Rather than taking a single frame quality score, our pooling method considers the distribution of predicted scores. Our contributions are summarized as follows:

-

1.

The spatio-temporal sensitivity map is predicted through self-training without any prior knowledge of the HVS. In addition, a temporal pooling method is adaptively performed by utilizing the CNAN network.

-

2.

Since the spatio-temporal sensitivity map and temporal pooling weight are derived as intermediate results, it is able to infer and visualize an important cue of human perception based on the correlation between the subjective and objective scores from the reverse engineering perspective, which is totally different from modeling based conventional methods.

-

3.

Through achieving the state-of-the-art performance via end-to-end optimization, the human perception can be more clearly verified by the CNN/Attention based full reference (FR) VQA framework.

2 Related Works

2.1 Spatio-Temporal Visual Sensitivity

Numerous VQA models have been developed with respect to the human visual sensitivity. From these works, masking effects have been explained by a spatio-temporal contrast sensitivity function (CSF) [16,17,18]. According to the spatio-temporal CSF which resembles a band-pass filter, humans are not sensitive to signals with very low or high frequencies. Therefore, if strong contrast or motions exist, distortions are less noticeable in accordance with the masking effects [4, 19, 20]. Based on these observations, various VQA methods have been developed. Saad et al. [7] used motion coherency and ego-motion as features that affect temporal masking. Mittal et al. [21] introduced a natural video statistics (NVS) theory, which is based on experimental results that pixel distributions can affect the visual sensitivity. However, there is a limitation in reflecting the complicated behavior of the HVS into the visual sensitivity models by these prior knowledge. Therefore, we design a learning-based model that learns human visual sensitivity autonomously from visual cues that affect the HVS.

Recently, there have been attempts to learn visual sensitivity by using deep-learning in I/VQA [3, 22, 23]. However, they did not consider motion properties when they extracted quality features. Therefore, a limitation still exists in predicting the effect of large motion variance.

2.2 Temporal Pooling

Temporal quality pooling methods have been studied in the VQA field. As mentioned, the simple strategy of taking the average has been employed in many VQA algorithms [24,25,26]. Other studies have analyzed the score distribution and adaptively pooled the temporal scores from the HVS perspective [12]. However, since these naive pooling strategies utilize only limited temporal features, it is difficult to generalize to practical videos.

Recently, the attention mechanism has been developed in machine learning field [13, 15]. Attention mechanisms in neural networks are based on the visual attention in the HVS. The attention-based method essentially allows the model to focus on specific regions and adjust focus over the temporal axis. Motivated by this, there was a study to solve temporal pooling through attention feature embedding [14]. However, since it adaptively embeds a weight vector to each independent score feature vector, it is difficult to effectively utilize the scheme for video temporal quality pooling due to the lack of consideration of the temporal score context. Instead, we use the convolution operation to detect specific patterns of score distribution, so it adaptively weights and combines the temporal scores as shown in Fig. 2.

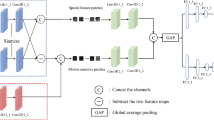

Architecture of DeepVQA. The model takes a distorted frame, the spatial error map, the frame difference map and the temporal error map as input. Step 1: The CNN model is regressed onto a subjective score by the average pooling. Step 2: the overall frame scores are pooled using the CNAN and regressed onto the subjective score.

3 DeepVQA Framework

3.1 Architecture

Visual sensitivity indicates which area of a given spatial error signal is perceived more sensitively to the HVS. The most intuitive way to learn visual sensitivity is to extract the weight map for a given spatial error map. As mentioned in Sect. 1, the visual sensitivity of a video content is determined by the spatial and temporal factors. Hence, by putting the sufficient information containing these factors as inputs, the model is able to learn visual sensitivity that reflects spatial and temporal masking effects. The proposed framework is depicted in Fig. 3. In our method, the spatio-temporal sensitivity map is first learned in step 1, then, a sensitivity weighted error map for each frame is temporally aggregated by the CNAN in step 2. As shown in Fig. 3, the CNAN takes a set of video frame scores in step 1 and computes a single pooled score as output.

A deep CNN with 3 \(\times \) 3 filters is used for step 1 inspired by the recent CNN based work [3] for IQA. To generate the spatio-temporal sensitivity map without losing the location information, the model contains only convolutional layers. In the beginning, the distorted frames and spatial error maps are fed to spatial sensitivity representation. In addition, the model takes the frame difference and temporal error maps into account for a temporal factor. Each set of the input maps goes through independent convolutional layers, and feature maps are concatenated after the second convolutional layer. Zero-padding is applied before each convolution to preserve the feature map size. Two stridden convolutions are used for subsampling. Therefore the size of the final output is 1/4 compared to that of the original frame, and the ground-truth spatial error maps are downscaled to 1/4 correspondingly. At the end of the model in step 1, two fully connected layers are used to regress features onto the subjective scores.

In step 2, the proposed CNAN is trained using the pre-trained CNN model in step 1 and regressed onto the subjective video score as shown in Fig. 3. Once each feature is derived from the previous CNN independently, they are fed into the CNAN. By the CNAN, an aggregated score yields the final score. After then, two fully connected layers are used to regress the final score.

Frame Normalization. From the HVS perspective, each input in Fig. 3 is preprocessed to make the necessary properties stand out. Since the CSF shows a band-pass filter shape peaking at around 4 cycles per degree, and sensitivity drops rapidly at low-frequency [27]. Therefore, the distorted frames are simply normalized by subtracting the lowpass filtered frames from its grey scaled frames (range in [0, 1]). The normalized frames are denoted by \(\hat{I}_{r}^{t}\) and \(\hat{I}_{d}^{t}\) for given distorted \({I}_{d}^{t}\) and reference \({I}_{r}^{t}\) frames where t is frame index.

Patch-Based Video Learning. In the previous deep-learning based IQA works, a patch-based approach was successfully applied [3, 23, 28,29,30]. In our model, each video frame is split into patches, and then all the sensitivity patches in one frame are extracted. Next, these are used to reconstruct the sensitivity map as shown in Fig. 3. To avoid the overlapped regions of the predicted perceptual error map, the step of the sliding window is determined as \(step_{patch} = size_{patch}-(N_{ign} \times 2 \times R)\), where \(N_{ign}\) is the number of ignored pixels, and R is the size ratio of the input and the perceptual error map. In the experiment, the ignored pixel \(N_{ign}\) was setted 4, and the patch size \(size_{patch}\) was 112 \(\times \) 112. To train the model, one video was split into multiple patches, which were then used as one training sample. In step 1, 12 frames per video were uniformly sampled, and 120 frames were used to train the model in step 2.

3.2 Spatio-Temporal Sensitivity Learning

The goal of spatio-temporal sensitivity learning is to derive an importance of each pixel for a given error map. To achieve this, we utilize the distorted frame and spatial error map as the spatial factors. Also, the frame difference and temporal error maps are used as the temporal factor. We define a spatial error map \(\varvec{{e}}_\textsc {s}^t\) as a normalized log difference, as in [3],

where \(\epsilon =1\) for the experiment. To represent motion map, the frame difference is calculated along the consecutive frames. Since each video contains different frames per second (fps), the frame difference map considering fps variation is simply defined as \(\varvec{{f}}^t_d = |{I}_{d}^{t+\delta }-{I}_{d}^{t}|\) where \(\delta = \left\lfloor fps/25 \right\rfloor \). In a similar way, the temporal error map, which is the difference between the motion information of the distorted and reference frames, is defined as \(\varvec{{e}}_\textsc {t}^t = | \varvec{{f}}^t_d-\varvec{{f}}^t_r|\), where \(\varvec{{f}}^t_r\) is the frame difference of the reference frame. Then, the spatio-temporal sensitivity map \(\varvec{\mathbf {s}}^t\) is obtained from the CNN model of step 1 as

where \(CNN_{s1}\) is the CNN model of step 1 with parameters \(\theta _{s1}\). To calculate a global score of each frame, the perceptual error map is defined by \(\varvec{\mathbf {p}}^t = \varvec{\mathbf {s}}^t\bigodot \varvec{\mathbf {e}}^t_\textsc {s}\), where \(\bigodot \) is element-wise product.

Because we use zero-padding before each convolution, we ignore border pixels which tend to be zero. Each four rows and columns for each border are excluded in the experiment. Therefore, the spatial score \({\mu }_\mathbf {p}^t\) is derived by averaging the cropped perceptual error map \(\mathbf {p}^t\) as

where H and W are the height and width of \(\mathbf {p}^t\), (i, j) is a pixel index in cropped region \(\varOmega \). Then, the score in step 1 is obtained by average pooling over spatial scores as \({\mu }_{s1} = \sum _t{{\mu }_\mathbf {p}^t}\). The pooled score is, then, fed into two fully connected layers to rescale the prediction. Then the final objective loss function is defined by a weighted summation of loss function and the regularization term as

where \(\hat{\mathbf {I}}_{d},\varvec{\mathbf {e}}_\textsc {s},\varvec{\mathbf {f}}_d,\varvec{\mathbf {e}}_\textsc {t}\) are sequences of each input, \(f(\cdot )\) is a regression function with parameters \(\phi _1\) and \(\mathbf {s}_{sub}\) is the ground-truth subjective score of the distorted video. In addition, a total variation (TV) and \(L_2\) norm of the parameters are used to relieve high-frequency noise in the spatio-temporal sensitivity map and to avoid overfitting [3]. \(\lambda _1\) and \(\lambda _2\) are their weight parameters, respectively.

3.3 Convolutional Neural Aggregation Network

In step 1, the average of the perceptual error maps over spatial and temporal axes is regressed to a global video score. As mentioned, simply applying a mean pooling results in inaccurate predictions. To deal with this problem, we conduct temporal pooling for each frame’s predicted score using the CNAN in step 2.

The memory attention mechanism has been successfully applied in various applications to pool spatial or temporal data [13,14,15]. Likewise, the CNAN is designed to predict human patterns of score judgment over all the frames’ scores. The basic idea is to use a convolutional neural model to learn external memories through a differentiable addressing/attention scheme. Then the learned memories adaptively weight and combine scores across all frames.

Figure 4 shows the architecture of the CNAN for temporal pooling. The overall frame scores from step 1 are represented by a single vector \(\varvec{\mu }_\mathbf {p}\). We then define a set of corresponding significance \(\mathbf e \) in the attention block using the memory kernel \(\mathbf {m}\). To generate the significance \(\mathbf e \), one dimensional convolution is performed on the given \(\varvec{\mu }_\mathbf {p}\) using the memory kernel \(\mathbf {m}\). In other words, the significance is designed to learn a specific pattern of score variation during a certain filter length. This operation can be described as a simple convolution \(\mathbf e = \mathbf {m}*\varvec{\mu }_\mathbf {p}\). To maintain the dimension of weights equal to \(\varvec{\mu }_\mathbf {p}\), we padded zeros to the border of input \(\varvec{\mu }_\mathbf {p}\). They are then passed to the softmax operator to generate positive temporal weights \(\omega _t\) with \(\sum _t\omega _t=1\) as

Finally, the temporal weight \(\omega _t\) derived from the attention block, is applied to the origin score vector to generate the final aggregated video score as \({\mu }_{s2}=\sum _t{\omega _t{\mu }_\mathbf {p}^t}\). Therefore, the objective function in step 2 is represented as

where, \(f_{\phi _2}(\cdot )\) represents a nonlinear regression function with parameters \(\phi _2\), and \(\theta _{s1}\) refers to parameters in step 1.

4 Experimental Results

Since our goal is to learn spatio-temporal sensitivity and to aggregate frame scores via the CNAN, we chose the baseline model which takes only two spatial inputs (DeepQA [3]). Moreover, to study the effect of the temporal input, two simpler models of DeepVQA without CNAN are defined. First, DeepVQA-3ch takes only two spatial inputs and the frame difference map. Second, DeepVQA-4ch takes all input maps. For both models, average pooling was conducted as described in step 1. We indicate the complete model as DeepVQA-CNAN.

Examples of the predicted sensitivity maps; (a), (f), (k), and (p) are distorted frames with Wireless, IP, H.264 compression, and MPEG-2 compression; (b), (g), (l), and (q) are the objective error maps; (c), (h), (m), and (r) are the frame difference maps; (d), (i), (n), and (s) are the temporal error maps; (e), (j), (o), and (t) are the predicted spatio-temporal sensitivity maps.

4.1 Dataset

To evaluate the proposed algorithm, two different VQA databases were used: LIVE VQA [11], and CSIQ [31] databases. The LIVE VQA database contains 10 references and 150 distorted videos with four distortion types: wireless, IP, H.264 compression and MPEG-2 compression distortions. The CSIQ database includes 12 references and 216 distorted videos with six distortion types: motion JPEG (MJPEG), H.264, HEVC, wavelet compression using SNOW codec, packet-loss in a simulated wireless network and additive white Gaussian noise (AWGN). In the experiment, the ground-truth subjective scores were rescaled to the range [0, 1]. For differential mean opinion score (DMOS) values, their scale was reversed so that the larger values indicate perceptually better videos. Following the recommendation from the video quality experts group [32], we evaluated the performance of the proposed algorithm using two standard measures, i.e., Spearman’s rank order correlation coefficient (SROCC) and Pearson’s linear correlation coefficient (PLCC).

4.2 Spatio-Temporal Sensitivity Prediction

To study the relevance of trained DeepVQA-4ch to the HVS, the predicted spatio-temporal sensitivity maps are shown in Fig. 5. Here, DeepVQA-4ch was trained with \(\lambda _1=0.02\), \(\lambda _2=0.005\). An example frames with four types of artifacts (wireless, IP, H.264 and MPEG-2) are represented in Figs. 5(a), (f), (k) and (p). Figures 5(b), (g), (l) and (q) are the spatial error maps, (c), (h), (m) and (r) are the frame difference maps, (d), (i), (n) and (s) are the temporal error maps, and (e), (j), (o) and (t) are the predicted sensitivity maps. In Fig. 5, darker regions indicate that pixel values are low. In case of wireless and IP distortions, temporal errors ((d) and (i)) are large in overall areas. Since humans are very sensitive to this motion variation cues, predicted sensitivity values ((e) and (j)) are high in all areas. Conversely, for H.264 and M-JPEG2 distortions, temporal errors ((n) and (s)) are relatively lower than those of wireless and IP distortions. In this case, the frame difference map which contains the motion information is a dominant factor in predicting the sensitivity map. In Fig. 5, a foreground object is being tracked in a video. Therefore, the motion maps ((m) and (r)) in the background region have higher values than those of the object. Finally, the value of background regions in the predicted sensitivity maps ((o) and (t)) is relatively low. These results are consistent with the previous studies on the temporal masking effect, which cannot be obtained only by considering spatial masking effect. Therefore, it can be concluded that the temporal information, as well as spatial error, is important to quantify the visual quality of videos.

4.3 CNAN Temporal Pooling

To evaluate the CNAN, we analyzed the relationship between the temporal pooling weight \(\omega \) computed in the attention block and the normalized spatial score \({\mu }_\mathbf {p}\) computed in step 1. Here, the size of kernel \(\mathbf {m}\) was set to 21 \(\times \) 1 experimentally. Figures 6(a) and (b) show two predicted temporal score distributions of \({\mu }_\mathbf {p}\) (straight line) and its temporal weights \(\omega \) (dotted line). In Fig. 6(a), the scores tend to rise or fall sharply at about every 5 frames. Conversely, the temporal weight has a higher value when the predicted score is low. This is because, as mentioned in Sect. 1, the human rating is highly affected by negative peak experiences than the overall average quality [7, 12]. Therefore, it is obvious that the learned model mimics the temporal pooling mechanism of a human.

Figure 6(b) shows that the scores are uniformly distributed except for the middle region. As explained before, the CNAN shows the filter response for a particular pattern by memory kernel \(\mathbf {m}\). Thus, the filter response of a monotonous input signal also tends to be monotonous. However, at near the \(30^{th}\) frame, the temporal pooling weight \(\omega \) is large when the frame score abruptly changes. Therefore, the CNAN enables to reflect the behavior of scoring appropriately and leads to a performance improvement in Tables 3 and 4.

4.4 Number of Frames vs. Computation Cost

The number of video frames used for training the DeepVQA model has a great impact on a computational cost. As shown in Fig. 6, although the quality scores vary for each frame, the distribution shows certain patterns. Therefore, it is feasible to predict a quality score by using only a few sampled frames. To study the computational cost, we measure the performance according to the sampling rate.

For the simulation, a machine powered by a Titan X and equipped with the Theano. SROCC over 130 epochs with the 4 subset frames (6, 12, 48 and 120) is depicted in Fig. 7. When the number of sampled frames was 12, the SROCC was slightly higher than those of the other cases with a faster convergence speed. However, when the sampled frame was 120, the model suffered overfitting after 70 epochs, showing performance degradation. As shown in Table 1, DeepVQA obviously shows higher performance and lower execution time when using a video subset which contains a small number of frames.

Examples of the predicted sensitivity maps with different channel inputs: (a) is the distorted frame; (b) is the original frame; (c) is the frame difference map; (d) is the temporal error map; (e) is the spatial error map (f)–(h) are its predicted sensitivity maps from DeepQA (2ch) [3], DeepVQA-3ch and DeepVQA-4ch, respectively.

4.5 Ablation Study

We verify the ablation of each input map and CNAN in our framework. To evaluate the ablation set, we tested each model (DeepQA (2ch [3]), DeepVQA-3ch, DeepVQA-4ch and DeepVQA-CNAN) on the LIVE and CSIQ databases. The experimental settings will be explained in Sect. 4.6 and the comparison results are tabulated in Tables 3 and 4. DeepQA [3] using only the distorted frame and spatial error map yielded lower performance than DeepVQA-3ch and 4ch. Since DeepQA only infers the visual sensitivity of the spatial masking effect, it is strongly influenced by the spatial error signals. However, the performances of DeepVQA-3ch and 4ch which were designed to infer the temporal motion effects were gradually improved. Moreover, the DeepVQA model combined with CNAN showed the highest performance since it considers the human patterns of quality judgment.

To study the effect of each channel input, we visualized the spatio-temporal sensitivity maps over different channel input. Figure 8 shows the predicted sensitivity map with different channel inputs. Figures 8(a), (b) and (e) are the distorted frame, its original and the spatial error map, respectively. In the case of Fig. 8(f), the local region of the sensitivity map looks similar to the spatial blocking artifact. However, when the frame difference map (Fig. 8(c)) is added in the model as Fig. 8 (g), the sensitivity is decreased for the regions with strong motions (darker region) as we expected. Finally, as Fig. 8(h), when all the four inputs including the temporal error map (Fig. 8(d)) are used, the sensitivity map is learned to consider all of the motion effects as described in Sect. 1. In addition, as the number of channels increases, the predicted sensitivity map tends to be smoother, which agrees with the HVS well [3].

4.6 Performance Comparison

To evaluate the performances, we compared DeepVQA with state-of-the-art I/VQA methods on the LIVE and CSIQ databases. We first randomly divided the reference videos into two subsets (80% for training and 20% for testing) and their corresponding distorted videos were divided in the same way so that there was no overlap between the two sets. DeepVQA was trained in a non-distortion-specific way so that all the distortion types were used simultaneously. The training stage of step 1 (step 2) iterated 300 (20) epochs, then a model with the lowest validation error was chosen over the epochs. The accuracy of step 1 mostly saturated after 200 epochs as shown in Fig. 7. The correlation coefficients of the testing model are the median values of 20 repeated experiments while dividing the training and testing sets randomly in order to eliminate the performance bias. DeepVQA-3ch, DeepVQA-4ch and DeepVQA-CNAN were compared to FR I/VQA models: PSNR, SSIM [33], VIF [34], ST-MAD [35], ViS3 [36], MOVIE [25] and DeepQA [3]. For IQA metrics (PSNR, SSIM, VIF and DeepQA), we took an average pooling for each frame score to get a video score. In addition, the no-reference (NR) VQA models were benchmarked: V-BLIINDS [7], SACONVA [26]. To verify the temporal pooling performance, we further compare the existing temporal pooling method: VQPooling [12].

Tables 3 and 4 show the PLCC and SROCC comparisons for individual distortion types on the LIVE and CSIQ databases. The last column in each table reports overall SROCC and PLCC for all the distortion types, and the top three models for each criterion are shown in bold. Since our proposed model is a non-distortion specific model, the model should work well for overall performance when various distortion types coexist in the dataset. In our experiment, the highest SROCC and PLCC of overall distortion types were achieved by DeepVQA-CNAN in all the databases. In addition, DeepVQA-CNAN are generally competitive in most distortion types, even when each type of distortion is evaluated separately. Because the most of the distortion types in LIVE and CSIQ is distorted by video compression, which cause local blocking artifacts, there are many temporal errors in the databases. For this reason, the spatio-temporal sensitivity map is excessively activated in the large-scale block distortion type such as Fig. 5(j). Therefore, DeepVQA achieved relatively low performance in face of Wireless and IP distortions which include a large size of blocking artifacts. As shown in Table 4, since AWGN causes only spatial distortion, it shows a relatively low performance compared to the other types having blocking artifacts. Nevertheless, DeepVQA achieved a competitive and consistent accuracy across all the databases. Also, comparing the DeepVQA-4ch and DeepQA, we can infer that using the temporal inputs helps the model to extract useful features leading to an increase in an accuracy. Furthermore, VQPooling (DeepVQA-VQPooling) showed a slight improvement compared to DeepVQA-4ch, but CNAN showed approximately \(\sim \)2% improvement. Therefore, it can be concluded that temporal pooling via the CNAN improves performance the overall prediction.

4.7 Cross Dataset Test

To test the generalization capability of DeepVQA, the model was trained using the subset of the CSIQ video database, and tested on the LIVE video database. Since the CSIQ video database contains broader kinds of distortion types, we selected four distortion types (H.264, MJPEG, PLoss, and HEVC) which are similar in the LIVE database. The results are shown in Table 2, where both DeepVQA and DeepVQA-CNAN show nice performances. We can conclude that this models do not depend on the databases.

5 Conclusion

In this paper, we proposed a novel FR-VQA framework using a CNN and a CNAN. By learning a human visual behavior in conjunction with spatial and temporal effects, it turned out the proposed model is able to learn the spatio-temporal sensitivity from a human perception point of view. Moreover, the temporal pooling technique using the CNAN predicted the temporal scoring behavior of humans. Through the rigorous simulations, we demonstrated that the predicted sensitivity maps agree with the HVS. The spatio-temporal sensitivity maps were robustly predicted against the various motion and distortion types. In addition, DeepVQA achieved the state-of-the-art correlations on LIVE and CSIQ databases. In the future, we plan to advance the proposed framework to NR-VQA, which is one of the most challenging problems.

References

Ninassi, A., Le Meur, O., Le Callet, P., Barba, D.: Considering temporal variations of spatial visual distortions in video quality assessment. IEEE J. Sel. Top. Signal Process. 3(2), 253–265 (2009)

Bovik, A.C.: Automatic prediction of perceptual image and video quality. Proc. IEEE 101(9), 2008–2024 (2013)

Kim, J., Lee, S.: Deep learning of human visual sensitivity in image quality assessment framework. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

Suchow, J.W., Alvarez, G.A.: Motion silences awareness of visual change. Curr. Biol. 21(2), 140–143 (2011)

Fenimore, C., Libert, J.M., Roitman, P.: Mosquito noise in mpeg-compressed video: test patterns and metrics. In: Proceedings of SPIE the International Society For Optical Engineering, pp. 604–612. International Society for Optical Engineering (2000)

Jacquin, A., Okada, H., Crouch, P.: Content-adaptive postfiltering for very low bit rate video. In: Proceedings of Data Compression Conference, DCC 1997, pp. 111–120. IEEE (1997)

Saad, M.A., Bovik, A.C., Charrier, C.: Blind prediction of natural video quality. IEEE Trans. Image Process. 23(3), 1352–1365 (2014)

Manasa, K., Channappayya, S.S.: An optical flow-based full reference video quality assessment algorithm. IEEE Trans. Image Process. 25(6), 2480–2492 (2016)

Kim, T., Lee, S., Bovik, A.C.: Transfer function model of physiological mechanisms underlying temporal visual discomfort experienced when viewing stereoscopic 3D images. IEEE Trans. Image Process. 24(11), 4335–4347 (2015)

Kim, J., Zeng, H., Ghadiyaram, D., Lee, S., Zhang, L., Bovik, A.C.: Deep convolutional neural models for picture-quality prediction: challenges and solutions to data-driven image quality assessment. IEEE Signal Process. Mag. 34(6), 130–141 (2017)

Seshadrinathan, K., Soundararajan, R., Bovik, A.C., Cormack, L.K.: Study of subjective and objective quality assessment of video. IEEE Trans. Image Process. 19(6), 1427–1441 (2010)

Park, J., Seshadrinathan, K., Lee, S., Bovik, A.C.: Video quality pooling adaptive to perceptual distortion severity. IEEE Trans. Image Process. 22(2), 610–620 (2013)

Vinyals, O., Bengio, S., Kudlur, M.: Order matters: sequence to sequence for sets. arXiv preprint arXiv:1511.06391 (2015)

Yang, J., et al.: Neural aggregation network for video face recognition. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2492–2495

Graves, A., Wayne, G., Danihelka, I.: Neural turing machines. arXiv preprint arXiv:1410.5401 (2014)

Robson, J.: Spatial and temporal contrast-sensitivity functions of the visual system. JOSA 56(8), 1141–1142 (1966)

Lee, S., Pattichis, M.S., Bovik, A.C.: Foveated video quality assessment. IEEE Trans. Multimed. 4(1), 129–132 (2002)

Lee, S., Pattichis, M.S., Bovik, A.C.: Foveated video compression with optimal rate control. IEEE Trans. Image Process. 10(7), 977–992 (2001)

Legge, G.E., Foley, J.M.: Contrast masking in human vision. JOSA 70(12), 1458–1471 (1980)

Kim, H., Lee, S., Bovik, A.C.: Saliency prediction on stereoscopic videos. IEEE Trans. Image Process. 23(4), 1476–1490 (2014)

Mittal, A., Saad, M.A., Bovik, A.C.: A completely blind video integrity oracle. IEEE Trans. Image Process. 25(1), 289–300 (2016)

Le Callet, P., Viard-Gaudin, C., Barba, D.: A convolutional neural network approach for objective video quality assessment. IEEE Trans. Neural Netw. 17(5), 1316–1327 (2006)

Kim, J., Nguyen, A.D., Lee, S.: Deep CNN-based blind image quality predictor. IEEE Trans. Neural Netw. Learn. Syst. PP(99), 1–14 (2018)

Chandler, D.M., Hemami, S.S.: VSNR: a wavelet-based visual signal-to-noise ratio for natural images. IEEE Trans. Image Process. 16(9), 2284–2298 (2007)

Seshadrinathan, K., Bovik, A.C.: Motion tuned spatio-temporal quality assessment of natural videos. IEEE Trans. Image Process. 19(2), 335–350 (2010)

Li, Y., Po, L.M., Cheung, C.H., Xu, X., Feng, L., Yuan, F., Cheung, K.W.: No-reference video quality assessment with 3D shearlet transform and convolutional neural networks. IEEE Trans. Circuits Syst. Video Technol. 26(6), 1044–1057 (2016)

Daly, S.J.: Visible differences predictor: an algorithm for the assessment of image fidelity. In: Human Vision, Visual Processing, and Digital Display III, vol. 1666, pp. 2–16. International Society for Optics and Photonics (1992)

Kim, J., Lee, S.: Fully deep blind image quality predictor. IEEE J. Sel. Top. Signal Process. 11(1), 206–220 (2017)

Oh, H., Ahn, S., Kim, J., Lee, S.: Blind deep S3D image quality evaluation via local to global feature aggregation. IEEE Trans. Image Process. 26(10), 4923–4936 (2017)

Ye, P., Kumar, J., Kang, L., Doermann, D.: Unsupervised feature learning framework for no-reference image quality assessment. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1098–1105. IEEE (2012)

Laboratory of computational perception & image quality, Oklahoma State University, CSIQ video database. http://vision.okstate.edu/?loc=stmad

VQEG: Final report from the video quality experts group on the validation of objective models of video quality assessment, phase II

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Sheikh, H.R., Bovik, A.C.: Image information and visual quality. IEEE Trans. Image Process. 15(2), 430–444 (2006)

Vu, P.V., Vu, C.T., Chandler, D.M.: A spatiotemporal most-apparent-distortion model for video quality assessment. In: 18th IEEE International Conference on Image Processing (ICIP), pp. 2505–2508. IEEE (2011)

Vu, P.V., Chandler, D.M.: ViS3: an algorithm for video quality assessment via analysis of spatial and spatiotemporal slices. J. Electron. Imaging 23(1), 013016 (2014)

Acknowledgment

This work was supported by Institute for Information & communications Technology Promotion through the Korea Government (MSIP) (No. 2016-0-00204, Development of mobile GPU hardware for photo-realistic real-time virtual reality).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Kim, W., Kim, J., Ahn, S., Kim, J., Lee, S. (2018). Deep Video Quality Assessor: From Spatio-Temporal Visual Sensitivity to a Convolutional Neural Aggregation Network. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science(), vol 11205. Springer, Cham. https://doi.org/10.1007/978-3-030-01246-5_14

Download citation

DOI: https://doi.org/10.1007/978-3-030-01246-5_14

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01245-8

Online ISBN: 978-3-030-01246-5

eBook Packages: Computer ScienceComputer Science (R0)