Abstract

Prediction accuracy has long been the sole standard for comparing the performance of different image classification models, including in the ImageNet competition. However, recent studies have highlighted the lack of robustness of well-trained deep neural networks to adversarial examples. Visually imperceptible perturbations to natural images can easily be crafted to mislead image classifiers into misclassification. To demystify the trade-offs between robustness and accuracy, in this paper we thoroughly benchmark 18 ImageNet models using multiple robustness metrics, including the distortion, success rate and transferability of adversarial examples between 306 pairs of models. Our extensive experimental results reveal several new insights: (1) linear scaling law: the empirical \(\ell _2\) and \(\ell _\infty \) distortion metrics scale linearly with the logarithm of classification error; (2) model architecture is a more critical factor to robustness than model size, and the disclosed accuracy-robustness Pareto frontier can be used as an evaluation criterion for ImageNet model designers; (3) for a similar network architecture, increasing network depth slightly improves robustness in \(\ell _\infty \) distortion; (4) there exist models (in the VGG family) that exhibit high adversarial transferability, while most adversarial examples crafted from one model can only be transferred within the same family. Experiment code is publicly available at https://github.com/huanzhang12/Adversarial_Survey.

D. Su and H. Zhang contributed equally to this work.


1 Introduction

Image classification is a fundamental problem in computer vision and serves as the foundation of multiple tasks such as object detection, image segmentation, object tracking, action recognition, and autonomous driving. Since the breakthrough achieved by AlexNet [1] in the ImageNet Challenge (ILSVRC) 2012 [2], deep neural networks (DNNs) have become the dominant force in this domain. From then on, DNN models with increasing depth and more complex building blocks have been proposed. While these models continue to achieve steadily increasing accuracies, their robustness has not been thoroughly studied, so little is known about whether the high accuracies come at the price of reduced robustness.

A common approach to evaluating the robustness of DNNs is via adversarial attacks [3,4,5,6,7,8,9,10,11], where imperceptible adversarial examples are crafted to mislead DNNs. Generally speaking, the more easily adversarial examples can be generated, the less robust the DNN is. In safety-critical applications, adversarial examples may lead to significant property damage or loss of life. For example, [12] has shown that a subtly modified physical Stop sign can be misidentified by a real-time object recognition system as a Speed Limit sign. In addition to adversarial attacks, neural network robustness can also be estimated in an attack-agnostic manner. For example, [13] and [14] theoretically analyzed the robustness of some simple neural networks by estimating their global and local Lipschitz constants, respectively. [15] proposes using extreme value theory to estimate a lower bound of the minimum adversarial distortion, an approach that can be efficiently applied to any neural network classifier. [16] proposes a robustness lower bound based on linear approximations of ReLU activations. In this work, we evaluate DNN robustness by using specific attacks as well as attack-agnostic approaches. We also note that the adversarial robustness studied in this paper is different from [17], where “robustness” is studied in the context of label semantics and accuracy.

Since the last ImageNet challenge ended in 2017, we are now at the beginning of the post-ImageNet era. In this work, we revisit 18 DNN models that were submitted to the ImageNet Challenge or achieved state-of-the-art performance. These models have different sizes and classification performance, and belong to multiple architecture families such as AlexNet [1], VGG Nets [18], Inception Nets [19], ResNets [20], DenseNets [21], MobileNets [22], and NASNets [23]. Therefore, they are suitable for analyzing how different factors influence model robustness. Specifically, we aim to examine the following questions in this study:

1. Has robustness been sacrificed for the increased classification performance?

2. Which factors influence the robustness of DNNs?

In the course of evaluation, we have gained a number of insights and we summarize our contributions as follows:

- Tested on a large number of well-trained deep image classifiers, we find that robustness is sacrificed when solely pursuing higher classification performance. Indeed, Fig. 2(a) and (b) clearly show that the \(\ell _2\) and \(\ell _\infty \) adversarial distortions scale almost linearly with the logarithm of model classification errors. Therefore, classifiers with very low test errors are highly vulnerable to adversarial attacks. We advocate that ImageNet network designers should evaluate model robustness via our disclosed accuracy-robustness Pareto frontier.

- Networks of the same family, e.g., VGG, Inception Nets, ResNets, and DenseNets, share similar robustness properties. This suggests that network architecture has a larger impact on robustness than model size. In addition, we observe that the \(\ell _\infty \) robustness slightly improves when ResNets, Inception Nets, and DenseNets become deeper.

- The adversarial examples generated by the VGG family transfer very well to all the other 17 models, while most adversarial examples of other models can only transfer within the same model family. Interestingly, this finding provides an opportunity to reverse-engineer the architecture of black-box models.

- We present the first comprehensive study that compares the robustness of 18 popular and state-of-the-art ImageNet models, offering a complete picture of the accuracy vs. robustness trade-off. In terms of transferability of adversarial examples, we conduct thorough experiments on each ordered pair of the 18 ImageNet networks (306 pairs in total), which is the largest scale to date.

2 Background and Experimental Setup

In this section, we introduce the background knowledge and describe our experimental setup. We study both untargeted and targeted attacks in this paper. Let \(\mathbf {x}_0\) denote the original image and \(\mathbf {x}\) denote the adversarial image of \(\mathbf {x}_0\). The DNN model \(F(\cdot )\) outputs a class label (or a probability distribution over class labels) as the prediction. Without loss of generality, we assume that \(F(\mathbf {x}_0)=y_0\), the ground truth label of \(\mathbf {x}_0\), to avoid trivial solutions. For untargeted attack, the adversarial image \(\mathbf {x}\) is crafted such that \(\mathbf {x}\) is close to \(\mathbf {x}_0\) but \(F(\mathbf {x})\ne y_0\). For targeted attack, a target class t (\(t\ne y_0\)) is provided and the adversarial image \(\mathbf {x}\) should satisfy (i) \(\mathbf {x}\) is close to \(\mathbf {x}_0\), and (ii) \(F(\mathbf {x})=t\).

2.1 Deep Neural Network Architectures

In this work, we study the robustness of 18 deep image classification models belonging to 7 architecture families, as summarized below. The basic properties of these models are given in Table 1.

- AlexNet. AlexNet [1] is one of the pioneering and most well-known deep convolutional neural networks. Compared to many recent architectures, AlexNet has a relatively simple layout that is composed of 5 convolutional layers followed by two fully connected layers and a softmax output layer.

- VGG Nets. The overall architecture of VGG nets [18] is similar to that of AlexNet, but they are much deeper, with more convolutional layers. Another main difference between VGG nets and AlexNet is that all the convolutional layers of VGG nets use a small (\(3 \times 3\)) kernel, while the first two layers of AlexNet use \(11\times 11\) and \(5\times 5\) kernels, respectively. In our paper, we study VGG networks with 16 and 19 layers, which have 138 million and 144 million parameters, respectively.

- Inception Nets. The family of Inception nets utilizes inception modules [24] that act as multi-level feature extractors. Specifically, each inception module consists of multiple branches of \(1\times 1\), \(3\times 3\), and \(5\times 5\) filters, whose outputs are stacked along the channel dimension and fed into the next layer in the network. In this paper, we study all popular networks in this family, including Inception-v1 (GoogLeNet) [19], Inception-v2 [25], Inception-v3 [26], Inception-v4, and Inception-ResNet [27]. All these models are much deeper than AlexNet/VGG but have significantly fewer parameters.

- ResNets. To solve the vanishing gradient problem in training very deep neural networks, the authors of [20] propose ResNets, where each layer learns a residual function with reference to its input through skip-layer paths, or “identity shortcut connections”. This architecture enables practitioners to train very deep neural networks that outperform shallow models. In our study, we evaluate 3 ResNets with different depths.

- DenseNets. To further exploit the “identity shortcut connections” technique from ResNets, [21] proposes DenseNets, which connect all layers with each other within a dense block. Besides tackling the vanishing gradient problem, the authors also claimed other advantages such as encouraging feature reuse and reducing the number of parameters in the model. We study 3 DenseNets with different depths and widths.

- MobileNets. MobileNets [22] are a family of lightweight and efficient neural networks designed for mobile and embedded systems with restricted computational resources. The core components of MobileNets are depthwise separable filters with factorized convolutions. Separable filters factorize a standard convolution into two parts, a depthwise convolution and a \(1\times 1\) pointwise convolution, which can reduce computation and model size dramatically. In this study, we include 3 MobileNets with different depths and width multipliers.

- NASNets. NASNets [23] are a family of networks automatically generated by reinforcement learning, using a policy gradient algorithm to optimize architectures [28]. Building blocks of the model are first searched on a smaller dataset and then transferred to a larger dataset.
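To make the parameter figures in this list concrete, the following Python sketch counts parameters for the standard VGG configurations and compares the multiply-add cost of a depthwise separable convolution with that of a standard convolution. It is our own illustration, not code from the paper: it assumes 224×224 inputs, 3×3 convolutions with biases, five pooling stages, and the usual 4096-4096-1000 fully connected head, and it ignores batch normalization. All helper names are hypothetical.

```python
from typing import Sequence


def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Parameters of a standard k x k convolution with bias."""
    return k * k * c_in * c_out + c_out


def fc_params(n_in: int, n_out: int) -> int:
    """Parameters of a fully connected layer with bias."""
    return n_in * n_out + n_out


def vgg_params(convs_per_block: Sequence[int]) -> int:
    """Count VGG parameters: five blocks of 3x3 convs, then three FC layers."""
    widths = [64, 128, 256, 512, 512]
    total, c_in = 0, 3  # RGB input
    for n_convs, c_out in zip(convs_per_block, widths):
        for _ in range(n_convs):
            total += conv_params(3, c_in, c_out)
            c_in = c_out
    # Five 2x2 poolings turn a 224x224 input into a 7x7x512 feature map.
    total += fc_params(7 * 7 * 512, 4096) + fc_params(4096, 4096) + fc_params(4096, 1000)
    return total


def separable_cost_ratio(k: int, c_in: int, c_out: int) -> float:
    """Multiply-adds per output position of a depthwise separable conv relative
    to a standard conv; algebraically equal to 1 / c_out + 1 / k**2."""
    standard = k * k * c_in * c_out
    separable = k * k * c_in + c_in * c_out  # depthwise k x k + pointwise 1 x 1
    return separable / standard


print(vgg_params([2, 2, 3, 3, 3]))  # VGG 16 -> 138357544
print(vgg_params([2, 2, 4, 4, 4]))  # VGG 19 -> 143667240
print(round(separable_cost_ratio(3, 256, 256), 4))  # -> 0.115
```

Under these assumptions the counts come out to roughly 138 and 144 million, in line with the figures quoted for VGG 16 and VGG 19, and a 3×3 separable layer with 256 channels needs only about 11.5% of the multiply-adds of its standard counterpart.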

2.2 Robustness Evaluation Approaches

We use both adversarial attacks and attack-agnostic approaches to evaluate network robustness. We first generate adversarial examples for each network using multiple state-of-the-art attack algorithms, and then analyze the attack success rates and the distortions of the adversarial images. In this experiment, we assume full access to the target DNNs, which is known as the white-box setting. To further study the transferability of the adversarial images generated by each network, we consider all 306 network pairs and, for each pair, conduct a transfer attack that uses one model’s adversarial examples to attack the other model. Since transfer attacks are widely used in the black-box setting [31,32,33,34,35,36], where an adversary has no explicit knowledge of the target models, this experiment can provide some evidence on the networks’ black-box robustness. Finally, we compute the CLEVER [15] score, a state-of-the-art attack-agnostic network robustness metric, to estimate each network’s intrinsic robustness. Below, we briefly introduce all the evaluation approaches used in our study.

We evaluate the robustness of DNNs using the following adversarial attacks:

- Fast Gradient Sign Method (FGSM). FGSM [3] is one of the pioneering and most efficient attack algorithms. It only needs to compute the gradient once to generate an adversarial example \(\mathbf {x}\):

$$\begin{aligned} \mathbf {x}\leftarrow \mathrm {clip}[\mathbf {x}_0-\epsilon \ \mathbf{sgn }(\nabla J(\mathbf {x}_0,t))], \end{aligned}$$

where \(\mathbf{sgn }(\nabla J(\mathbf {x}_0,t))\) is the sign of the gradient of the training loss with respect to \(\mathbf {x}_0\), and \(\mathrm {clip}(\mathbf {x})\) ensures that \(\mathbf {x}\) stays within the range of pixel values. This is the targeted form, which decreases the loss of the target class t; the untargeted form instead increases the loss of the true label, \(\mathbf {x}\leftarrow \mathrm {clip}[\mathbf {x}_0+\epsilon \ \mathbf{sgn }(\nabla J(\mathbf {x}_0,y_0))]\). FGSM is efficient because it is a one-step attack.

- Iterative FGSM (I-FGSM). Albeit efficient, FGSM suffers from a relatively low attack success rate. To address this, [37] proposes iterative FGSM, which applies FGSM multiple times with a finer distortion and is able to fool the network in more than \(99\%\) of cases. When we run I-FGSM for T iterations, we set the per-iteration perturbation to \(\frac{\epsilon }{T}\ \mathbf{sgn }(\nabla J(\mathbf {x},t))\), where the gradient is re-evaluated at the current iterate \(\mathbf {x}\) in every iteration. I-FGSM can be viewed as a projected gradient descent (PGD) method inside an \(\ell _\infty \) ball [38], and it usually finds adversarial examples with small \(\ell _\infty \) distortions.

- C&W attack. [39] formulates the problem of generating adversarial examples \(\mathbf {x}\) as the following optimization problem:

$$\begin{aligned}&\min \limits _{\mathbf {x}}\, \lambda f(\mathbf {x},t)+\Vert \mathbf {x}-\mathbf {x}_0\Vert _2^2\\&\mathrm {s.t.}\,\,\,\, \mathbf {x}\in [0,1]^p, \end{aligned}$$

where p is the input dimension and \(f(\mathbf {x},t)\) is a loss function that measures the distance between the prediction of \(\mathbf {x}\) and the target label t. In this work, we choose

$$f(\mathbf {x},t)=\max \{\max \limits _{i\ne t}[(\mathbf{Logit }(\mathbf {x}))_i-(\mathbf{Logit }(\mathbf {x}))_t],-\kappa \}$$

as it was shown to be effective by [39]. Here \(\mathbf{Logit }(\mathbf {x})\) denotes the vector representation of \(\mathbf {x}\) at the logit layer, and \(\kappa \) is a confidence level: \(f(\mathbf {x},t)\) reaches its minimum \(-\kappa \) once the target logit exceeds every other logit by at least \(\kappa \). A larger \(\kappa \) generally improves the transferability of adversarial examples.

C&W attack is by far one of the strongest attacks for finding adversarial examples with small \(\ell _2\) perturbations. It can achieve almost \(100\%\) attack success rate and has bypassed 10 different adversarial example detection methods [40].

- EAD-L1 attack. EAD-L1 attack [41] refers to the Elastic-Net Attacks to DNNs, a more general formulation than the C&W attack. It uses elastic-net regularization, a linear combination of \(\ell _1\) and \(\ell _2\) norms, to penalize large distortion between the original and adversarial examples. Specifically, it learns the adversarial example \(\mathbf {x}\) via

$$\begin{aligned}&\min \limits _{\mathbf {x}}\,\, \lambda f(\mathbf {x},t)+\Vert \mathbf {x}-\mathbf {x}_0\Vert _2^2+\beta \Vert \mathbf {x}-\mathbf {x}_0\Vert _1\\&\mathrm {s.t.}\,\,\,\, \mathbf {x}\in [0,1]^p, \end{aligned}$$

where \(f(\mathbf {x},t)\) is the same as in the C&W attack; setting \(\beta =0\) recovers the C&W formulation. [41,42,43,44] show that EAD-L1 attack is highly transferable and can bypass many defenses and analyses.
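The attack formulations above can be sketched in NumPy on a toy model. This is an illustration only, not the attack code used in our experiments: a hypothetical linear classifier (`ToyLinearModel`) stands in for the DNN so that the cross-entropy gradient has a closed form, pixel values are assumed to lie in [0, 1], and for C&W and EAD-L1 the sketch only evaluates the objectives; it does not implement the optimizers those attacks use to minimize them.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()


class ToyLinearModel:
    """Hypothetical stand-in for a DNN: Logit(x) = W @ x + b, inputs in [0, 1]^p."""

    def __init__(self, W: np.ndarray, b: np.ndarray):
        self.W, self.b = W, b

    def logits(self, x: np.ndarray) -> np.ndarray:
        return self.W @ x + self.b

    def grad_ce(self, x: np.ndarray, t: int) -> np.ndarray:
        """Gradient w.r.t. x of the cross-entropy loss J(x, t) for label t."""
        p = softmax(self.logits(x))
        p[t] -= 1.0  # softmax minus one-hot(t)
        return self.W.T @ p


def fgsm_targeted(model, x0, t, eps):
    """One step toward class t: x = clip[x0 - eps * sgn(grad J(x0, t))]."""
    return np.clip(x0 - eps * np.sign(model.grad_ce(x0, t)), 0.0, 1.0)


def ifgsm_targeted(model, x0, t, eps, T=10):
    """T steps of size eps / T, each using the gradient at the current iterate,
    projected back onto the l_inf ball of radius eps around x0 (PGD view)."""
    x = x0.copy()
    for _ in range(T):
        x = x - (eps / T) * np.sign(model.grad_ce(x, t))
        x = np.clip(x, x0 - eps, x0 + eps)  # l_inf projection
        x = np.clip(x, 0.0, 1.0)            # valid pixel range
    return x


def cw_margin_loss(logits, t, kappa=0.0):
    """f(x, t) = max{ max_{i != t} Z_i - Z_t, -kappa } on the logit vector Z."""
    others = np.delete(logits, t)
    return max(others.max() - logits[t], -kappa)


def ead_objective(model, x, x0, t, lam, beta, kappa=0.0):
    """lam * f(x, t) + ||x - x0||_2^2 + beta * ||x - x0||_1 (beta = 0 gives C&W)."""
    d = x - x0
    return lam * cw_margin_loss(model.logits(x), t, kappa) + d @ d + beta * np.abs(d).sum()


# Usage on random toy data (values are arbitrary, for illustration only).
rng = np.random.default_rng(0)
model = ToyLinearModel(rng.normal(size=(10, 64)), np.zeros(10))
x0 = rng.uniform(size=64)
t = 3  # hypothetical target class
x_adv = ifgsm_targeted(model, x0, t, eps=0.05, T=10)
print(np.abs(x_adv - x0).max(), ead_objective(model, x_adv, x0, t, lam=10.0, beta=1e-3))
```

The projection step is what makes I-FGSM an instance of PGD: whatever the step sizes, the iterate never leaves the \(\ell _\infty \) ball of radius \(\epsilon \).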

We also evaluate network robustness using an attack-agnostic approach:

- CLEVER. CLEVER [15] (Cross-Lipschitz Extreme Value for nEtwork Robustness) uses extreme value theory to estimate a lower bound of the minimum adversarial distortion. Given an image \(\mathbf {x}_0\), CLEVER provides an estimated lower bound on the \(\ell _p\) norm of the minimum distortion \(\delta \) required to misclassify the distorted image \(\mathbf {x}_0+\delta \). A higher CLEVER score suggests that the network is likely to be more robust to adversarial examples. CLEVER is attack-agnostic and reflects the intrinsic robustness of a network, rather than the robustness under a certain attack.
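The quantity CLEVER estimates can be illustrated with a deliberately simplified sketch. Assuming a logit margin \(g(\mathbf {x}) = Z_c(\mathbf {x}) - Z_t(\mathbf {x})\) between the predicted class c and a target class t, the \(\ell _2\) score has the form \(\min (g(\mathbf {x}_0)/\hat{L}, R)\), where \(\hat{L}\) estimates the largest gradient norm of g in a ball of radius R around \(\mathbf {x}_0\). CLEVER obtains \(\hat{L}\) by fitting a reverse Weibull distribution to per-batch maxima of sampled gradient norms; the sketch below skips that extreme-value fit and simply takes the largest sampled norm, so it approximates the idea rather than reproducing the CLEVER reference implementation. Function names and default parameters are hypothetical.

```python
import numpy as np


def sample_l2_ball(center, radius, n, rng):
    """Draw n points uniformly from the l2 ball of the given radius around center."""
    p = center.size
    directions = rng.normal(size=(n, p))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    radii = radius * rng.uniform(size=(n, 1)) ** (1.0 / p)
    return center + radii * directions


def clever_like_l2(margin_fn, margin_grad, x0, radius=0.5, n_batches=50, batch_size=64, seed=0):
    """Simplified l2 robustness estimate min(g(x0) / L_hat, radius).

    CLEVER fits a reverse Weibull distribution to the per-batch maxima below and
    uses its location parameter as L_hat; as a simplification, L_hat here is
    just the largest sampled gradient norm.
    """
    rng = np.random.default_rng(seed)
    batch_maxima = []
    for _ in range(n_batches):
        pts = sample_l2_ball(x0, radius, batch_size, rng)
        batch_maxima.append(max(np.linalg.norm(margin_grad(x)) for x in pts))
    L_hat = max(batch_maxima)
    return min(margin_fn(x0) / L_hat, radius)


# Hypothetical two-class linear model: the gradient of g is constant, so
# g(x0) / ||w||_2 is exactly the l2 distance from x0 to the decision boundary.
W = np.array([[1.0, 0.0], [0.0, 1.0]])


def margin(x):
    return (W[0] - W[1]) @ x


def grad(x):
    return W[0] - W[1]


x0 = np.array([0.6, 0.4])
print(clever_like_l2(margin, grad, x0))
```

For a linear model the estimate coincides with the exact minimum \(\ell _2\) distortion; for a real DNN the gradient norm varies across the ball, which is why CLEVER models the distribution of its maxima rather than trusting a finite sample.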

2.3 Dataset

In this work, we use ImageNet [45] as the benchmark dataset for the following reasons: (i) the ImageNet dataset can take full advantage of the studied DNN models, since all of them were designed for ImageNet challenges; (ii) compared to widely used small-scale datasets such as MNIST, CIFAR-10 [46], and GTSRB [47], ImageNet has significantly more images and classes and is more challenging; and (iii) it has been shown by [39, 48] that ImageNet images are easier to attack but harder to defend than images from the MNIST and CIFAR datasets. Given all these observations, ImageNet is an ideal candidate for studying the robustness of state-of-the-art deep image classification models.

A set of 1,000 randomly selected images from the ImageNet validation set is used to generate adversarial examples for each model. For each image, we conduct targeted attacks with a random target and with the least likely target, as well as an untargeted attack. Misclassified images are excluded. Following the setting in [15], we compute CLEVER scores for 100 of the 1,000 images, as CLEVER is relatively computationally expensive. Additionally, we conducted another experiment on the subset of images (327 in total) that are correctly classified by all 18 examined ImageNet models. The results are consistent with our main results and are given in the supplementary material.
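The per-image target selection described above could look like the following sketch. It assumes the model's logits for the sampled validation images are already available as an array, and `select_targets` is a hypothetical helper, not part of the released experiment code. The least likely target is taken as the class with the lowest logit (equivalently, the lowest softmax probability), and the random target is drawn uniformly from the classes other than the true label.

```python
import numpy as np


def select_targets(logits: np.ndarray, labels: np.ndarray, rng: np.random.Generator):
    """Keep correctly classified images and pick two targets for each of them.

    Returns the kept indices, a random target (uniform over all classes except
    the true label), and the least likely target (class with the lowest logit).
    """
    keep = np.flatnonzero(logits.argmax(axis=1) == labels)  # drop misclassified images
    n_classes = logits.shape[1]
    random_t, least_t = [], []
    for i in keep:
        r = int(rng.integers(n_classes - 1))  # uniform over 0 .. n_classes - 2
        random_t.append(r if r < labels[i] else r + 1)  # shift past the true label
        least_t.append(int(logits[i].argmin()))
    return keep, np.array(random_t, dtype=int), np.array(least_t, dtype=int)


# Toy logits for three images and four classes (illustrative values only).
logits = np.array([[3.0, 1.0, 0.0, 2.0],
                   [0.0, 5.0, 1.0, 2.0],
                   [1.0, 0.0, 4.0, 3.0]])
labels = np.array([0, 1, 3])  # the third image is misclassified (predicted as 2)
keep, random_t, least_t = select_targets(logits, labels, np.random.default_rng(0))
print(keep, random_t, least_t)
```

Drawing from `n_classes - 1` values and shifting past the true label gives a uniform choice over the valid targets without rejection sampling.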

2.4 Evaluation Metrics

In our study, the robustness of the DNN models is evaluated using the following four metrics:

- Attack success rate. For non-targeted attack, success rate indicates the percentage of the adversarial examples whose predicted labels are different from their ground truth labels. For targeted attack, success rate indicates the percentage of the adversarial examples that are classified as the target class. For both attacks, a higher success rate suggests that the model is easier to attack and hence less robust. When generating adversarial examples, we only consider original images that are correctly classified, to avoid trivial attacks.

- Distortion. We measure the distortion between adversarial images and the original ones using \(\ell _2\) and \(\ell _\infty \) norms. The \(\ell _2\) norm measures the Euclidean distance between two images, and the \(\ell _\infty \) norm measures the maximum absolute change to any pixel (worst case). Both are widely used to measure adversarial perturbations [39,40,41]. A higher distortion usually suggests a more robust model. To find adversarial examples with minimum distortion for each model, we use a binary search strategy to select the optimal attack parameters \(\epsilon \) in I-FGSM and \(\lambda \) in C&W attack. Because models may have different input sizes, we divide \(\ell _2\) distortions by the total number of pixels for a fair comparison.

- CLEVER score. For each image, we compute its \(\ell _2\) CLEVER score for targeted attacks with a random target class and a least-likely class, respectively. The reported number is the average score over all tested images. The higher the CLEVER score, the more robust the model is.

- Transferability. We follow [31] to define targeted and non-targeted transferability. For non-targeted attack, transferability is defined as the percentage of the adversarial examples generated for one model (source model) that are also misclassified by another model (target model). We refer to this percentage as error rate, and a higher error rate means better non-targeted transferability. For targeted attack, transferability is defined as matching rate, i.e., the percentage of the adversarial examples generated for the source model that are classified as the target label by the target model (or, for top-k matching, for which the target label is among the target model's top-k predictions). A higher matching rate indicates better targeted transferability.
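The distortion and success-rate metrics, together with the binary search over attack parameters, can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: images are assumed to be H×W×C arrays, the "total number of pixels" is taken to be H·W, and the binary search assumes that attack success is monotone in the searched parameter (\(\epsilon \) for I-FGSM, \(\lambda \) for C&W), which is typical but not guaranteed. `attack_succeeds` is a hypothetical callback that runs an attack with a given parameter and reports whether it succeeded.

```python
import numpy as np


def l2_per_pixel(x: np.ndarray, x0: np.ndarray) -> float:
    """l2 distortion divided by the number of pixels (assumed H * W for H x W x C arrays)."""
    n_pixels = x0.shape[0] * x0.shape[1]
    return float(np.linalg.norm((x - x0).ravel()) / n_pixels)


def linf(x: np.ndarray, x0: np.ndarray) -> float:
    """Maximum absolute change to any pixel value."""
    return float(np.abs(x - x0).max())


def success_rate(preds, labels, targets=None) -> float:
    """Untargeted: fraction predicted != true label; targeted: fraction == target."""
    preds = np.asarray(preds)
    if targets is None:
        return float(np.mean(preds != np.asarray(labels)))
    return float(np.mean(preds == np.asarray(targets)))


def min_successful_param(attack_succeeds, lo: float, hi: float, steps: int = 10):
    """Binary search for (approximately) the smallest parameter at which the attack
    succeeds, assuming success is monotone in it. Returns None if even `hi` fails."""
    if not attack_succeeds(hi):
        return None
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if attack_succeeds(mid):
            hi = mid  # success: try a smaller parameter
        else:
            lo = mid  # failure: a larger parameter is needed
    return hi


# Usage with toy inputs.
x0 = np.zeros((2, 2, 3))
x = x0.copy()
x[0, 0, 0] = 1.0
print(l2_per_pixel(x, x0), linf(x, x0))  # 0.25 1.0
print(success_rate([1, 2, 0], [0, 2, 0]))
eps_min = min_successful_param(lambda eps: eps >= 0.3, 0.0, 1.0, steps=30)
print(eps_min)
```

Keeping `hi` as the last successful value means the search always returns a parameter at which the attack is known to succeed, so the reported distortion comes from an actual adversarial example.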

3 Experiments

After examining all 18 DNN models, we have gained insights into the relationships between model architectures and robustness, as discussed below.

3.1 Evaluation of Adversarial Attacks

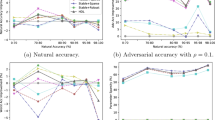

We have carefully conducted a controlled experiment by drawing images from a common set of 1,000 test images when evaluating the robustness of different models. For assessing the robustness of each model, the originally misclassified images are excluded. We compare the success rates of targeted attacks with a random target for FGSM, I-FGSM, C&W and EAD-L1 with different parameters for all 18 models. The success rate of the FGSM targeted attack is low, so we also show its untargeted attack success rate in Fig. 1(b).

For targeted attack, the success rate of FGSM is very low (below 3% for all settings), and unlike in the untargeted setting, increasing \(\epsilon \) in fact decreases the attack success rate. This observation further confirms that FGSM is a weak attack, and that targeted attack is more difficult and requires iterative attack methods. Figure 1(c) shows that, with only 10 iterations, I-FGSM can achieve a very good targeted attack success rate on all models. C&W and EAD-L1 also achieve nearly 100% success rate on almost all models when \(\kappa = 0\).

For C&W and EAD-L1 attacks, increasing the confidence \(\kappa \) makes it significantly harder for the attack to find a feasible adversarial example. A larger \(\kappa \) usually makes the adversarial distortion more universal and improves transferability (as we will show shortly), but at the expense of a lower success rate and a larger distortion. However, we find that the attack success rate with large \(\kappa \) cannot be used as a robustness measure, as it is not aligned with the \(\ell _p\) norm of adversarial distortions. For example, for MobileNet-0.50-160, the success rate is close to 0 when \(\kappa = 40\), yet Fig. 2 shows that it is one of the most vulnerable networks. The reason is that the range of the logit outputs can differ across networks, so the difficulty of achieving a fixed logit gap \(\kappa \) differs from network to network and is not related to intrinsic robustness.

We defer the results for targeted attack with the least likely target label to the supplementary material because the conclusions are similar.

3.2 Linear Scaling Law in Robustness vs. Accuracy

Here we study the empirical relation between robustness and accuracy of different ImageNet models, where robustness is evaluated in terms of the \(\ell _\infty \) and \(\ell _2\) distortion metrics from successful I-FGSM and C&W attacks, respectively, or in terms of \(\ell _2\) CLEVER scores. In our experiments, the attack success rates of these attacks are nearly 100% for each model. The scatter plots of distortions/scores vs. top-1 prediction accuracy are displayed in Fig. 2. We define the classification error as 1 minus top-1 accuracy (denoted as \(1 - \text {acc}\)). By regressing the distortion metric against the classification error of the networks on the Pareto frontier of the robustness-accuracy distribution (i.e., AlexNet, VGG 16, VGG 19, ResNet_v2_152, Inception_ResNet_v2 and NASNet), we find that the distortion scales linearly with the logarithm of classification error. That is, the distortion and classification error have the following relation: \(\text {distortion} = a+b \cdot \log \text {(classification-error)}\). The fitted parameters a and b are given in the captions of Fig. 2. Under this relation, halving the classification error changes the predicted distortion by \(b\log (1/2)\), regardless of the starting error. Taking the I-FGSM attack as an example, the linear scaling law suggests that if the classification error is reduced by half, the \(\ell _\infty \) distortion of the resulting network is expected to decrease by approximately 0.02, which is roughly \(60\%\) of the AlexNet distortion. Following this trend, if we naively pursue a model with low test error, the model's robustness may suffer. Thus, when designing new networks for ImageNet, we suggest evaluating the model’s accuracy-robustness trade-off by comparing it to the disclosed Pareto frontier.
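The fit behind the linear scaling law is an ordinary least-squares regression of distortion on the logarithm of the classification error. The sketch below illustrates it with synthetic numbers generated from chosen values a = 0.05 and b = 0.03; these are placeholders, not the values reported in Fig. 2, and the helper names are hypothetical. It assumes natural logarithms; a different base rescales b but leaves the predicted effect of halving the error, \(b\log (1/2)\), unchanged.

```python
import numpy as np


def fit_log_law(errors, distortions):
    """Least-squares fit of distortion = a + b * log(error); returns (a, b)."""
    errors = np.asarray(errors, dtype=float)
    X = np.column_stack([np.ones_like(errors), np.log(errors)])
    coef, *_ = np.linalg.lstsq(X, np.asarray(distortions, dtype=float), rcond=None)
    return float(coef[0]), float(coef[1])


def change_when_error_halves(b: float) -> float:
    """(a + b*log(e/2)) - (a + b*log(e)) = b * log(1/2), independent of a and e."""
    return b * np.log(0.5)


# Synthetic placeholder data generated from a = 0.05, b = 0.03 (not the paper's values).
errors = np.array([0.45, 0.35, 0.30, 0.25, 0.20, 0.17])
distortions = 0.05 + 0.03 * np.log(errors)
a, b = fit_log_law(errors, distortions)
print(a, b, change_when_error_halves(b))
```

With b > 0, a lower error gives a smaller predicted distortion, which is the direction of the trade-off described above.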

3.3 Robustness of Different Model Sizes and Architectures

We find that model architecture is a more important factor for model robustness than model size. Each family of networks exhibits a similar level of robustness, despite different depths and model sizes. For example, AlexNet has about 60 million parameters but its robustness is the best; on the other hand, MobileNet-0.50-160 has only 1.5 million parameters but is more vulnerable to adversarial attacks in all metrics.

We also observe that, within the same family, for DenseNet, ResNet and Inception, models with deeper architectures yield a slight improvement in robustness in terms of the \(\ell _\infty \) distortion metric. This might provide new insights for designing robust networks and further improving the Pareto frontier. This result also echoes [49], where the authors use a larger model to increase the \(\ell _\infty \) robustness of a CNN-based MNIST model.

3.4 Transferability of Adversarial Examples

Figures 3, 4 and 5 show the transferability heatmaps of FGSM, I-FGSM and EAD-L1 over all 18 models (306 pairs in total). The value in the i-th row and j-th column of each heatmap matrix is the proportion of the adversarial examples successfully transferred to target model j out of all adversarial examples generated by source model i (including both successful and failed attacks on the source model). Specifically, the values on the diagonal of the heatmap are the attack success rate of the corresponding model. For each model, we generate adversarial images using the aforementioned attacks and pass them to the target model to perform black-box untargeted and targeted transfer attacks. To evaluate each model, we use the success rate for evaluating the untargeted transfer attacks and the top-5 matching rate for evaluating targeted transfer attacks.
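A transferability heatmap of this kind can be computed as in the sketch below. It assumes every model is a callable that maps a batch of already size-matched images to logits, that `adv_examples[i]` was crafted on `models[i]`, and, for simplicity, that all source models share the same images, labels and targets; these are simplifying assumptions, and `transfer_matrix` is a hypothetical helper. Failed attacks on the source model stay in the denominator, so the diagonal equals each model's own success rate.

```python
import numpy as np


def transfer_matrix(adv_examples, models, labels, targets=None, k=5):
    """M[i, j]: fraction of adversarial examples crafted on source model i that
    fool target model j.

    Untargeted: the target model's top-1 prediction differs from the true label.
    Targeted: the target label is among the target model's top-k predictions.
    """
    labels = np.asarray(labels)
    M = np.zeros((len(adv_examples), len(models)))
    for i, x_adv in enumerate(adv_examples):
        for j, model in enumerate(models):
            logits = model(x_adv)  # shape: (num_images, num_classes)
            if targets is None:
                hit = logits.argmax(axis=1) != labels
            else:
                topk = np.argsort(-logits, axis=1)[:, :k]
                hit = (topk == np.asarray(targets)[:, None]).any(axis=1)
            M[i, j] = hit.mean()
    return M


# Toy "models" that return their input (or its negation) as logits.
def identity_model(x):
    return x


def negated_model(x):
    return -x


adv = [np.array([[0.1, 0.9, 0.5], [0.8, 0.2, 0.3]]),
       np.array([[0.9, 0.1, 0.5], [0.7, 0.2, 0.1]])]
models = [identity_model, negated_model]
print(transfer_matrix(adv, models, labels=[0, 0]))
```

Because rows index the source and columns the target, an asymmetric matrix (M[i, j] far from M[j, i]) directly exposes the one-directional transfer discussed in the findings below.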

Note that not all models have the same input image dimension. We also find that simply resizing the adversarial examples can significantly decrease the transfer attack success rate [50]. To alleviate the disruptive effect of image resizing on adversarial perturbations, when transferring an adversarial image from a network with a larger input dimension to one with a smaller input dimension, we crop the image from the center; conversely, we add a white border to the image when the source network’s input dimension is smaller.
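The size-matching rule just described (center crop when the target input is smaller, white border when it is larger) can be sketched with NumPy as follows. It assumes H×W×C float images in [0, 1], so white is 1.0 (use 255 for uint8 images), and the function name is hypothetical. Because no pixel is interpolated, the perturbation values themselves are left untouched, which is the point of avoiding resizing.

```python
import numpy as np


def match_input_size(img: np.ndarray, out_h: int, out_w: int, fill: float = 1.0) -> np.ndarray:
    """Center-crop an H x W x C image along any dimension that is too large and
    pad it with a white border (`fill`) along any dimension that is too small."""
    h, w = img.shape[:2]
    # Center crop (a no-op along dimensions that are already small enough).
    top = max((h - out_h) // 2, 0)
    left = max((w - out_w) // 2, 0)
    img = img[top:top + min(h, out_h), left:left + min(w, out_w)]
    # Symmetric constant padding up to the target size.
    pad_h, pad_w = out_h - img.shape[0], out_w - img.shape[1]
    pads = ((pad_h // 2, pad_h - pad_h // 2), (pad_w // 2, pad_w - pad_w // 2), (0, 0))
    return np.pad(img, pads, mode="constant", constant_values=fill)


cropped = match_input_size(np.zeros((299, 299, 3)), 224, 224)  # larger -> crop
padded = match_input_size(np.zeros((224, 224, 3)), 299, 299)   # smaller -> white border
print(cropped.shape, padded.shape, padded[0, 0, 0], padded[149, 149, 0])
```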

Generally, the transferability of untargeted attacks is significantly higher than that of targeted attacks, as indicated in Figs. 3, 4 and 5. We highlight some interesting findings from our experimental results:

1. In the untargeted transfer attack setting, FGSM and I-FGSM have much higher transfer success rates than EAD-L1 (despite its use of a large \(\kappa \)). Similar to the results in [41], we find that the transferability of C&W is even worse than that of EAD-L1; we defer those results to the supplement. The ranking of attacks by transferability in the untargeted setting is

$$ \begin{aligned} \text {FGSM} \succeq \text {I-FGSM} \succeq \text {EAD-L1} \succeq \text {C} \& \text {W}. \end{aligned}$$

2. Again in the untargeted transfer attack setting, a larger \(\epsilon \) yields better transferability for FGSM, while fewer iterations yield better transferability for I-FGSM. For untargeted EAD-L1 transfer attacks, a higher \(\kappa \) value (confidence parameter) leads to better transferability, but it is still far behind I-FGSM.

3. Transferability of adversarial examples is sometimes asymmetric; for example, in Fig. 4, adversarial examples of VGG 16 are highly transferable to Inception-v2, but adversarial examples of Inception-v2 do not transfer very well to VGG.

4. We find that VGG 16 and VGG 19 models achieve significantly better transferability than other models, in both targeted and untargeted settings, for all attack methods, leading to the “stripe patterns” in the heatmaps. This means that adversarial examples generated from VGG models are empirically more transferable to other models. This observation might be explained by the plain convolutional design of VGG networks, from which the other architectures stem. VGG models are thus a good starting point for mounting black-box transfer attacks. We also observe that the most transferable model family may vary with different attacks.

5. Most recent networks have some unique features that might restrict adversarial examples’ transferability to within the same family. For example, as shown in Fig. 4, when using I-FGSM in the untargeted transfer attack setting, transferability between different depths of the same architecture is close to 100% for DenseNets, ResNets and VGG, but their transfer rates to other architectures can be much worse. This provides an opportunity to reverse-engineer the internal architecture of a black-box model, by feeding it adversarial examples crafted for a certain architecture and measuring the attack success rates.

4 Conclusions

In this paper, we present the largest-scale study to date on adversarial examples in ImageNet models. We show comprehensive experimental results on 18 state-of-the-art ImageNet models using adversarial attack methods focusing on the \(\ell _1\), \(\ell _2\) and \(\ell _\infty \) norms, as well as an attack-agnostic robustness score, CLEVER. Our results show that there is a clear trade-off between accuracy and robustness: higher test accuracy generally comes with reduced robustness. On the ImageNet dataset, we discover an empirical linear scaling law between distortion metrics and the logarithm of classification errors in representative models. We conjecture that, following this trend, naively pursuing high-accuracy models may carry a great risk of lacking robustness. We also provide a thorough analysis of adversarial transferability between 306 pairs of these networks and discuss the robustness implications of network architecture.

In this work, we focus on image classification. To the best of our knowledge, no previous work has analyzed 18 ImageNet models at this scale and depth. We believe our findings could also provide insights into robustness and adversarial examples in other computer vision tasks such as object detection [51] and image captioning [5], since these tasks often use the same pre-trained image classifiers studied in this paper for feature extraction.

References

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems (NIPS), pp. 1097–1105 (2012)

Russakovsky, O., et al.: Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 115(3), 211–252 (2015)

Goodfellow, I., Shlens, J., Szegedy, C.: Explaining and harnessing adversarial examples. In: International Conference on Learning Representations (ICLR) (2015)

Xu, X., Chen, X., Liu, C., Rohrbach, A., Darell, T., Song, D.: Fooling vision and language models despite localization and attention mechanism. In: Proceedings of the Thirtieth IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Chen, H., Zhang, H., Chen, P.Y., Yi, J., Hsieh, C.J.: Attacking visual language grounding with adversarial examples: a case study on neural image captioning. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, vol. 1: Long Papers, pp. 2587–2597 (2018)

Metzen, J.H., Kumar, M.C., Brox, T., Fischer, V.: Universal adversarial perturbations against semantic image segmentation. Statistics 1050, 19 (2017)

Cheng, M., Yi, J., Zhang, H., Chen, P.Y., Hsieh, C.J.: Seq2Sick: evaluating the robustness of sequence-to-sequence models with adversarial examples. arXiv preprint arXiv:1803.01128 (2018)

Carlini, N., Wagner, D.: Audio adversarial examples: targeted attacks on speech-to-text. In: Deep Learning and Security Workshop (2018)

Sun, M., Tang, F., Yi, J., Wang, F., Zhou, J.: Identify susceptible locations in medical records via adversarial attacks on deep predictive models. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), pp. 793–801 (2018)

Xiao, C., Li, B., Zhu, J.Y., He, W., Liu, M., Song, D.: Generating adversarial examples with adversarial networks. In: Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI 2018, International Joint Conferences on Artificial Intelligence Organization, pp. 3905–3911, July 2018

Xiao, C., Zhu, J.Y., Li, B., He, W., Liu, M., Song, D.: Spatially transformed adversarial examples. In: International Conference on Learning Representations (ICLR) (2018)

Eykholt, K., et al.: Robust physical-world attacks on deep learning visual classification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1625–1634 (2018)

Szegedy, C., et al.: Intriguing properties of neural networks. In: International Conference on Learning Representations (ICLR) (2014)

Hein, M., Andriushchenko, M.: Formal guarantees on the robustness of a classifier against adversarial manipulation. In: Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems (NIPS), pp. 2263–2273 (2017)

Weng, T.W., et al.: Evaluating the robustness of neural networks: an extreme value theory approach. In: International Conference on Learning Representations (ICLR) (2018)

Weng, T.W., et al.: Towards fast computation of certified robustness for ReLU networks. In: Proceedings of the 35th International Conference on Machine Learning (ICML) (2018)

Stock, P., Cisse, M.: ConvNets and ImageNet beyond accuracy: explanations, bias detection, adversarial examples and model criticism. arXiv preprint arXiv:1711.11443 (2017)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: International Conference on Learning Representations (ICLR) (2015)

Szegedy, C., et al.: Going deeper with convolutions. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston, MA, USA, 7–12 June 2015, pp. 1–9 (2015)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016, pp. 770–778 (2016)

Huang, G., Liu, Z., van der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

Howard, A.G., et al.: MobileNets: efficient convolutional neural networks for mobile vision applications. CoRR abs/1704.04861 (2017)

Zoph, B., Vasudevan, V., Shlens, J., Le, Q.V.: Learning transferable architectures for scalable image recognition. In: 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Lin, M., Chen, Q., Yan, S.: Network in network. In: International Conference on Learning Representations (ICLR) (2014)

Ioffe, S., Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift. In: Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6–11 July 2015, pp. 448–456 (2015)

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.: Rethinking the Inception architecture for computer vision. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016, pp. 2818–2826 (2016)

Szegedy, C., Ioffe, S., Vanhoucke, V., Alemi, A.A.: Inception-v4, Inception-ResNet and the impact of residual connections on learning. In: Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, California, USA, 4–9 February 2017, pp. 4278–4284 (2017)

Zoph, B., Le, Q.V.: Neural architecture search with reinforcement learning. In: International Conference on Learning Representations (ICLR) (2017)

Wu, N., Sivakumar, S., Guadarrama, S., Andersen, D.: TensorFlow-Slim Image Classification Model Library (2017). GitHub: https://github.com/tensorflow/models/tree/master/research/slim

He, K., Zhang, X., Ren, S., Sun, J.: Identity mappings in deep residual networks. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9908, pp. 630–645. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46493-0_38

Liu, Y., Chen, X., Liu, C., Song, D.: Delving into transferable adversarial examples and black-box attacks. In: International Conference on Learning Representations (ICLR) (2017)

Papernot, N., McDaniel, P., Goodfellow, I.: Transferability in machine learning: from phenomena to black-box attacks using adversarial samples. arXiv preprint arXiv:1605.07277 (2016)

Chen, P.Y., Zhang, H., Sharma, Y., Yi, J., Hsieh, C.J.: ZOO: zeroth order optimization based black-box attacks to deep neural networks without training substitute models. In: Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, pp. 15–26. ACM (2017)

Tu, C., et al.: AutoZOOM: autoencoder-based zeroth order optimization method for attacking black-box neural networks. CoRR abs/1805.11770 (2018)

Cheng, M., Le, T., Chen, P.Y., Yi, J., Zhang, H., Hsieh, C.J.: Query-efficient hard-label black-box attack: an optimization-based approach. arXiv preprint arXiv:1807.04457 (2018)

Tu, C.C., et al.: AutoZOOM: autoencoder-based zeroth order optimization method for attacking black-box neural networks. arXiv preprint arXiv:1805.11770 (2018)

Kurakin, A., Goodfellow, I.J., Bengio, S.: Adversarial machine learning at scale. In: International Conference on Learning Representations (ICLR) (2017)

Cisse, M., Bojanowski, P., Grave, E., Dauphin, Y., Usunier, N.: Parseval networks: improving robustness to adversarial examples. In: International Conference on Machine Learning (ICML), pp. 854–863 (2017)

Carlini, N., Wagner, D.A.: Towards evaluating the robustness of neural networks. In: 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017, pp. 39–57 (2017)

Carlini, N., Wagner, D.: Adversarial examples are not easily detected: bypassing ten detection methods. In: Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, AISec 2017, pp. 3–14. ACM, New York (2017)

Chen, P.Y., Sharma, Y., Zhang, H., Yi, J., Hsieh, C.J.: EAD: elastic-net attacks to deep neural networks via adversarial examples. In: AAAI (2018)

Sharma, Y., Chen, P.Y.: Attacking the Madry defense model with \({L_1}\)-based adversarial examples. arXiv preprint arXiv:1710.10733 (2017)

Lu, P.H., Chen, P.Y., Chen, K.C., Yu, C.M.: On the limitation of MagNet defense against \({L_1}\)-based adversarial examples. In: IEEE/IFIP DSN Workshop (2018)

Lu, P.H., Chen, P.Y., Yu, C.M.: On the limitation of local intrinsic dimensionality for characterizing the subspaces of adversarial examples. In: ICLR Workshop (2018)

Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: ImageNet: a large-scale hierarchical image database. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2009, pp. 248–255. IEEE (2009)

Krizhevsky, A.: Learning multiple layers of features from tiny images. Technical report, University of Toronto (2009)

Stallkamp, J., Schlipsing, M., Salmen, J., Igel, C.: Man vs. computer: benchmarking machine learning algorithms for traffic sign recognition. Neural Netw. 32, 323–332 (2012)

Moosavi-Dezfooli, S., Fawzi, A., Frossard, P.: DeepFool: a simple and accurate method to fool deep neural networks. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016, pp. 2574–2582 (2016)

Madry, A., Makelov, A., Schmidt, L., Tsipras, D., Vladu, A.: Towards deep learning models resistant to adversarial attacks. In: International Conference on Learning Representations (ICLR) (2018)

Athalye, A., Engstrom, L., Ilyas, A., Kwok, K.: Synthesizing robust adversarial examples. In: 35th International Conference on Machine Learning (ICML) (2018)

Xie, C., Wang, J., Zhang, Z., Zhou, Y., Xie, L., Yuille, A.: Adversarial examples for semantic segmentation and object detection. In: International Conference on Computer Vision (ICCV). IEEE (2017)




Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Su, D., Zhang, H., Chen, H., Yi, J., Chen, PY., Gao, Y. (2018). Is Robustness the Cost of Accuracy? – A Comprehensive Study on the Robustness of 18 Deep Image Classification Models. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science(), vol 11216. Springer, Cham. https://doi.org/10.1007/978-3-030-01258-8_39


DOI: https://doi.org/10.1007/978-3-030-01258-8_39


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01257-1

Online ISBN: 978-3-030-01258-8

eBook Packages: Computer Science, Computer Science (R0)