Abstract

Matrix approximation methods have become increasingly popular in recommender systems and have achieved excellent accuracy among collaborative filtering methods. However, they do not work well when there is a large set of items of various types and a huge number of users with diverse interests. In this case, the complicated structure of the sparse rating matrix poses challenges for any single global or local matrix approximation. In this paper, we propose an Adaptive Ensemble Probabilistic Matrix Approximation method (AEPMA), which can potentially alleviate data sparsity and improve recommendation accuracy. By integrating global information over the entire rating matrix and local information on subsets of user/item ratings in a stochastic gradient boosting framework, AEPMA can capture both the overall structure and strong local associations through an adaptive weighting strategy. A series of experiments on three real-world datasets (Ciao, Epinions and Douban) shows that AEPMA can effectively improve recommendation accuracy and scalability.

1 Introduction

The variety and number of products offered by companies have increased dramatically, as companies produce large numbers of products to meet customers' needs. Although this gives customers more options, they also face more and more information, and obtaining the information they need accurately and efficiently has become a dilemma. Recommender systems are therefore becoming more important in the face of this growing information overload. Recommender systems provide users with personalized recommendation services based on their preferences, needs, and past behaviors.

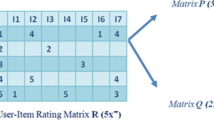

To date, the most widely used historical data is the user-item rating matrix, which describes users' observed preferences. The most popular recommendation techniques (e.g., matrix approximation (MA)-based collaborative filtering) operate on the rating matrix. To predict ratings accurately, many global methods have been proposed. Traditional rating prediction based on global information [2, 5, 9, 24] learns latent feature matrices for users and items. Although these methods are simple to apply and mathematically easy to understand, the interpretability of their recommendations is low, and they fail to detect strong associations among small sets of items/users.

To capture the unique interests of subsets of users, researchers have adopted local methods [1, 25] to predict the missing values of the rating matrix. These methods apply matrix clustering and community detection to matrix approximation. The main idea is to partition the large user-item matrix into a set of smaller submatrices, usually by user-based or item-based clustering. However, such local methods may overfit the submatrices and ignore the overall structure of the rating matrix. We therefore propose a new model, AEPMA (Adaptive Ensemble Probabilistic Matrix Approximation), which draws on all the available global and local information to predict ratings accurately. The intuition is that the weaker the correlation between two models, the more accurate their combined predictions of the missing values. We therefore take both global and local information into consideration. At the same time, we apply a gradient boosting framework to learn more accurate values that are not sensitive to abnormal points. We learn the weights of the different components of the model, which play an important role in adaptive and effective prediction. More importantly, neither the weights nor the learning rate has to be set manually.

2 Related Work

Matrix approximation-based collaborative filtering methods have been proposed to alleviate the missing-data issue. Some of them model the overall structure of the rating matrix. RSVD [9] is a standard matrix factorization method that was brought to collaborative filtering from natural language processing, where it had proved effective. NMF [24] reflects the practical setting of recommendation by constraining the factors to be non-negative, and assumes that the ratings follow a Poisson distribution. A Gaussian assumption has also been explored: PMF [2] is a probabilistic matrix factorization model that defines the conditional distribution over the ratings as Gaussian. BPMF [5] later extended PMF in a Bayesian manner, using Markov chain Monte Carlo (MCMC) methods for approximate inference.
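For reference, the PMF model [2] that AEPMA later uses for its individual learners places a Gaussian likelihood on each observed rating and zero-mean spherical Gaussian priors on the latent vectors. In the standard notation of [2], adapted to this paper's rating matrix X, it reads as follows; the indicator \(I_{ij}\) (1 if user i rated item j, else 0), the variances \(\sigma^2, \sigma_U^2, \sigma_V^2\) and the regularization weights \(\lambda_U, \lambda_V\) are taken from [2] and are not defined elsewhere in this paper.

```latex
p(X \mid U, V, \sigma^2) = \prod_{i=1}^{N} \prod_{j=1}^{M}
  \left[ \mathcal{N}\left( X_{ij} \mid U_i^T V_j, \sigma^2 \right) \right]^{I_{ij}},
\quad
p(U \mid \sigma_U^2) = \prod_{i=1}^{N} \mathcal{N}\left( U_i \mid 0, \sigma_U^2 \mathbf{I} \right),
\quad
p(V \mid \sigma_V^2) = \prod_{j=1}^{M} \mathcal{N}\left( V_j \mid 0, \sigma_V^2 \mathbf{I} \right)

E = \frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{M} I_{ij} \left( X_{ij} - U_i^T V_j \right)^2
  + \frac{\lambda_U}{2} \sum_{i=1}^{N} \lVert U_i \rVert^2
  + \frac{\lambda_V}{2} \sum_{j=1}^{M} \lVert V_j \rVert^2,
\qquad \lambda_U = \frac{\sigma^2}{\sigma_U^2}, \quad \lambda_V = \frac{\sigma^2}{\sigma_V^2}
```

Maximizing the log-posterior over U and V is equivalent to minimizing E, i.e., a sum of squared errors over the observed ratings with L2 regularization on the latent vectors.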

Although these methods work well, they are still limited to detecting the overall structure. More recently, models such as ACCAMS [1] have focused on strong local correlations. ACCAMS [1] is an additive co-clustering model that partitions the rating matrix into highly similar blocks by clustering its rows and columns. SIACC [25] is an extension of ACCAMS that improves co-clustering by using social influence. WEMAREC [4] takes the rating distribution into consideration: it is a weighted ensemble model whose submatrices are generated under different co-clustering constraints. Furthermore, LLORMA [3] and SMA [25] also use ensembles of factorizations to exploit local structure. However, these ensemble models focus only on ratings inside clusters and ignore the majority of user ratings outside them. Because training data in the detected clusters are often insufficient, the performance of local ensemble models may degrade due to overfitting. To tackle this problem, we predict ratings with an ensemble approach that incorporates both global and local information.
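The block-mean idea behind ACCAMS can be sketched as follows. This is a simplified illustration, not the ACCAMS algorithm of [1]: the row and column cluster assignments are assumed to be given (ACCAMS learns them), and the function names `block_mean_stencil` and `additive_stencils` are hypothetical. Each "stencil" replaces every cell by the mean observed rating of its (user cluster, item cluster) block, and further stencils are fitted additively to the remaining residual.

```python
import numpy as np

def block_mean_stencil(X, mask, row_labels, col_labels, n_row_clusters, n_col_clusters):
    """Approximate X by the mean of the observed entries in each co-cluster block.

    X: (n_users, n_items) rating matrix; entries where mask is False are ignored.
    row_labels / col_labels: integer cluster index of each user / item
    (assumed given here; ACCAMS itself learns these assignments).
    """
    sums = np.zeros((n_row_clusters, n_col_clusters))
    counts = np.zeros((n_row_clusters, n_col_clusters))
    rows, cols = np.nonzero(mask)
    # Accumulate observed ratings and their counts per block.
    np.add.at(sums, (row_labels[rows], col_labels[cols]), X[rows, cols])
    np.add.at(counts, (row_labels[rows], col_labels[cols]), 1)
    # Blocks with no observed ratings fall back to 0 (no contribution).
    means = np.divide(sums, counts, out=np.zeros_like(sums), where=counts > 0)
    # Every cell, observed or missing, takes the mean of its block.
    return means[np.ix_(row_labels, col_labels)]

def additive_stencils(X, mask, stencils):
    """Sum several stencils, each fitted to the residual left by the previous ones.

    stencils: list of (row_labels, col_labels, n_row_clusters, n_col_clusters).
    """
    approx = np.zeros_like(X, dtype=float)
    for row_labels, col_labels, kr, kc in stencils:
        residual = np.where(mask, X - approx, 0.0)
        approx += block_mean_stencil(residual, mask, row_labels, col_labels, kr, kc)
    return approx
```

Missing ratings are predicted by the block means, which is how ACCAMS fills unobserved cells; the coarseness of these means is also why too many stencils can overfit.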

In this paper, we unify localized relationships in user-item subgroups and common associations among all users and items to improve recommendation accuracy. The most closely related works are Probabilistic Matrix Factorization (PMF) and ACCAMS. AEPMA learns global and local information simultaneously, because the adaptive sample weights are obtained by alternating optimization. We use a stochastic gradient boosting framework to learn more of the hidden information in the complex rating matrix. More importantly, within the boosting framework, the ensemble of models can enhance recommendation accuracy and stability.

3 The Proposed AEPMA Model

The structure of the rating matrix is increasingly complicated, and a single framework such as PMF cannot predict ratings accurately. We therefore propose a boosting-based matrix approximation that describes the different kinds of information in the rating matrix. PMF represents the user-item rating matrix globally, i.e., over the whole rating matrix, and thus ignores the local structure of the ratings. In AEPMA, we capture sufficient information by combining global and local rating predictions. A stochastic gradient boosting framework is then adopted to produce accurate rating predictions and enhance recommendation stability. More importantly, we learn an adaptive weight for each predicted rating matrix, which helps prevent overfitting. Similar to shrinkage in XGBoost, the learned weights reduce the influence of each stage's prediction and leave room for finer predictions in later stages.

3.1 Global and Local Matrix Approximation

We exploit Global and Local Matrix Approximation (GLMA), a new probabilistic model that combines global and local information. More importantly, the user-item rating weights can be learned adaptively: for each user/item, the model (global or local) that suits its ratings best should receive a large weight. The conditional distribution over the observed ratings for the global and local models can be given as follows:

where S is the rating prediction of the local method ACCAMS, and U, V are the global user and item latent feature vectors, which are inferred from the whole user-item rating matrix. \({\alpha ^1}, {\beta ^1}\) are the weight vectors of the local model over users and items, respectively, and accordingly \({\alpha ^2}, {\beta ^2}\) are the weight vectors of the global model. Thus, \({\alpha _i^1, \beta _j^1}\) reflect the weights of the local model for the \({i^{th}}\) user and the \({j^{th}}\) item; local predictions that reflect unique interests shared only among subsets of users/items should receive large weights. Likewise, \({\alpha _i^2,\beta _j^2}\) denote the weights of the globally optimized model, and ratings that reflect the overall structure should receive large weights.

For \(\alpha ^1, \beta ^1, \alpha ^2, \beta ^2\), we choose a Laplacian prior: because each user-item rating should receive a large weight only from its most suitable (global or local) model, the weight vectors should be sparse. More importantly, the adaptive weights let the model learn useful information and avoid overfitting. The log of the posterior distribution over the user and item features and weights can therefore be given as follows:
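Each Laplacian prior contributes a term of the following form to this log-posterior, shown here for a single user weight \(\alpha_i\) with the location \(u_\alpha\) and scale \(b_\alpha\) introduced in the next paragraph (the way these terms are combined over \(\alpha^1, \beta^1, \alpha^2, \beta^2\) in the full expression is not reconstructed here):

```latex
p(\alpha_i \mid u_\alpha, b_\alpha) = \frac{1}{2 b_\alpha}
  \exp\left( -\frac{\lvert \alpha_i - u_\alpha \rvert}{b_\alpha} \right),
\qquad
\log p(\alpha_i \mid u_\alpha, b_\alpha) = -\log\left( 2 b_\alpha \right)
  - \frac{\lvert \alpha_i - u_\alpha \rvert}{b_\alpha}
```

Unlike the squared penalty implied by a Gaussian prior, this absolute-value (L1) penalty has a gradient of constant magnitude near \(u_\alpha\), so maximizing the posterior tends to drive many weights exactly to \(u_\alpha\) (to zero when \(u_\alpha = 0\)), which is the sparsity argued for above.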

where \({{u_\alpha }}, {{u_\beta }}\) are the location parameters of the Laplacian distributions, and accordingly \({{b_\alpha }},{{b_\beta }}\) are their scale parameters. Unfortunately, it is very difficult to solve the above optimization problem directly. To simplify the model, we obtain an approximate solution using Jensen's inequality; the lower bound of Eq. (2) can be obtained as follows:
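The Jensen step can be illustrated numerically. Assuming, purely for illustration, that a rating's likelihood is a two-component mixture \(w_1 p_1 + w_2 p_2\) of a local and a global Gaussian prediction (the exact form of the paper's likelihood and bound is not reproduced in this text), Jensen's inequality gives \(\log \sum_c w_c p_c \ge \sum_c q_c \log (w_c p_c / q_c)\) for any distribution \(q\), with equality when \(q_c \propto w_c p_c\). All function names below are hypothetical.

```python
import numpy as np

def gaussian_pdf(x, mean, sigma):
    """Density of N(mean, sigma^2) at x (vectorized over mean)."""
    return np.exp(-0.5 * ((x - mean) / sigma) ** 2) / (np.sqrt(2.0 * np.pi) * sigma)

def log_mixture(x, means, weights, sigma):
    """Exact log-likelihood log(sum_c w_c * N(x | mean_c, sigma^2))."""
    return np.log(np.sum(weights * gaussian_pdf(x, means, sigma)))

def jensen_lower_bound(x, means, weights, sigma, q):
    """sum_c q_c * log(w_c * p_c / q_c), a lower bound for any distribution q."""
    joint = weights * gaussian_pdf(x, means, sigma)
    return np.sum(q * (np.log(joint) - np.log(q)))

def responsibilities(x, means, weights, sigma):
    """The q that makes the bound tight: q_c proportional to w_c * p_c."""
    joint = weights * gaussian_pdf(x, means, sigma)
    return joint / joint.sum()
```

For example, with a true rating of 4.0, a local prediction of 4.2 and a global prediction of 3.1, any fixed `q` gives a bound below `log_mixture`, while `q = responsibilities(...)` recovers it exactly; alternating between updating `q` and the model parameters is the usual way such a bound is optimized.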

Keeping the hyperparameters of the prior distribution fixed may easily lead to overfitting, and we want to obtain adaptive model weights, so we estimate the parameters and hyperparameters simultaneously during training. To estimate the hyperparameters, we update each one while fixing the remaining variables, and iterate until convergence. The hyperparameters can be given as:
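The hyperparameter update equations are not reproduced in this text. As one plausible instance, assuming the Laplace location and scale are set to their maximum-likelihood values given the current weights (an assumption, not necessarily the paper's exact update), the standard estimates are the sample median and the mean absolute deviation about it. `laplace_mle` is a hypothetical helper name.

```python
import numpy as np

def laplace_mle(weights):
    """Maximum-likelihood location and scale of a Laplace distribution.

    Location: the sample median of the weights.
    Scale: the mean absolute deviation of the weights from that median.
    """
    weights = np.asarray(weights, dtype=float)
    location = np.median(weights)
    scale = np.mean(np.abs(weights - location))
    return location, scale
```

In an alternating scheme, this step would be run with the weights held fixed, after which the weights are re-estimated under the updated prior.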

3.2 Boosting-Based Matrix Approximation

The structure of the rating matrix is increasingly complicated, and a single framework has trouble discovering the abundant hidden information in it. We therefore propose a boosting-based mixture matrix approximation model, Adaptive Ensemble Probabilistic Matrix Approximation (AEPMA).

To describe the different kinds of information in the rating matrix, we propose an ensemble mixture matrix approximation approach for rating prediction. In AEPMA, we learn an additive model of X with K products \({{\omega _k}*{\mathrm{{R}}^k}}\). The predicted rating matrix \({\hat{X}}\) is thus:

where K is the number of individual learners, \({R^{k}}\) is the predicted rating matrix of the \({k^{th}}\) individual learner, \({R^k} = {\left( {{U^k}} \right) ^T}{V^k}\), and \({\omega _k}\) is the weight of \({R^{k}}\). \(\left( {{U^k},{V^k}} \right) \) is the pair of user and item latent factor vectors.
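As a sketch, the additive prediction \(\hat{X} = \sum_{k=1}^{K} \omega_k R^k\) with \(R^k = (U^k)^T V^k\) can be computed as follows, assuming each \(U^k\) is stored as an \(r_k \times n_{\mathrm{users}}\) matrix and each \(V^k\) as \(r_k \times n_{\mathrm{items}}\), so that the ranks \(r_k\) may differ between learners. The function name is hypothetical.

```python
import numpy as np

def ensemble_prediction(factors, omegas):
    """X_hat = sum_k omega_k * (U^k)^T V^k.

    factors: list of (U_k, V_k) pairs, U_k of shape (r_k, n_users),
             V_k of shape (r_k, n_items); r_k may differ per learner.
    omegas:  list of scalar weights, one per learner.
    """
    n_users = factors[0][0].shape[1]
    n_items = factors[0][1].shape[1]
    x_hat = np.zeros((n_users, n_items))
    for (U_k, V_k), omega_k in zip(factors, omegas):
        x_hat += omega_k * (U_k.T @ V_k)
    return x_hat
```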

To discover global structure information and detect strong local associations, we let the first learner be GLMA, which gives the predicted rating matrix \(\mathrm{{S}^1}\), while each of the other individual learners is a PMF model. The optimal predicted rating is then:

To achieve the rating matrix approximation, we use the Frobenius norm-based objective function as follows:
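Combining the definitions above (GLMA's prediction \(S^1\) as the first learner, PMF models \((U^k)^T V^k\) for the rest), one consistent way to write the prediction and a Frobenius-norm objective over the observed ratings is shown below. The mask operator \(P_\Omega\), which keeps observed entries and zeroes the rest, is our notational assumption for illustration.

```latex
\hat{X} = \omega_1 S^1 + \sum_{k=2}^{K} \omega_k \left( U^k \right)^T V^k,
\qquad
\min \; \frac{1}{2} \left\lVert P_\Omega\left( X - \hat{X} \right) \right\rVert_F^2,
\qquad
\left[ P_\Omega(A) \right]_{ij} =
\begin{cases}
  A_{ij}, & (i, j) \text{ observed}, \\
  0, & \text{otherwise}.
\end{cases}
```

The negative gradient of this loss with respect to \(\hat{X}\) is \(P_\Omega(X - \hat{X})\), i.e., the difference between the true and predicted ratings used as the residual in the Residual Matrix Update paragraph.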

Residual Matrix Update. AEPMA solves this problem in a gradient boosting manner, iteratively adding a new individual learner to better approximate the true rating matrix. The partial residual rating matrix is learned from the negative gradient of the loss function; in AEPMA, this negative gradient is the difference between the true ratings and the predicted ratings.

The \((k-1)^{th}\) learner, a PMF model \({R^{k - 1}}= {\omega _{k-1}}*U_{k-1}^T {V_{k-1}}\) of rank \({r_{k-1}}\), is fitted to the residual matrix \({X^{k - 1}}\). The rank \({r_{k-1}}\) is adaptive, because the distribution of the residual differs from stage to stage. The computation of the next residual matrix \({X^k}\) is shown in Fig. 1.

Because of the forward stage-wise manner, we iteratively add a new model to better approximate the rating matrix X. The predictions of the previously learned \(k- 1\) models are fixed, so the \({k^{th}}\) residual rating matrix is determined by those \(k- 1\) models. We can thus define the residual rating matrix at stage k as:

where \({\omega _k}\) is the weight of the \({k^{th}}\) predicted rating matrix \({R^{k}}\), \({S^{1}}\) is the predicted rating matrix obtained by fitting the GLMA model, and accordingly \({R^{k-1}}\) is the predicted rating matrix obtained by fitting the PMF model, \({R^{k-1}} = {\left( {{U^{k-1}}} \right) ^T}{V^{k-1}}\), where \(\left( {{U^{k-1}},{V^{k-1}}} \right) \) is the pair of user and item latent factor vectors. The input residual rating matrix \({X^k}\) of the \({k^{th}}\) individual learner (PMF) can then be written as:

In the \({k^{th}}\) stage, we obtain the user/item latent factor vectors according to the Probabilistic Matrix Factorization (PMF) model. Our method solves for each R in a greedy sequential manner: once the solution for \({R^k}\) is obtained at stage k, it is fixed during the remaining iterations. Because we want to consider local and global information simultaneously, we generally use at least three models.
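The forward stage-wise procedure can be sketched as below. This is a simplified illustration under stated assumptions, not the authors' implementation: the first learner's prediction `S1` is passed in rather than computed by GLMA, each PMF learner is fitted by full-batch gradient descent on the MAP objective (the paper instead uses stochastic optimization with Adam), and the ranks and weights are fixed inputs rather than adapted. `fit_pmf` and `boost` are hypothetical names.

```python
import numpy as np

def fit_pmf(residual, mask, rank, lam=0.01, lr=0.01, n_iters=300, seed=0):
    """MAP estimate of a rank-`rank` PMF on the observed entries of `residual`.

    Full-batch gradient descent on
    0.5 * sum_observed (X_ij - U_i^T V_j)^2 + 0.5 * lam * (||U||^2 + ||V||^2).
    Returns U of shape (rank, n_users) and V of shape (rank, n_items).
    """
    rng = np.random.default_rng(seed)
    n_users, n_items = residual.shape
    U = 0.1 * rng.standard_normal((rank, n_users))
    V = 0.1 * rng.standard_normal((rank, n_items))
    for _ in range(n_iters):
        err = np.where(mask, residual - U.T @ V, 0.0)  # errors on observed entries only
        grad_U = -(V @ err.T) + lam * U
        grad_V = -(U @ err) + lam * V
        U -= lr * grad_U
        V -= lr * grad_V
    return U, V

def boost(X, mask, S1, omega1, ranks, omegas, **pmf_kwargs):
    """Forward stage-wise fitting: each PMF learner fits the residual of all earlier ones.

    S1: prediction of the first learner (GLMA in the paper; any matrix here).
    ranks / omegas: rank and weight of each subsequent PMF learner (fixed here).
    """
    X_hat = omega1 * S1
    for rank, omega in zip(ranks, omegas):
        residual = np.where(mask, X - X_hat, 0.0)  # negative gradient of the squared loss
        U, V = fit_pmf(residual, mask, rank, **pmf_kwargs)
        X_hat = X_hat + omega * (U.T @ V)  # earlier learners stay fixed
    return X_hat
```

Because each stage only adds a term and never revisits earlier ones, the training error on the observed entries typically decreases stage by stage, at the cost of one extra factorization per stage.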

Adaptive Weight. An important step in the approximation algorithm is the adaptive weighting. In AEPMA, we assign smaller weights to components R that explain the ratings less well (large residuals). We define the residual probability distribution \({P_{ij}^k}\) by \({R_{ij}^k-\hat{X}_{ij}^k} \sim \mathrm{N}\left( {0,\sigma _u^2} \right) \); large residuals lie far from the mean and therefore have relatively small probability. The weight of each predicted rating matrix is thus given by:

In the above equation, higher weights are assigned to components with smaller residuals. In other words, the better a component fits the rating matrix, the greater its weight.
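The weight equation itself is not reproduced in this text. One hedged reading of the description, assuming each component's weight is proportional to the mean Gaussian density \(\mathcal{N}(0, \sigma_u^2)\) of its residuals on the observed entries and that the weights are normalized to sum to one (both are assumptions), is sketched below; `adaptive_weights` is a hypothetical name. It reproduces the stated property that components with smaller residuals receive larger weights.

```python
import numpy as np

def adaptive_weights(targets, predictions, mask, sigma_u=1.0):
    """Weight each predicted rating matrix by how probable its residuals are.

    targets[k]: the matrix component k was fitted to (e.g., its residual input).
    predictions[k]: the component's predicted matrix R^k.
    Residuals on observed entries are scored under N(0, sigma_u^2); the mean
    density is used as an unnormalized weight, then weights are normalized.
    """
    scores = []
    for T_k, R_k in zip(targets, predictions):
        res = (R_k - T_k)[mask]
        density = np.exp(-0.5 * (res / sigma_u) ** 2) / (np.sqrt(2.0 * np.pi) * sigma_u)
        scores.append(density.mean())
    scores = np.asarray(scores)
    return scores / scores.sum()
```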

In AEPMA, each user-item rating is characterized by a mixture model, and user-item ratings are predicted from the mixture components and the weight of each model as follows:

4 Experiments

4.1 Experiment Setup

In the following, we introduce our experimental setup, including datasets, baseline methods, and evaluation measures.

Datasets. We selected three real-world datasets that are widely used in the literature for evaluating recommendation algorithms: Ciao, Epinions, and Douban. Ratings range from 1 to 5. We randomly split each dataset into five equal-sized subsets; in each fold, four subsets are used as the training set and the remaining one as the test set. In this five-fold cross-validation, the reported results are averages over the five train-test splits. The datasets are summarized in Table 1.
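The five-fold protocol can be sketched as follows, assuming the observed ratings are stored as a flat list of (user, item, rating) triples so that a split only needs to partition their indices. The function name and the seed are hypothetical.

```python
import numpy as np

def five_fold_splits(n_ratings, n_folds=5, seed=0):
    """Yield (train_idx, test_idx) index arrays for k-fold cross-validation.

    Ratings are shuffled once and cut into n_folds (nearly) equal-sized subsets;
    each subset serves once as the test set and the rest form the training set.
    """
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_ratings), n_folds)
    for k in range(n_folds):
        test_idx = folds[k]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        yield train_idx, test_idx
```

A reported RMSE or MAE is then the mean of the five per-fold values.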

Baselines. We considered various state-of-the-art methods, including PMF [2], BPMF [5], LLORMA [3], WEMAREC [4], ACCAMS [1], and SMA [25]. Since the authors of Low-Rank Matrix Approximation with Stability [7] report that SMA outperforms BPMF, LLORMA and WEMAREC, we compare the proposed method (AEPMA) against the following four state-of-the-art matrix approximation-based CF models:

-

PMF: A probabilistic matrix factorization model, which defines the conditional distribution of the observed ratings as a Gaussian distribution.

-

RSVD: A global matrix factorization method, in which user/item features are estimated by minimizing the sum of squared errors.

-

ACCAMS: An additive co-clustering model for approximating the rating matrix, which partitions the rating matrix into highly similar blocks by clustering its rows and columns, and then uses the mean value of each block to represent its missing ratings.

-

SMA: A low-rank matrix approximation framework designed to achieve high stability.

Metrics. The root mean square error (RMSE) and the mean absolute error (MAE) are adopted as the evaluation metrics for recommendation accuracy. The RMSE is defined as \(RMSE = \sqrt{\frac{{\sum \limits _{{X_{ij}} \in \mathrm{T}} {{{\left( {{X_{ij}} - {{\hat{R}}_{ij}}} \right) }^2}} }}{{\left| \mathrm{T} \right| }}}\).

where T is the set of ratings in the test set, \(\left| \mathrm{T} \right| \) is the number of test ratings, \({\hat{R}_{ij}}\) is the predicted rating, and \({X_{ij}}\) is the true rating given by the \({i^{th}}\) user to the \({j^{th}}\) item in the test set. The MAE is defined by \(MAE = \frac{1}{{\left| \mathrm{T} \right| }}\sum \limits _{{X_{ij}} \in \mathrm{T}} {\left| {{X_{ij}} - {{\hat{R}}_{ij}}} \right| }\).
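The two metrics translate directly into code. A minimal sketch, assuming the true and predicted test ratings have already been collected into equal-length arrays (the function names are hypothetical):

```python
import numpy as np

def rmse(true, pred):
    """Root mean square error over the test ratings T."""
    true, pred = np.asarray(true, dtype=float), np.asarray(pred, dtype=float)
    return float(np.sqrt(np.mean((true - pred) ** 2)))

def mae(true, pred):
    """Mean absolute error over the test ratings T."""
    true, pred = np.asarray(true, dtype=float), np.asarray(pred, dtype=float)
    return float(np.mean(np.abs(true - pred)))
```

For example, `rmse([4, 3, 5, 1], [3.5, 3, 4, 2])` returns 0.75 and `mae` on the same inputs returns 0.625; RMSE exceeds MAE here because it penalizes the two errors of size 1 more heavily.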

4.2 Recommendation Performance

Table 2 compares the RMSE and MAE of our method with those of classic matrix approximation methods. Our method achieves both lower generalization error and lower expected risk than the other methods.

In this experiment, we compare the recommendation accuracy of AEPMA against various state-of-the-art methods, including PMF, RSVD, ACCAMS and SMA. For most of these methods, we use the parameter values provided in the original papers; for ACCAMS, we tuned its parameters, including the numbers of user clusters and item clusters and the number of stencils. For PMF, we set the maximum number of iterations to 300 and the regularization parameter on the latent factors to 0.01. In AEPMA, to avoid setting the learning rate manually, we use Adam for stochastic optimization, with a step size of 0.001 and exponential decay rates of 0.9 and 0.999 for the moment estimates. We set the number of individual learners to three. The relative improvements of AEPMA over the four state-of-the-art methods on the three datasets are shown in Fig. 2. AEPMA clearly performs better than ACCAMS and SMA, which demonstrates that a model with global structure information outperforms purely local ensemble matrix approximation methods. At the same time, our method is much better than the purely global methods PMF and RSVD. The relative improvements also show that SMA performs better on the dense dataset (Douban) and poorly on the sparse datasets (Ciao and Epinions). More importantly, to demonstrate the importance of GLMA, we compare the MAE and RMSE of PMF+ (global) and ACCAMS+ (local). In the boosting framework, PMF+ fits PMF to obtain \({S^{1}}\), and ACCAMS+ fits ACCAMS to obtain \({S^{1}}\). In addition, our method, which uses both global and local information, is better than methods that use only global or only local information. We also find that the model achieves relatively stable prediction accuracy thanks to the boosting framework. A smaller RMSE or MAE value indicates better performance.
Because there are very many ratings, even a small improvement in RMSE or MAE can have a significant impact on the recommendation results. As shown in Table 2, AEPMA consistently outperforms the global methods (PMF, RSVD) and the local methods (ACCAMS, SMA), which means that considering both local and global information is more useful than considering only one of them.
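For readers unfamiliar with Adam, the update with the step size (0.001) and decay rates (0.9, 0.999) quoted in the experiment description is sketched below for a generic differentiable objective. The small constant `eps = 1e-8` is the common default from the Adam paper (Kingma and Ba) and is our assumption, since the text does not state it; `adam` and its `grad_fn` argument are hypothetical names.

```python
import numpy as np

def adam(grad_fn, theta0, step_size=0.001, beta1=0.9, beta2=0.999, eps=1e-8, n_steps=1000):
    """Minimize an objective with Adam, given a function returning its gradient."""
    theta = np.asarray(theta0, dtype=float).copy()
    m = np.zeros_like(theta)  # first-moment (mean) estimate of the gradient
    v = np.zeros_like(theta)  # second-moment (uncentered variance) estimate
    for t in range(1, n_steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias corrections for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        theta -= step_size * m_hat / (np.sqrt(v_hat) + eps)
    return theta
```

Because each step is scaled by `m_hat / sqrt(v_hat)`, its size stays close to `step_size` regardless of the gradient's magnitude, which is why Adam reduces the need to hand-tune a learning rate.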

The real datasets have different rating densities; for example, Ciao and Epinions are sparse (rating densities of 0.036% and 0.010%, respectively). Table 2 shows that recommendation accuracy on the sparse datasets is worse than on the dense dataset, so improving recommendation accuracy on sparse data remains a challenge for recommender systems. Notably, ACCAMS is better than SMA on Ciao and Epinions but worse than SMA on Douban. AEPMA exploits both global and local information and uses the boosting framework to learn hidden information, so it performs well on both sparse and dense datasets. Table 2 also shows that the ensemble-based local methods (ACCAMS, SMA) clearly outperform the global methods (PMF, RSVD). We therefore focus on the relative improvements of AEPMA over the two local baselines on the three datasets, shown in Fig. 2. AEPMA clearly performs better than ACCAMS and SMA, which demonstrates that global information benefits the AEPMA model. Figure 2 also reflects the influence of data sparsity on recommendation accuracy: on the sparse datasets (Ciao, Epinions), the relative improvement of AEPMA over SMA is larger than its improvement over ACCAMS. In other words, ACCAMS performs better than SMA on sparse datasets but worse on the dense dataset, because representing each block by the mean of its values can lead to overfitting on dense data. By exploiting both global and local information, AEPMA can fully learn the complicated ratings, and the boosting framework improves the model's stability and robustness.
Global models such as PMF and RSVD describe all users and items with the same latent factor vectors, inferred from the whole user-item rating matrix. However, in many real-world user-item rating matrices, if we think of the global latent factors as “common interests”, then subsets of users may share “unique interests” that are not reflected by the common interests. Fig. 3 therefore investigates the effect of global and local information in our model: we fix the boosting framework and vary \({S^{1}}\). AEPMA is better than PMF+ and ACCAMS+, and ACCAMS+ performs better than PMF+, because ACCAMS+ trains the model with both global (boosting) and local (\({S^{1}}\)) information. AEPMA, however, learns the sample weights adaptively to capture sufficient information, and is therefore superior to ACCAMS+.

Figure 4(a) analyzes the running time as the number of matrices increases. Being based on boosting, AEPMA can reduce the bias: as the number of iterations increases, the RMSE and MAE decrease gradually, but the running time increases. As a compromise, in this experiment we keep the number of matrices below five. Figure 4(b, c) analyzes the impact of the clustering method with different numbers of clusters k and stencils s on the Ciao dataset. It shows that performance degrades when s is large, mainly because a large number of stencils leads to overfitting. Meanwhile, AEPMA is stable under varying k with s fixed, so a small k is enough to approximate the rating matrix.

5 Conclusions

Traditional matrix approximation-based collaborative filtering methods have a major drawback: they perform poorly at detecting strong associations among small sets of closely related items. In this paper, we capture sufficient information by combining global and local information. More importantly, by placing a Laplacian prior on the user and item weight vectors, we can learn the sample weights adaptively. Within the stochastic gradient boosting framework, we can learn hidden information and enhance recommendation accuracy and scalability. An experimental study on three real-world datasets demonstrates that the proposed AEPMA method outperforms several state-of-the-art ensemble matrix approximation methods.

References

Beutel, A., Ahmed, A., Smola, A.J.: ACCAMS: additive co-clustering to approximate matrices succinctly. In: Proceedings of International Conference on World Wide Web, pp. 119–129 (2016)

Salakhutdinov, R., Mnih, A.: Probabilistic matrix factorization. In: Proceedings of International Conference on Machine Learning, pp. 880–887 (2007)

Lee, J., Kim, S., Lebanon, G., Singer, Y.: Local low-rank matrix approximation. In: Proceedings of the 30th International Conference on Machine Learning (ICML 2013), pp. 82–90 (2013)

Chen, C., Li, D., Zhao, Y., Lv, Q., Shang, L.: WEMAREC: accurate and scalable recommendation through weighted and ensemble matrix approximation. In: Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2015), pp. 303–312 (2015)

Salakhutdinov, R., Mnih, A.: Bayesian probabilistic matrix factorization using Markov chain Monte Carlo. In: Proceedings of the 25th International Conference on Machine Learning (ICML 2008), pp. 880–887. ACM (2008)

Chen, C., Li, D., Lv, Q., Yan, J., Chu, S.M., Shang, L.: MPMA: mixture probabilistic matrix approximation for collaborative filtering. In: Proceedings of the 25th International Joint Conference on Artificial Intelligence (IJCAI 2016), pp. 1382–1388 (2016)

Li, D., Chen, C., Lv, Q., Yan, J., Shang, L., Chu, S.: Low-rank matrix approximation with stability. In: Proceedings of the 33rd International Conference on Machine Learning (ICML 2016), pp. 295–303 (2016)

Srebro, N., Jaakkola, T.: Weighted low-rank approximations. In: Proceedings of the 20th International Conference on Machine Learning (ICML 2003), pp. 720–727 (2003)

Aharon, M., Elad, M., Bruckstein, A.: K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54(11), 4311–4322 (2006)

Jing, L., Wang, P., Yang, L.: Sparse probabilistic matrix factorization by Laplace distribution for collaborative filtering. In: Proceedings of the International Conference on Artificial Intelligence, pp. 1771–1777 (2015)

Lee, J., Kim, S., Lebanon, G., Singer, Y.: Local low-rank matrix approximation. In: Proceedings of the 30th International Conference on Machine Learning, pp. 82–90 (2013)

Mackey, L.W., Jordan, M.I., Talwalkar, A.: Divide-and-conquer matrix factorization. In: Advances in Neural Information Processing Systems, pp. 1134–1142 (2011)

Koren, Y.: Factorization meets the neighborhood: a multifaceted collaborative filtering model. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD 2008), pp. 426–434 (2008)

Herlocker, J.L., Konstan, J.A., Borchers, A., Riedl, J.: An algorithmic framework for performing collaborative filtering. In: Proceedings of the 22nd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 1999), pp. 230–237 (1999)

Sarwar, B., Karypis, G., Konstan, J., Riedl, J.: Item-based collaborative filtering recommendation algorithms. In: Proceedings of the 10th International Conference on World Wide Web (WWW 2001), pp. 285–295 (2001)

Zhang, Y., Zhang, M., Liu, Y., Ma, S.: Improve collaborative filtering through bordered block diagonal form matrices. In: Proceedings of the 36th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2013), pp. 313–322 (2013)

Lawrence, N.D., Urtasun, R.: Non-linear matrix factorization with Gaussian processes. In: Proceedings of the International Conference on Machine Learning (2009)

Mirbakhsh, N., Ling, C.X.: Clustering-based matrix factorization. ArXiv Report arXiv:1301.6659 (2013)

Duchi, J., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12, 2121–2159 (2011)

Moulines, E., Bach, F.R.: Non-asymptotic analysis of stochastic approximation algorithms for machine learning. In: Advances in Neural Information Processing Systems, pp. 451–459 (2011)

Polyak, B.T., Juditsky, A.B.: Acceleration of stochastic approximation by averaging. SIAM J. Control. Optim. 30(4), 838–855 (1992)

Sutskever, I., Martens, J., Dahl, G., Hinton, G.: On the importance of initialization and momentum in deep learning. In: Proceedings of the 30th International Conference on Machine Learning (ICML 2013), pp. 1139–1147 (2013)

Zeiler, M.D.: ADADELTA: an adaptive learning rate method. arXiv preprint arXiv:1212.5701 (2012)

Lee, D.D., Seung, H.S.: Algorithms for non-negative matrix factorization. In: Advances in Neural Information Processing Systems, pp. 556–562 (2001)

Li, D., Chen, C., Lv, Q., Yan, J., Shang, L., Chu, S.M.: Low-rank matrix approximation with stability (2017)

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, X., Jing, L., Liu, H. (2018). Adaptive Ensemble Probabilistic Matrix Approximation for Recommendation. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11258. Springer, Cham. https://doi.org/10.1007/978-3-030-03338-5_28

DOI: https://doi.org/10.1007/978-3-030-03338-5_28

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03337-8

Online ISBN: 978-3-030-03338-5

eBook Packages: Computer Science, Computer Science (R0)