Abstract

Discriminative Correlation Filter (DCF) based trackers have substantially improved tracking performance. They use the first frame of a video sequence to initialize the tracker and are fast owing to their formulation in the Fourier domain. Previous work that applies a DCF layer on top of a pretrained CNN, however, has not taken full advantage of CNN feature maps. In this paper, we propose a tracking architecture that fuses local and global response maps for accurate and robust visual tracking. The feature map extracted from a pretrained CNN is fed into a fully-convolutional DCF layer and a non-local layer, which capture the local and global response maps, respectively. Experiments show that our method achieves state-of-the-art performance on three popular benchmarks: OTB-2013, OTB-2015 and VOT-2016.


1 Introduction

Visual tracking is one of the fundamental problems in computer vision and has a variety of applications, such as human-computer interaction, smart surveillance systems and autonomous driving. Given the initial state of a target object in the first frame of a video sequence, visual tracking aims to model the motion of the target and keep estimating its trajectory under challenging conditions (e.g., deformation, illumination variations, motion blur and occlusion) and the hard computational constraints of real-time vision systems.

A Discriminative Correlation Filter (DCF) learns to discriminate the target object from the surrounding background by solving a ridge regression problem, which can be done extremely efficiently [4, 15]. Recently, DCF based trackers [10, 20] have shown great improvements in accuracy, robustness and speed on several popular tracking benchmarks [18, 31, 32]. Compared to other tracking algorithms, DCF owes its performance to its Fourier-domain formulation, in which correlation becomes a cheap element-wise operation that yields dense response scores over all search locations. In contrast, the sparse sampling used by other algorithms yields only sparse response scores and may lose the target object in complex environments.
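To make the Fourier-domain argument concrete, the following is a minimal 1-D, single-channel sketch of a linear correlation filter learned by ridge regression (a MOSSE/KCF-style closed form without kernels). It assumes circular convolution and a toy signal; all function names are hypothetical and not taken from any of the cited trackers. Training reduces to one division per frequency, and detection over every shift reduces to one element-wise product, so translating the input moves the response peak by the same amount.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform; fine for short toy signals."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft(); keeps the real part because all signals here are real."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * math.pi * k * m / n) for k in range(n)) / n).real
            for m in range(n)]


def gaussian_label(n, center, sigma):
    """Desired response y: a Gaussian peaked at the target position."""
    return [math.exp(-((i - center) ** 2) / (2 * sigma ** 2)) for i in range(n)]


def train_dcf(x, y, lam):
    """Ridge regression min_w ||w (*) x - y||^2 + lam * ||w||^2, where (*) is
    circular convolution, solved in closed form per frequency:
    W = conj(X) * Y / (|X|^2 + lam)."""
    X, Y = dft(x), dft(y)
    return [Xk.conjugate() * Yk / (abs(Xk) ** 2 + lam) for Xk, Yk in zip(X, Y)]


def detect(W, z):
    """Dense response over all circular shifts of z via one element-wise product."""
    Z = dft(z)
    return idft([Wk * Zk for Wk, Zk in zip(W, Z)])


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


n, center, shift = 32, 16, 5
x = [0.5 ** i for i in range(n)]              # toy 1-D feature signal
y = gaussian_label(n, center, sigma=2.0)
W = train_dcf(x, y, lam=1e-2)
z = [x[(i - shift) % n] for i in range(n)]    # same signal translated by `shift`
print(argmax(detect(W, x)), argmax(detect(W, z)))  # 16 21
```

Real DCF trackers apply the same idea per channel on 2-D feature maps with an FFT; the naive DFT here only keeps the sketch dependency-free.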

Beyond multi-dimensional features [12], robust scale estimation [10] and non-linear kernels [15], recent DCF based trackers learn the DCF directly over a deep convolutional neural network (CNN) [7, 11, 19] and benefit from end-to-end training [26]. These works show that, with discriminative CNN feature maps pretrained offline on the ImageNet dataset, DCF gains considerable improvements in performance. However, these algorithms simply integrate a pretrained deep model with DCF, without considering the limitations of CNN features.

In this paper, we propose a non-local neural network learning scheme for better visual tracking. We first modify a given CNN model into a fully-convolutional network, which accepts search images of arbitrary size and yields more accurate localization. We then reformulate DCF as a convolutional layer without bias on top of the CNN feature map, which generates the local response map of the input image. Meanwhile, the CNN feature map is fed into a non-local layer that captures the global response map, which is responsible for modeling background objects. As in DCF, the final response map, obtained by combining the local and global response maps, provides dense response scores over all search locations in the input image. For scale estimation, rather than feeding several search patches extracted at different scales around the center of the target, we crop feature maps at different scales from the original feature map produced by the CNN, so that all scales share a single forward propagation, which speeds up the model. Moreover, since the fully-convolutional DCF layer and the non-local layer are both fully differentiable, the model can be trained and updated with the back-propagation algorithm (Fig. 1).

In summary, our contributions are as follows:

- We propose a non-local network architecture for visual tracking that combines a fully-convolutional DCF layer and a non-local layer, which capture local and global response maps, respectively, to better discriminate the target object from background objects.

- We replace image pyramids with feature map pyramids in scale estimation, saving more than half of the computation time.

- Extensive experiments on three popular tracking benchmarks, OTB-2013 [31], OTB-2015 [32] and VOT-2016 [18], show that our tracker achieves state-of-the-art performance.

2 Related Work

Visual tracking has been widely studied in the literature [25, 33]. In this section, we review the two lines of work most closely related to ours.

Correlation Filter: Since the seminal work of Bolme et al. [4] employed correlation filters for visual tracking, several algorithms [2, 9, 10, 15] have been built upon it and devoted notable efforts to its improvement, such as using multi-dimensional features [12], robust scale estimation [10], reducing boundary effects [13] and non-linear kernels [15]. Recently, CNN based DCF trackers have been introduced [2, 6, 26]; they reformulate DCF as a convolutional layer and apply it on top of pretrained CNN models to obtain the response map. Since DCF is interpreted as a differentiable convolutional layer, the loss function can easily be back-propagated through the DCF layer to the CNN model.

Non-local Neural Network: Non-local operations [30] are an extension of non-local means, a classical filtering algorithm that computes a weighted mean of all pixels in an image. They are a simple and efficient way to capture long-range dependencies in CNNs, effectively enlarging the receptive field. Unlike convolutions, which process a local neighborhood and must be stacked many times to capture long-range dependencies, non-local operations capture them directly by computing interactions between any two positions, regardless of their distance. We can therefore apply non-local operations to capture a global response map that models background objects with only a few layers (Fig. 2).

Overview of our proposed tracking architecture. A search image is fed into the pretrained CNN for feature extraction; the feature map is then fed into the fully-convolutional DCF layer and the non-local layer to capture the local and global response maps, respectively, and the two maps are summed to obtain the final response map.

3 Learning Non-local Representation

In this section, we present a convolutional framework for learning the combination of local and global response maps. The local response map is captured by a fully-convolutional layer formulated as a standard DCF, and the global response map is obtained by non-local operations, which compute the response at each position as a weighted sum of the features at all positions. We then sum the local and global response maps to obtain the final response map.
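The fusion step is a plain element-wise sum, after which the target displacement is read off the peak of the fused map. The sketch below illustrates this with small hand-made maps; the helper names, the 5 × 5 map size, the stride of 8 and the "distractor" values are all hypothetical, chosen only to show how a negative global response at a background object can move the peak back to the target.

```python
def fuse_responses(local_map, global_map):
    """Final response = local (DCF) response + global (non-local) response."""
    return [[a + b for a, b in zip(row_l, row_g)]
            for row_l, row_g in zip(local_map, global_map)]


def peak_displacement(response, stride):
    """Offset of the response peak from the map centre, in input-image pixels.
    `stride` is the total down-sampling factor of the feature extractor
    (a hypothetical value below; the paper does not state it)."""
    h, w = len(response), len(response[0])
    best = max(((r, c) for r in range(h) for c in range(w)),
               key=lambda rc: response[rc[0]][rc[1]])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    return (best[0] - cy) * stride, (best[1] - cx) * stride


local_map = [[0.0] * 5 for _ in range(5)]
local_map[2][2] = 0.8           # response at the true target (map centre)
local_map[1][3] = 1.0           # stronger response at a nearby distractor
global_map = [[0.0] * 5 for _ in range(5)]
global_map[1][3] = -0.5         # hypothetical global term suppressing the distractor

print(peak_displacement(local_map, 8))                                # (-8.0, 8.0)
print(peak_displacement(fuse_responses(local_map, global_map), 8))    # (0.0, 0.0)
```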

3.1 Fully-Convolutional DCF Reformulation

We adopt the VGG16 network [24] as the base model and modify it so that it serves as a feature extractor for the DCF layer.

A fully-convolutional network is a transformation that commutes with translation [3]. It can therefore take a much larger input image containing more background information, rather than a search image the size of the target. Specifically, given the position of the target object in the first frame of a video sequence, we crop an input image centered on the target object and 5 times its size, without worrying about a loss of accuracy. For a translation operator \(L_{\tau }\) and an input image X, with \((L_{\tau }X)[\mu ]=X[\mu - \tau ]\), a neural network \(f_{\rho }\) with learnable parameters \(\rho \) that maps the input image X to the feature map \(f_{\rho }(X)\) is fully-convolutional with integer stride n if

\[ f_{\rho }(L_{n\tau }X) = L_{\tau } f_{\rho }(X) \qquad (1) \]

for any translation \(\tau \).
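The stride-n equivariance property can be checked numerically on a toy 1-D strided convolution. This is a minimal sketch under stated assumptions: circular padding (so the property holds exactly, whereas a real CNN with zero padding satisfies it only away from the borders), an input length divisible by the stride, and hypothetical helper names. Shifting the input by n·τ shifts the output by τ.

```python
def strided_conv1d(x, kernel, stride):
    """Circular 1-D convolution followed by subsampling with the given stride."""
    n, k = len(x), len(kernel)
    full = [sum(kernel[j] * x[(i - j) % n] for j in range(k)) for i in range(n)]
    return full[::stride]


def translate(x, tau):
    """(L_tau x)[mu] = x[mu - tau], with circular wrap-around."""
    n = len(x)
    return [x[(mu - tau) % n] for mu in range(n)]


x = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8]   # length divisible by the stride
kernel = [1, -2, 1]
stride, tau = 2, 3
lhs = strided_conv1d(translate(x, stride * tau), kernel, stride)  # f(L_{n tau} X)
rhs = translate(strided_conv1d(x, kernel, stride), tau)           # L_tau f(X)
print(lhs == rhs)  # True
```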

Building on the fully-convolutional network, we follow the DCF reformulation in [26] and turn it into a fully-convolutional DCF layer. We crop a search patch (also denoted X) from the input image X; the patch contains the object of interest and is typically larger than the target object. The DCF layer learns a discriminative classifier, the correlation filter \(\mathcal {W}\), by minimizing the L2 loss between the response map and the corresponding Gaussian label Y:

\[ \min _{\mathcal {W}} \Vert \mathcal {W} \star f_{\rho }(X) - Y \Vert ^{2} + \lambda \Vert \mathcal {W} \Vert ^{2} \qquad (2) \]

where \(\lambda \) is the regularization parameter. Applying the filter learned with Eq. 2 to a new search patch and locating the maximum of the response map amounts to predicting the target translation through an exhaustive search over all positions.

We treat \(\mathcal {W} \star f_{\rho }(X)\) as a convolution on the feature map \(f_{\rho }(X)\), which can be implemented by a single convolutional layer without a bias term. We can thus reformulate Eq. 2 in a convolutional neural network that learns this convolutional layer by minimizing the following loss function:

\[ L(\mathcal {W}) = \frac{1}{N} \sum _{i=1}^{N} \Vert \mathcal {F}_{\mathcal {W}}(X_{i}) - Y_{i} \Vert ^{2} + \lambda r(\mathcal {W}) \qquad (3) \]

where \(\mathcal {F}_{\mathcal {W}}(X)=\mathcal {W} \star f_{\rho }(X)\) and \(\lambda r(\mathcal {W})\) is the weight decay. We simply use the first frame of the video sequence as the training image and set the batch size N of each iteration to 1. Following the DCF formulation, we take the L2 norm as \(r(\mathcal {W})\). To cover the target object, we set the size k of the filter \(\mathcal {W}\) equal to the size of the target object after the down-sampling performed by the network.

In general, a larger target object occupies more positions in the feature map and leads to more accurate and robust tracking, so we enlarge small target objects to an appropriate size in the search patch through bilinear interpolation. However, as mentioned above, the size k of the filter \(\mathcal {W}\) must equal the size of the target object; this constraint greatly increases the computation of the DCF layer and slows down tracking.

To address this weakness, we adopt a large separable convolution layer [27] as the DCF layer; its structure is illustrated in Fig. 3. A large separable convolution layer is composed of two separate convolution sequences: one is a \(k \times 1\) convolution layer followed by a \(1 \times k\) convolution layer, and the other is a \(1 \times k\) convolution layer followed by a \(k \times 1\) convolution layer.

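The standard DCF layer is parameterized by a convolution kernel \(\mathcal {W}\) of size \(k \times k \times C \times 1\), where C is the number of channels of the feature map, and its computational cost is:

\[ k \cdot k \cdot C \cdot H \cdot W \qquad (4) \]

where the cost depends on the kernel size \(k \times k\), the number of channels C and the feature map size \(H \times W\).

The cost of the large separable convolution layer, in which each sequence maps the C input channels to a single-channel intermediate map and then to the output, is:

\[ 2 \times (k \cdot C \cdot H \cdot W + k \cdot H \cdot W) = 2k(C+1) \cdot H \cdot W \qquad (5) \]

which is the sum of the costs of the two separate convolution sequences.

By replacing the standard DCF layer with the two separate convolution sequences, the computation is reduced by a factor of:

\[ \frac{2k(C+1) \cdot H \cdot W}{k \cdot k \cdot C \cdot H \cdot W} = \frac{2(C+1)}{kC} \approx \frac{2}{k} \qquad (6) \]

In our architecture, k is set equal to the size of the target object and \(C = 64\), so the large separable convolution layer requires roughly 2/k of the computation of the standard DCF layer, with only a small reduction in performance.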
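The cost comparison is simple arithmetic. The sketch below counts multiply-accumulates for both layers, assuming (as in the reconstruction above) a single-channel intermediate map in each separable sequence and an output of the same H × W size; the example values k = 20 and H = W = 50 are hypothetical, while C = 64 comes from the text.

```python
def standard_dcf_cost(k, C, H, W):
    """Multiply-accumulates of one k x k x C x 1 convolution over an H x W map."""
    return k * k * C * H * W


def separable_dcf_cost(k, C, H, W):
    """Two sequences (k x 1 then 1 x k, and 1 x k then k x 1), each assumed to
    map C channels to a 1-channel intermediate map, then to one output channel."""
    one_sequence = k * C * H * W + k * 1 * H * W
    return 2 * one_sequence


k, C, H, W = 20, 64, 50, 50           # hypothetical target size and map size
standard = standard_dcf_cost(k, C, H, W)
separable = separable_dcf_cost(k, C, H, W)
print(standard, separable)            # 64000000 6500000
print(separable / standard)           # 0.1015625, close to 2 / k = 0.1
```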

The resulting layer, composed of two separate convolution sequences and trained with the L2 loss as its objective function, is what we call the fully-convolutional DCF layer.

3.2 Non-local Neural Network

The DCF layer captures the local response map using two separate convolution sequences with a fixed kernel size. Because of the nature of convolution, the DCF layer is only sensitive to the neighborhood of the target object; background objects far from the center of the target are not sufficiently taken into account, so its response is unlikely to agree well with the ground-truth soft label. Some tracking algorithms use more discriminative deep features (e.g., DeepSRDCF [7] and C-COT [11]) or learn complex deep trackers (e.g., MDNet [21]). Instead of using more complex deep features, which may dramatically increase network computation and lead to overfitting, we employ non-local operations as a residual layer to capture the global response map of background objects.

A non-local block [30] is a lightweight stack of several convolution layers and a softmax layer. We apply a non-local block in our network to compute the response at a position as a weighted sum of the features at all positions:

\[ y_{i} = \frac{1}{J(x_{i})} \sum _{\forall j} g(x_{i}, x_{j})\, h(x_{j}) \qquad (7) \]

where y is the computed response map, i is the position whose response is computed, and j enumerates all possible positions of the input feature map x. The pairwise function g computes a relationship between two positions, and the unary function h computes a representation of the input feature map at position j. We set \(J(x_{i}) = \sum _{\forall j} g(x_{i}, x_{j})\) to normalize the response (Fig. 4).

Here we use the embedded Gaussian to compute similarity in an embedding space: \(g(x_{i}, x_{j}) = e^{\theta (x_{i})^{T}\phi (x_{j})}\) and \(h(x_{j}) = W_{g}x_{j}\), where \(\theta (x_{i}) = W_{\theta }x_{i}\) and \(\phi (x_{j}) = W_{\phi }x_{j}\) are two embeddings. With this choice, the normalized weights \(g(x_{i}, x_{j})/J(x_{i})\) form a softmax over j, so these operations can be implemented with a softmax layer and several convolutional layers without bias terms. For any position i in the input feature map, the non-local layer computes the similarity between position i and all positions j to model the global residual response map.
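A minimal sketch of the embedded-Gaussian non-local operation on a flattened feature map is given below, written with plain Python lists. A bias-free 1×1 convolution applies the same matrix-vector product at every position, so the projections W_θ, W_φ and W_g are modelled here as small matrices. The function names and sizes are hypothetical, and the sketch deliberately omits the output projection and residual connection of a full non-local block.

```python
import math


def matvec(M, v):
    """Bias-free linear map, i.e. a 1x1 convolution applied at one position."""
    return [sum(m * a for m, a in zip(row, v)) for row in M]


def softmax(scores):
    top = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def non_local(features, W_theta, W_phi, W_g):
    """features: list of N position vectors x_j (flattened H x W feature map).
    y_i = sum_j softmax_j(theta(x_i) . phi(x_j)) * h(x_j), which equals
    (1 / J(x_i)) * sum_j g(x_i, x_j) * h(x_j) with g = exp(theta^T phi)."""
    theta = [matvec(W_theta, x) for x in features]
    phi = [matvec(W_phi, x) for x in features]
    h = [matvec(W_g, x) for x in features]
    out = []
    for t in theta:                         # one query position i at a time
        weights = softmax([sum(a * b for a, b in zip(t, p)) for p in phi])
        out.append([sum(wj * hj[c] for wj, hj in zip(weights, h))
                    for c in range(len(h[0]))])
    return out


feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]   # 4 positions, C = 2
I = [[1.0, 0.0], [0.0, 1.0]]
print(non_local(feats, I, I, I))
```

Every output position depends on all input positions, however far apart, which is the property the text relies on to model background objects.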

Compared to the DCF layer, which sums the weighted feature map over a local neighborhood (e.g., \(i - s \le j \le i + s\) for a kernel size \(2s+1\) equal to the object size), the non-local layer captures weighted features over the entire feature map, which increases the influence of background objects on the response at the target object.

3.3 Scale Estimation

Instead of feeding several search images extracted at different scales around the center of the target object, we crop feature maps at different scales from the original feature map produced by the VGG16 network. These feature maps are then resized to a fixed size before being fed into the DCF layer and the non-local layer to generate the final response map. This operation shares the feature extraction across scales and saves much computation without degrading performance.
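The feature-map pyramid can be sketched as "crop one shared map at several window sizes, then resize every crop to the same fixed size". The sketch below is single-channel and uses hypothetical helper names, scale factors, border replication for out-of-range indices, and bilinear interpolation with aligned corners; none of these details are specified in the text. A TensorFlow implementation would more naturally use `tf.image.crop_and_resize`.

```python
import math


def crop(fmap, cy, cx, ch, cw):
    """Crop a ch x cw window centred at (cy, cx); out-of-range indices are
    clamped, i.e. the border of the feature map is replicated."""
    H, W = len(fmap), len(fmap[0])
    top = int(round(cy - (ch - 1) / 2))
    left = int(round(cx - (cw - 1) / 2))
    return [[fmap[min(max(top + i, 0), H - 1)][min(max(left + j, 0), W - 1)]
             for j in range(cw)] for i in range(ch)]


def resize_bilinear(patch, out_h, out_w):
    """Bilinear resize with aligned corners."""
    in_h, in_w = len(patch), len(patch[0])

    def coord(i, n_in, n_out):
        return i * (n_in - 1) / (n_out - 1) if n_out > 1 else 0.0

    out = []
    for i in range(out_h):
        y = coord(i, in_h, out_h)
        y0 = int(math.floor(y))
        y1, dy = min(y0 + 1, in_h - 1), y - y0
        row = []
        for j in range(out_w):
            x = coord(j, in_w, out_w)
            x0 = int(math.floor(x))
            x1, dx = min(x0 + 1, in_w - 1), x - x0
            upper = patch[y0][x0] * (1 - dx) + patch[y0][x1] * dx
            lower = patch[y1][x0] * (1 - dx) + patch[y1][x1] * dx
            row.append(upper * (1 - dy) + lower * dy)
        out.append(row)
    return out


def feature_pyramid(fmap, cy, cx, h, w, scales):
    """One shared feature map -> one fixed-size h x w patch per scale factor."""
    return [resize_bilinear(crop(fmap, cy, cx, max(1, round(h * s)), max(1, round(w * s))), h, w)
            for s in scales]


fmap = [[i * 12 + j for j in range(12)] for i in range(12)]   # toy 12 x 12 map
pyramid = feature_pyramid(fmap, 6, 6, 6, 6, scales=(0.8, 1.0, 1.25))
print([(len(p), len(p[0])) for p in pyramid])  # [(6, 6), (6, 6), (6, 6)]
```

Only the cheap crop-and-resize is repeated per scale; the expensive backbone forward pass runs once.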

We use a smoothing function to update the size \(s_{t}\) of the predicted target object:

\[ s_{t} = \beta s_{t}^{*} + (1 - \beta ) s_{t-1} \qquad (8) \]

where \(s = (w, h)\), \(s_{t}^{*}\) is the predicted size of the target object in frame t at the scale with the maximum response value, and \(\beta \) is the weight factor that smooths the update of the predicted size.
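Putting scale selection and smoothing together gives a short update rule. The sketch below assumes that the predicted size is the previous size multiplied by the scale factor whose response map has the highest peak, and that the smoothing is applied to width and height independently; the function name, the scale set and the value of β are hypothetical.

```python
def select_and_smooth(prev_size, scales, peak_responses, beta):
    """Pick the scale whose response map has the highest peak, then smooth:
    s_t = beta * s_t_star + (1 - beta) * s_{t-1}, applied to (w, h) separately."""
    best = max(range(len(scales)), key=lambda i: peak_responses[i])
    s_star = tuple(v * scales[best] for v in prev_size)      # predicted size s_t*
    return tuple(beta * new + (1 - beta) * old for new, old in zip(s_star, prev_size))


# Hypothetical frame: three scales, the largest one wins with peak 0.9.
new_size = select_and_smooth((100.0, 50.0), (0.95, 1.0, 1.05), (0.6, 0.7, 0.9), beta=0.5)
print(new_size)
```

With β = 0.5, the 5% scale increase predicted for this frame is applied only halfway, which damps jitter from noisy scale estimates.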

4 Experiments

In this section, we investigate the effect of combining the local response map from the DCF layer with the global response map from the non-local layer. We first introduce the implementation details and then compare our tracker with state-of-the-art trackers on the benchmark datasets.

4.1 Implementation Details

Our tracker is implemented in Python with TensorFlow [1] and runs at around 5 FPS on a PC with a 3.7 GHz Intel i7 CPU, accelerated by an NVIDIA GeForce Titan X GPU. For each new video sequence, we obtain the training image from the first frame by padding or cropping it to 5 times the width and height of the target object. We then feed it into the pretrained VGG16 network [24] for feature extraction. After extracting the feature map, we apply the DCF layer and the non-local layer to it to obtain the local and global response maps, respectively, and sum them to obtain the final response map. The regression target map used to compute the loss is generated by a two-dimensional Gaussian function with a peak value of 1.0. We compute the difference between the response map and the regression target map with the L2 loss function and use the Adam optimizer with a learning rate of 5e−8 for back-propagation. Training stops once the loss falls below a given threshold, and the model is updated with a learning rate of 5e−9 whenever the final response map has a variance above another given threshold.
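Two pieces of the training procedure are easy to sketch without TensorFlow: the Gaussian regression target with peak value 1.0, and the variance-based trigger for online updates exactly as stated above. The map size, σ and the variance threshold below are hypothetical (the paper gives no values for them); only the two learning rates are taken from the text, and they are recorded as constants rather than used by an optimizer here.

```python
import math

INITIAL_LR, UPDATE_LR = 5e-8, 5e-9   # learning rates reported in the text


def gaussian_target(h, w, sigma):
    """2-D Gaussian regression target with peak value 1.0 at the map centre."""
    cy, cx = (h - 1) / 2, (w - 1) / 2
    return [[math.exp(-((i - cy) ** 2 + (j - cx) ** 2) / (2 * sigma ** 2))
             for j in range(w)] for i in range(h)]


def variance(response):
    vals = [v for row in response for v in row]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def should_update(response, var_threshold):
    """Online-update trigger as described: update the model when the variance
    of the final response map exceeds a (hypothetical) threshold."""
    return variance(response) > var_threshold


target = gaussian_target(9, 9, sigma=2.0)
flat = [[0.5] * 9 for _ in range(9)]
print(target[4][4], should_update(target, 0.01), should_update(flat, 0.01))
```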

4.2 Comparison with State-of-the-Art

We extensively evaluate our tracker on three popular tracking benchmarks, OTB-2013 [31], OTB-2015 [32] and VOT-2016 [18], and compare it with several state-of-the-art trackers.

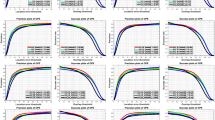

OTB-2013 Dataset: The OTB-2013 benchmark [31] contains 50 fully annotated video sequences with various tracking challenges, such as deformation, fast motion and occlusion. We compare our method with recent state-of-the-art trackers, including KCF [15], ECO [6], Staple [2], SRDCF [8], LCT [20], SINT [28], SiamFC [3], SRDCFdecon [9], DeepSRDCF [7], Struck [14], MDNet [21], CF2 [19], C-COT [11], SCT [5], MEEM [34], TCNN [22], HDT [23], STCT [29], MUSTer [17] and CREST [26]. We adopt the one-pass evaluation (OPE) with the precision and success plot metrics defined in [31] to evaluate the trackers. Figure 5 compares our tracker with the other trackers. The figure legend reports the distance precision score at 20 pixels for the precision plots and the area-under-curve (AUC) score for the success plots. Our tracker achieves state-of-the-art performance among all the trackers. Its higher precision scores at low location error thresholds (5 to 10 pixels) indicate that it rarely misses the target even under strict thresholds. It is worth noting that, compared with CREST [26], which also uses one convolutional layer to formulate the DCF, our tracker performs better in both measures.
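For readers unfamiliar with the OPE metrics, the sketch below computes the two legend scores from per-frame bounding boxes given as (x, y, w, h). It follows the commonly used OTB conventions as an assumption (precision is the fraction of frames whose center location error is at most 20 pixels; the success AUC is the mean success rate over the overlap thresholds 0, 0.05, ..., 1, with success meaning IoU strictly above the threshold). It is an illustrative sketch with hypothetical function names, not the official benchmark toolkit.

```python
import math


def center_error(a, b):
    """Euclidean distance between the centres of two (x, y, w, h) boxes."""
    ax, ay = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx, by = b[0] + b[2] / 2, b[1] + b[3] / 2
    return math.hypot(ax - bx, ay - by)


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def precision_at(pred, gt, threshold=20.0):
    """Fraction of frames whose centre location error is within `threshold` px."""
    errs = [center_error(p, g) for p, g in zip(pred, gt)]
    return sum(e <= threshold for e in errs) / len(errs)


def success_auc(pred, gt, n_thresholds=21):
    """Mean success rate over overlap thresholds 0, 0.05, ..., 1."""
    ious = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (n_thresholds - 1) for i in range(n_thresholds)]
    rates = [sum(o > t for o in ious) / len(ious) for t in thresholds]
    return sum(rates) / len(rates)


gt = [(10, 20, 30, 40)] * 5                 # toy ground truth for five frames
far = [(40, 20, 30, 40)] * 5                # predictions shifted 30 px to the right
print(precision_at(gt, gt), success_auc(gt, gt))
print(precision_at(far, gt), success_auc(far, gt))
```

Because AUC averages over all overlap thresholds, it rewards tight boxes, while the 20-pixel precision score only checks that the predicted center is close.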

OTB-2015 Dataset: The OTB-2015 benchmark [32] contains 100 fully annotated video sequences. We also compare our tracker with several trackers, including MDNet [21], C-COT [11], SRDCFdecon [9], HDT [23], Staple [2], SRDCF [8], DeepSRDCF [7], CNN-SVM [16], CF2 [19], LCT [20], DSST [10], MEEM [34], KCF [15] and CREST [26]. We adopt the one-pass evaluation (OPE) with the precision and success plot metrics defined in [31] to evaluate the trackers. Figure 6 compares our tracker with the other trackers. Our tracker also achieves state-of-the-art performance among all the trackers. Unlike on OTB-2013 [31], however, our tracker slightly underperforms C-COT [11] on OTB-2015, which suggests that it is less robust than C-COT in more challenging conditions.

VOT-2016 Dataset: The VOT-2016 benchmark [18] contains 60 challenging videos selected from a set of more than 300 videos. We compare our model with the top-ranked trackers, including TCNN [22], C-COT [11], ECO [6], Staple [2], EBT [35], MDNet [21], SiamFC [3], SSAT [18] and CREST [26], in terms of expected average overlap (EAO), accuracy values (Av) and robustness values (Rv). Table 1 compares our tracker with these state-of-the-art trackers. Among all the trackers, ECO [6] achieves the best performance. The top five trackers are all based on deep CNNs, and our tracker achieves performance comparable to theirs.

5 Conclusion

In this paper, we present a novel network architecture for accurate and robust visual tracking that fuses local and global response maps. Instead of applying a DCF layer on top of a pretrained CNN and merely regressing the local response map, we introduce a fully-convolutional DCF layer that captures the local response map and a non-local layer that captures the global response map, which helps the model distinguish the target object from the background. We also replace image pyramids with feature map pyramids in scale estimation, which saves about half of the time, since all scales share a single forward pass. Experiments on three popular tracking benchmarks show that our model achieves state-of-the-art performance.

References

Abadi, M., et al.: Tensorflow: a system for large-scale machine learning. In: Proceedings of the 12th USENIX Conference on Operating Systems Design and Implementation, vol. 16, pp. 265–283. USENIX Association (2016)

Bertinetto, L., Valmadre, J., Golodetz, S., Miksik, O., Torr, P.H.S.: Staple: complementary learners for real-time tracking. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1401–1409. IEEE (2016)

Bertinetto, L., Valmadre, J., Henriques, J.F., Vedaldi, A., Torr, P.H.S.: Fully-convolutional siamese networks for object tracking. In: Hua, G., Jégou, H. (eds.) ECCV 2016. LNCS, vol. 9914, pp. 850–865. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-48881-3_56

Bolme, D.S., Beveridge, J.R., Draper, B.A., Lui, Y.M.: Visual object tracking using adaptive correlation filters. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 2544–2550. IEEE (2010)

Choi, J., Chang, H.J., Jeong, J., Demiris, Y., Choi, J.Y.: Visual tracking using attention-modulated disintegration and integration. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 4321–4330. IEEE (2016)

Danelljan, M., Bhat, G., Khan, F.S., Felsberg, M.: Eco: efficient convolution operators for tracking. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, pp. 21–26. IEEE (2017)

Danelljan, M., Häger, G., Khan, F.S., Felsberg, M.: Convolutional features for correlation filter based visual tracking. In: 2015 IEEE International Conference on Computer Vision Workshop, pp. 58–66. IEEE (2015)

Danelljan, M., Häger, G., Khan, F.S., Felsberg, M.: Learning spatially regularized correlation filters for visual tracking. In: 2015 IEEE International Conference on Computer Vision, pp. 4310–4318. IEEE (2015)

Danelljan, M., Häger, G., Khan, F.S., Felsberg, M.: Adaptive decontamination of the training set: a unified formulation for discriminative visual tracking. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1430–1438. IEEE (2016)

Danelljan, M., Häger, G., Shahbaz Khan, F., Felsberg, M.: Accurate scale estimation for robust visual tracking. In: Proceedings of the British Machine Vision Conference. BMVA Press (2014)

Danelljan, M., Robinson, A., Shahbaz Khan, F., Felsberg, M.: Beyond correlation filters: learning continuous convolution operators for visual tracking. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9909, pp. 472–488. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46454-1_29

Galoogahi, H.K., Sim, T., Lucey, S.: Multi-channel correlation filters. In: 2013 IEEE International Conference on Computer Vision, pp. 3072–3079. IEEE (2013)

Galoogahi, H.K., Sim, T., Lucey, S.: Correlation filters with limited boundaries. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 4630–4638. IEEE (2015)

Hare, S., et al.: Struck: structured output tracking with kernels. IEEE Trans. Pattern Anal. Mach. Intell. 38(10), 2096–2109 (2016)

Henriques, J.F., Caseiro, R., Martins, P., Batista, J.: High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 37(3), 583–596 (2015)

Hong, S., You, T., Kwak, S., Han, B.: Online tracking by learning discriminative saliency map with convolutional neural network. In: Proceedings of the 32nd International Conference on International Conference on Machine Learning, pp. 597–606. JMLR.org (2015)

Hong, Z., Chen, Z., Wang, C., Mei, X., Prokhorov, D., Tao, D.: Multi-store tracker (muster): a cognitive psychology inspired approach to object tracking. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 749–758. IEEE (2015)

Kristan, M.: The visual object tracking VOT2016 challenge results. In: Hua, G., Jégou, H. (eds.) ECCV 2016. LNCS, vol. 9914, pp. 777–823. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-48881-3_54

Ma, C., Huang, J.B., Yang, X., Yang, M.H.: Hierarchical convolutional features for visual tracking. In: 2015 IEEE International Conference on Computer Vision, pp. 3074–3082. IEEE (2015)

Ma, C., Yang, X., Zhang, C., Yang, M.H.: Long-term correlation tracking. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 5388–5396. IEEE (2015)

Nam, H., Han, B.: Learning multi-domain convolutional neural networks for visual tracking. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 4293–4302. IEEE (2016)

Nam, H., Baek, M., Han, B.: Modeling and propagating cnns in a tree structure for visual tracking. arXiv preprint arXiv:1608.07242 (2016)

Qi, Y., et al.: Hedged deep tracking. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 4303–4311. IEEE (2016)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. CoRR (2014)

Smeulders, A.W.M., Chu, D.M., Cucchiara, R., Calderara, S., Dehghan, A., Shah, M.: Visual tracking: an experimental survey. IEEE Trans. Pattern Anal. Mach. Intell. 36(7), 1442–1468 (2014)

Song, Y., Ma, C., Gong, L., Zhang, J., Lau, R.W.H., Yang, M.H.: Crest: convolutional residual learning for visual tracking. In: 2017 IEEE International Conference on Computer Vision, pp. 2574–2583. IEEE (2017)

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.: Rethinking the inception architecture for computer vision. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2818–2826. IEEE (2016)

Tao, R., Gavves, E., Smeulders, A.W.M.: Siamese instance search for tracking. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1420–1429. IEEE (2016)

Wang, L., Ouyang, W., Wang, X., Lu, H.: Stct: sequentially training convolutional networks for visual tracking. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1373–1381. IEEE (2016)

Wang, X., Girshick, R., Gupta, A., He, K.: Non-local neural networks. arXiv preprint arXiv:1711.07971 (2017)

Wu, Y., Lim, J., Yang, M.H.: Online object tracking: a benchmark. In: 2013 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2411–2418. IEEE (2013)

Wu, Y., Lim, J., Yang, M.H.: Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 37(9), 1834–1848 (2015)

Yilmaz, A., Javed, O., Shah, M.: Object tracking: a survey. ACM Comput. Surv. 38(4), 13 (2006)

Zhang, J., Ma, S., Sclaroff, S.: MEEM: robust tracking via multiple experts using entropy minimization. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8694, pp. 188–203. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10599-4_13

Zhu, G., Porikli, F., Li, H.: Beyond local search: tracking objects everywhere with instance-specific proposals. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, pp. 943–951. IEEE (2016)


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, P., Wang, Z. (2018). Learning Non-local Representation for Visual Tracking. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11259. Springer, Cham. https://doi.org/10.1007/978-3-030-03341-5_18


DOI: https://doi.org/10.1007/978-3-030-03341-5_18


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03340-8

Online ISBN: 978-3-030-03341-5

eBook Packages: Computer Science, Computer Science (R0)