Abstract

Off-policy algorithms have played important roles in deep reinforcement learning. Since the naive off-policy policy gradient is a biased estimate, previous works employed importance sampling to obtain an unbiased estimate, which requires the behavior policy to be known in advance. However, it is difficult to choose a reasonable behavior policy for complex agents. Moreover, importance sampling usually produces large variance. To address these problems, this paper presents a novel actor-critic policy gradient algorithm. Specifically, we employ the tree-backup method in the off-policy setting to obtain an unbiased estimate of the target policy gradient without using importance sampling. Meanwhile, we combine naive episode-experience replay and experience replay to obtain trajectory samples and reduce the strong correlations between these samples. The experimental results demonstrate the advantages of the proposed method over the competing methods.



Keywords

- Off-policy actor-critic policy gradient

- Tree-backup algorithm

- All-action method

- Episode-experience replay

1 Introduction

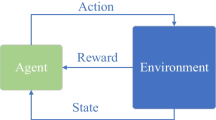

In reinforcement learning, off-policy methods have been receiving increasing attention. They remove the restriction of on-policy methods that the agent can only learn the policy it is executing: in the off-policy setting, the agent can learn its target policy while executing a different behavior policy. There are two main forms of off-policy algorithms, one based on the value function and the other based on the policy gradient.

The value-function approach has worked well in many applications. Q-learning [16], the prototype of DQN [9], is a classical value-function-based off-policy algorithm. Unlike on-policy value-function methods such as SARSA, Q-learning directly learns the optimal action-value function while executing an exploratory policy. However, Q-learning is only guaranteed to converge to the optimal policy in the tabular case and may diverge with function approximation [2]. Large overestimations of the action values may also make it perform poorly in many stochastic environments [1, 3, 5]. The off-policy per-decision importance sampling Monte-Carlo method [11] is another option. However, using importance sampling to correct the bias may produce large variance and therefore make learning unstable. More recently, Harutyunyan et al. [4] showed that if the behavior policy \(\mu \) and the target policy \(\pi \) are not too far apart, off-policy policy evaluation converges to the desired \(Q^\pi \) even without correcting for the “off-policyness” of a trajectory. By this result, when \(\mu \) is close to \(\pi \), off-policy methods can be treated as on-policy methods and importance sampling is unnecessary. However, the similarity between the two policies is difficult to control, which makes this approach restrictive and impractical, so importance sampling still seems unavoidable. Munos et al. [10] proposed a new off-policy algorithm, Retrace(\(\lambda \)), which uses an importance sampling ratio truncated at 1 and can safely use samples collected from any behavior policy \(\mu \), regardless of its degree of “off-policyness”. However, one inherent disadvantage is that the importance sampling ratio forces an explicit behavior policy \(\mu \) to be chosen for training. Training performance is directly affected by the behavior policy, and choosing a reasonable behavior policy, especially for a complex agent, is often difficult.

From the policy-gradient perspective, Degris et al. [2] proposed the off-policy policy-gradient theorem and introduced the first off-policy actor-critic method, called Off-PAC. In this actor-critic framework, the critic learns an off-policy estimate of the action-value function with the GTD(\(\lambda \)) algorithm, and the actor uses this estimate to update the policy incrementally with eligibility traces. However, to deal with the bias caused by the difference between sample distributions, the gradient of the objective function in Off-PAC also relies on importance sampling. Building on the off-policy policy-gradient theorem, Wang et al. proposed ACER [15], an off-policy actor-critic algorithm with experience replay. To make it stable and sample efficient and to perform well on challenging environments, ACER introduces several innovations, such as truncated importance sampling, stochastic dueling network architectures, and a new trust region policy optimization method. However, as with Retrace(\(\lambda \)), it still requires choosing a reasonable behavior policy, which is sometimes hard.

In summary, correcting the difference between sample distributions with importance sampling is a popular approach. To avoid the variance explosion of ordinary importance sampling, many variants have been proposed, such as weighted importance sampling [8] and truncated importance sampling. Note, however, that these variants usually reduce variance at the cost of increasing the bias of the estimator. Moreover, the key drawback of importance sampling is that the behavior policy must be known, Markov (purely a function of the current state), and represented as explicit action probabilities. For complex agents, none of these may be true [11].

Based on this discussion, we improve the estimator on both the actor and the critic side so that our off-policy policy gradient estimator is theoretically unbiased without using importance sampling. Specifically, we use the all-action method [14] in the actor and exploit the tree-backup method to obtain an unbiased n-step return for estimating the action-value function in the critic. Meanwhile, inspired by experience replay, and in order to supply the tree-backup algorithm with enough weakly correlated trajectory samples during learning, we propose episode-experience replay, which combines naive episode-experience replay and experience replay. The experimental results demonstrate the advantages of the proposed method over the competing methods.

2 Preliminaries and Notation

In this paper, we consider the episodic framework, in which the agent interacts with its environment in a sequence of episodes, numbered \(m=1,2,\ldots \), each consisting of a finite number of time steps \(t=0,1,2,\ldots ,T_{end}^m\). The first state of each episode, \(s_0 \in \mathcal {S}\), is drawn from a fixed initial distribution \(p_0(s_0)\). We model the problem as a Markov decision process comprising a state space \(\mathcal {S}\); a discrete action space \(\mathcal {A}\); a transition distribution \(\mathcal {P}:\mathcal {S}\times \mathcal {S}\times \mathcal {A}\rightarrow [0,1]\), where \(p(s'|s, a)\) is the probability of transitioning into state \(s'\) from state s after taking action a; and an expected reward function \(\mathcal {R}:\mathcal {S}\times \mathcal {A}\rightarrow \mathbb {R}\) that provides the expected reward r(s, a) for taking action a in state s. We assume that \(\mathcal {A}\) is finite and that the environment is completely characterized by the one-step state-transition probabilities \(p(s'|s, a)\) and the expected rewards r(s, a), for all \(s, s' \in \mathcal {S}\) and \(a \in \mathcal {A}\).

The target policy of the agent is denoted \(\pi _\theta \): \(\mathcal {S}\rightarrow \mathcal {P}(\mathcal {A})\), which maps states to probability distributions over actions, where \(\theta \in \mathbb {R}^n\) is a vector of n parameters. The return from a state is defined as the discounted sum of future rewards \(R_t=\sum _{i=t}^{T_{end}}\gamma ^{i-t}r(s_i, a_i)\), with discount factor \(\gamma \in [0,1]\). Note that the return depends on the actions chosen, and therefore on the policy, and may be stochastic. We define the state-value function of \(\pi _\theta \) as \(V^{\pi _\theta }(s)=E_{\pi _\theta }(R_0|s_0=s)\) and the action-value function as \(Q^{\pi _\theta }(s, a)=E_{\pi _\theta }(R_0|s_0=s, a_0=a)\); both are expected total discounted rewards.
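As a small illustration of the return definition above, the following Python sketch computes \(R_t\) for one finished episode. It assumes `rewards[i]` stores \(r(s_i, a_i)\); the function name `discounted_return` is ours, not the paper's.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float, t: int = 0) -> float:
    """R_t = sum_{i=t}^{T_end} gamma^(i - t) * r(s_i, a_i), where rewards[i] = r(s_i, a_i)."""
    ret = 0.0
    for r in reversed(rewards[t:]):   # accumulate backwards: R_i = r_i + gamma * R_{i+1}
        ret = r + gamma * ret
    return ret


print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1 + 0.5 + 0.25 = 1.75
```

Accumulating from the end of the episode avoids recomputing powers of \(\gamma\) and gives every \(R_i\) along the way in a single pass.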

The behavior policy is denoted \(b_\mu \): \(\mathcal {S}\rightarrow \mathcal {P}(\mathcal {A})\), where \(\mu \in \mathbb {R}^m\) is a vector of m parameters. We observe a stream of data consisting of states \(s_t \in \mathcal {S}\), actions \(a_t \in \mathcal {A}\), and rewards \(r_t \in \mathbb {R}\) for \(t=1,2,\ldots \), with actions selected by a distinct behavior policy \(b_\mu (a|s) \in (0,1]\). Our aim is to choose \(\theta \) so as to maximize the following scalar objective function:

\[ J(\theta ) = \sum _{s \in \mathcal {S}} d^b(s)\, V^{\pi _\theta }(s), \qquad (1) \]

where \(d^b(s)=\lim _{t \rightarrow \infty }P(s_t=s| s_0, b)\) is the limiting distribution of states under b, and \(P(s_t=s| s_0, b)\) is the probability that \(s_t=s\) when starting in \(s_0\) and executing b. The objective function is weighted by \(d^b\) because, in the off-policy setting, data are obtained according to the behavior distribution. For simplicity of notation, we write \(\pi \) and implicitly mean \(\pi _\theta \).
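To make the objective concrete, the sketch below evaluates \(J(\theta )=\sum _s d^b(s)V^{\pi }(s)\) on a tiny tabular MDP. The arrays `P`, `R`, `pi`, `b` and the helper names are hypothetical. It approximates \(d^b\) by power iteration, which assumes the state chain under b converges, and treats the toy MDP as continuing so that \(V^\pi \) can be solved from the Bellman equation with \(\gamma < 1\).

```python
import numpy as np


def limiting_distribution(P_b: np.ndarray, p0: np.ndarray, iters: int = 1000) -> np.ndarray:
    """Approximate d^b(s) = lim_t P(s_t = s | s_0, b) by repeatedly applying P_b to p0."""
    d = p0.astype(float)
    for _ in range(iters):
        d = d @ P_b
    return d


def objective(P, R, pi, b, p0, gamma):
    """J(theta) = sum_s d^b(s) V^pi(s) for a tabular MDP.

    P[s, a, s2] = p(s2 | s, a); R[s, a] = r(s, a); pi, b: [S, A] action probabilities.
    """
    S = P.shape[0]
    P_b = np.einsum("sa,sat->st", b, P)       # state-to-state transitions under b
    P_pi = np.einsum("sa,sat->st", pi, P)     # state-to-state transitions under pi
    r_pi = (pi * R).sum(axis=1)               # expected one-step reward under pi
    V_pi = np.linalg.solve(np.eye(S) - gamma * P_pi, r_pi)   # V = r_pi + gamma * P_pi V
    d_b = limiting_distribution(P_b, p0)
    return float(d_b @ V_pi), d_b, V_pi


# Two states, two actions: action 0 stays, action 1 switches state.
P = np.zeros((2, 2, 2))
P[0, 0, 0] = P[1, 0, 1] = 1.0
P[0, 1, 1] = P[1, 1, 0] = 1.0
R = np.array([[1.0, 0.0], [0.0, 0.0]])   # reward only for staying in state 0
pi = np.array([[1.0, 0.0], [1.0, 0.0]])  # hypothetical target policy: always stay
b = np.full((2, 2), 0.5)                  # hypothetical behavior policy: uniform
J, d_b, V_pi = objective(P, R, pi, b, p0=np.array([1.0, 0.0]), gamma=0.9)
# d_b is about [0.5, 0.5] and V_pi about [10, 0], so J is about 5
```

The states are weighted by where the behavior policy actually spends its time, while the values are those of the target policy.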

3 Off-Policy Actor-Critic Combined with Tree-Backup

In the off-policy setting, computing the naive policy gradient estimator from samples drawn from the behavior policy's distribution, rather than the target policy's, may introduce bias and therefore change the solution the estimator converges to [12]. Many off-policy methods therefore use importance sampling, a general technique for correcting this bias. However, as discussed above, importance sampling requires the behavior policy to be explicitly represented as action probabilities and may give the estimator large variance. We therefore use the all-action method and the tree-backup algorithm, which allow the policy gradient to be estimated without importance sampling.

3.1 Off-Policy Actor-Critic

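The off-policy policy-gradient theorem proposed by Degris et al. [2] is:

\[ \nabla _\theta J(\theta ) \approx g(\theta ) = E_{s \sim d^b,\, a \sim b(\cdot |s)}\big[ \rho (s,a)\, \psi (s,a)\, Q^{\pi }(s,a) \big], \qquad (2) \]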

where \(\rho (s,a)=\frac{\pi (a|s)}{b(a|s)}\) is the ordinary importance sampling ratio and \(\psi (s,a)=\frac{\nabla _{\theta }\pi (a|s)}{\pi (a|s)}\) is the eligibility vector. Using the ordinary importance sampling ratio \(\rho (s,a)\) in the equation above yields an unbiased estimator of the actor-critic policy gradient under the target policy \(\pi \) from samples drawn from the behavior policy b's distribution. However, when an unlikely event occurs, \(\rho (s,a)\) can be very large, which gives the estimator high variance and makes learning unstable. Many techniques reduce this variance at the cost of introducing bias, such as weighted importance sampling, which takes a weighted average of the samples and thus smooths the variance [8], and importance weight truncation, which truncates the ratio at a constant c, i.e. \(\overline{\rho _{t}}=\min \{c,\rho _{t}\}\) [15]. However, the key inherent drawback of importance sampling is that the behavior policy must be known, Markov (purely a function of the current state), and represented as explicit action probabilities. For complex agents, none of these may be true [11].
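The bias and variance trade-off of truncation can be checked exactly in a single state. For simplicity, the sketch below uses the scalar quantity \(E_{a\sim \pi }[Q(s,a)]\) in place of the full gradient and computes the mean and variance of \(w(a)\,Q(s,a)\) with \(a \sim b\), once with the ordinary ratio \(\rho \) and once with \(\min \{c,\rho \}\). The policies, Q-values and \(c=1\) are made-up numbers chosen so that b rarely takes the action \(\pi \) prefers; plain truncation is shown without any bias-correction term.

```python
import numpy as np


def estimator_mean_var(weights: np.ndarray, q: np.ndarray, b: np.ndarray):
    """Exact mean and variance of w(a) * Q(s, a) when a ~ b(.|s) in a single state."""
    values = weights * q
    mean = float(b @ values)
    var = float(b @ values**2 - mean**2)
    return mean, var


pi = np.array([0.9, 0.1])   # hypothetical target policy pi(.|s)
b = np.array([0.1, 0.9])    # hypothetical behavior policy b(.|s): rarely picks action 0
q = np.array([1.0, 0.0])    # hypothetical Q^pi(s, .)
rho = pi / b                # ordinary ratio, about [9, 1/9]
c = 1.0                     # truncation level (assumption)
rho_bar = np.minimum(c, rho)

true_value = float(pi @ q)                       # E_{a~pi}[Q] = 0.9
ordinary = estimator_mean_var(rho, q, b)         # about (0.9, 7.29): unbiased, high variance
truncated = estimator_mean_var(rho_bar, q, b)    # about (0.1, 0.09): low variance, biased
```

The ordinary ratio matches the true value on average but its variance is about eight times larger than the value itself, while truncation removes most of the variance by sacrificing accuracy.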

3.2 Off-Policy Actor-Critic Combined with All-Action and Tree-Backup

Given the disadvantages of importance sampling discussed above, we look for another way to eliminate the bias without it.

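Here, we start from Eq. (2) and rewrite it as follows:

\[ \begin{aligned} g(\theta ) &= E_{s_t \sim d^b}\Big[ \sum _{a \in \mathcal {A}} b(a|s_t)\, \frac{\pi (a|s_t)}{b(a|s_t)}\, \frac{\nabla _\theta \pi (a|s_t)}{\pi (a|s_t)}\, Q^{\pi }(s_t,a) \Big] \\ &= E_{s_t \sim d^b}\Big[ \sum _{a \in \mathcal {A}} \nabla _\theta \pi (a|s_t)\, Q^{\pi }(s_t,a) \Big], \end{aligned} \]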

where we write the expectation over actions explicitly as a sum, which removes the random variable \(a_t\); the behavior probabilities \(b(a|s_t)\) then cancel, so no importance sampling is needed in the actor. Algorithms of this form are called all-action methods because an update is made for all actions possible in each state encountered, irrespective of which action was actually taken [14].
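The all-action rewrite can be verified numerically. The sketch below assumes a single state with a softmax policy over hypothetical logits `theta`, a hypothetical behavior policy `b` and hypothetical action values `q`, and checks that the importance-sampled expectation \(E_{a\sim b}[\rho \,\psi \,Q]\) equals \(\sum _a \nabla _\theta \pi (a|s)Q(s,a)\), in which b no longer appears.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())          # shift for numerical stability
    return e / e.sum()


def softmax_policy_jacobian(p: np.ndarray) -> np.ndarray:
    """Row a is grad_theta pi(a|s) for a softmax over logits theta: pi(a) * (1[a == k] - pi(k))."""
    return np.diag(p) - np.outer(p, p)


theta = np.array([0.5, -1.0, 2.0])   # hypothetical logits for one state s
b = np.array([0.5, 0.3, 0.2])        # hypothetical behavior policy b(.|s)
q = np.array([1.0, -2.0, 0.5])       # hypothetical Q^pi(s, .)

pi = softmax(theta)
grad_pi = softmax_policy_jacobian(pi)   # shape [A, dim(theta)]
rho = pi / b                            # importance sampling ratio rho(s, a)
psi = grad_pi / pi[:, None]             # eligibility vector psi(s, a)

# Eq. (2) for one state: E_{a~b}[rho * psi * Q], written as a b-weighted sum.
is_form = (b[:, None] * rho[:, None] * psi * q[:, None]).sum(axis=0)
# All-action form: sum_a grad pi(a|s) Q(s, a); b has cancelled out.
all_action_form = grad_pi.T @ q

print(np.allclose(is_form, all_action_form))   # True
```

The practical difference is that the all-action form needs \(\nabla _\theta \pi \) and a value for every action, but never the behavior probabilities.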

Since the exact action-value function \(Q^\pi (s, a)\) is unknown, the next step is to replace it with an estimator. Because no trajectory samples start from \(s_t\) with an action \(a \ne a_t\), for those actions we can only estimate \(Q^\pi (s_t, a)\) directly with an action-value function approximator \(Q^\omega (s_t, a)\). For \(Q^\pi (s_t, a_t)\), however, trajectory samples beginning with \(s_t\), \(a_t\) are available, so, given the error reduction property of n-step returns [13], we estimate it with an n-step return.

However, because the samples follow a different distribution, directly using the naive n-step return would produce a biased estimate, so this bias must be removed. To avoid the disadvantages of importance sampling, we use the tree-backup algorithm to estimate \(Q^\pi (s_t, a_t)\). The tree-backup algorithm is designed to estimate the action-value function in the off-policy setting. At each step along a trajectory there are several possible actions under the target policy. The one-step target combines the value estimates of these actions, weighted by their probabilities under the target policy. At each step, the behavior policy chooses one of the actions, and one time step later there is a new estimate of that action's value, based on the reward received and the estimated value of the next state. The tree-backup algorithm then forms a new target from the old value estimates of the actions that were not taken and the new estimate of the action that was taken [11]. Iterating this process over n steps gives the n-step tree-backup estimator \(G_{t:t+n}\) of \(Q^{\pi }(s_{t},a_{t})\):

\[ G_{t:t+n} = \sum _{k=t}^{t+n-1} \gamma ^{k-t} \Big( \prod _{i=t+1}^{k} \pi _i \Big) \Big[ r_{k+1} + \gamma \sum _{a \ne a_{k+1}} \pi (a|s_{k+1})\, Q^\omega (s_{k+1},a) \Big] + \gamma ^{n} \Big( \prod _{i=t+1}^{t+n} \pi _i \Big) Q^\omega (s_{t+n},a_{t+n}), \]

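where \(\pi _{i}\) is short for \(\pi (a_{i}|s_{i})\). To improve computational efficiency, we can use the following iterative form:

\[ G_{j:t+n} = r_{j+1} + \gamma \sum _{a \ne a_{j+1}} \pi (a|s_{j+1})\, Q^\omega (s_{j+1},a) + \gamma \pi _{j+1} G_{j+1:t+n}, \qquad j = t+n-1, \ldots , t, \]

starting from \(G_{t+n:t+n} = Q^\omega (s_{t+n},a_{t+n})\).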

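In summary, we replace \(Q^\pi (s_t,a)\) for \(a \ne a_t\) directly with the differentiable action-value function \(Q^\omega (s_t,a)\), and replace \(Q^\pi (s_t, a_t)\) with the n-step tree-backup estimator \(G_{t:t+n}\). This gives the following estimator \(\hat{g}(\theta )\) of the off-policy actor-critic policy gradient \(g(\theta )\), evaluated at states \(s_t\) drawn from the behavior distribution:

\[ \hat{g}(\theta ) = \nabla _\theta \pi (a_t|s_t)\, G_{t:t+n} + \sum _{a \ne a_t} \nabla _\theta \pi (a|s_t)\, Q^\omega (s_t,a), \]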

where \(G_{i:i+n}=r_{i+1}+\gamma \sum _{a \ne a_{i+1}}\pi (a| s_{i+1})Q^\omega (s_{i+1},a)+\gamma \pi (a_{i+1}| s_{i+1})G_{i+1:i+n}\).
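The recursion above translates directly into code. The following Python sketch computes \(G_{t:t+n}\) backwards from the end of a trajectory sample and then forms the all-action estimate \(\hat{g}(\theta )\) for one sampled state. It assumes the recursion stops at \(G_{t+n:t+n}=Q^\omega (s_{t+n},a_{t+n})\) (consistent with the \(n=0\) case discussed below), treats terminal states as having zero value, and takes the per-action policy gradients as a precomputed array; all function and argument names are hypothetical.

```python
import numpy as np


def tree_backup_return(rewards, pi_next, q_next, actions_next, gamma, q_t_at, terminal=False):
    """n-step tree-backup return G_{t:t+n}, with n = len(rewards).

    rewards[j]      = r_{t+j+1}
    pi_next[j]      = pi(. | s_{t+j+1}), target-policy probabilities over all actions
    q_next[j]       = Q^omega(s_{t+j+1}, .)
    actions_next[j] = a_{t+j+1}, the action actually taken in the stored episode
    q_t_at          = Q^omega(s_t, a_t), returned unchanged when n = 0
    terminal        = True if s_{t+n} is terminal (its value is taken as zero)
    No behavior-policy probabilities are needed anywhere.
    """
    n = len(rewards)
    if n == 0:
        return float(q_t_at)
    if terminal:
        g = float(rewards[-1])                       # G_{t+n-1:t+n} = r_{t+n} at episode end
        start = n - 2
    else:
        g = float(q_next[-1][actions_next[-1]])      # G_{t+n:t+n} = Q^omega(s_{t+n}, a_{t+n})
        start = n - 1
    for j in range(start, -1, -1):
        a = actions_next[j]
        pi_j, q_j = np.asarray(pi_next[j]), np.asarray(q_next[j])
        untaken = float(pi_j @ q_j - pi_j[a] * q_j[a])   # sum over a != a_{t+j+1}
        g = rewards[j] + gamma * (untaken + pi_j[a] * g)
    return float(g)


def all_action_gradient(grad_pi_t, q_t, a_t, g_t):
    """g_hat = grad pi(a_t|s_t) * G_{t:t+n} + sum_{a != a_t} grad pi(a|s_t) * Q^omega(s_t, a).

    grad_pi_t has shape [A, d]; row a is grad_theta pi(a|s_t). q_t is Q^omega(s_t, .).
    """
    q_hat = np.array(q_t, dtype=float)
    q_hat[a_t] = g_t           # only the sampled action uses the tree-backup return
    return np.asarray(grad_pi_t, dtype=float).T @ q_hat


g = tree_backup_return(rewards=[1.0, 0.0], pi_next=[[0.5, 0.5], [1.0, 0.0]],
                       q_next=[[2.0, 4.0], [8.0, 0.0]], actions_next=[1, 0],
                       gamma=1.0, q_t_at=0.0)
print(g)  # 6.0, i.e. 1 + 0.5 * 2.0 + 0.5 * (0.0 + 1.0 * 8.0)
```

Working backwards costs one pass over the n stored steps, and the product of target-policy probabilities in the closed form is accumulated implicitly through the factor \(\pi _{j+1}\) at each step.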

One key advantage of the tree-backup algorithm is that it never uses the behavior policy's action probabilities, so the behavior policy no longer needs to be explicitly specified. This matters because the choice of behavior policy directly affects the algorithm's performance, and choosing a reasonable behavior policy, especially for a complex agent, is always a difficult task.

Note that the choice of n reflects a trade-off between bias and variance [15]. Typically, actor-only policy gradient estimators that use the Monte-Carlo return \(R_t\), such as REINFORCE [17], have higher variance and lower bias, whereas actor-critic estimators that use a function approximator as the critic have higher bias and lower variance. The larger n is, the more sampled information \(G_{t: t+n}\) contains, and therefore the smaller its bias but the larger its variance. In the extreme case \(n=0\), \(G_{t: t+n}\) reduces to \(Q^\omega (s_t, a_t)\). In the experimental section, we illustrate this trade-off with a simple experiment.

3.3 Episode-Experience Replay

In practice, experience obtained by trial and error is usually costly, for example in terms of equipment wear or time. If each experience is used to update the networks only once and then discarded, much of it is wasted [7]. Experience replay is a straightforward and effective way to reuse experience. DQN [9] stores experiences from Atari games in a replay memory and reuses them to achieve excellent performance.

Experience replay is clearly useful for one-step TD algorithms. However, tree-backup and other n-step TD algorithms need trajectory samples rather than single-transition samples. In previous work, one typically either executes a behavior policy and uses eligibility traces with importance sampling to learn online along the off-policy trajectory, or uses a behavior policy to generate an off-policy episode and then draws trajectory samples from that episode to learn offline. These sampling methods have inherent drawbacks. First, because the trajectory samples stem from the same episode, they are strongly correlated. Second, they cannot supply training with sufficient samples as efficiently as experience replay does. Last but not least, they also require a behavior policy explicitly represented as action probabilities, which, as discussed above, is often hard to provide.

To overcome these shortcomings, we propose an episode-experience replay technique that combines naive episode-experience replay with experience replay. Unlike experience replay, which stores single transitions \((s_t, a_t, r_{t+1}, s_{t+1}) \thicksim b\), episode-experience replay stores complete episodes \(s_0,a_0,r_1,s_1,a_1,r_2,s_2\ldots \thicksim b\) in an episode-experience pool and selects episodes from the pool at random. Because the policy parameters are updated continually, the episodes stored in the pool were generated by policies with older parameters and can therefore be viewed as off-policy episodes. In the off-policy setting, this provides the agent with plenty of off-policy episodes and greatly improves data efficiency and the speed at which off-policy samples are obtained. However, directly using the consecutive samples \(\{(s_t, a_t, r_{t+1},s_{t+1})_{t=0:T_{end}^i-1}\}_{i=1\ldots M}\) from the selected episodes as a training batch, which we call naive episode-experience replay, is ineffective because of the strong correlations between samples. We therefore combine experience replay with episode-experience replay to obtain off-policy trajectory samples efficiently while reducing the correlations between them. The process is illustrated in Fig. 1.

In this process, we first randomly select m episodes from the episode-experience pool. From each episode we then produce exactly one trajectory sample: we randomly choose one experience in the episode as the starting point of the trajectory sample, and the trajectory ends at the last experience of the episode. When choosing the starting experience, we record its position in the corresponding episode in a list, so that we can quickly return to the trajectory's position in the episode. At the same time, the m starting experiences serve as the training samples for the critic.
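The sampling procedure above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: episodes are stored as lists of hypothetical `Transition` tuples in a bounded first-in-first-out pool, the m episodes are drawn without replacement, and the recorded positions are (index in pool, step) pairs.

```python
import random
from collections import deque
from typing import Any, List, NamedTuple, Tuple


class Transition(NamedTuple):
    state: Any
    action: int
    reward: float        # r_{t+1}, received after taking `action` in `state`
    next_state: Any
    done: bool           # True for the last transition of an episode


class EpisodeReplay:
    """Hypothetical episode-experience pool: stores whole episodes, samples one trajectory per episode."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.episodes: deque = deque(maxlen=capacity)   # oldest episodes are evicted first (assumption)
        self.rng = random.Random(seed)

    def add_episode(self, episode: List[Transition]) -> None:
        if episode:
            self.episodes.append(list(episode))

    def sample(self, m: int) -> Tuple[List[Transition], List[List[Transition]], List[Tuple[int, int]]]:
        """Return (critic samples, actor trajectories, recorded positions) for m random episodes."""
        chosen = self.rng.sample(range(len(self.episodes)), m)   # m distinct episodes
        starts, trajectories, positions = [], [], []
        for ep_idx in chosen:
            episode = self.episodes[ep_idx]
            t = self.rng.randrange(len(episode))    # random starting experience in this episode
            starts.append(episode[t])               # also used as a one-step sample for the critic
            trajectories.append(episode[t:])        # trajectory runs to the end of the episode
            positions.append((ep_idx, t))           # where the start lies, to find it again quickly
        return starts, trajectories, positions


pool = EpisodeReplay(capacity=100)
for length in (3, 5, 2, 4):
    pool.add_episode([Transition(k, 0, 1.0, k + 1, k == length - 1) for k in range(length)])
starts, trajs, pos = pool.sample(m=2)
```

Because each trajectory comes from a different, randomly chosen episode and starts at a random step, consecutive entries of a batch are far less correlated than in naive episode-experience replay. Note that pool indices shift once old episodes are evicted, so recorded positions are only valid until the next insertion.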

3.4 Algorithm

Pseudocode for our method is shown in Algorithm 1. To avoid introducing importance sampling, we train the critic network with deep Q-learning, using as training samples the starting experiences drawn during episode-experience replay. If the network \(Q^\omega \) being updated were also used to compute the target value, the target would change drastically with \(Q^\omega \)'s parameters, which may cause instability. Following [6], we therefore compute the target value with a target network \(Q^{\omega '}\) and use "soft" target updates rather than directly copying the weights.
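The two critic-side pieces mentioned above, the deep Q-learning target computed with the target network and the soft target update, can be sketched with NumPy arrays standing in for network weights. The rate `tau`, the dictionary-of-arrays parameter layout and the helper names are assumptions for illustration; the text does not state the values used.

```python
import numpy as np


def soft_update(target: dict, online: dict, tau: float) -> None:
    """Soft target update omega' <- tau * omega + (1 - tau) * omega', applied in place to float arrays."""
    for name, w in online.items():
        target[name] *= 1.0 - tau
        target[name] += tau * w


def q_learning_target(reward: float, q_next_target: np.ndarray, done: bool, gamma: float) -> float:
    """Deep Q-learning target y = r + gamma * max_a Q^{omega'}(s', a); no bootstrap after the last step."""
    if done:
        return reward
    return reward + gamma * float(np.max(q_next_target))


online = {"W": np.ones((2, 2)), "b": np.zeros(2)}           # hypothetical critic weights omega
target = {k: np.zeros_like(v) for k, v in online.items()}   # zeros only to make the update visible
soft_update(target, online, tau=0.01)                       # target["W"] is now 0.01 everywhere
y = q_learning_target(1.0, np.array([0.5, 2.0]), done=False, gamma=0.9)   # 1 + 0.9 * 2.0
```

With a small `tau`, \(Q^{\omega '}\) tracks \(Q^\omega \) slowly, so the regression target y changes gradually between critic updates.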

4 Experiment

In this section, we evaluate the rationality and effectiveness of our algorithm on several simulation environments from OpenAI Gym. We designed our experiments to investigate the following questions:

1. How do different choices of n affect the algorithm's performance?

2. Compared with the original sampling method, how much does episode-experience replay improve performance?

3. Compared with some commonly used reinforcement learning methods, how much improvement does our algorithm bring?

4.1 Performance Comparison on Different Choices of n

As discussed in Sect. 3, the choice of n reflects the trade-off between variance and bias. Here, we train on the CartPole-v0 environment with \(n=1,3,6,9,15\). Figure 2 compares the performance for the different values of n. The choice of n directly affects the algorithm's performance, and in this environment \(n = 9\) is relatively the best choice in terms of stability, convergence speed and final performance (here, we use naive episode-experience replay to highlight the effect).

4.2 Performance Comparison on Different Sampling Methods

In this subsection, we compare the algorithm's performance when training with two different trajectory sampling methods: naive episode-experience replay and episode-experience replay. As Fig. 3 shows, because of the strong correlations between samples from naive episode-experience replay, its performance fluctuates greatly during training. In terms of both convergence speed and stability, episode-experience replay clearly outperforms naive episode-experience replay.

4.3 Performance Comparison with Other Conventional Algorithms

We compare our algorithm with DQN and the Actor-Critic algorithm on three simulation environments from OpenAI Gym: CartPole-v0, MountainCar-v0 and Acrobot-v0. As Fig. 4 shows, the Actor-Critic policy gradient algorithm is more stable during training than DQN. DQN fluctuates strongly, which may stem from an inherent drawback of value-function methods: a policy derived from a value function can change discontinuously. However, although the Actor-Critic algorithm is more stable than DQN, the final performance it converges to is worse than that of DQN. Table 1 lists the average return of each algorithm; our method achieves the highest average return in all three environments. Overall, in terms of convergence speed and performance stability, our method performs better.

5 Conclusion

In this paper, we study the actor-critic policy gradient problem in the off-policy setting. Because the difference between the sample distributions of the behavior and target policies biases the estimator, and given the limitations of importance sampling, we use the all-action method and the tree-backup algorithm so that the estimator can use samples from the behavior policy directly to estimate the target policy gradient without bias. The all-action method removes the random variable \(a_t\), which avoids importance sampling in the actor. The tree-backup method avoids importance sampling in the critic when an n-step return is used to estimate the action-value function.

In addition, to improve efficiency, we propose an episode-experience replay technique that combines naive episode-experience replay with experience replay to overcome the main disadvantages of previous sampling methods, such as strong correlations between samples, low yield of trajectory samples and the need for an explicitly represented behavior policy.

Experiments on the OpenAI Gym simulation platform demonstrate the advantages of the proposed method over the competing methods.

References

[1] Anschel, O., Baram, N., Shimkin, N.: Averaged-DQN: Variance reduction and stabilization for deep reinforcement learning. arXiv preprint arXiv:1611.01929 (2016)

[2] Degris, T., White, M., Sutton, R.S.: Off-policy actor-critic. CoRR abs/1205.4839 (2012). http://arxiv.org/abs/1205.4839

[3] Fujimoto, S., van Hoof, H., Meger, D.: Addressing function approximation error in actor-critic methods. arXiv preprint arXiv:1802.09477 (2018)

[4] Harutyunyan, A., Bellemare, M.G., Stepleton, T., Munos, R.: Q(\(\lambda \)) with off-policy corrections. In: Ortner, R., Simon, H.U., Zilles, S. (eds.) ALT 2016. LNCS (LNAI), vol. 9925, pp. 305–320. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46379-7_21

[5] Hasselt, H.V.: Double Q-learning. In: Lafferty, J.D., Williams, C.K.I., Shawe-Taylor, J., Zemel, R.S., Culotta, A. (eds.) Advances in Neural Information Processing Systems 23, pp. 2613–2621. Curran Associates Inc. (2010). http://papers.nips.cc/paper/3964-double-q-learning.pdf

[6] Lillicrap, T.P., et al.: Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971 (2015)

[7] Lin, L.J.: Reinforcement learning for robots using neural networks. Technical report. School of Computer Science, Carnegie-Mellon University, Pittsburgh, PA (1993)

[8] Mahmood, A.R., van Hasselt, H.P., Sutton, R.S.: Weighted importance sampling for off-policy learning with linear function approximation. In: Advances in Neural Information Processing Systems, pp. 3014–3022 (2014)

[9] Mnih, V., et al.: Playing Atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602 (2013)

[10] Munos, R., Stepleton, T., Harutyunyan, A., Bellemare, M.: Safe and efficient off-policy reinforcement learning. In: Advances in Neural Information Processing Systems, pp. 1054–1062 (2016)

[11] Precup, D., Sutton, R.S., Singh, S.P.: Eligibility traces for off-policy policy evaluation. In: ICML, pp. 759–766. Citeseer (2000)

[12] Schaul, T., Quan, J., Antonoglou, I., Silver, D.: Prioritized experience replay. arXiv preprint arXiv:1511.05952 (2015)

[13] Sutton, R.S., Barto, A.G.: Reinforcement Learning: An Introduction, vol. 1. MIT Press, Cambridge (1998)

[14] Sutton, R.S., Singh, S., McAllester, D.: Comparing policy-gradient algorithms (2000). http://citeseerx.ist.psu.edu/viewdoc/download

[15] Wang, Z., et al.: Sample efficient actor-critic with experience replay. arXiv preprint arXiv:1611.01224 (2016)

[16] Watkins, C.J., Dayan, P.: Q-learning. Mach. Learn. 8(3–4), 279–292 (1992)

[17] Williams, R.J.: Simple statistical gradient-following algorithms for connectionist reinforcement learning. In: Sutton, R.S. (ed.) Reinforcement Learning. SECS, vol. 173, pp. 5–32. Springer, Boston (1992). https://doi.org/10.1007/978-1-4615-3618-5_2

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Jiang, H., Qian, J., Xie, J., Yang, J. (2018). Episode-Experience Replay Based Tree-Backup Method for Off-Policy Actor-Critic Algorithm. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11256. Springer, Cham. https://doi.org/10.1007/978-3-030-03398-9_48

DOI: https://doi.org/10.1007/978-3-030-03398-9_48

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03397-2

Online ISBN: 978-3-030-03398-9

eBook Packages: Computer Science, Computer Science (R0)