Abstract

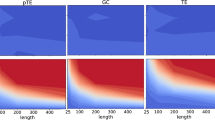

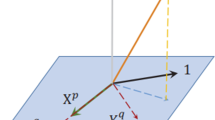

In this paper, we propose a novel definition of Wiener causality, based on relative entropy, to describe the intervention between time series. In comparison to the classic Granger causality, which concerns only the interdependence of the second-order statistical moments, this definition theoretically takes all statistical aspects into consideration. Furthermore, under the Gaussian assumption, the causality involves not only the interventions between the covariances but also those between the means. This provides an integrated description of statistical causal intervention. Additionally, our implementation requires minimal assumptions on the data, which allows one to easily combine modern predictive models with causal inference. We demonstrate that the proposed relative-entropy-based causality (REC) outperforms the standard causality method in a series of simulations under various conditions.
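As a point of reference for the Gaussian case, the standard closed form of the relative entropy between two \(k\)-dimensional Gaussian distributions shows how both means and covariances can enter a relative-entropy-based measure. The symbols \(\mu_0, \mu_1, \varSigma_0, \varSigma_1\) and \(k\) are notation assumed here for illustration only; the abstract does not state which pair of distributions the paper compares.

\[
D_{\mathrm{KL}}\big(\mathcal{N}(\mu_0,\varSigma_0)\,\|\,\mathcal{N}(\mu_1,\varSigma_1)\big)
= \frac{1}{2}\Big[\mathrm{tr}\big(\varSigma_1^{-1}\varSigma_0\big)
+ (\mu_1-\mu_0)^{\top}\varSigma_1^{-1}(\mu_1-\mu_0)
- k + \ln\frac{\det\varSigma_1}{\det\varSigma_0}\Big]
\]

The quadratic term in \(\mu_1-\mu_0\) vanishes when the means agree, leaving an expression that depends on the covariances alone.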

Notes

1. For the high-dimensional case, similar results can be derived in the same fashion.

2. Also written as \(GC_{y\rightarrow x}=\ln \{\mathrm{tr}[\varSigma (x|x^{p})]/\mathrm{tr}[\varSigma (x|x^{p}\oplus y^{q})]\}\).
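The trace form of Granger causality in Note 2 can be sketched numerically as follows. This is a minimal illustration, not the authors' implementation. It assumes that \(\varSigma(x|x^{p})\) denotes the residual covariance of a linear least-squares prediction of \(x\) from its own \(p\) past values, and that \(x^{p}\oplus y^{q}\) denotes the concatenation of the \(p\) past values of \(x\) with the \(q\) past values of \(y\). The function names `lagged`, `residual_cov` and `granger_trace`, and the simulated data, are hypothetical.

```python
import numpy as np

def lagged(series, order, start):
    """For each t in [start, T), stack series[t-1], ..., series[t-order] in one row."""
    T = series.shape[0]
    return np.hstack([series[start - k : T - k] for k in range(1, order + 1)])

def residual_cov(target, regressors):
    """Residual covariance of a least-squares linear fit (with intercept)."""
    design = np.hstack([np.ones((len(target), 1)), regressors])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    resid = target - design @ coef
    return resid.T @ resid / len(target)

def granger_trace(x, y, p=1, q=1):
    """ln( tr Sigma(x | x^p) / tr Sigma(x | x^p (+) y^q) ), assuming linear predictors."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    y = np.asarray(y, dtype=float).reshape(len(y), -1)
    start = max(p, q)              # first time index with all lags available
    target = x[start:]
    x_past = lagged(x, p, start)
    y_past = lagged(y, q, start)
    restricted = residual_cov(target, x_past)
    full = residual_cov(target, np.hstack([x_past, y_past]))
    return float(np.log(np.trace(restricted) / np.trace(full)))

# Hypothetical simulation: y drives x with a one-step delay, x does not drive y.
rng = np.random.default_rng(0)
T = 2000
y = rng.standard_normal(T)
noise = rng.standard_normal(T)
x = np.zeros(T)
for t in range(1, T):
    x[t] = 0.5 * x[t - 1] + 0.8 * y[t - 1] + noise[t]

gc_yx = granger_trace(x, y)  # influence of y on x
gc_xy = granger_trace(y, x)  # influence of x on y
print(f"GC y->x: {gc_yx:.3f}, GC x->y: {gc_xy:.3f}")
```

Because the restricted regression is nested in the full one, the in-sample value is non-negative up to rounding. In this simulation the \(y\rightarrow x\) value should be clearly larger than the \(x\rightarrow y\) value.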

Acknowledgments

This work is jointly supported by the National Natural Science Foundation of China under Grant No. 61673119, the Key Program of the National Science Foundation of China No. 91630314, the Laboratory of Mathematics for Nonlinear Science, Fudan University, and the Shanghai Key Laboratory for Contemporary Applied Mathematics, Fudan University.

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Chen, J., Feng, J., Lu, W. (2018). A Wiener Causality Defined by Relative Entropy. In: Cheng, L., Leung, A., Ozawa, S. (eds) Neural Information Processing. ICONIP 2018. Lecture Notes in Computer Science, vol 11302. Springer, Cham. https://doi.org/10.1007/978-3-030-04179-3_11

DOI: https://doi.org/10.1007/978-3-030-04179-3_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-04178-6

Online ISBN: 978-3-030-04179-3

eBook Packages: Computer Science, Computer Science (R0)