Abstract

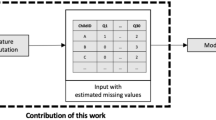

The benefits of caregivers implementing Pivotal Response Treatment (PRT) with children on the autism spectrum are empirically supported by current Applied Behavior Analysis (ABA) research. Training caregivers in PRT involves instruction and feedback from trained clinicians. As part of the training and evaluation process, clinicians systematically score video probes of caregivers implementing PRT in several categories, including whether an instruction was given while the child was paying adequate attention to the caregiver. This paper examines how machine learning algorithms can aid in classifying these video probes, focusing primarily on how attention can be inferred automatically through video processing. To this end, a dataset was created from video probes of PRT sessions and used to train machine learning models. The ambiguity inherent in these videos poses substantial challenges for training an intelligent feedback system.
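The abstract does not say which features or models were used, so the following is only a hedged illustration of how attention at the moment of an instruction might be inferred from video. It assumes per-frame 2D body keypoints for the child (for example from a pose estimator) and a known caregiver position, computes a hypothetical head-orientation score, learns a one-feature decision stump from clinician-labelled frames, and takes a majority vote over the frames leading up to an instruction. The keypoint names (`neck`, `nose`), the functions `facing_score`, `fit_threshold`, and `attending_at_instruction`, and the default window length are all illustrative assumptions, not the authors' method.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels, y pointing down


def facing_score(child: Dict[str, Point], caregiver: Point) -> float:
    """Hypothetical per-frame attention feature (an assumption, not the paper's).

    Cosine of the angle between the child's head direction (neck -> nose
    keypoint) and the direction from the child's neck to the caregiver.
    Values near 1 suggest the head points toward the caregiver in the
    image plane; negative values suggest it points away.
    """
    nx, ny = child["neck"]
    hx, hy = child["nose"]
    head = (hx - nx, hy - ny)
    to_caregiver = (caregiver[0] - nx, caregiver[1] - ny)
    norm = math.hypot(*head) * math.hypot(*to_caregiver)
    if norm == 0.0:
        return 0.0  # degenerate keypoints: return a neutral score of 0
    return (head[0] * to_caregiver[0] + head[1] * to_caregiver[1]) / norm


def fit_threshold(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Train a one-feature decision stump on clinician-labelled frames.

    Returns the threshold t that maximises training accuracy of the rule
    "score >= t means attending" (label 1).
    """
    best_t, best_acc = 0.0, -1.0
    for t in sorted(set(scores)):
        correct = sum((s >= t) == bool(y) for s, y in zip(scores, labels))
        acc = correct / len(labels)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t


def attending_at_instruction(
    frame_scores: Sequence[float],
    threshold: float,
    instruction_frame: int,
    window: int = 15,  # hypothetical: roughly half a second at 30 fps
) -> bool:
    """Majority vote over the frames leading up to, and including, the
    frame where the caregiver gives an instruction."""
    start = max(0, instruction_frame - window)
    recent = frame_scores[start:instruction_frame + 1]
    votes = sum(s >= threshold for s in recent)
    return 2 * votes > len(recent)
```

A practical system would likely replace the single hand-crafted score with richer features and a stronger trained classifier; the decision stump only keeps the sketch dependency-free while still learning its threshold from labelled data, and the windowed vote smooths over frames where keypoints are noisy or briefly lost.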

Acknowledgement

The authors thank Arizona State University and the National Science Foundation for their funding support. This material is partially based upon work supported by the National Science Foundation under Grant No. 1069125.

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Heath, C.D.C., Venkateswara, H., McDaniel, T., Panchanathan, S. (2018). Detecting Attention in Pivotal Response Treatment Video Probes. In: Basu, A., Berretti, S. (eds) Smart Multimedia. ICSM 2018. Lecture Notes in Computer Science, vol 11010. Springer, Cham. https://doi.org/10.1007/978-3-030-04375-9_21

DOI: https://doi.org/10.1007/978-3-030-04375-9_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-04374-2

Online ISBN: 978-3-030-04375-9

eBook Packages: Computer Science, Computer Science (R0)