Abstract

Transfer learning algorithms utilize knowledge from a data-rich source domain to learn a model in the target domain, where labeled data is scarce. This paper presents a novel solution to the challenging problem of Heterogeneous Transfer Learning (HTL), where the source and target tasks have heterogeneous feature and label spaces. Unlike common-space-based HTL algorithms, the proposed HTL algorithm adapts the source data to the target task. The correspondence required for aligning the heterogeneous features of the source and target domains is obtained through labels across the two domains that are semantically aligned using web-induced knowledge. The experimental results suggest that the proposed algorithm performs significantly better than state-of-the-art transfer approaches on three diverse real-world transfer tasks.



1 Introduction

Traditional supervised algorithms require sufficient labeled data to learn a computational model that generalises reasonably to unseen examples. However, for many real-world problems, collecting labeled data is expensive and cumbersome. Transfer learning approaches utilise knowledge from an auxiliary domain with abundant labeled data (the source domain) to perform tasks in a domain with scarce labeled data (the target domain). HTL [35] algorithms transfer knowledge from one domain to another when the two domains have different features. Because the feature spaces are heterogeneous, the first task of any HTL algorithm is to choose a “common” space for adaptation. The second task is to bridge the differences that arise when data from both domains is projected onto the common space. This is generally achieved by leveraging pivotal information shared between the domains. These pivots can take the form of instance correspondences [39], overlapping features [13], a shared label space [16, 28, 33], common meta-features or a latent space [10, 18, 20, 38], or other task-specific or task-independent information [5, 6].

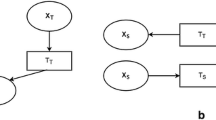

Latent Space Transformation (LST) approaches to HTL project the data from both domains onto a shared subspace for adaptation, and thus learn two transformations, one for the source domain and one for the target domain. Feature Space Remapping (FSR) approaches, on the other hand, treat the feature space of one of the two domains as the common space and learn a single transformation that maps data from the source domain to the target domain or vice versa. Recent state-of-the-art HTL approaches leverage the common label space either to determine cross-domain correspondences for learning the transformation(s) [16, 28, 33] or to formulate the estimation of the transformations as a minimisation objective [17, 26, 27, 31, 37]. However, these approaches are not directly applicable to knowledge transfer between domains with heterogeneous label spaces.

We propose a novel FSR algorithm (see [1, 23] for our preliminary work) that works even when there are no shared features or instance correspondences between the source and target domains. It uses label-space dependencies estimated through the Normalised Google Distance to co-align the data from the two domains in the target space while preserving the original structure of the source data. Being an FSR framework, the proposed approach avoids the need to determine an optimal shared subspace, as LST approaches must, and unlike [17, 27], it does not suffer from the out-of-sample extension problem. The approach also utilises source instances whose labels are absent from the target domain, by encoding the absent labels through their relationships to the target labels. Along with inter-label dependencies across the heterogeneous label spaces, the approach also exploits intra-label relationships among the target labels to learn a robust target model.

1.1 Problem Definition

Let \(S \in \mathbb {R}^{n_S \times d_S}\) and \(T \in \mathbb {R}^{n_T \times d_T}\) be the source and target domain data respectively, where \(n_S\) and \(n_T\) denote the number of labeled data points in each domain and \(n_S\ggg n_T\). The number of features in the source and target domain is denoted by \(d_S\) and \(d_T\) respectively. The features of the two domains are different, and \(d_S \ne d_T\). \(x^S\) denotes a labeled source instance with associated label \(y^S\). Similarly, \(x^T\) is a labeled target instance with label \(y^T\). The source and target label spaces may or may not overlap. However, we assume that semantic relationships exist within and across the label spaces. Let the number of unique labels in the source and target domain be \(L_S\) and \(L_T\) respectively. The goal of the proposed approach is to learn relevant source data points \(B_S \in \mathbb {R}^{n_S \times d_T}\) that adapt well to the target task. This set of relevant source data is used along with the limited target data \(\{x^T_i,y^T_i\}_{i=1}^{n_T}\) to learn the model for the target task.

2 Related Work

There have been many approaches to transfer learning, some of which have been extended to heterogeneous transfer learning. Manifold-alignment-based LST approaches [21, 24, 31, 34] can be viewed as constrained dimensionality reduction frameworks that seek a low-dimensional embedding for multiple domains in which the geometric structure of the original domains is preserved. These approaches assume that the heterogeneous source and target domains share a smooth low-dimensional manifold (subspace). However, such a strong manifold assumption may not hold for real-world heterogeneous transfer tasks, especially for datasets with high-dimensional features [37]. Supervised Heterogeneous Feature Augmentation (HFA) [17] is an SVM-based LST optimisation framework that uses a common augmented feature space for adaptation. Since HFA does not return the transformation matrices explicitly, it suffers from the out-of-sample extension problem. Subspace Co-Projection (SCP) [37] is a semi-supervised LST optimisation framework that learns the model weights in the projected subspace simultaneously with the transformations. The closed-form solution of SCP requires inverting large matrices for high-dimensional datasets. Co-regularised Heterogeneous Transfer Learning (Co-HTL) [8] is a supervised LST approach that jointly aligns the data from the domains in the shared subspace. A common limitation of these LST approaches is that they require a grid search over the dimension (d) of the shared subspace to find the optimal subspace.

In contrast, the FSR approaches directly map the features across the domains. However, learning the direct transformation involves estimation of a larger set of parameters in comparison with LST approaches. Sparse Heterogeneous Feature Remapping (SHFR) [16] leverages the common labels across the domains encoded using error correcting output codes (ECOC) as pivots to generate cross-domain correspondences. Supervised Heterogeneous Domain Adaptation using Random Forests (SHDA-RF) [33] relies on common label distributions that are obtained from leaf nodes of decision trees trained on labeled source and target domain data as the pivots. Both SHFR and SHDA-RF rely on a common set of labels between source and target domain to estimate correspondences and hence, cannot be directly applied to bridge two domains with heterogeneous label spaces.
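To make the parameter-count remark concrete: with dense linear maps, an LST approach with a shared subspace of dimension d learns a \(d_S \times d\) and a \(d_T \times d\) projection, whereas an FSR approach learns one \(d_S \times d_T\) matrix. The sketch below simply counts both; the dimensions are hypothetical values chosen for illustration, not figures from our experiments, and it ignores sparsity (SHFR, for example, learns a sparse mapping).

```python
def lst_params(d_s: int, d_t: int, d: int) -> int:
    """Entries in two dense linear projections onto a shared d-dimensional subspace."""
    return d_s * d + d_t * d


def fsr_params(d_s: int, d_t: int) -> int:
    """Entries in one dense linear map from source features to target features."""
    return d_s * d_t


# Hypothetical dimensions, for illustration only.
d_s, d_t, d = 5000, 4000, 100
print(lst_params(d_s, d_t, d))  # 900000
print(fsr_params(d_s, d_t))     # 20000000
```

The FSR count does not depend on d, which is why no search over the subspace dimension is needed, but it grows with the product \(d_S d_T\).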

The proposed FSR optimisation framework addresses the limitations of the above-mentioned approaches. It bridges domains with heterogeneous feature and label spaces in a generic setting without relying on instance or feature correspondences. As the experimental results show, the proposed algorithm transfers knowledge effectively even when there are very few labeled instances in the target domain.

3 Proposed Methodology

Unlike LST approaches, which have the inherent limitation of having to determine the optimal subspace for transfer, the proposed HTL algorithm, Web-Induced Heterogeneous Transfer Learning with Sample Selection (WIHTLSS), is conceived as an FSR minimisation objective with the goal of constructively utilising data from the source and target domains to learn a robust target model. Given the source domain data S and target domain data T, the proposed objective (Eq. 1) iteratively minimises the overall loss incurred by jointly aligning the data of the source and target domains in the target space while learning the transformed source data \(B_S\) and the transformation \(P \in \mathbb {R}^{d_S \times d_T}\) that links the heterogeneous features of the source and target domains.

The first term, L, in Eq. 1 preserves the original structure in the transformed source data, while the second and third terms, D and G, align the transformed source data with the target data distribution. Together, these terms determine the extent of alignment between transformed source and target instances: excluding D and G would not adapt the transformed source data to the target, whereas excluding L would overly bias the transformed source instances towards the limited labeled target instances, so that the model would not generalise across the target domain. There is therefore a trade-off between leveraging the relatedness to the source domain and the extent to which the transformed source data is adapted to the target task. This trade-off is regulated by the hyper-parameters \(\beta \) and \(\kappa \). The last term, R, is a regulariser that prevents overfitting.

We define L in terms of the reconstruction error (Eq. 2), which measures the extent to which the structure of the original source data is preserved in the target domain. The reconstruction error computes the loss incurred due to projection of source data, i.e., the difference between the original domain data and the transformed data being remapped to the original space.

Here, \(P' \in \mathbb {R}^{d_T \times d_S}\) denotes the transpose of the transformation P. The reconstruction error exploits the relatedness of the source and target domains to fill regions of the target space that lack labeled target data. An added benefit of using the reconstruction error is that unlabeled data can be used to induce regularization, which in turn helps to learn a more robust transformation.
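Eq. 2 is not reproduced in this version of the text. A minimal sketch, assuming the common squared-Frobenius form \(L = \lVert S - B_S P' \rVert_F^2\), which matches the description above: \(B_S P'\) remaps the transformed source data (\(n_S \times d_T\)) back to the original \(d_S\)-dimensional space and is compared with S. The function name and the absence of any scaling constant are assumptions.

```python
import numpy as np


def reconstruction_error(S: np.ndarray, B_s: np.ndarray, P: np.ndarray) -> float:
    """Assumed form of L: squared Frobenius norm of S - B_S P'.

    S:   (n_S, d_S) original source data
    B_s: (n_S, d_T) transformed source data
    P:   (d_S, d_T) transformation; P.T plays the role of P'
    """
    residual = S - B_s @ P.T  # remap B_S to the source space and compare with S
    return float(np.sum(residual ** 2))


# Toy sizes: n_S = 6, d_S = 4, d_T = 3 (random data, for illustration only).
rng = np.random.default_rng(0)
B_s = rng.normal(size=(6, 3))
P = rng.normal(size=(4, 3))
S = B_s @ P.T  # source data that B_S and P reproduce exactly
print(reconstruction_error(S, B_s, P))        # 0.0
print(reconstruction_error(S, B_s + 0.1, P))  # positive: structure is no longer preserved
```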

The second and third terms (D and G), which measure the misalignment between transformed source and target instances, are defined in terms of weighted pairwise distances between the transformed source and target instances (presented in Eq. 3).

Inspired by the contrastive loss [29], the second term D constrains the transformed source instances to be closer to the target instances with the same or related labels whereas the third term G ensures that dissimilar transformed source instances are pushed apart and kept at a minimum distance m. The similarity between the labels of the \(i^{th}\) transformed source instance and the \(j^{th}\) labeled target instance (denoted as \(W_{ij}\)) is assigned as the weight for aligning them.
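Eq. 3 is likewise not reproduced here. A minimal sketch, assuming D and G follow the usual contrastive-loss pattern [29]: D sums \(W_{ij}\lVert b_i - x^T_j \rVert^2\) over all source/target pairs, and G sums \((1 - W_{ij})\max(0, m - \lVert b_i - x^T_j \rVert)^2\), so dissimilar pairs are penalised only while they are closer than the margin m. Here \(b_i\) is the \(i\)-th row of \(B_S\); the function name and the use of \(1 - W_{ij}\) as the dissimilarity weight are assumptions (the latter is suggested by the definition of \(W_D\) in Step 2).

```python
import numpy as np


def alignment_terms(B_s, X_t, W, m=1.0):
    """Assumed contrastive-style misalignment terms (D, G).

    B_s: (n_S, d_T) transformed source instances b_i
    X_t: (n_T, d_T) labeled target instances x^T_j
    W:   (n_S, n_T) label similarities W_ij in [0, 1]
    m:   margin for dissimilar pairs
    """
    # Pairwise Euclidean distances ||b_i - x^T_j||, shape (n_S, n_T).
    dist = np.linalg.norm(B_s[:, None, :] - X_t[None, :, :], axis=2)
    D = np.sum(W * dist ** 2)                                # pull related pairs together
    G = np.sum((1.0 - W) * np.maximum(0.0, m - dist) ** 2)  # push unrelated pairs past m
    return float(D, ) if False else (float(D), float(G))


B_s = np.array([[0.0, 0.0], [3.0, 0.0]])
X_t = np.array([[0.0, 0.0]])
print(alignment_terms(B_s, X_t, np.array([[1.0], [0.0]])))  # (0.0, 0.0): well aligned
print(alignment_terms(B_s, X_t, np.array([[0.0], [1.0]])))  # (9.0, 1.0): labels swapped
```

With the similarity weights swapped, the related instance is 3 units away (D = 9) and the unrelated one sits inside the margin (G = 1), so both terms flag the misalignment.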

As the domains have heterogeneous labels, weighting the distance based on the relatedness of the labels allows the use of source data tagged with related labels, when the exact target label is absent in the source domain. We define the encoding of the source labels in terms of target labels using the Normalised Google Distance (NGD) [7]. Equation 4 defines the NGD between two search keywords \(y_1\) and \(y_2\), where f() denotes the number of web page hits returned by the Google search engine and N is the number of pages indexed by Google multiplied by the average number of singleton search keywords on those pages.
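For reference, the NGD of Cilibrasi and Vitányi [7] is \(\mathrm{NGD}(y_1,y_2) = \frac{\max\{\log f(y_1), \log f(y_2)\} - \log f(y_1,y_2)}{\log N - \min\{\log f(y_1), \log f(y_2)\}}\), where \(f(y_1,y_2)\) is the number of pages containing both keywords. The sketch below evaluates this formula from hit counts; the counts in the example are made-up numbers rather than real search-engine results, and querying a search engine is outside its scope.

```python
import math


def ngd(f_y1: float, f_y2: float, f_both: float, n: float) -> float:
    """Normalised Google Distance from page-hit counts.

    f_y1, f_y2: hits for each keyword alone
    f_both:     hits for pages containing both keywords
    n:          normalising constant N (the ratio is independent of the log base)
    """
    lx, ly, lxy = math.log(f_y1), math.log(f_y2), math.log(f_both)
    return (max(lx, ly) - lxy) / (math.log(n) - min(lx, ly))


# Made-up counts, for illustration only.
print(ngd(1000, 1000, 1000, 1e9))           # 0.0: the keywords always co-occur
print(round(ngd(1e6, 1e4, 1e2, 1e10), 4))   # 0.6667
```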

Since NGD is a dissimilarity measure that takes values in \([0,\infty )\), we convert it into a similarity measure W with values in [0,1] (Eq. 5).

where Z denotes the maximum NGD score obtained from the labels across the domains. Using the similarity matrix W, we induce semantic co-alignment in our framework by exploiting the inter-label space similarities.
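Eq. 5 is not reproduced here. One mapping consistent with the description (values in [0, 1], normalised by the maximum NGD score Z) is \(W_{ij} = 1 - \mathrm{NGD}(y_i, y_j)/Z\); the sketch below assumes that form. The NGD values and the label names in the comments are hypothetical.

```python
def ngd_to_similarity(ngd_matrix):
    """Assumed Eq. 5 form: W_ij = 1 - NGD_ij / Z, with Z the max NGD over all label pairs."""
    z = max(max(row) for row in ngd_matrix)
    if z == 0:
        # Every pair has zero distance: treat all pairs as fully similar.
        return [[1.0 for _ in row] for row in ngd_matrix]
    return [[1.0 - v / z for v in row] for row in ngd_matrix]


# Hypothetical NGD scores: rows = source labels, columns = target labels.
ngd_scores = [[0.2, 0.8],   # e.g. "monarch" vs ("butterfly", "crab")
              [0.9, 0.3]]   # e.g. "lobster" vs ("butterfly", "crab")
W = ngd_to_similarity(ngd_scores)
print([[round(w, 3) for w in row] for row in W])
```

Under this assumption, identical labels (NGD 0) map to a similarity of 1 and the most distant label pair maps to 0.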

While minimising the inter-domain differences using the label information, there is a significant risk of over-fitting on the limited target training data. Hence, we adopt an explicit regulariser R(., .) in the objective function to penalise over-fitting as depicted in Eq. 6.

The overall objective J() is given in Eq. 7.
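The equations themselves are not reproduced in this version of the text. As a reading aid, the following block assembles one plausible form of the overall objective from the verbal descriptions of L, D, G and R above; it is an assumption, not the paper's Eq. 7. It assumes a squared-Frobenius reconstruction term, contrastive-style D and G, and an L2 regulariser on both variables (in line with the L2 norm constraints mentioned in the summary), with \(b_i\) denoting the \(i\)-th row of \(B_S\). The paper's scaling constants may differ.

```latex
\begin{aligned}
J(B_S, P) ={}& \lVert S - B_S P' \rVert_F^2
  + \beta \sum_{i=1}^{n_S}\sum_{j=1}^{n_T} W_{ij}\,\lVert b_i - x^T_j \rVert_2^2 \\
&+ \kappa \sum_{i=1}^{n_S}\sum_{j=1}^{n_T} (1 - W_{ij})\,
   \max\bigl(0,\; m - \lVert b_i - x^T_j \rVert_2\bigr)^2
  + \lambda \bigl(\lVert P \rVert_F^2 + \lVert B_S \rVert_F^2\bigr)
\end{aligned}
```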

The proposed optimisation problem is not jointly convex in the two variables \(B_S\) and P. However, it is convex in each of them when the other is fixed. We therefore use an alternating algorithm (similar in spirit to the E-M procedure) for this unconstrained optimisation, iteratively fixing one variable and estimating the other until convergence. The problem can be solved with either gradient-based or Hessian-based methods. Hessian-based methods such as quasi-Newton converge faster than gradient-based methods but require substantial memory (\(O((d_S d_T)^2)\)) for high-dimensional datasets. The cross-lingual text transfer tasks involve high-dimensional datasets, whereas the cross-domain activity recognition tasks have a much smaller dimensionality. Hence, we use the Conjugate Gradient Descent (CGD) method [9] (gradient-based) for the cross-lingual transfer tasks and the quasi-Newton method (Hessian-based) for the cross-domain activity recognition tasks. The optimisation routine comprises two alternating steps.

Step 1: Fix \(B_S\), and solve for P, using the gradient update for P.

After updating P, the next step involves solving for \(B_S\).

Step 2: Use P from Step 1 and update the value of \(B_S\).

Here, \(W_S, W_D \in \mathbb {R}^{n_S \times n_S}\) are diagonal matrices with \({(W_S)}_{ii} = \sum _{j=1}^{n_T}{W_{ij}}\) and \({(W_D)}_{ii} = \sum _{j=1}^{n_T}{(1 - W_{ij})}\); since each \(W_{ij} \in [0,1]\), their diagonal entries lie in \([0, n_T]\). The two alternating steps of the proposed algorithm are repeated until convergence. These steps are summarized in Algorithm 1.
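The two alternating steps can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's update rules: it uses an assumed squared-Frobenius reconstruction term, the pull term D, and an L2 regulariser on both variables; it omits the margin term G (which is presumably where \(W_D\) would enter); and it takes plain fixed-step gradient steps for a fixed number of iterations instead of CGD or quasi-Newton updates with a convergence test. It does show where \(W_S\) appears: the gradient of \(\beta D\) with respect to \(B_S\) is \(2\beta(W_S B_S - W T)\). All function names and default values are hypothetical.

```python
import numpy as np


def objective(S, T, W, B, P, beta, lam):
    """Assumed objective without the margin term G: L + beta * D + lam * R."""
    rec = np.sum((S - B @ P.T) ** 2)                              # L: reconstruction error
    dist2 = np.sum((B[:, None, :] - T[None, :, :]) ** 2, axis=2)  # ||b_i - x^T_j||^2
    reg = np.sum(P ** 2) + np.sum(B ** 2)                         # R: L2 penalty
    return float(rec + beta * np.sum(W * dist2) + lam * reg)


def alternate(S, T, W, d_t, beta=0.1, lam=0.1, lr=1e-3, iters=200, seed=0):
    """Alternate fixed-step gradient updates of P (B fixed) and B (P fixed)."""
    rng = np.random.default_rng(seed)
    P = 0.1 * rng.normal(size=(S.shape[1], d_t))  # (d_S, d_T)
    B = S @ P                                     # initialise B_S as the projected source data
    W_S = np.diag(W.sum(axis=1))                  # (W_S)_ii = sum_j W_ij
    history = [objective(S, T, W, B, P, beta, lam)]
    for _ in range(iters):
        # Step 1: fix B, gradient step on P (only L and R depend on P).
        grad_P = -2.0 * (S - B @ P.T).T @ B + 2.0 * lam * P
        P = P - lr * grad_P
        # Step 2: fix P, gradient step on B; beta * D contributes 2 * beta * (W_S B - W T).
        grad_B = (-2.0 * (S - B @ P.T) @ P
                  + 2.0 * beta * (W_S @ B - W @ T)
                  + 2.0 * lam * B)
        B = B - lr * grad_B
        history.append(objective(S, T, W, B, P, beta, lam))
    return B, P, history


# Toy data: n_S = 30, d_S = 8, n_T = 5, d_T = 3 (random, for illustration only).
rng = np.random.default_rng(1)
S = rng.normal(size=(30, 8))
T = rng.normal(size=(5, 3))
W = rng.uniform(size=(30, 5))
B_s, P, history = alternate(S, T, W, d_t=3)
print(history[0], history[-1])  # the objective decreases across iterations
```

With a step size this small relative to the curvature of each block, every gradient step lowers the objective, which mirrors the monotone decrease expected from the alternating scheme.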

Existing HTL algorithms assume that knowledge is transferred to a different but related target domain [25]. As the feature spaces are disparate, quantifying the extent of this relatedness a priori is hard. In practice, the source and target domain data can be very distant in real-world tasks, and only a handful of source data might be relevant to the target task. Since the proposed optimisation objective brings similar instances closer while keeping dissimilar ones apart, we define a transformed source instance to be relevant if it lies in close proximity to at least one target instance. We select only the k nearest transformed source instances for every target instance to train the final model. Selecting relevant source instances in this way helps to avoid negative transfer at the instance level to a certain extent. The optimal value of k is obtained through experimentation on a validation set. The extra cost of finding the nearest neighbors, \(O(n_S n_T d_T)\), is negligible compared with the overall cost of the optimisation routine.
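The relevance criterion can be sketched as the union of per-target k-nearest-neighbor sets. Assumptions: Euclidean distance in the target space and a brute-force distance matrix, which is adequate for moderate \(n_S\) and \(n_T\); the helper name is hypothetical. The returned indices select the rows of \(B_S\) used to train the final model.

```python
import numpy as np


def select_relevant_source(B_s: np.ndarray, X_t: np.ndarray, k: int) -> np.ndarray:
    """Indices of transformed source instances that are among the k nearest
    neighbors (Euclidean) of at least one labeled target instance."""
    # dist[i, j] = ||b_i - x^T_j||; computing it costs O(n_S * n_T * d_T).
    dist = np.linalg.norm(B_s[:, None, :] - X_t[None, :, :], axis=2)
    k = min(k, B_s.shape[0])
    # For each target column, keep the k source rows with the smallest distance.
    nearest = np.argsort(dist, axis=0, kind="stable")[:k, :]
    return np.unique(nearest)  # sorted union over all target instances


# Toy 1-D example: the source point at 20.0 is far from every target point.
B_s = np.array([[0.0], [0.1], [5.0], [5.2], [20.0]])
X_t = np.array([[0.0], [5.0]])
print(select_relevant_source(B_s, X_t, k=1).tolist())  # [0, 2]
print(select_relevant_source(B_s, X_t, k=2).tolist())  # [0, 1, 2, 3]
```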

3.1 Merging Heterogeneous Label Spaces

As the label spaces are heterogeneous, we first link them before using the relevant transformed source data and the target data T to train the final model. Given \(L_T\) unique labels in the target domain, we encode the label y of a training instance as a vector of size \(L_T\) whose \(i^{th}\) entry is the semantic similarity, computed via NGD, between y and the \(i^{th}\) target label. This encoding captures the inter-label-space similarities between the source and target domains as well as the intra-label-space similarities within the target domain. These associations between labels within and across the domains provide additional discriminative information for learning a robust model. Replacing the label y with its encoding for every labeled instance \((x,y) \in (B_S \cup T)\) turns the task into a multi-output regression problem. Independent single-output regressors ignore the relationships between outputs, so we capture them through stacked regression [19], a two-stage process that feeds the first-stage predictions as augmented input to the second stage. For an unseen target instance, the target label whose encoding is closest (in Euclidean distance) to the predicted values is chosen as the predicted label.
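The label encoding and the final decoding step can be sketched as follows. The similarity table stands in for NGD-derived values and is entirely hypothetical, as are the helper names; the stacked regressor itself is not implemented, and `prediction` stands for its output vector.

```python
import math


def encode_label(label, target_labels, sim):
    """Encode a (source or target) label as its similarities to every target label.

    sim: dict mapping (label, target_label) -> similarity in [0, 1].
    """
    return [sim[(label, t)] for t in target_labels]


def decode(prediction, target_labels, sim):
    """Return the target label whose encoding is closest (Euclidean) to the prediction."""
    return min(target_labels,
               key=lambda t: math.dist(prediction, encode_label(t, target_labels, sim)))


# Hypothetical similarities; the target-target entries carry intra-label relationships.
target_labels = ["butterfly", "crab"]
sim = {
    ("butterfly", "butterfly"): 1.0, ("butterfly", "crab"): 0.2,
    ("crab", "butterfly"): 0.2, ("crab", "crab"): 1.0,
    ("monarch", "butterfly"): 0.8, ("monarch", "crab"): 0.1,  # a source-only label
}
print(encode_label("monarch", target_labels, sim))  # [0.8, 0.1]
print(decode([0.9, 0.3], target_labels, sim))       # butterfly
```

A source instance labeled "monarch" thus becomes a regression target close to, but not identical with, the "butterfly" encoding, which is how source labels absent from the target domain can still contribute.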

4 Experiments

The performance of WIHTLSS is compared against the following baseline and state-of-the-art transfer approaches:

- Random Forest (BRF) [22]: We train a random forest of 100 decision trees on the target training data only, where each tree is learned using \(\sqrt{d_T}\) features [32]. Since the labeled target data is very limited, instance bagging is not used for tree construction.

- Support Vector Machine with Error Correcting Output Codes (SVM-ECOC): We use a linear kernel and Error Correcting Output Codes (ECOC) on the target data to obtain the SVM baseline results. The optimal value of the box-penalty parameter is selected on the validation set.

- Sparse Heterogeneous Feature Remapping (SHFR) [16]: SHFR-ECOC is an FSR approach based on SVM-ECOC that utilises common labels as pivots to generate cross-domain correspondences in the form of SVM weight vectors. A linear and sparse mapping is learned by applying the Least Absolute Shrinkage and Selection Operator (LASSO) [30] to these correspondences. We perform a grid search to obtain the optimal box-constraint parameter and the optimal length of the Error Correcting Output Codes using a validation set (for every fold). Since SHFR-ECOC reuses the source SVM model to evaluate transfer performance, to ensure a fair comparison against WIHTLSS we modify the original approach to SHFR-RF, where we train a random forest model on the transformed source data together with the target data.

- Supervised Heterogeneous Domain Adaptation using Random Forests (SHDA-RF) [33]: Similar to SHFR, SHDA-RF is another supervised FSR transfer approach that leverages the shared label space to generate cross-domain correspondences. After estimating the cross-domain correspondences from the random forest models trained on the source and target data, we fine-tune the regulariser \(\lambda \) and report the best results over 16 folds. Both SHDA-RF and SHFR estimate corresponding data by leveraging the common labels across the domains. For tasks with heterogeneous outputs, we vary the label-similarity threshold (\(W_{ij} \in [0.3,1]\)) to generate the correspondences.

- Domain Adaptation using Manifold Alignment (DAMA) [31]: DAMA is an LST approach that learns a low-dimensional embedding in which the projected data from the source and target domains is aligned using labels while preserving the original structure. We vary the hyper-parameter \(\beta \), which captures the trade-off between preserving the original topology and label-induced alignment in the shared subspace, to obtain the best results. NGD is used to calculate the similarity of the labels across the domains (instead of the 0/1 value used in the original paper). The optimal size of the shared subspace was determined through experimentation on the validation set.

- Co-regularised Heterogeneous Transfer Learning (Co-HTL) [8]: Similar to DAMA, Co-HTL is another LST approach that also leverages label relationships to co-align source and target data in a common space. Here too, NGD is used to calculate the similarity between the labels of the domains (instead of using the divergence between label embeddings obtained from a word2vec [15] model or the divergence between topic distribution vectors obtained via Latent Dirichlet Allocation [14]). The optimal size of the shared subspace was determined through experimentation on the validation set.

We consider three heterogeneous transfer tasks to compare the performance of WIHTLSS against the aforementioned algorithms.

- Cross-lingual Text Transfer: We evaluate cross-lingual transfer performance on two benchmark text datasets, namely (1) the Amazon Cross-Lingual Sentiment (Amazon CLS) dataset (Footnote 1) [11] and (2) the Reuters Multi-Lingual dataset (Footnote 2) [36]. We use the same experimental setting as Zhou et al. [16] to test the performance of the proposed algorithm on cross-lingual sentiment and text classification tasks.

- Cross-domain Activity Recognition: We picked three single-resident CASAS (Footnote 3) [12] datasets for this transfer task. We follow the same procedure as Sukhija et al. [33] to construct the smart home datasets from raw sensor records. The labeled data from one smart home is treated as the source domain, and limited labeled sensor data from another serves as the target domain. We employ the same experimental setting as Sukhija et al. to evaluate six cross-domain activity recognition tasks.

- Deep Representation Transfer: In this experiment, CIFAR-100 (Footnote 4) [3] image representations obtained from the last fully connected layer (4096 features) of the VGG-19 model [2] (pretrained on the Imagenet dataset (Footnote 5)) act as the target domain, whereas related Imagenet (Footnote 6) [4] image representations acquired from the last fully connected layer (4096 features) of the pre-trained VGG-16 model (Footnote 7) act as the source domain. We perform dimensionality reduction using Principal Component Analysis (PCA) [39] to preserve 60% of the variance of the obtained source and target image representations. In every fold, for a source-target pair, we pick 100 images per label to form the source domain data and 5 images per label as the target training data. The optimal hyper-parameter values are determined using a disjoint validation set (100 images per label). We report the classification error on the test set (Footnote 8), which consists of 100 images per label. We repeat this process over 16 different folds and report the mean error and standard deviation on the following (Source\(\rightarrow \)Target) transfer tasks:

  1. Imagenet(321–326, 118–121, 125) \(\rightarrow \) CIFAR-100(Butterfly-14, Crab-26)

  2. Imagenet(52–68, 33–37) \(\rightarrow \) CIFAR-100(Snake-78, Turtle-93)

  The numbers within the brackets for the Imagenet(.) dataset are the label identities (Footnote 9) corresponding to the human-readable labels.
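The "preserve 60% variance" criterion used for the deep representations can be sketched with a plain SVD-based PCA. This is an illustrative sketch, not our exact preprocessing code: the helper names are hypothetical, and applying it separately to the source and the target representations is an assumption based on the description above.

```python
import numpy as np


def pca_components_for_variance(X: np.ndarray, target: float = 0.6) -> int:
    """Smallest number of principal components whose cumulative explained
    variance ratio reaches `target` (0.6 = preserve 60% of the variance)."""
    Xc = X - X.mean(axis=0)                  # centre the features
    s = np.linalg.svd(Xc, compute_uv=False)  # singular values, in descending order
    ratio = s ** 2 / np.sum(s ** 2)          # explained variance ratio per component
    return int(np.searchsorted(np.cumsum(ratio), target) + 1)


def pca_project(X: np.ndarray, n_components: int) -> np.ndarray:
    """Project centred data onto its first n_components principal axes."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T


# Toy data whose variance splits roughly 64% / 29% / 7% across three axes.
X = np.array([[3.0, 0, 0], [-3.0, 0, 0], [0, 2.0, 0],
              [0, -2.0, 0], [0, 0, 1.0], [0, 0, -1.0]])
k = pca_components_for_variance(X, 0.6)
print(k)                        # 1
print(pca_project(X, k).shape)  # (6, 1)
```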

5 Results and Discussion

We report and analyse the performance of several state-of-the-art transfer algorithms against WIHTLSS in three HTL scenarios, namely (1) when the source and target domain are characterised by heterogeneous feature spaces but share the same label space, (2) with heterogeneous feature spaces but an overlapping label space and (3) when the labels across the domains are also heterogeneous.

The cross-lingual transfer experiments belong to the first scenario: vocabulary differences between the source and target documents lead to heterogeneous feature spaces, but the same set of labels is shared between the domains. The cross-domain activity recognition experiments correspond to the second scenario, since the source and target smart homes share only part of their activity labels; because the residents' daily activities differ, the label spaces overlap only partially but remain semantically related. The deep representation transfer tasks belong to the third scenario. For a binary target task (distinguishing two classes from the CIFAR-100 dataset), we pick images with distinct but related labels as the source (related images from Imagenet) to analyse the impact of leveraging semantic label relationships, determined through NGD, to align heterogeneous representations obtained from deep models.

The choice of a linear transformation stems from the characteristics of these transfer tasks. For the cross-lingual tasks, we map bag-of-words representations, and the relationship between synonymous words within a language and across languages tends to be linear [16]. Similarly, for the cross-domain activity recognition tasks, the feature values of sensors in a functional area (such as the kitchen or bedroom) appear to be linearly related across different smart homes.

The baseline and transfer results on all transfer tasks are shown in Table 1. Since the baseline random forest (BRF) performed significantly better than classical linear SVM on most transfer tasks, we kept random forest as the final model for all transfer approaches. For cross-lingual transfer tasks on Amazon CLS dataset and Reuters Multilingual dataset, the notable performance improvement of the transfer approaches over the baseline BRF indicates the feasibility of cross-lingual knowledge transfer. It can be observed that the proposed framework WIHTLSS significantly outperforms (p-value < 0.05) SHFR-RF by 3.5–7%, SHDA-RF by 2.5–3%, DAMA by 7–15% and Co-HTL by 1.5–3.5% in every cross-lingual transfer setting.

The experimental results on the CASAS datasets are also reported in Table 1. WIHTLSS outperforms all the other approaches on these datasets. Also, the feature space remapping (FSR) approaches SHDA-RF and SHFR-RF perform significantly better than DAMA. Among the competing approaches, WIHTLSS outperforms the two best-performing ones, SHDA-RF (FSR) and Co-HTL (LST), by 3% on average (p-value < 0.05). For the deep representation transfer tasks as well, the proposed algorithm significantly outperforms (p-value < 0.05) all the baseline and transfer approaches.

We investigate the impact of having more labeled data in the target domain on the transfer performance of WIHTLSS. Due to lack of space, we show the results on a subset of the transfer tasks. The experimental results in Fig. 1 show that more labeled data in the target domain yields better transfer performance for all approaches. The transfer improvement is significant even when there are just 10 or 50 samples per label in the target domain. However, the benefit of transfer diminishes beyond the point at which there are enough labeled target instances to learn a model directly. A similar trend was observed for the other tasks.

5.1 Impact of the Hyper-parameters

We first perform a grid search on the hyper-parameter \(\beta \) (keeping \(\lambda =0,\kappa =0\)) to assess the effect of label-similarity-induced alignment on the transfer performance of WIHTLSS. Fig. 2(a) shows that aligning the data of the two domains in the target space by leveraging semantic relationships between heterogeneous labels is beneficial. When \(\beta =0\), the performance is significantly worse than the baseline for all the transfer tasks. This suggests that merely preserving the original structure of the data in the target space does not guarantee an alignment in which instances of related classes are closer than those of unrelated classes. We hypothesised that bringing instances with the same or related labels closer to each other would yield a better transformation. Indeed, the transfer performance improves substantially as \(\beta \) increases, indicating that leveraging the label similarities in the proposed formulation has a positive influence on the transfer performance of WIHTLSS. However, overemphasising label-space-induced alignment is detrimental, as the learned transformation becomes biased towards the limited labeled target data (which does not necessarily represent the true target distribution). Further, replacing the NGD-based similarity measure with divergence between word2vec embeddings [15] did not significantly change the performance.

After obtaining the optimal \(\beta \), we vary \(\kappa \) to investigate the importance of the third term, G. For this experiment, we fix the margin \(m=1\), keep \(\lambda =0\) and use the optimal \(\beta \) for D. Fig. 2(b) shows that the transfer performance improves as the weight of G increases, suggesting that pushing dissimilar instances apart helps to learn a better transformation. However, overweighting this label-dissimilarity-induced alignment worsens the transfer performance, as it distorts the original structure. With \(\kappa \) fixed at its optimal value, we then determine the optimal margin (see Fig. 2(c)). Our hypothesis is that with a small margin, dissimilar source instances remain close to the target instances, resulting in poorer performance than with larger margins. This can be observed in Fig. 2(c). The drop in performance for small margins is modest for the cross-lingual and cross-domain activity recognition tasks, but significant for the deep representation transfer task. Conversely, beyond the optimal margin, pushing the dissimilar instances further away significantly distorts the distribution of the instances in the target space, degrading performance. Finally, we fine-tune the regulariser by varying the hyper-parameter \(\lambda \). Fig. 2(d) shows that WIHTLSS performs best, on average, when \(\lambda \) is set to 0.1.
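The tuning protocol above amounts to a coordinate-wise grid search: \(\beta \) first, then \(\kappa \), then m, then \(\lambda \), each with the previously chosen values fixed. A minimal sketch, where `evaluate` is a hypothetical callback that trains WIHTLSS with the given settings and returns a validation error, and the grids are placeholders rather than the values behind Fig. 2. This greedy order is cheaper than a full joint grid but does not guarantee the joint optimum.

```python
def sequential_search(evaluate, betas, kappas, margins, lambdas):
    """Tune one hyper-parameter at a time in the order of Sect. 5.1.

    evaluate(beta, kappa, m, lam) -> validation error (lower is better).
    """
    # beta with kappa = 0 and lambda = 0 (m is irrelevant while kappa = 0).
    beta = min(betas, key=lambda b: evaluate(b, 0.0, 1.0, 0.0))
    # kappa with m = 1 and lambda = 0.
    kappa = min(kappas, key=lambda k: evaluate(beta, k, 1.0, 0.0))
    # margin m with the chosen beta and kappa.
    m = min(margins, key=lambda mm: evaluate(beta, kappa, mm, 0.0))
    # finally, the regulariser weight lambda.
    lam = min(lambdas, key=lambda l: evaluate(beta, kappa, m, l))
    return beta, kappa, m, lam


# Toy stand-in for validation error with a known minimum, for illustration only.
def toy_error(b, k, m, l):
    return (b - 1) ** 2 + (k - 0.5) ** 2 + (m - 2) ** 2 + (l - 0.1) ** 2


print(sequential_search(toy_error, [0, 0.1, 1, 10], [0, 0.5, 1],
                        [0.5, 1, 2, 4], [0, 0.01, 0.1, 1]))  # (1, 0.5, 2, 0.1)
```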

Figure 3 depicts the impact of varying the number of nearest neighbors (k) on the transfer performance of WIHTLSS. The transfer performance improves as the number of nearest neighbors increases. This result supports our hypothesis that transformed source instances that are nearest neighbors of some target instance are relevant for the target task. However, beyond a certain point, adding more neighbors lets transformed source points that are quite far away also contribute to learning the model. In the extreme case where all source instances are used, the transfer performance suffers for all tasks except the deep transfer task (butterfly vs. crab).

In our deep representation transfer experiment for the binary target task (butterfly vs. crab), we used only related image representations as the source, i.e., the source image representations only encode different types of butterflies and crabs. Hence, all the source image representations are relevant to the binary target task, which agrees with our observations (see Fig. 3).

5.2 Convergence Analysis

The convergence results for the optimisation routines are shown in Fig. 4. With the quasi-Newton method on the cross-domain activity recognition experiments, the optimisation error decreases gradually and convergence is reached within 15–20 iterations on average. With the CGD algorithm on the cross-lingual transfer experiments, by contrast, the error drops sharply in the first few iterations and convergence is reached within 5–10 iterations.

6 Summary and Future Work

WIHTLSS is an FSR-based transfer learning approach that integrates collective inference and bridges domains with heterogeneous feature and label spaces without relying on instance or feature correspondences. Assuming some semantic relationships within and across the label spaces, it uses web knowledge to align the source data in the target space while preserving the original structure. It can be viewed as a simple form of dictionary learning without orthogonality or sparsity constraints, but with L2 norm constraints on the dictionary and the new representation. The label-induced alignment terms are modified Laplacian regularisation terms whose adjacency matrix is computed from NGD. The experimental results on real-world HTL tasks with identical and different output spaces indicate the superiority of WIHTLSS over state-of-the-art supervised transfer approaches.
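The Laplacian view of the pull term can be checked numerically. Treat W as the adjacency of a bipartite graph between transformed source rows \(b_i\) and target rows \(x^T_j\), and take the pull term to be \(\sum_{ij} W_{ij}\lVert b_i - x^T_j\rVert^2\) (an assumption about Eq. 3). Expanding the square gives \(\mathrm{tr}(B_S' W_S B_S) - 2\,\mathrm{tr}(B_S' W T) + \mathrm{tr}(T' W_T T)\), where \(W_S\) is the row-sum degree matrix defined in Step 2 and \(W_T\), a name introduced here, is the column-sum degree matrix. The sketch below compares both forms on random data.

```python
import numpy as np


def pull_term_pairwise(B, T, W):
    """sum_ij W_ij ||b_i - t_j||^2 computed directly from pairwise distances."""
    d2 = np.sum((B[:, None, :] - T[None, :, :]) ** 2, axis=2)
    return float(np.sum(W * d2))


def pull_term_trace(B, T, W):
    """The same quantity written with degree matrices, Laplacian style."""
    W_S = np.diag(W.sum(axis=1))  # (n_S, n_S): row sums, as in Step 2
    W_T = np.diag(W.sum(axis=0))  # (n_T, n_T): column sums (name introduced here)
    return float(np.trace(B.T @ W_S @ B)
                 - 2.0 * np.trace(B.T @ W @ T)
                 + np.trace(T.T @ W_T @ T))


rng = np.random.default_rng(0)
B = rng.normal(size=(7, 3))   # toy B_S: n_S = 7, d_T = 3
T = rng.normal(size=(4, 3))   # toy labeled target data: n_T = 4
W = rng.uniform(size=(7, 4))  # toy similarities in [0, 1]
print(np.isclose(pull_term_pairwise(B, T, W), pull_term_trace(B, T, W)))  # True
```

The trace form is what makes the gradient with respect to \(B_S\) simple, namely \(2(W_S B_S - W T)\), which is why the degree matrix \(W_S\) appears in the update for \(B_S\).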

A limitation of the proposed approach is that it requires some labeled data in the target domain; if none is available, a small number of target instances must first be annotated. Beyond using semantic label relationships to learn the transformation, future work could investigate whether unlabeled domain knowledge or task-specific characteristics can provide a quantitative measure of the relatedness W. Further, the proposed algorithm transfers knowledge from a single source domain; as part of future work, we wish to explore how to effectively combine data from multiple related modalities to improve the final prediction on the target task.

References

1. Sukhija, S.: Label space driven heterogeneous transfer learning with web induced alignment. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence (2018)

2. Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

3. Krizhevsky, A., Hinton, G.: Learning multiple layers of features from tiny images. Citeseer (2009)

4. Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., Li, F.-F.: ImageNet: a large-scale hierarchical image database. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2009)

5. Zhu, Y., et al.: Heterogeneous transfer learning for image classification. In: Proceedings of the AAAI National Conference on Artificial Intelligence (2011)

6. Prettenhofer, P., Stein, B.: Cross-language text classification using structural correspondence learning. In: Proceedings of the 48th Annual Meeting of the Association for Computational Linguistics (ACL) (2010)

7. Cilibrasi, R.L., Vitanyi, P.M.B.: The Google similarity distance. IEEE Trans. Knowl. Data Eng. (2007)

8. Wei, Y., Zhu, Y., Leung, C.W.-k., Song, Y., Yang, Q.: Instilling social to physical: co-regularized heterogeneous transfer learning. In: Proceedings of the AAAI National Conference on Artificial Intelligence (2016)

9. Branch, M.A., Coleman, T.F., Li, Y.: A subspace, interior, and conjugate gradient method for large-scale bound-constrained minimization problems. SIAM J. Sci. Comput. 21(1), 1–23 (1999)

10. Hu, D.H., Yang, Q.: Transfer learning for activity recognition via sensor mapping. In: Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence (IJCAI) (2011)

11. Lichman, M.: UCI Machine Learning Repository. University of California, Irvine, School of Information and Computer Sciences (2013). http://archive.ics.uci.edu/ml

12. Cook, D.J., Crandall, A.S., Thomas, B.L., Krishnan, N.C.: CASAS: a smart home in a box. Computer 46(7), 62–69 (2013)

13. Pan, S.J., Yang, Q.: A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22(10), 1345–1359 (2010)

14. Blei, D.M., Ng, A.Y., Jordan, M.I.: Latent Dirichlet allocation. J. Mach. Learn. Res. 3(Jan), 993–1022 (2003)

15. Mikolov, T., Sutskever, I., Chen, K., Corrado, G.S., Dean, J.: Distributed representations of words and phrases and their compositionality. In: Advances in Neural Information Processing Systems (2013)

16. Zhou, J.T., Tsang, I.W., Pan, S.J., Tan, M.: Heterogeneous domain adaptation for multiple classes. In: Proceedings of the International Conference on Artificial Intelligence and Statistics (2014)

17. Li, W., Duan, L., Xu, D., Tsang, I.W.: Learning with augmented features for supervised and semi-supervised heterogeneous domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 36(6), 1134–1148 (2014)

18. van Kasteren, T.L.M., Englebienne, G., Kröse, B.J.A.: Transferring knowledge of activity recognition across sensor networks. In: Floréen, P., Krüger, A., Spasojevic, M. (eds.) Pervasive 2010. LNCS, vol. 6030, pp. 283–300. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-12654-3_17

19. Borchani, H., Varando, G., Bielza, C., Larrañaga, P.: A survey on multi-output regression. Wiley Interdisc. Rev. Data Min. Knowl. Discovery 5(5), 216–233 (2015)

20. He, J., Liu, Y., Yang, Q.: Linking heterogeneous input spaces with pivots for multi-task learning. In: Proceedings of the SIAM International Conference on Data Mining (2014)

21. Wang, C., Mahadevan, S.: Manifold alignment using Procrustes analysis. In: Proceedings of the International Conference on Machine Learning (2008)

22. Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

23. Sukhija, S.: Label space driven feature space remapping. In: Proceedings of the ACM India Joint International Conference on Data Science and Management of Data (2018)

24. Wang, C., Mahadevan, S.: Manifold alignment without correspondence. In: Proceedings of the International Joint Conference on Artificial Intelligence (2009)

25. Rosenstein, M.T., Marx, Z., Kaelbling, L.P., Dietterich, T.G.: To transfer or not to transfer. In: NIPS 2005 Workshop on Transfer Learning (2005)

26. Hubert Tsai, Y.-H., Yeh, Y.R., Frank Wang, Y.-C.: Learning cross-domain landmarks for heterogeneous domain adaptation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016)

27. Yan, Y., et al.: Learning discriminative correlation subspace for heterogeneous domain adaptation. In: Proceedings of the 26th International Joint Conference on Artificial Intelligence (2017)

28. Sukhija, S., Krishnan, N.C., Kumar, D.: Supervised heterogeneous transfer learning using random forests. In: Proceedings of the ACM India Joint International Conference on Data Science and Management of Data (2018)

29. Hadsell, R., Chopra, S., LeCun, Y.: Dimensionality reduction by learning an invariant mapping. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR) (2006)

30. Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression. Ann. Stat. 32(2), 407–499 (2004)

31. Wang, C., Mahadevan, S.: Heterogeneous domain adaptation using manifold alignment. In: Proceedings of the International Joint Conference on Artificial Intelligence (2011)

32. Kyrillidis, A., Zouzias, A.: Non-uniform feature sampling for decision tree ensembles. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (2014)

33. Sukhija, S., Krishnan, N.C., Singh, G.: Supervised heterogeneous domain adaptation via random forests. In: Proceedings of the International Joint Conference on Artificial Intelligence (2016)

34. Wang, C., Mahadevan, S.: A general framework for manifold alignment. In: Proceedings of the AAAI Fall Symposium: Manifold Learning and Its Applications (2009)

35. Yang, Q., Chen, Y., Xue, G.-R., Dai, W., Yu, Y.: Heterogeneous transfer learning for image clustering via the social web. In: Proceedings of the International Joint Conference on Natural Language Processing (2009)

36. Amini, M., Usunier, N., Goutte, C.: Learning from multiple partially observed views - an application to multilingual text categorization. In: Advances in Neural Information Processing Systems (2009)

37. Xiao, M., Guo, Y.: Semi-supervised subspace co-projection for multi-class heterogeneous domain adaptation. In: Appice, A., Rodrigues, P.P., Santos Costa, V., Gama, J., Jorge, A., Soares, C. (eds.) ECML PKDD 2015. LNCS (LNAI), vol. 9285, pp. 525–540. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-23525-7_32

38. Zhou, G., He, T., Wu, W., Hu, X.T.: Linking heterogeneous input features with pivots for domain adaptation. In: Proceedings of the 24th International Joint Conference on Artificial Intelligence (IJCAI) (2015)

39. Zhou, J.T., Pan, S.J., Tsang, I.W., Yan, Y.: Hybrid heterogeneous transfer learning through deep learning. In: Proceedings of the AAAI National Conference on Artificial Intelligence (2014)

Acknowledgment

This research is supported by the Department of Science and Technology, India under grant YSS/2015/001206, and by the Indian Institute of Technology Ropar under the ISIRD grant.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Sukhija, S., Krishnan, N.C. (2019). Web-Induced Heterogeneous Transfer Learning with Sample Selection. In: Berlingerio, M., Bonchi, F., Gärtner, T., Hurley, N., Ifrim, G. (eds.) Machine Learning and Knowledge Discovery in Databases. ECML PKDD 2018. Lecture Notes in Computer Science, vol. 11052. Springer, Cham. https://doi.org/10.1007/978-3-030-10928-8_46

DOI: https://doi.org/10.1007/978-3-030-10928-8_46

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-10927-1

Online ISBN: 978-3-030-10928-8

eBook Packages: Computer Science, Computer Science (R0)